CNAF infrastructures


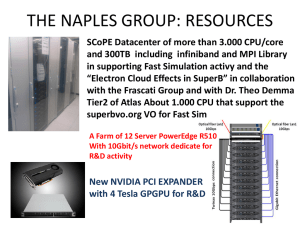

Introduction

CNAF is the INFN national center devoted to research and development in the field of information and telecommunication technologies and to the operation of the related services for INFN research activities. It hosts the INFN national computing center, whose HVAC and power distribution systems were fully renovated in 2007. Established to act as the primary national center for the experiments at the LHC (Tier1), in the context of the WLCG (Worldwide LHC Computing Grid) collaboration, the center has quickly become a reference center for the management and processing of data produced by many experiments in which INFN is involved.

As the INFN national reference center, CNAF contributes significantly to software development and to the implementation and operational management of the distributed computing infrastructure, based on Grid technologies deployed on wide area networks. It also manages various national services that have been gradually enhanced over time, thereby helping to increase overall efficiency and reduce total costs. It participates in various research and development projects in the fields of distributed, Grid and Cloud computing, both nationally and internationally, some of them carried out in collaboration with ICT companies and public administrations. Since 2011 CNAF has held the qualification of Center for Technology Transfer of the Emilia-Romagna Region.

CNAF human resources and users

On October 10, 2012, 68 people were working at CNAF: 52 employees, 8 post-docs, 5 post-graduate fellowship recipients and 3 project contract holders. Nearly three quarters of the employees were hired as computing professionals and the rest as engineers, except four who serve in administrative roles. CNAF personnel are in general highly qualified, in some cases holding a PhD, with long-term expertise in frontier sectors of scientific computing.
The high number of temporary positions (nearly 2/3) is due both to the need for additional personnel for the new Tier1 Center, which emerged in the last few years when INFN, due to external constraints, could not open new permanent positions, and to CNAF's high effectiveness in securing external funding, in particular through participation in projects funded by the European Community. Nearly half of the contracts are currently supported by external funds. Given the high number of temporary positions, CNAF plays an important role in providing training and education opportunities to young computing professionals and engineers in the fields of advanced software development and of the management of high performance and high throughput computing systems.

The computing resources available at CNAF are exploited by many international scientific collaborations, often encompassing hundreds or, as in the case of the LHC experiments, thousands of researchers belonging to institutions all over the world. Potentially each collaborator who is registered as an enabled member of a Virtual Organization (VO) can get access to these computing resources through secure authentication procedures based on digital certificates. Currently nearly 1600 users hold a CNAF account, and a thousand of them have logged into a CNAF system in the last 12 months. A quarter of them are non-INFN users.

CNAF infrastructures

CNAF hosts and manages two prominent research infrastructures that are made available to the national research community and to the international scientific collaborations INFN is engaged with: the high throughput computing center, known as Tier1 with reference to its function as first-level computing center for the LHC experiments; and the Italian Grid Infrastructure (IGI), which is described in more detail elsewhere in this document. Both are well-integrated components of larger European infrastructures.
Together with similar European centers, the Tier1 forms a network of tightly inter-operating large computing centers that plays a unique role for scientific research in Europe. The network could further consolidate its role and operate in an even more coordinated way if the cooperation among the centers were formalized through the creation of a new ERIC (European Research Infrastructure Consortium) for distributed scientific computing in Europe. Such an ERIC could provide, in particular, the essential large scale distributed computing resources and services needed by the most demanding ESFRI scientific programs.

The Tier1 center

The Tier1 is the main INFN scientific computing center. The current Tier1 infrastructure already hosts a significant amount of resources, both in terms of computing power (~140,000 HepSpec, ~13,000 concurrently running computing tasks) and of storage capacity (11.5 PB on disk and 14 PB on tape in 2012), but it has been designed to cope, if needed, with even larger future loads. Indeed, the systems providing power and cooling in a fully redundant way can manage a computing equipment power consumption of up to 1.2 MW, and they can provide uninterrupted electrical power to the various services up to 3.4 MW. In 2012 the tape library was upgraded with the installation of the latest generation drives, thus expanding the capacity by a factor of five: the library can now handle up to 50 PB of online data.

The computing resources of the different experiments are centrally managed by a single system of queues (normally each experiment has at least one dedicated queue), and they are dynamically assigned to the experiments through a fair-share mechanism, according to predetermined weights proportional to the amount of funding allocated by the INFN scientific commissions. The unified management of computational resources keeps the available CPU fully in use for more than 95% of the time.
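
The fair-share idea described above can be illustrated with a minimal sketch. It is not the scheduler actually used at the Tier1: the `Queue` class, the `assign_slots` function, the queue names and the weights are all hypothetical, and a real batch system also takes usage history, priorities and job limits into account. The sketch only shows how free slots can be handed out one at a time to the queue that is currently furthest below its weighted share, and how the slots of an idle queue flow to the others.

```python
from dataclasses import dataclass


@dataclass
class Queue:
    """One experiment queue (all fields are illustrative assumptions)."""
    name: str
    weight: float     # share weight, e.g. proportional to allocated funding; must be > 0
    pending: int      # jobs waiting to run
    running: int = 0  # slots currently held by this queue


def assign_slots(queues, free_slots):
    """Give each free slot to the queue with the lowest running/weight ratio.

    Only queues with pending jobs compete, so the share of an idle
    experiment is dynamically reassigned to the busy ones.
    """
    for _ in range(free_slots):
        candidates = [q for q in queues if q.pending > 0]
        if not candidates:
            break  # nothing left to run; remaining slots stay free
        # The most under-served queue relative to its weight goes first.
        chosen = min(candidates, key=lambda q: q.running / q.weight)
        chosen.pending -= 1
        chosen.running += 1
    return {q.name: q.running for q in queues}
```

With two busy queues weighted 3 and 1, `assign_slots([Queue("exp_a", 3.0, 100), Queue("exp_b", 1.0, 100)], 8)` splits eight slots 6 to 2, matching the 3:1 weights. If `exp_a` has no pending jobs, all the slots go to `exp_b` instead of sitting idle, which is the behaviour that lets a shared farm stay almost fully used.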
Figure 1 shows the CPU usage at CNAF during the last 12 months. The red area indicates the CPU power actually used, while the green area also takes into account the CPU dead time, typically due to the latency introduced by accessing the data. Their ratio, which is a measure of the efficiency of the applications and of the performance of the data access system, reached a very satisfactory 84% this year.

The storage infrastructure is based on industry standards, both at the level of interconnections (all disk servers and disk systems are interconnected via a dedicated Storage Area Network, SAN) and at the level of data access protocols (the data reside on parallel file systems, typically one for each main experiment). This has made it possible to implement a data access system which is fully redundant and capable of very high performance. At present, the total throughput between the computing farm and the storage systems is ~50 GB/s, making it possible to extend the type of applications run in the center to chaotic end-user data analysis jobs, which are typically more demanding in terms of access performance than centrally managed productions. In general, access to computing resources and storage (both disk and tape for archiving) takes place through standard protocols and common interfaces.

Currently, 20 scientific collaborations are using the resources of the Tier1. In addition to the aforementioned experiments at the LHC, there are experiments related to the activity of Scientific Commission 1 (BABAR, CDF, SUPERB and KLOE), 2 (AMS, ARGO, Auger, Borexino, FERMI/GLAST, Gerda, ICARUS, MAGIC, PAMELA, Xenon100, VIRGO) and 3 (AGATA). The LHCb Tier2 facility is also integrated inside the infrastructure of the Tier1, as are a Tier3 facility used by researchers at the INFN Bologna site and other national services used by INFN personnel.

CNAF hosts one of the most important hubs of the Italian research network (GARR).
It was the first one, in 2012, to migrate to the new infrastructure based on dark fibers (GARR-X). Normal access to the Italian research network takes place via a 10 Gbps link which, through the GEANT network, provides the connection to the European and global research networks. In addition, connectivity with the Tier0 at CERN and with the other WLCG Tier1 centers is assured by a dedicated optical network, LHCOPN, to which CNAF is connected with a redundant 20 Gbps link, planned to be upgraded to 100 Gbps in the near future. CNAF was also among the first Tier1 centers to be connected to LHCONE, a special wide area network under construction dedicated to the interconnections with the main WLCG Tier2 centers.

Main activities

CNAF guarantees 24x7 operation of the IT infrastructure and carries out development and technological activities in the ICT field, participating in projects both nationally and internationally. Recently, developments in the following areas have been actively pursued, motivated by the quest for increased resource exploitation efficiency and by the need to establish collaborations that may improve project sustainability: study and implementation of new large scale computing architectures and storage systems; study of the performance of many-core CPU architectures with HEP simulation and data analysis applications; implementation of scalable virtualization architectures for scientific computing and of general purpose computing services; participation in national and international projects in collaboration with public and private entities in the field of Grid and Cloud computing. These projects have led to new technology exchange activities and to improvements in the quality of the computing and storage services provided to users.
CNAF also develops and operates, for the benefit of the entire INFN community, various services of national relevance, such as the main Domain Name server, e-mail list services, and the experiment and INFN Web sites. It is responsible for the management and coordination of activities related to the support of video conferencing systems and to the video recording and streaming of events. It also manages the infrastructure of the INFN information system and is responsible for the maintenance and development of services related to the accounting and personnel attendance auditing systems. For many years CNAF has carried out the coordination and centralized management of national hardware and software maintenance contracts, as well as the national program for the acquisition and distribution of software packages, in order to obtain more favorable contractual and economic conditions than would be possible with individual negotiations.

National and international collaborations

Over the years CNAF has established various collaborations with national and international partners, in most cases related to European projects in the computing Grid sector. In the period 2001-2010 these made it possible to secure external funds for a total amount in excess of 22 million euros, with 31 approved projects.
The main active collaborations are: Worldwide LHC Computing Grid (WLCG), formed to coordinate the management of the computing infrastructures used by the LHC experiments through extensive use of Grid-based services; Joint Research Unit IGI (Italian Grid Infrastructure); European Middleware Initiative (EMI) collaboration; European EGI-Inspire collaboration; collaboration with the Marche regional Government for the study and implementation of a regional Cloud infrastructure meant to be used by citizens, Public Administrations and the local business community; Duck collaboration with other research institutions in the Emilia-Romagna region to disseminate and support the use of Grid technologies; collaborations with computing centers located in various physics laboratories such as CERN (Switzerland), Fermilab (US), SLAC (US) and KISTI (South Korea).

Future programs

In the coming years the Tier1 Center will continue to require gradual but continuous upgrades of its computing resources to meet the growing needs of the LHC experiments. The following table shows the amount of resources foreseen for 2013 and 2014, compared to those available in 2012. The resource requirements for 2015 are for the moment known only with large uncertainties: for the LHC experiments, the processing and storage capacity is expected to roughly double with respect to 2012. More precise estimates will be available in 2014, when the outcome of the current data taking period, ending in March 2013, will be known, but they will also depend on the parallel evolution of the experiments' computing models.
Figure XX: Computing resources requested by the experimental collaborations that use the Tier1 Center, expressed in terms of processing capacity (HepSpec06 benchmark) and net storage capacity on disk and on tape (in TeraByte).

Participation in research and development activities, in collaboration with other public and private entities, will also be very important for the future of CNAF. The following are particularly important: analysis of scalability issues related to the management of and access to data volumes at the scale of hundreds of PB or of an Exabyte, with the identification and exploitation of the most promising lines of development; consolidation of a set of "off-the-shelf" tools and services for the management and processing of scientific data; definition of new advanced analysis and processing frameworks for INFN experiments; definition of an architecture and a reference implementation for the long-term preservation of scientific data and its applications; maintenance and evolution of the middleware upon which the Grid services are based, and extension of the distributed computing paradigm to Cloud infrastructures; participation in Smart Cities projects sponsored by the Ministry of University and Research, in particular by contributing to the development of the IaaS (Infrastructure as a Service) and PaaS (Platform as a Service) components that form the basis for a national federated Cloud infrastructure open to research, business and public administration users; participation in Horizon 2020 projects concerning large scale distributed scientific computing (Excellent Science - e-Infrastructures) and innovation in computer science, in particular with regard to the management of large amounts of data; development of current and future technology transfer programs; creation of an extended knowledge network within INFN to improve the quality of the software being developed and used.