Memory Management and Virtual Memory

advertisement

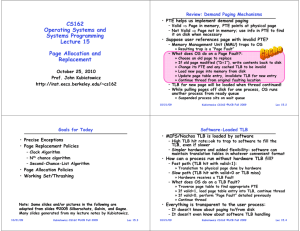

Address Binding
Address binding is the mapping from one address space to another. Addresses in a source program are generally symbolic. A compiler binds these symbolic addresses either to relocatable addresses or to absolute addresses. The linker or loader then binds the relocatable addresses to absolute addresses.

Binding at compile time
- It is known at compile time where the process will reside in memory, so absolute code is generated at this stage.
- Because the process will reside in memory starting at a fixed location, the compiled code can start at that location.
- If the starting address of the process changes, the code must be recompiled.

Binding at load time
- It is not known at compile time where the process will reside in memory, so relocatable code is generated at compile time and the final binding is performed at load time.
- If the starting address of the process changes, the code only needs to be reloaded.

Binding at execution time
- If the process can be moved during execution from one memory segment to another, binding must be delayed until run time.

Logical or Virtual Address Space
- An address generated by the CPU is known as a logical address.
- Compile-time and load-time address binding produce identical logical and physical addresses.
- Logical and physical addresses differ when execution-time address binding is used.
- The run-time mapping from the logical (virtual) address space to the physical address space is performed by the Memory Management Unit (MMU).
- The user program never sees physical addresses; it deals only with logical addresses. The MMU hardware converts a logical address into a physical one when a memory reference is actually made.

Process Swapping
- In priority-based scheduling schemes, when a higher-priority process arrives and wants service, the memory manager may swap out a lower-priority process and swap in the higher-priority process in its place.
- In round-robin scheduling systems, when a process's time slice expires, it can be swapped out and another process swapped in.
- If binding is done at compile or load time, a swapped-out process cannot be moved to a different location; it must be swapped back into the same memory space it occupied before.
- If binding is done at execution time, a process can be swapped back into a different memory space, because physical addresses are computed during execution.
- For efficient processor utilization, the execution time of each process must be long relative to the swap time.

Paging
- Paging allows the logical address space of a process to be noncontiguous.
- Logical memory is broken into fixed-size blocks called pages.
- Physical memory is broken into blocks of the same size called frames.

Page size
- Page sizes are powers of two. When a page in memory is addressed, the high-order address bits are treated as the page number and the low-order bits as the offset within that page. Every address generated by the CPU is therefore divided into two parts: a page number and a page offset.
- Smaller page sizes result in less internal fragmentation.
- Larger page sizes reduce page-table overhead, because fewer page-table entries are needed, and disk I/O is more efficient when the amount of data being transferred is larger.

Frame Table
- The operating system maintains a data structure known as the frame table. It has one entry for each physical page frame, indicating whether the frame is free or allocated and, if allocated, to which page of which process.

Page Table
- In most operating systems, each process has its own page table, which (or a pointer to which) is stored in the process control block.
- When a process is started, the hardware page-table values are reloaded from the process's stored page table.

Translation Look-aside Buffer (TLB)
- A TLB is a small, very fast hardware cache whose entries each consist of a key (a page number) and a value (a frame number). It is used together with the page table and holds a few of the page-table entries.
- When the CPU generates a logical address, its page number is presented to the TLB. If the page number is found in the TLB, its frame number is immediately available.
- Only if it is not found must a memory reference to the page table be made to obtain the frame number. The page and frame numbers are then added to the TLB, to be used for any subsequent reference to that page.
- If the TLB is already full, an entry must be selected for replacement.
- When a new page table is selected (for example, on a context switch), the TLB is flushed.

Types of Memory
- Virtual memory
  - Supported by system hardware and software.
  - Gives the illusion that a user has a vast linear expanse of storage.
  - Divided into pages of identical size.
- Real memory (main memory)
  - Divided into page frames.
- Auxiliary memory
  - Pages reside in auxiliary memory and may, in addition, occupy a page frame in real memory.

Page-Fault Interrupt
- When an executing program refers to an item in virtual memory, the reference proceeds normally if the item is also in a page frame. Otherwise, a page-fault interrupt occurs, which lets the operating system adjust the contents of real memory so that a retry of the reference succeeds.
- Page table: holds information about the whereabouts of each page.
- Locality of reference: at any instant, there is a favored subset of all pages that has a high probability of being referenced in the near future.

Operating System Policies for Handling Page-Fault Interrupts

Fetch policy

Demand fetching (demand paging)
- A page is brought into real memory only when a page-fault interrupt results from its absence.
- For most computer systems, the memory-access time, ma, ranges from 10 to 200 nanoseconds. As long as there are no page faults, the effective access time equals the memory-access time.
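Demand fetching, combined with the page-number/offset split described earlier, can be illustrated with a toy translator. This is a minimal Python sketch, not an operating-system implementation: the 4 KiB page size, the hypothetical names `split`, `DemandPager` and `translate`, and taking frames from a simple free list are all assumptions. No replacement policy is modeled; the sketch just raises an error when no free frame is left.

```python
PAGE_SIZE = 4096                          # assumed page size; must be a power of two
OFFSET_BITS = PAGE_SIZE.bit_length() - 1  # 12 offset bits for 4 KiB pages


def split(logical_addr):
    """Split a logical address into (page number, page offset)."""
    page = logical_addr >> OFFSET_BITS        # high-order bits: page number
    offset = logical_addr & (PAGE_SIZE - 1)   # low-order bits: offset within the page
    return page, offset


class DemandPager:
    """Hypothetical toy demand pager: a page gets a frame only on its first reference."""

    def __init__(self, num_frames):
        self.free_frames = list(range(num_frames))  # simplified free-frame list
        self.page_table = {}                        # page number -> frame number (resident pages only)
        self.faults = 0                             # page faults seen so far

    def translate(self, logical_addr):
        page, offset = split(logical_addr)
        if page not in self.page_table:
            # Page fault: the page is not resident, so fetch it on demand.
            if not self.free_frames:
                raise MemoryError("no free frame: a replacement policy would be needed")
            self.faults += 1
            self.page_table[page] = self.free_frames.pop(0)
        frame = self.page_table[page]
        return frame * PAGE_SIZE + offset           # physical address


pager = DemandPager(num_frames=2)
print(hex(pager.translate(0x1234)))  # page 1 faults into frame 0 -> 0x234
print(hex(pager.translate(0x1ABC)))  # page 1 already resident     -> 0xabc
print(hex(pager.translate(0x5000)))  # page 5 faults into frame 1 -> 0x1000
print(pager.faults)                  # 2
```

Only the first reference to each page costs a fault; later references to the same page translate directly. This is why locality of reference keeps the page-fault rate low in a demand-paging system.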
When page faults can occur, let p be the probability of a page fault (0 ≤ p ≤ 1). The effective access time is then:

$$\text{effective access time} = (1 - p) \times ma + p \times \text{page-fault time}$$

- In most situations, a page-fault interrupt causes the CPU to be reallocated to other users or processes.
- It is important to keep the page-fault rate low in a demand-paging system. Otherwise the effective access time increases and process execution slows down.

Prepaging
- Pages other than the one demanded by a page fault are also brought into page frames.

Replacement policy
An increase in the degree of multiprogramming and multiprocessing can lead to over-allocation of memory in the system:
- While a user process is executing, a page fault occurs.
- The hardware traps to the operating system, which checks its internal tables to confirm that the page fault is genuine and not an illegal memory access.
- The operating system determines where the desired page resides on disk, but there are no free frames on the free-frame list: all memory is in use.
- The OS can either swap out a process, freeing all its frames and reducing the level of multiprogramming, or use a page-replacement policy to replace the contents of a frame with the desired page.

Note that system memory is not used only for holding program pages. Buffers for I/O also consume a significant amount of memory.

Demand replacement: replacement occurs only when real memory is full.
- Least recently used (LRU)
  - A true implementation requires hardware support, for example to maintain a stack of referenced pages.
  - A policy based on examining use bits and dirty bits approximates LRU well.
- First-in, first-out (FIFO)
  - Easy to implement with a circular buffer pointer among the page frames.
  - The performance of FIFO is inferior to that of LRU.
- Last-in, first-out (LIFO)
- Least frequently used (LFU)
  - Uses a counter to determine page usage.
  - Requires expensive hardware, and other methods generally outperform it.
- Most recently used (MRU)

Placement policy
- Segmentation

Cleaning policy
- The opposite of the fetch policy: it determines when a page that has been modified will be written to auxiliary memory.
- Demand cleaning: a dirty page is written only when it is selected for removal by the replacement policy.
- Precleaning

Load-control policy
- Without a mechanism to control the number of active processes, it is very easy to overcommit a virtual memory system.
- Thrashing: virtual-memory management overhead becomes so severe that system performance drops precipitously once memory is overcommitted.

Fixed process allocation policy

Variable process allocation policy

Frame allocation algorithms
- Equal allocation
- Proportional allocation
  - Allocate available memory to each process according to its size.
  - If the size of the virtual memory for process $p_i$ is $s_i$, then $S = \sum_i s_i$.
  - If the total number of available frames is $m$, process $p_i$ is allocated $a_i$ frames, where $a_i = \frac{s_i}{S} \times m$.
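The proportional-allocation formula can be checked with a short Python sketch. The function name `proportional_allocation` is hypothetical. Rounding each $a_i$ down to a whole number of frames, with any remainder left in the free-frame pool, is an assumption, because the formula does not say how fractional frames are handled. The sample sizes (10 and 127 pages sharing 62 frames) are illustrative only.

```python
def proportional_allocation(sizes, m):
    """Return a_i = s_i / S * m for each process, rounded down to whole frames.

    sizes: virtual-memory sizes s_i of the processes (in pages)
    m:     total number of available frames
    Rounding down is an assumption; leftover frames stay unallocated.
    """
    S = sum(sizes)                         # S = sum of all s_i
    return [s * m // S for s in sizes]     # integer arithmetic avoids float rounding error


alloc = proportional_allocation([10, 127], 62)
print(alloc)               # [4, 57]
print(62 - sum(alloc))     # 1 frame left over
```

Because each share is rounded down, the shares can sum to fewer than m frames. An allocator built on this sketch would still have to decide what to do with the remainder.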