Student - University of Maryland

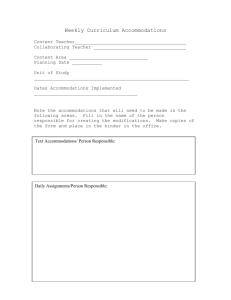

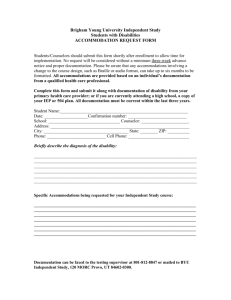

March 27, 2006 – Moderator: Weihua Fan

YUN YUN DAI: Using Structured Mixture IRT Models to Study Differentiating Item Functioning

Yunyun Dai & Robert J. Mislevy, University of Maryland

Abstract

The standard analysis of differential item functioning (DIF) uses a manifest variable, such as gender or ethnicity, to cluster examinees into groups and investigate item-by-group interactions given overall proficiency. A structured mixture IRT model is proposed as a more appropriate method to detect the existence of DIF, in terms of latent classes of examinees, and to study its genesis. The structured mixture model incorporates latent group membership into an IRT model in which different item parameters may be estimated for each latent group. One of the most important advantages of the structured mixture model is its ability to study the causes of DIF, which are not part of the model in conventional DIF analysis. This is done by incorporating both person and item covariates in the structured mixture IRT model, as predictors of latent class membership and of item interaction effects, respectively.

JENNIFER KORAN: Teacher and Multi-source Computerized Approaches for Making Individualized Test Accommodation Decisions for English Language Learners

Jennifer Koran, Rebecca Kopriva, Jessica Emick, University of Maryland; J. Ryan Monroe, Prince George’s County Public Schools; Diane Garavaglia, Consultant

Abstract

There has been a strong call for more systematic methods for selecting appropriate large-scale test accommodations for students in special populations (Abedi, Courtney, & Leon, 2003; Kopriva & Mislevy, 2001; Thompson et al., 2000; Thurlow et al., 2003). Improvement in this area is especially critical for English language learners (ELLs), a group that has a relatively short history of inclusion in large-scale assessments. However, an important prerequisite for the systematic application of theory to select appropriate accommodations is the collection of accurate and relevant data about the student to use as the basis for decision making. This paper introduces a multi-source, theory-driven approach for gathering information to assign appropriate accommodations to individual ELLs. The value of this approach is investigated in the context of a computerized system called the Selection Taxonomy for English Language Learner Accommodations (STELLA), which also uses the data to make a systematic accommodations recommendation based on individual student needs. Results of the study suggest that using a structured data collection procedure with multiple sources does not have an effect on the nature of teachers’ accommodations recommendations for their students, but that it does contribute to improved accommodation recommendations when it is used in conjunction with the STELLA decision rules. Results also support the use of multiple sources, especially the student’s parent, in collecting information to be used in making an accommodation recommendation.

ROY LEVY: Posterior Predictive Model Checking for Factor Analysis

Roy Levy, University of Maryland

Abstract

We describe the use of measures for conducting posterior predictive model checking to investigate data-model misfit in factor analytic models. Though such measures have been applied in factor analytic models, the techniques and their potential are not generally understood. This paper discusses these techniques, including their potential advantages over traditional mechanisms in terms of their flexibility and the appropriateness of their application to complex models. An example based on a simulated data set illustrates the procedures. It is argued that further methodological attention is warranted.

April 3, 2006 – Moderator: Jaehwa Choi

ROY LEVY: Alternative Approaches To Validity In A Modeling Framework

Roy Levy & Gregory R. Hancock, University of Maryland

Abstract

Developments in modeling, in particular the construction, use, and criticism of latent variable models, have spurred recent interest in viewing validity from a modeling perspective. Alternative approaches to validity are characterized from a modeling perspective. Illustrations of data analysis under these alternative approaches highlight their differences in terms of model evaluations and recommendations, and the limitations of the approaches themselves.

PENG LIN: Characteristics and Differential Functioning of Alternative Response Options for the English Section of ACT Assessment

Peng Lin & Amy Hendrickson, University of Maryland

Abstract

An important characteristic of multiple-choice items is the alternative options, or distracters. However, in most multiple-choice item analyses, the item responses are coded as 1 (correct) or 0 (incorrect). With this coding scheme, the distinction among the alternative options is lost (Thissen, 1984). Analyzing multiple-choice items at the option level provides more detailed information about the behavior of both the keyed option and the alternative options. The purpose of this study is to conduct a series of analyses to illustrate how to obtain detailed and useful information about the options. Seventy-five items of the 1995 ACT English section were used. In the first stage, option characteristic curves (OCCs) were estimated using a nonparametric IRT approach. Option performance was examined based on the features of the OCCs. In the second stage, DIF analysis was first conducted for all items using a logistic regression method. For the items displaying DIF, multinomial logistic regression was then used for differential option functioning (DOF) analysis. The subgroups of interest in this study were defined by gender and ethnicity.

BRANDI WEISS: An Investigation of New Computations of Response Time Effort

Brandi A. Weiss, University of Maryland; Steven L. Wise, James Madison University

Abstract

In “low-stakes” testing situations, adequate examinee motivation is difficult to assume because the test scores have no personal impact on the students. Wise and Kong (2005) developed an unobtrusive measure of response time effort (RTE) to measure examinee motivation during such testing situations. The development of RTE was based on the notion that examinees who responded too quickly to an item were engaging in rapid-guessing behavior (no effort), whereas examinees who spent a reasonable amount of time on an item were engaging in solution behavior (effort). The computation of RTE is based on an examinee’s response time, in which values of either zero (no effort) or one (effort) are assigned for each test item. These effort values are then summed and divided by the total number of test items to obtain a proportional RTE index. The current study aimed to evaluate the effect that dichotomizing the response times for individual items has on the measure of RTE. Four new computations of RTE that allowed effort to be considered continuous were compared to Wise and Kong’s (2005) RTE index. Results indicated that the reliability and validity of all five RTE indices were similar. Therefore, dichotomizing item responses prior to aggregation did not negatively affect the reliability or validity of the measure of RTE. Interestingly, however, evidence for a third type of response behavior (i.e., abandonment) was found. Recommendations for selecting the most appropriate RTE computation based on the type(s) of response behavior(s) one wishes to identify are discussed.
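
The dichotomous RTE computation described in the abstract above (assign 0 or 1 per item, sum, divide by the number of items) can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' code: the abstract does not say how "too quickly" is operationalized, so the per-item time thresholds, the function name `rte_index`, and the sample response times are all hypothetical. The four continuous variants are not described in the abstract and are not attempted here.

```python
from typing import Sequence


def rte_index(response_times: Sequence[float], thresholds: Sequence[float]) -> float:
    """Proportion of items on which one examinee showed solution behavior.

    response_times: time spent on each item (seconds).
    thresholds: hypothetical per-item cutoffs; a response faster than the
        cutoff is treated as a rapid guess (assumption, not from the source).
    """
    if len(response_times) != len(thresholds):
        raise ValueError("need exactly one threshold per item")
    if len(response_times) == 0:
        raise ValueError("need at least one item")

    # 1 = effort (time at or above the cutoff), 0 = no effort (rapid guess)
    effort = [1 if rt >= cut else 0 for rt, cut in zip(response_times, thresholds)]

    # Sum the effort values and divide by the total number of items
    return sum(effort) / len(effort)


# Made-up response times for one examinee on five items
times = [12.0, 2.0, 30.5, 1.5, 8.0]
cutoffs = [5.0, 5.0, 10.0, 5.0, 5.0]
print(rte_index(times, cutoffs))  # 3 of 5 items show effort -> 0.6
```

An index near 1 would indicate solution behavior on nearly every item, while an index near 0 would indicate mostly rapid guessing; where to place the cutoffs is the substantive modeling decision and is left open here.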