The demise of libraries in general and reference services in

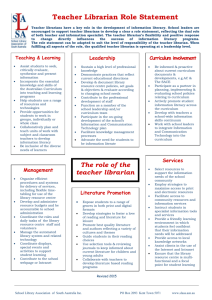

advertisement

A Brief History of Reference Assessment: No Easy Solutions
FIROUZEH F. LOGAN
Abstract: Discusses the different methods of reference assessment that have been tried in libraries over the years to help improve service, justify the existence of the service itself, train new staff, and counter declining statistics.
KEYWORDS: assessment standards, academic libraries, history
Firouzeh F. Logan
Head of Reference, Assistant Professor
University of Illinois, Chicago
MLIS, Dominican University
801 S Morgan
Chicago, IL 60607
flogan@uic.edu
Introduction
The demise of libraries in general, and of reference services in particular, has been the subject of debate for so long that the need to justify the value of both has taken on a life of its own. The world of reference services has dealt with this uncertain future by developing a keen interest in assessment, a scientific and statistical means by which to ensure a future. Even though many library users consider reference service a standard feature of libraries, declining statistics, effective free internet search engines, and tight budgets have led to predictions of irrelevance. There is a concern that falling reference statistics are in and of themselves dangerous, and a fear that if one cannot count something, then it doesn’t really count. The dilemma is what to count and how to count it. Does a hash mark really reflect the reference transaction? And does a statistic adequately represent the quality and value of reference? Compared with other library departments such as collection development, acquisitions, and cataloging, reference service is a recent addition to the practice of librarianship.
“Reference service as we know it today is a direct outgrowth of the nineteenth-century American public education movement.”1 At that time, corporate America felt that a literate working class was vital to productivity. After the birth of the nation, Jefferson had planted the idea that literacy was vital to the survival of democracy. The “noble experiment,” universal education for all citizens, spread very rapidly at this time, not only to sustain a vital democracy but also to ensure that the immigrant population pouring into the country was socially and politically integrated into this new world. “One direct consequence of the public education movement was the development of true ‘public’ libraries.”2 Reference services were born to help this illiterate population make use of the collections and materials in the libraries. In 1876, Samuel Swett Green of the Worcester Free Public Library in Massachusetts wrote “Personal Relations between Librarians and Readers” in Library Journal, in which he set certain standards for reference service: “make it easy for such persons to ask questions … a librarian should be as unwilling to allow an inquirer to leave the library with his questions unanswered as a shop keeper is to have a customer go out of his store without making a purchase … Be careful not to make inquirers dependent.”3 The beginning of reference service is generally attributed to Green, whose article was the first to discuss the importance of helping patrons use the library. This idea was, and is, so popular that for most library users reference services represent the most personal connection they have to the library. The four functions Green defined as reference functions (instructing patrons in how to use the library, answering patron queries, helping patrons select resources, and promoting the library within the community) have not changed, but the tools and models of these services have changed drastically.
In light of these changes, the value of these functions and the ability of reference service to satisfy the unique informational needs of its community have been called into question, creating a need to prove both. Even though much has changed since 1876, the question of how to measure how well librarians meet Green’s standards remains open. From the beginning, it was understood that reference service consists of a series of activities that are difficult to define, and it was recognized that evaluation criteria needed to be established. Yet fewer than forty articles on the subject were published before the late 1960s; librarians talked more about measuring and evaluating reference services than they actually measured and evaluated them. There were warning signs even then that things would change: “Reference librarians in failing to provide the means for accurate judgment of their place and contribution in library service run the serious risk of having their work undervalued or ignored.”4 For the next four decades, librarians discussed, tested, implemented, and wrote about different methods of assessing reference services. This paper looks at the topic through the wider lens of history, weighs the pros and cons of each decade’s favored measurement categories, and examines the following standards: number of transactions, accuracy of answers, quality of the service encounter, and patron satisfaction.
The 1970s and 1980s
Even though most evaluation studies in the 1960s looked at collections, a new interest in reference service arose in the 1970s. Terence Crowley and Thomas Childers examined reference service thoroughly in Information Service in Public Libraries: Two Studies.5 During that decade, King and Berry, Childers, Ramsden, and House6 studied the ability of library staff to provide accurate answers.
This decade also marked the use of unobtrusive testing to assess reference quality.7 In the 1970s and 1980s, librarians were becoming more interested in access and service rather than just collections and materials. More approaches to evaluating reference services began to appear in the literature. Assessment standards and procedures began to mention goals and methods for conducting user studies and surveys. These studies used independent judges for obtrusive and unobtrusive investigation, along with means to evaluate the accuracy of librarians’ responses and their ability to properly negotiate the reference transaction. Sampling procedures made an appearance, and there was acknowledgment that assessing demand numerically needed to give way to qualitative evaluation. The 1980s were the critical decade for service evaluation. Ellen Altman’s seminal article, “Assessment of Reference Service,” which reviewed the literature on the assessment of reference service, noted that there was no standard by which to measure or evaluate reference service.8 In the same year, William Katz reviewed reference evaluation in detail, noting the major literature in this area. Katz’s work, in all its editions, has been influential in defining and establishing objectives for reference. His edited volume Evaluation of Reference Services outlined various techniques that could be used to determine the efficiency and effectiveness of reference services.9 Each of its essays agrees that performance appraisal in the reference setting is sorely lacking in both frequency and quality. The literature shows the growing importance of accuracy studies, driven by unobtrusive studies reporting accuracy rates as low as the infamous “55 percent”10 for questions answered by reference librarians, irrespective of the kind of library. Peter Hernon and Charles McClure called this low quality of service a crisis.
“The idea that reference librarians are successful in answering questions only a little over half of the time has had profound effects in the field of librarianship.”11 Another favored method of assessment was the user satisfaction survey. Both performance appraisal and surveys have advantages and disadvantages. An obvious advantage of unobtrusive observation is its immediacy; similarly, the survey is valued because it is given immediately after the reference encounter. However, even with the troubling “55 percent rule” looming, there were questions about the validity of unobtrusive testing. Terry Weech and Herbert Goldhor found that staff answer a greater proportion of reference questions completely and correctly when they are aware of being evaluated.12 It was also recognized that patrons seemed to care less about the accuracy of the help they received than about the friendliness and helpfulness of the librarian. In 1981, Howard D. White recommended a study based on librarians reporting the following for each transaction: the sources used, the patron class, whether the question was answered by a professional or a nonprofessional, whether the question was directional or reference, the time taken, and the newness of the source to the patron.13 None of these methods identified the factors that could successfully improve the quality of the reference service they were meant to evaluate. As the literature began describing the gathering and analysis of statistics as valuable tools for library management, the use of statistics to evaluate reference service also began to take hold. Participants in a 1987 symposium on reference service agreed that reference service is more than short, unambiguous questions and answers. This raised the question: what kinds of statistics would adequately measure the quality and effectiveness of reference?
Proceedings of ALA programs of the era include many papers by librarians and others on how to develop reliable methods for measuring reference service. It was at this time that definitions of reference and directional transactions were being developed. In the first edition of his seminal Introduction to Reference Work, William Katz claims that “There is neither a definitive definition of reference, nor a consensus as to how reference services may be evaluated and measured.”14 In 1971, Charles Bunge defined reference service as “the mediation, by a librarian, between the need structures of users and the structures of information resources.”15 After multiple iterations, Whitlatch defined reference as “personal assistance given by library staff to users seeking information. This assistance includes answering questions at the reference desk, on the telephone, and via e-mail; performing quick literature searches, instructing people in the use of library reference indexes . . . reference sources, electronic resources and providing advisory service to readers.”16 Once definitions were being discussed, people began writing about the need for assessment and about its methods, instruments, and pitfalls. “The need for assessment, from the evaluation of reference and instruction to the evaluation of collections, was a constant theme in the early 1980s and beyond.”17 The need to improve service was universally recognized, and there is an extensive body of scholarly literature suggesting different methods and tools to improve the quality of reference service. Many articles address the reference transaction or encounter: the interview, the follow-up, the answer, librarian behavior, nonverbal communication, ethical issues such as privacy, efficiency of service, quality and quantity of resources, and outcomes (patron satisfaction).
There is also literature on the various approaches to evaluating reference services: observation (unobtrusive and obtrusive), user judgment, librarian judgment, surveys and questionnaires, log analysis, outcomes assessment, interviews, and focus groups.
The 1990s
In the 1990s, evaluation remained important and was taken up by many, including RUSA president Jo Bell Whitlatch. She and others wrote not only about traditional face-to-face service but also about reference in the age of electronic technology.18 Like many before and after her, Whitlatch called for consistent principles for the evaluation of reference service, regardless of method. However, her guidebook for setting up a reference service evaluation and understanding its results did not provide an effective tool for measuring reference service quality in a replicable manner. David Tyckoson argued that evaluating reference based on the librarians’ answers is too simplistic.19 No matter how important it is to correctly identify sources for patrons, patrons are much more satisfied with the transaction if the librarian’s behavior meets their approval (eye contact, listening, open-ended questions). A survey by Whitlatch also suggested that the librarian’s interpersonal skill and task-related knowledge were the most significant variables of the service interaction.20 Many other studies support this conclusion. Studies in the 1990s agreed that the dimensions of quality in reference service are willingness, knowledge, morale, and time. These studies recognized that reference is not so much providing answers as identifying effective sources and advising on research strategies, especially in academic libraries, where reference services are geared to the will to learn and to solve problems. With the onset of digital reference (email and chat in the 1990s), evaluation studies of the quality of traditional reference service began to wane.
In the 1990s, the profession was concerned with the effectiveness and efficiency of the process: timeliness of response, clarity of procedure, staff training, and so on. There was debate over the merits of offering and staffing the service, the cost of the service, the kind of software to select, and whether it was worthwhile even to try to offer synchronous service.
The 2000s
As digital reference provided new ways of examining the reference transaction, the emphasis in evaluation shifted to outcomes: “At the October 2000 Virtual Reference Desk Conference in Seattle, the growing digital reference community identified assessment of quality as a top research priority.”21 Digital reference was meant to replicate the personalized service offered in a face-to-face transaction in the library. Once again, assessment meant more than statistics: “The increasingly ubiquitous nature of electronic reference services in academic libraries and the scarcity of evaluation of such services, particularly evaluation of user satisfaction, are common themes in the literature.”22 Technical progress has made this service possible, and digital reference has a visibility that no reference desk can match, but in the end best practices are still rooted in traditional service: a user with an informational need seeking a librarian to clarify and address that need. Even though the method of service delivery is different, the quality characteristics of traditional reference still apply. In the 2000s, a new vocabulary emerged.
The emphasis in the literature is no longer on the numbers, or even on the quality of reference, so much as on the role reference plays in instilling “lifelong learning” skills and on how reference contributes to “information literacy.” The focus has shifted to “learning outcomes” and “information competency.” Some, such as Novotny and Rimland, discuss the utility of older methods of assessment such as WOREP (Wisconsin-Ohio Reference Evaluation Program),23 but most, such as Sonley, Turner, and Myer, describe current and future projects assessing digital reference and online instruction.24 Once again, patron satisfaction is being used to assess the service. Although patron satisfaction is an essential component of successful reference service, and assessing it is useful, the history of assessment shows that patrons are not good evaluators of quality. Many people want a reference librarian to alleviate or confirm their uncertainties. Satisfaction surveys can measure whether the service was quick and whether the librarian was courteous and professional, but they do not seem able to measure the accuracy of the answer or the quality of the sources offered. Despite the proliferation of service formats, assessment has come full circle, with research focusing on content analysis and patron surveys. The process has always engendered a great deal of discomfort. Librarians are not only hard pressed to define their service, but they are also faced with the frustrating search for a standard, comparable methodology. No one method can provide a complete picture of reference or of the quality of service.
Conclusion
Reference assessment emerged as an exercise to maintain or improve the quality of service. It evolved into a need to justify and preserve the service. After many decades of defining, discussing, and experimenting, there is still no universally accepted method of assessment. This is not a problem unique to reference.
All professions struggle with the need to measure success and improve quality, including fields with eminently measurable outcomes such as medicine.25 At first, in order to measure services, librarians used statistics, counting what they did. Yet there were so many variations in how statistics were kept that they were not a reliable basis for comparison over time, and they certainly could not measure the quality of the reference interaction. Part of the problem with relying on numbers alone was that numbers could not reflect the intangibles of service. There are many ways to define reference service quality, but no “right” way. With the development of the World Wide Web, the 1990s saw a revolution in how reference librarians work, and there is still no perfect tool with which to evaluate the service. The profession has recognized the need to adopt a set of measurable values: cost effectiveness, productivity, satisfaction with service, quantity and quality of resources, staffing, equipment, and facilities. However, just as the objectives of reference are varied (to meet an informational need, to teach about the research process, or to help a user become independent), so too must assessment be varied. The variables are such that it has not been possible to develop a tool that can be replicated everywhere. The goal, therefore, must be to establish criteria for good service, but the criteria must be flexible. What tool would be able to reconcile high patron satisfaction with low quality of service, or to measure the proper levels of service, accuracy, and follow-up in different kinds of libraries with different missions? And how would one conduct accurate, ethical, unobtrusive studies? The many variables of budgets, collections, types of library, difficulty and types of questions, the rate of activity at the desk, and the nature of the patron make this a daunting task.
Individual programs should develop their own list of qualities associated with good reference service. The list should include behavioral characteristics (attitude, ability to communicate, approachability, etc.), basic knowledge of resources and collections, subject knowledge, and reference skills (ability to discern the appropriate level of help, when to refer, use of resources, time limitations, interviewing technique, relevance, accuracy, perspective, and bias). Not every method can be standardized to the point that it allows comparative analysis. No single evaluation framework or method will provide all the necessary data; one size does not fit all. There is no ideal measurement tool, but every reference department must nonetheless examine its service, not because of the danger of extinction, but in order to set proper departmental priorities and to define and articulate its level of commitment to meeting people’s information needs. Despite the imperfections inherent in assessment, there is great satisfaction in becoming proficient and accomplished providers of service to a public that, despite the advent of Google and other powerful tools, still needs help in meeting its information needs.
NOTES
1 Tyckoson, Library Trends, 185.
2 Ibid.
3 Green, 5.
4 Rothstein, 173.
5 Crowley and Childers.
6 See References.
7 Hults, 141.
8 Altman, 169.
9 Katz and Fraley.
10 Hernon and McClure, 37.
11 Ibid.
12 Weech and Goldhor, 305.
13 Howard White, 3.
14 William A. Katz, Introduction to Reference Work, 3.
15 Bunge, 109.
16 Jo Bell Whitlatch, Evaluating Reference Services: A Practical Guide.
17 Diane Zabel, 284.
18 See References.
19 David A. Tyckoson, The Reference Librarian, 151.
20 Jo Bell Whitlatch, Evaluating Reference Services: A Practical Guide.
21 Lankes et al., 323.
22 Stoffel and Tucker, 120.
23 Novotny and Rimland, 382.
24 Sonley et al., 41.
25 Jerome Groopman, 36.
References
Altman, Ellen. “Assessment of Reference Service.” In The Service Imperative for Libraries: Essays in Honor of Margaret E. Monroe, edited by Gail A. Schlachter. Littleton, CO: Libraries Unlimited, 1982.
Barbier, Pat, and Joyce Ward. “Ensuring Quality in a Virtual Reference Environment.” Community & Junior College Libraries 13 (2004) no. 1:55-71.
Berry, Rachel, and Geraldine B. King. “Evaluation of the University of Minnesota Libraries Reference Department Telephone Information Service.” Pilot Study. Minneapolis: Library School, University of Minnesota, 1973. ED 077 517.
Berube, Linda. “Collaborative Digital Reference: An Ask a Librarian (UK) Overview.” Program 38 (2004) no. 1:29-41.
Brumley, Rebecca. The Reference Librarian’s Policies, Forms, Guidelines, and Procedures Handbook. Neal-Schuman Publishers, 2006.
Bunge, Charles. “Reference Service in the Information.” In Interlibrary Communications and Information Networks, edited by Joseph Becker. Chicago: American Library Association, 1971.
Carter, David S., and Joseph Janes. “Unobtrusive Data Analysis of Digital Reference Questions and Service at the Internet Public Library: An Exploratory Study.” Library Trends 49 (2000) no. 2:251-265.
Childers, Thomas. “The Quality of Reference: Still Moot after 20 Years.” Journal of Academic Librarianship 13 (1987):73-75.
Crews, Kenneth D. “The Accuracy of Reference Service: Variables for Research and Implementation.” Library & Information Science Research 10 (1988):331-355.
Crowley, Terence, and Thomas Childers. Information Service in Public Libraries: Two Studies. Metuchen, NJ: Scarecrow Press, 1971.
Gilbert, Lauren M., Mengxiong Liu, Toby Matoush, and Jo B. Whitlatch. “Assessing Digital Reference and Online Instructional Services in an Integrated Public/University Library.” Reference Librarian 46 (2006) no. 95:149-172.
Green, Samuel S. “Personal Relations Between Librarians and Readers.” Originally published October 1, 1876. Library Journal 118 (1976):S5.
Groopman, Jerome. “What’s the Trouble?” New Yorker 82 (2007) no. 47:36-41.
Hall, Blaine H., Larry D. Benson, and H. J. Butler. “An Evaluation of Reference Desk Service in the Brigham Young University Library.” In Mountain Plains Library Association, Academic Library Section, Research Forum: Preservers of the Past, Shapers of the Future. Emporia State University Press, 1988.
Hernon, Peter. “Utility Measures, Not Performance Measures, for Library Reference Service.” RQ 26 (1987):449-459.
Hernon, Peter, and Ellen Altman. Assessing Service Quality: Satisfying the Expectations of Library Customers. Chicago: American Library Association, 1998.
Hernon, Peter, and Charles R. McClure. “Library Reference Service: An Unrecognized Crisis.” The Journal of Academic Librarianship 13 (1987):69-71.
Hernon, Peter, and Charles R. McClure. “Unobtrusive Reference Testing: The 55 Percent Rule.” Library Journal 111 (1986) no. 7:37-41.
Hernon, Peter, and John R. Whitman. Delivering Satisfaction and Service Quality: A Customer-Based Approach for Libraries. Chicago: American Library Association, 2001.
Hill, J. B., Cherie Madarash-Hill, and Ngoc P. T. Bich. “Digital Reference Evaluation: Assessing the Past to Plan for the Future.” Electronic Journal of Academic and Special Librarianship 4 (2003).
Hinderman, Tammy A. “What Is Your Library Worth? Changes in Evaluation Methods for Academic Law Libraries.” Legal Reference Services Quarterly 24 (2005) no. 1:1-40.
Hodges, Ruth A. “Assessing Digital Reference.” Libri 52 (2002) no. 3:157-168.
Hults, Patricia. “Reference Evaluation: An Overview.” The Reference Librarian 38 (1992):141-150.
Janes, Joseph. “What Is Reference For?” Reference Services Review 31 (2003) no. 1:22-25.
Jensen, Bruce. “The Case for Non-Intrusive Research: A Virtual Reference Librarian’s Perspective.” Reference Librarian 85 (2004):139-149.
Katz, William A., and Ruth Fraley. Evaluation of Reference Services. Haworth Press, 1984.
Katz, William A. Introduction to Reference Work. New York: McGraw-Hill, 1982.
Katz, William A. Introduction to Reference Work. New York: McGraw-Hill, 1969.
Kawakami, Alice, and Pauline Swartz. “Digital Reference: Training and Assessment for Service Improvement.” Reference Services Review 31 (2003) no. 3:227-236.
Kemp, Jan H., and Dennis J. Dillon. “Collaboration and the Accuracy Imperative: Improving Reference Service Now.” RQ 29 (1989):62-70.
Kuruppu, Pali U. “Evaluation of Reference Services: A Review.” The Journal of Academic Librarianship 33 (2007) no. 3:368-381.
Lankes, R., et al. “Assessing Quality in Digital Reference Services.” Proceedings of the 64th Annual Meeting of the American Society for Information Science and Technology (2001):323-329.
Lee, Ian J. “Do Virtual Reference Librarians Dream of Digital Reference Questions?: A Qualitative and Quantitative Analysis of Email and Chat Reference.” Australian Academic & Research Libraries 35 (2004) no. 2:95-110.
McClure, Charles R. Statistics, Measures, and Quality Standards for Assessing Digital Reference Library Services: Guidelines and Procedures. Information Institute of Syracuse, School of Information Studies, Syracuse University, 2002.
McNally, Peter F. “Teaching Performance Measurement for Reference Service.” The Reference Librarian 25-26 (1989):591-600.
Mendelsohn, Jennifer. “Perspectives on Quality of Reference Service in an Academic Library: A Qualitative Study.” RQ 36 (1997):544-557.
Monroe, Margaret E., and Gail A. Schlachter, eds. The Service Imperative for Libraries: Essays in Honor of Margaret E. Monroe. Littleton, CO: Libraries Unlimited, 1982.
Novotny, Eric, and Emily Rimland. “Using the Wisconsin-Ohio Reference Evaluation Program (WOREP) to Improve Training and Reference Services.” Journal of Academic Librarianship 33 (2007) no. 3:382-392.
Peters, Thomas A. “Current Opportunities for the Effective Meta-assessment of Online Reference Services.” Library Trends 49 (2000) no. 2:334-349.
Ramsden, M. Performance Measurement of Some Melbourne Public Libraries: A Report to the Library Council of Victoria. Victoria, Australia: Library Council of Victoria, 1978.
Rockman, Ilene F. “The Importance of Assessment.” Reference Services Review 30 (2002) no. 3:181-182.
Ronan, Jana, Patrick Reakes, and Gary Cornwell. “Evaluating Online Real-Time Reference in an Academic Library: Obstacles and Recommendations.” Reference Librarian 79 (2003):225-240.
Rothstein, Samuel. “The Measurement and Evaluation of Reference Service.” Reprinted from Library Trends (January 1964). The Reference Librarian 25-26 (1989):173-190.
Schwartz, Diane G., and Dottie Eakin. “Reference Service Standards, Performance Criteria, and Evaluation.” Journal of Academic Librarianship 12 (1986) no. 1:4-8.
Sonley, Valerie, Denise Turner, Sue Myer, and Yvonne Cotton. “Information Literacy Assessment by Portfolio: A Case Study.” Reference Services Review 35 (2007) no. 1:41-70.
Stacy-Bates, Kristine. “E-mail Reference Responses from Academic ARL Libraries: An Unobtrusive Study.” Reference & User Services Quarterly 43 (2003) no. 1:59-70.
Stoffel, Bruce, and Toni Tucker. “E-mail and Chat Reference: Assessing Patron Satisfaction.” Reference Services Review 32 (2004) no. 2:120-140.
Tobin, Carol M. “The Future of Reference: An Introduction.” Reference Services Review 31 (2003) no. 1:9-11.
Tyckoson, David A. “Wrong Questions, Wrong Answers: Behavioral vs. Factual Evaluation of Reference Service.” The Reference Librarian 38 (1992):151-173.
Tyckoson, David A. “What Is the Best Model of Reference Service?” Library Trends 50 (2001) no. 2:183-196.
Ward, David. “Using Virtual Reference Transcripts for Staff Training.” Reference Services Review 31 (2003) no. 1:46-56.
Weech, T. L., and H. Goldhor. “Obtrusive Versus Unobtrusive Evaluation of Reference Service in Five Illinois Public Libraries: A Pilot Study.” Library Quarterly 52 (1982) no. 4:305-324.
Wesley, Threasa L. “The Reference Librarian’s Critical Skill: Critical Thinking and Professional Service.” The Reference Librarian 30 (1990):71-81.
White, Howard D. “Measurement at the Reference Desk.” Drexel Library Quarterly (1981):3-35.
White, Marilyn D., Eileen G. Abels, and Neal Kaske. “Evaluation of Chat Reference Service Quality: Pilot Study.” D-Lib Magazine 9 (2003).
Whitlatch, Jo B. Evaluating Reference Services: A Practical Guide. Chicago: American Library Association, 2000.
Whitlatch, Jo B. “Evaluating Reference Services in the Electronic Age.” Library Trends 50 (2001) no. 2:207-217.
Zabel, Diane. “Is Everything Old New Again?” Reference and User Services Quarterly 45 (2006):184-189.