Take Home Test

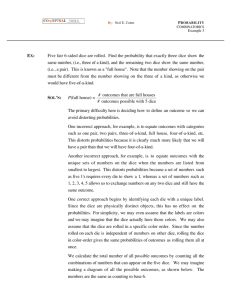

Machine Learning + Extended – 2nd Test (Take home test)

Deadline: Thursday 6 December 2012, lecture time. Hand in directly to the lecturer.

Question 1. In a casino, two differently loaded but identical-looking dice are thrown in repeated runs. The frequencies of the numbers observed in 40 rounds of play are as follows:

Dice 1, [Nr, Frequency]: [1,5], [2,3], [3,10], [4,1], [5,10], [6,11]

Dice 2, [Nr, Frequency]: [1,10], [2,11], [3,4], [4,10], [5,3], [6,2]

a) Characterize the two dice by the corresponding random sequence model they generated. That is, estimate the parameters of the random sequence model for both dice. [12%]

b) Some time later, one of the dice has disappeared. You (as the casino owner) need to find out which one. The remaining one is now thrown 40 times and here are the observed counts: [1,8], [2,12], [3,6], [4,9], [5,4], [6,1]. Decide the identity of the remaining die, explaining your reasoning. [22%]

Question 2. Consider the deterministic reinforcement environment drawn below. The nodes are states, the arcs are actions, and the numbers on the arcs are the immediate rewards. Let the discount rate equal 0.8. The L/R/C at the beginning of each arc is the name of the action that arc represents.

a) Start with a Q-table that initially contains all Q-values equal to 3 (an arbitrary choice). Use Q-learning to update these values after each of the following three episodes, showing all of your working: [24%]

Episode 1: start → a → b → d → end

Episode 2: start → a → b → end

Episode 3: start → a → d → end

b) What is the optimal policy estimate at the end of the last episode in a)? [10%]

Question 3.

a) Argue whether the following statement is true or false: A classifier that attains 100% accuracy on the training set and 70% accuracy on the test set is better than a classifier that attains 70% accuracy on the training set and 75% accuracy on the test set. [10%]

b) Describe a conceptual similarity between Independent Component Analysis and Latent Semantic Analysis. You can use examples to help you explain. [10%]

c) Which of the classifiers that you know of would perform well when the data classes are non-linearly separable? List all the classifiers that you would consider for such a case. [10%]

d) In decision tree learning, can perfect purity always be achieved at the leaf nodes once we have used all attributes? Why or why not? [4%]
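
As an illustration of the kind of computation Question 1 calls for, here is a minimal sketch. It is one possible approach, not the official solution. It assumes each die is modelled as an independent multinomial sequence, that the parameters are estimated by maximum likelihood (relative frequencies), and that both dice are equally likely a priori, so the decision reduces to comparing log-likelihoods. The function and variable names are made up for this example; the counts are copied from the question.

```python
import math

# Face counts for faces 1..6, copied from Question 1.
dice1_counts = [5, 3, 10, 1, 10, 11]
dice2_counts = [10, 11, 4, 10, 3, 2]
observed_counts = [8, 12, 6, 9, 4, 1]


def estimate_probs(counts):
    """Maximum likelihood estimate of face probabilities (relative frequencies)."""
    total = sum(counts)
    return [c / total for c in counts]


def log_likelihood(counts, probs):
    """Log-likelihood of the counts under a multinomial model.

    The multinomial coefficient is omitted: it depends only on the counts,
    so it is the same for both dice and cancels in the comparison.
    Assumes every probability is non-zero, which holds for the data above.
    """
    return sum(n * math.log(p) for n, p in zip(counts, probs))


p1 = estimate_probs(dice1_counts)
p2 = estimate_probs(dice2_counts)

ll1 = log_likelihood(observed_counts, p1)
ll2 = log_likelihood(observed_counts, p2)

# With equal priors, choose the model with the larger log-likelihood.
more_likely = "Dice 1" if ll1 > ll2 else "Dice 2"

print("Estimated parameters, Dice 1:", p1)
print("Estimated parameters, Dice 2:", p2)
print("Log-likelihood under Dice 1:", ll1)
print("Log-likelihood under Dice 2:", ll2)
print("More likely remaining die:", more_likely)
```

If a face had never been observed for one of the dice, its estimated probability would be zero and the logarithm would fail. A smoothed estimate (for example, adding one to every count) would be a reasonable variation in that case.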