Towards a pan-European approach to classifying indicators of care quality.

Alex Mears

Paul Long

Jan Vesseur

Part 1: Describing a framework

Introduction

Throughout Europe (and indeed the broader developed world) there is increasing interest in measuring the quality of health and personal care services as a mechanism to stimulate improvement, inform consumer choice, provide public and payer accountability, and populate risk management strategies.

However, while this is occurring widely, one of its potential benefits has not yet been widely grasped: international comparison, which would allow countries to compare themselves with global best practice, contextualise national performance and raise their sights above the local. While the OECD is taking some first steps, regulators and inspectors themselves have yet to collaborate widely on comparing existing indicator sets.

One reason for this is that there is no clear method for classifying indicators and thus ensuring that they are actually comparable. The task is made difficult by the fact that each system inevitably collects slightly different data, classifies inputs, outputs and outcomes rather differently, and has rather different conceptualisations of health and care. It becomes more complex still because the required classification is multidimensional.

The following paper is a first attempt to define just such a classification. Its genesis was a meeting of EPSO (European Partnership of Supervising Organisations), where Dutch, English and Swedish representatives compared experiences in this field and considered how to advance the agenda.

This conversation resulted in the commitment to start to develop a multidimensional approach to classifying indicators about care. The following note sets out how we intend to go about this.

Dimensions

The dimensions that we need to consider are as follows:

1 Conceptualisation of quality

2 Donabedian definition (Structure, process, outcome)


3 Data type (derivable, collectable from routine sources, special collections, samples)

4 Indicator use (single-indicator judgement, judgement as part of a framework, benchmarking, risk assessment)

1 Conceptualisation of quality

Various international bodies have developed many conceptualisations of quality, and the conceptualisation adopted forms the first part of the classification framework. From these, the elements below have been identified as common themes and will be used as part of the model for this project.

The parameters of the conceptualisation depend to some extent upon how broadly the model of care being assessed is drawn. Is it purely medical care, or a more holistic view of care that includes social and nursing care? If the latter, then some notion of healthy, independent or even fulfilled lives would be added to the conceptualisation.

Safe care (avoidance of harmful intervention)

Effectiveness (care which conforms with best practice and which is most likely to maximise benefit for patients and service users)

Patient/Service User Experience (how positive the act of giving care was for the person receiving it)

There is then often a consideration of:

Efficiency (how effectively resources are distributed to maximise benefit to service users per unit of resource expended)

Where a broader definition of care is included there is often one or both of:

Healthy and independent lives for individuals

Population health

2 Donabedian or structure, process, outcome

The Donabedian (Structure, process, outcome) conceptualisation of achievement of quality is well known. Structure refers to the underpinning infrastructure and resources that an organisation has in place to achieve its aims (people, materiel, policies and procedures). Process refers to what an organisation actually does, and outcome refers to the results of what an organisation does.

There is a widespread enthusiasm for measuring outcomes. Achievement of outcomes for individuals (as opposed to the performance of specific tasks) is, after all, the purpose of care. Yet as measures of quality, outcomes have limitations, largely associated with issues of causality. For example, does a low mortality rate in a hospital point to better care, healthier patients, or (particularly where low numbers are involved) chance? The honest answer is that from the outcome measure alone we are unlikely to be able to tell.
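To make the point about chance concrete, the sketch below computes approximate 95% confidence intervals for the mortality rates of two small units. It is a minimal sketch, assuming a Wilson score interval (our choice; the paper does not prescribe a method) and entirely hypothetical figures. Overlapping intervals are only a rough signal that an observed difference could be due to chance, and the calculation says nothing about differences in case mix.

```python
import math


def wilson_interval(deaths: int, patients: int, z: float = 1.96) -> tuple[float, float]:
    """Approximate 95% Wilson score interval for a proportion (here, a mortality rate)."""
    if patients <= 0:
        raise ValueError("patients must be positive")
    p = deaths / patients
    denom = 1 + z**2 / patients
    centre = (p + z**2 / (2 * patients)) / denom
    half_width = z * math.sqrt(p * (1 - p) / patients + z**2 / (4 * patients**2)) / denom
    return centre - half_width, centre + half_width


# Hypothetical figures: two small units with apparently different mortality rates.
low_a, high_a = wilson_interval(deaths=2, patients=50)  # observed 4%
low_b, high_b = wilson_interval(deaths=5, patients=50)  # observed 10%

# If the intervals overlap, the outcome measure alone cannot separate
# better care from chance (let alone from healthier patients).
overlap = low_b <= high_a and low_a <= high_b
print(f"A: {low_a:.3f}-{high_a:.3f}  B: {low_b:.3f}-{high_b:.3f}  overlap={overlap}")
```

With only 50 patients per unit, a 4% and a 10% observed rate produce heavily overlapping intervals, which is the "low numbers" problem described above.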

Process measures have the advantage that they are easier to interpret. For example, if prophylactic antibiotic use is indicated, then the indication should be followed. Because of this, it is possible to construct indicators where higher or lower is unequivocally better. The argument against them is that their specificity can lose sight of the whole process of care, and they can end up rewarding organisations for doing the “wrong thing” very well. For example, a hospital may treat patients according to good clinical practice once admitted, yet have extraordinarily high admission rates because the system as a whole does not work together to minimise hospital admissions (which, if nothing else, is likely to be an inefficient use of resources).

Structure is in general the least valuable of the three types of indicator for identifying areas of weakness or strength, although it has great value for providing hypotheses about why outcomes and processes look good or bad. For example, insufficient or insufficiently trained staff may explain poor processes and treatment.

Because much structural information is necessary for the legal administration of organisations (e.g. expenditure, staff numbers), it is the most routinely available, and it is therefore often used as a proxy for unavailable data on processes or outcomes. This, however, is risky. The fact that staff are trained in a particular procedure does not mean that they undertake it correctly. The fact that a service is audited does not mean it is good.

The insufficiency of any one of the three types of measure alone means that we should look to use all three in a systematic way wherever possible.

Outcomes and processes combined help us to identify whether there may be problems (or indeed exceptionally good practice). Structural data may help us determine the causes of problems (or shareable lessons about what is working well).

3 Data type

Different types of data can be distinguished according to how they are collected.

There are, of course, pros and cons to each.

Data derived from individual level data sets

The point of these data is that they are collected in the process of giving care, as part of that process. They are therefore timely and clinically relevant when collected, and thus likely to be a more accurate reflection of what happened. As such, the costs of their collection are sunk into the costs of care (although, as this implies the development of Electronic Medical Records, these sunk costs may be huge).


The indicators themselves will be derived either centrally from all organisations’ data or according to a set process, meaning that there is less danger of inconsistent interpretation in collection, and therefore of poor data quality.

There are, however, two weaknesses in this type of data. First, it depends upon the same data being collected in the same way across all organisations. This is theoretically (and only theoretically) achievable in centralised systems such as the NHS in the UK, and arguably harder in decentralised systems (although regulation could in theory be used to insist upon the collection of a central core of data).

Second, and more fundamentally, it can only produce indicators covering those areas that the electronic medical record covers. Thus it is potentially an excellent source of measures of clinical process, but cannot (or probably cannot) cover patient experience and perception, or outcomes beyond mortality and readmission. For these we need other sources of data.
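As a minimal sketch of what deriving an indicator "according to a set process" might look like, the function below applies one fixed 30-day readmission rule to every organisation's individual-level records. The `Episode` record layout and the rule itself (a readmission is a new admission of the same patient to the same organisation within 30 days of discharge) are hypothetical simplifications, not any national definition.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Episode:
    """Hypothetical individual-level record of one hospital stay."""
    org: str
    patient_id: str
    admitted: date
    discharged: date


def readmission_rate_30d(episodes: list[Episode]) -> dict[str, float]:
    """Apply one fixed 30-day readmission rule to every organisation's records.

    Each discharge is a denominator case; it counts as readmitted if the same
    patient is admitted again to the same organisation within 30 days.
    """
    stays = defaultdict(list)
    for ep in episodes:
        stays[(ep.org, ep.patient_id)].append(ep)

    discharges = defaultdict(int)
    readmissions = defaultdict(int)
    for (org, _), patient_stays in stays.items():
        patient_stays.sort(key=lambda e: e.admitted)
        for i, stay in enumerate(patient_stays):
            discharges[org] += 1
            if i + 1 < len(patient_stays):
                gap_days = (patient_stays[i + 1].admitted - stay.discharged).days
                if gap_days <= 30:
                    readmissions[org] += 1
    return {org: readmissions[org] / discharges[org] for org in discharges}


episodes = [
    Episode("Trust A", "p1", date(2010, 1, 1), date(2010, 1, 5)),
    Episode("Trust A", "p1", date(2010, 1, 20), date(2010, 1, 25)),  # back after 15 days
    Episode("Trust A", "p2", date(2010, 2, 1), date(2010, 2, 3)),
    Episode("Trust B", "p3", date(2010, 3, 1), date(2010, 3, 4)),
]
rates = readmission_rate_30d(episodes)
print(rates)
```

Because every organisation's rate comes from the same function, differences cannot arise from local interpretation of the definition; they can still arise from differences in what each organisation records.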

Routinely collected aggregate data

Aggregate data (e.g. total numbers of admissions, contacts and deaths), collected “after the fact” and returned at set intervals to central management, purchasers and others, has been the traditional form of data collection.

As a method of collecting information, this can be seen as an expensive distraction, and it may deliver inconsistent and inaccurate data. However, long familiarity with these collections may mean that systems have been set up to collect the data semi-automatically, which militates against this.

Specially collected aggregate data

Where data are simply unavailable, one approach is to request that organisations collect the information specifically. In many instances this is the only option available, but it carries specific risks around interpretation of what is meant to be collected, leading to inconsistent and low-quality information.

Samples

Samples are needed where we do not or cannot collect information routinely as part of the care giving process and where attempting an aggregate collection post hoc is not possible, but where there are too many individual instances to gain information from all of them. A good example is the use of survey mechanisms to gain a closer insight into the experiences of patients.

Indeed for issues of experience and longer-term outcomes, surveys, and thus samples, are likely to be the only practical way of gaining usable data.
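For surveys, a central design question is how large the sample must be. The sketch below is a minimal illustration, assuming simple random sampling and the standard normal-approximation formula for estimating a proportion, n = z²p(1 − p)/e²; it ignores finite-population correction and non-response, and the paper itself does not specify a survey design.

```python
import math


def sample_size_for_proportion(margin: float, z: float = 1.96, p: float = 0.5) -> int:
    """Simple-random-sample size to estimate a proportion to within +/- margin.

    p = 0.5 is the most conservative assumption (it maximises p * (1 - p)).
    Finite-population correction and non-response are ignored.
    """
    if not 0 < margin < 1:
        raise ValueError("margin must be between 0 and 1")
    return math.ceil(z**2 * p * (1 - p) / margin**2)


# e.g. "% of patients who always felt treated with respect and dignity"
print(sample_size_for_proportion(0.03))  # 1068 completed responses for +/- 3 points
print(sample_size_for_proportion(0.05))  # 385 completed responses for +/- 5 points
```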

4 Data use


The final parameter is how the data is actually used. It may be used to make a clear judgement by itself, used in a framework of different measures to make an overall judgement, used to compare with other organisations without making an explicit judgement, or used to assess the likelihood of overall good or poor performance without making a judgement about it. The final section of Part 1 considers each of these in turn.

Judgement from single indicators

Single measures assess one focussed aspect of care against a defined threshold of acceptable performance. Their essence is to measure in isolation from any other measures, typically with a percentage representing acceptable performance. Single measures have some clear advantages: they are very visibly linked to policy and clearly highlight poor performance, and they are simple for patients and the public to understand. On the negative side, they can be prone to gaming and manipulation, especially where linked to an incentive.
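A single-indicator judgement reduces to comparing one percentage with one threshold. The sketch below is illustrative only: the figures and the 95% threshold are hypothetical assumptions, not a statement of any current target.

```python
def judge_single(numerator: int, denominator: int, threshold_pct: float) -> str:
    """Judge one indicator in isolation against a defined threshold."""
    if denominator <= 0:
        raise ValueError("denominator must be positive")
    achieved_pct = 100 * numerator / denominator
    return "acceptable" if achieved_pct >= threshold_pct else "not acceptable"


# Hypothetical: 9,420 of 10,000 A&E attendances seen within 4 hours,
# judged against an assumed threshold of 95%.
print(judge_single(9_420, 10_000, threshold_pct=95.0))  # not acceptable
```

The single cut-off is what makes the result easy to communicate, and it is also the point at which any incentive attached to the measure concentrates.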

Judgement framework

Judgement frameworks share a common methodology and ideology, but can vary considerably in scale, from small aggregate (composite) measures with a few underlying indicators through to large, complicated systems (for example, a review of the quality of an entire service). A structured system is used to summarise a wealth of data. Using many indicators gives a rounded, holistic view of performance in a service area. Data of different types (as above) can be included, and even qualitative information (once suitably coded and weighted) can be contained in the framework. In short, they are a useful way to summarise complexity. On the negative side, they can be time-consuming and resource-intensive. The more comprehensive frameworks become, the more complex the aggregation models need to be, which can make them opaque to those being judged.
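The simplest form of judgement framework is a weighted composite. The sketch below assumes that each indicator (including a coded qualitative finding) has already been normalised to a 0 to 1 scale where higher is better; the indicator names, weights and rating bands are all hypothetical.

```python
def composite_score(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted mean of indicator scores already normalised to a 0-1 scale."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    total_weight = sum(weights.values())
    return sum(scores[name] * w for name, w in weights.items()) / total_weight


def rating(score: float) -> str:
    """Map a composite score to a hypothetical four-band rating."""
    for cut_off, label in [(0.75, "excellent"), (0.5, "good"), (0.25, "fair")]:
        if score >= cut_off:
            return label
    return "weak"


scores = {
    "infection_rate": 0.8,
    "mortality": 0.6,
    "patient_survey": 0.4,
    "inspection_finding": 0.7,  # qualitative finding, coded onto the same scale
}
weights = {"infection_rate": 2.0, "mortality": 2.0, "patient_survey": 1.0, "inspection_finding": 1.0}

overall = composite_score(scores, weights)
print(f"{overall:.2f} -> {rating(overall)}")  # 0.65 -> good
```

Even in this small example, the result depends on choices (weights, normalisation, band cut-offs) that are invisible in the final rating, which is the opacity problem noted above.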

Benchmarking

Benchmarks do not make judgements of absolute performance. Measuring variation (either between similar organisations or from an accepted level of performance) is at the heart of benchmarking. Their purpose is to inform organisations where service improvements could and should be made. Their particular power lies in allowing organisations to know, and thus explore, the areas of potential weakness and good practice.
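Benchmarking can be as simple as reporting where an organisation sits in the distribution of its peers, without attaching a verdict. A minimal sketch, assuming hypothetical peer values and Python's `statistics.quantiles` with its default ("exclusive") method; other quartile conventions give slightly different cut points.

```python
import statistics


def quartile_position(value: float, peer_values: list[float]) -> int:
    """Return the quartile (1 = lowest, 4 = highest) of the peer distribution a value falls in."""
    q1, q2, q3 = statistics.quantiles(peer_values, n=4)
    if value <= q1:
        return 1
    if value <= q2:
        return 2
    if value <= q3:
        return 3
    return 4


# Hypothetical infection rates per 1,000 bed days in a peer group of similar hospitals.
peers = [2.1, 3.4, 1.8, 4.0, 2.9, 3.1, 2.5, 5.2]
print(quartile_position(3.3, peers))  # 3
```

The function reports position only; whether the third quartile is good or bad (here, higher infection rates are worse) is left for the organisation to explore.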

Risk assessment

Risk assessment is, effectively, a more sophisticated application of the benchmarking idea, in that it looks for variation, but it looks for statistically meaningful outliers rather than at top or bottom deciles or quartiles.

Risk assessments fall into two types:

Time series outliers


These draw on control-chart methods to show when a particular measure is going “out of control”, that is, crossing pre-set boundaries that indicate an unacceptable level of performance. These then trigger some form of management or regulatory intervention (even if only, in the first instance, to understand whether the data represent reality).
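One common control-chart method for rates is the Shewhart p-chart. The sketch below is a minimal version under stated assumptions: the centre line is estimated from an initial baseline period assumed to be "in control", limits are set at three standard errors, and the monthly counts are hypothetical. The paper does not specify which chart is used.

```python
import math


def p_chart_signals(events: list[int], totals: list[int], baseline: int, sigmas: float = 3.0) -> list[int]:
    """Return indices of periods whose proportion falls outside p-chart control limits.

    The centre line p_bar comes from the first `baseline` periods; each period's
    limits are p_bar +/- sigmas * sqrt(p_bar * (1 - p_bar) / n) for its own denominator n.
    """
    p_bar = sum(events[:baseline]) / sum(totals[:baseline])
    signals = []
    for i, (e, n) in enumerate(zip(events, totals)):
        se = math.sqrt(p_bar * (1 - p_bar) / n)
        upper = p_bar + sigmas * se
        lower = max(0.0, p_bar - sigmas * se)
        if not lower <= e / n <= upper:
            signals.append(i)
    return signals


# Hypothetical monthly readmissions out of 400 discharges; the last month jumps.
readmissions = [20, 22, 18, 21, 19, 20, 35]
discharges = [400] * 7
print(p_chart_signals(readmissions, discharges, baseline=6))  # [6]
```

A signal here only says that month 7 is unlikely under the baseline process; as the text notes, the first intervention may simply be to check whether the data are real.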

Multiple outlier patterns

These pull together tangentially related measures which, if all or a majority show statistically significant variation from the average, indicate a risk of overarching poor performance. Again, these measures are used to trigger some form of management or regulatory intervention.
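A multiple-outlier rule can be sketched as counting how many related measures are significantly worse than average. This is a simplification under stated assumptions: each measure is standardised against a hypothetical national mean and between-organisation standard deviation, |z| > 1.96 is taken as "significant", and no case-mix adjustment is made. A real system might use funnel plots or risk-adjusted models instead.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Measure:
    """One of a set of related measures for an organisation (all values hypothetical)."""
    value: float
    national_mean: float
    national_sd: float
    higher_is_worse: bool


def adverse_z(m: Measure) -> float:
    """z-score oriented so that a positive value always means worse than average."""
    z = (m.value - m.national_mean) / m.national_sd
    return z if m.higher_is_worse else -z


def overarching_risk(measures: list[Measure], z_crit: float = 1.96) -> bool:
    """Flag risk when a majority of related measures are significantly worse than average."""
    significant = sum(1 for m in measures if adverse_z(m) > z_crit)
    return significant > len(measures) / 2


measures = [
    Measure(12.0, 8.0, 1.5, higher_is_worse=True),    # e.g. infections per 1,000 bed days
    Measure(3.1, 2.5, 0.25, higher_is_worse=True),    # e.g. medication errors per 100 admissions
    Measure(70.0, 80.0, 6.0, higher_is_worse=False),  # e.g. % always treated with dignity
]
print(overarching_risk(measures))  # True: 2 of 3 measures are significantly worse
```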

Using the framework

The framework allows two things. First we can use it to ensure that the indicators we have are likely to be broad enough in scope, collectable, and of sufficient quality, to allow us to form an accurate view of quality. Second, the classification gives us a check on comparability between systems. The following sets out a tool that would allow this to be done.

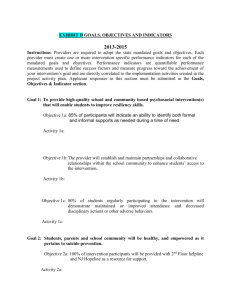

The following shows the template. On the left-hand side, each measure is categorised according to the dimension of quality it considers, whether it covers structure, process or outcome, and its data type. On the right-hand side, the use(s) to which it can be put are recorded.

To illustrate, a range of indicators being considered in England as part of the “Quality Account” regime are categorised in the tool below. Thus the hospital-acquired infection rate is a safety-related outcome measure, collected routinely as aggregate data, which can in theory be put to any of the uses. By contrast, condition-specific mortality rates are measures of effective clinical outcomes, derivable from routinely collected care-related data, which can be used to benchmark and to risk-assess, and could be used within an overarching judgement framework, but not to make a judgement on their own.
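The template is, in effect, a small data structure: three single-valued classifications plus a set of permitted uses. A minimal sketch, assuming our own Python names for the categories, encodes the two indicators just described.

```python
from dataclasses import dataclass
from enum import Enum


class Dimension(Enum):
    SAFETY = "safety"
    EFFECTIVENESS = "effectiveness"
    EXPERIENCE = "patient/service user experience"
    EFFICIENCY = "efficiency"


class Donabedian(Enum):
    STRUCTURE = "structure"
    PROCESS = "process"
    OUTCOME = "outcome"


class DataType(Enum):
    DERIVABLE = "derivable from individual-level data"
    AGGREGATE = "routine aggregate"
    SPECIAL = "special collection"
    SAMPLE = "sample"


class Use(Enum):
    JUDGE_SINGLE = "judgement (single indicator)"
    JUDGE_FRAME = "judgement (framework)"
    BENCHMARK = "benchmarking"
    RISK = "risk assessment"


@dataclass(frozen=True)
class Indicator:
    name: str
    dimension: Dimension
    donabedian: Donabedian
    data_type: DataType
    uses: frozenset[Use]


# Hospital-acquired infection: safety outcome, routine aggregate, any use.
hcai = Indicator("MRSA and C. difficile rates per 1,000 bed days", Dimension.SAFETY,
                 Donabedian.OUTCOME, DataType.AGGREGATE, frozenset(Use))
# Condition-specific mortality: effectiveness outcome, derivable, not a single judgement.
mortality = Indicator("Mortality rates for stroke, AMI and FNOF", Dimension.EFFECTIVENESS,
                      Donabedian.OUTCOME, DataType.DERIVABLE,
                      frozenset({Use.JUDGE_FRAME, Use.BENCHMARK, Use.RISK}))

print(Use.JUDGE_SINGLE in mortality.uses)  # False
```

Keeping uses as a set rather than a single value mirrors the right-hand side of the template, where one indicator may carry several ticks.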

Table: Quality Account indicators categorised using the template. Rows: dimension of quality (safety, clinical effectiveness, patient experience, efficiency), each subdivided by data type (derivable, aggregate, special collection, sample). Columns: Donabedian type (structure, process, outcome) and data use (judge single, judge frame, benchmark, risk). Safety indicators: processes in place that identify events that may lead to avoidable patient harm; mistakes in prescription of drugs and other medications; MRSA and C. difficile rates per 1,000 bed days. Other indicators categorised: mortality rates for stroke, AMI and fractured neck of femur (FNOF); compliance with best-practice care pathways and procedures (e.g. acute myocardial infarction, asthma management); 30-day readmission rates; patient-reported outcome measures; % of A&E patients seen within 4 hours; % of patients who always felt treated with respect and dignity.

Part 2: How to use the tool to categorise information from different systems to encourage comparison

Cross-national comparison has yet to become a key consideration of European healthcare regulators and performance monitoring organisations, let alone a factor in routine data collection. This project does not seek to achieve that; such comparison, should it be deemed desirable, is some years away. What it does propose to do is to examine current data collections and categorise them, so as to allow some proxy of national comparison through the framework above. Because of differences in culture, policy and delivery systems, countries are unlikely to collect data items that are directly comparable, measuring exactly the same thing. What the tool described above will enable is the categorisation of ostensibly different indicators, facilitating the construction of methods for indirect, blunt comparison.

For the pilot project, indicators in the acute setting and the safety domain from England and the Netherlands will be subjected to analysis and categorisation in the above template.

Populating the framework: a pilot study

As described above, to test the tool more thoroughly, a pilot has been conducted across two nations (England and the Netherlands), using a subset of the full framework: the safety dimension in acute hospital settings.

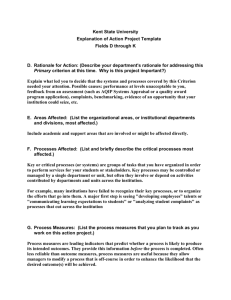

The grid below compares data streams in use in the two countries, highlighting data that are directly or indirectly comparable using a traffic-light system: amber where a loose proxy comparison can be made, and green where the measures are directly comparable.

| What are we measuring? | Where? | Indicator | Source | Data type | Donabedian |
|---|---|---|---|---|---|
| HCAI | Netherlands | POWI: mean % per type of operative procedure | SCAD | Aggregate | Outcome |
| HCAI | England | SSI: mean % rate per operation | HPA | Aggregate | Outcome |
| HCAI | Netherlands | % compliance with POWI bundle | SCAD | Aggregate | Process |
| HCAI | England | Bundle of hygiene code measures | CQC | Aggregate | Process |
| Medication | Netherlands | % patients with medication verification at admission/discharge | SCAD | Aggregate | Process |
| Medication | England | Bundle of medication measures from staff survey | CCQ | Sample | Process |
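Part of the traffic-lighting can be pre-screened from the classification itself. The sketch below is a hypothetical screening rule, not the method used to colour the pilot grid: green requires the same topic, Donabedian type, data type and technical definition; amber requires only the same topic and Donabedian type. The field names and the definition codes are our own illustrative assumptions, and the printed results show what this rule returns, not the pilot's conclusions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Entry:
    """One row of the comparison grid (field names are our own labels)."""
    country: str
    topic: str        # "What are we measuring?", e.g. "HCAI" or "Medication"
    indicator: str
    donabedian: str   # "structure", "process" or "outcome"
    data_type: str    # "derivable", "aggregate", "special collection" or "sample"
    definition: str   # hypothetical code for the technical definition used


def traffic_light(a: Entry, b: Entry) -> Optional[str]:
    """Hypothetical screening rule for cross-national comparability.

    green: same topic, Donabedian type, data type and technical definition
    amber: same topic and Donabedian type, but collected or defined differently
    None:  not comparable on this classification
    """
    if a.topic != b.topic or a.donabedian != b.donabedian:
        return None
    if a.data_type == b.data_type and a.definition == b.definition:
        return "green"
    return "amber"


ned_med = Entry("Netherlands", "Medication",
                "% patients with medication verification at admission/discharge",
                "process", "aggregate", "NL-med-verification")
eng_med = Entry("England", "Medication",
                "Bundle of medication measures from staff survey",
                "process", "sample", "EN-staff-survey")
ned_powi = Entry("Netherlands", "HCAI", "POWI: mean % per type of operative procedure",
                 "outcome", "aggregate", "NL-POWI")
eng_hygiene = Entry("England", "HCAI", "Bundle of hygiene code measures",
                    "process", "aggregate", "EN-hygiene-code")

print(traffic_light(ned_med, eng_med))       # amber
print(traffic_light(ned_powi, eng_hygiene))  # None
```

A matching classification is necessary but not sufficient for a green rating; whether two definitions really measure the same thing still needs the data specialists' judgement.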

It is proposed in the first instance to use these three indicators as a starting point to examine the practicality and robustness of our approach. Data specialists in both countries will be contacted to consider the most appropriate way to aggregate these data. In all cases the indicator described is a composite, and we need to ensure that our approach is robust enough to yield meaningful outcomes. Our approach will be to develop, where possible, a suite of indicators available for comparison.

We will look at an overall national mean value, as well as variance within each nation, any trend that can be derived, and regional variations within each nation.
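The four summaries listed above can be computed per nation from hospital-level values. A minimal sketch using Python's standard `statistics` module, assuming hypothetical (region, year, value) records and taking "trend" to mean the least-squares slope of the yearly national means (our assumption; other trend definitions are possible):

```python
import statistics
from collections import defaultdict

# Hypothetical hospital-level values of one indicator in one nation: (region, year, value).
records = [
    ("North", 2009, 4.0), ("North", 2010, 3.6),
    ("South", 2009, 2.8), ("South", 2010, 2.4),
    ("East", 2009, 3.2), ("East", 2010, 3.0),
]


def summarise(records: list[tuple[str, int, float]]) -> dict:
    """National mean, within-nation variance, yearly trend and regional means."""
    values = [v for _, _, v in records]
    by_year = defaultdict(list)
    by_region = defaultdict(list)
    for region, year, v in records:
        by_year[year].append(v)
        by_region[region].append(v)

    years = sorted(by_year)
    yearly_means = [statistics.mean(by_year[y]) for y in years]
    # Least-squares slope of the yearly means: change in the indicator per year.
    x_bar, y_bar = statistics.mean(years), statistics.mean(yearly_means)
    slope = (sum((x - x_bar) * (y - y_bar) for x, y in zip(years, yearly_means))
             / sum((x - x_bar) ** 2 for x in years))

    return {
        "national_mean": statistics.mean(values),
        "within_nation_variance": statistics.variance(values),  # sample variance
        "trend_per_year": slope,
        "regional_means": {r: statistics.mean(vs) for r, vs in by_region.items()},
    }


summary = summarise(records)
print(summary)
```

Running the same function on each nation's records keeps the summaries comparable, even where the underlying indicators are only loose proxies for one another.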
