Testing of I/O in WRF: NetCDF versus Binary with and without Quilt I/O

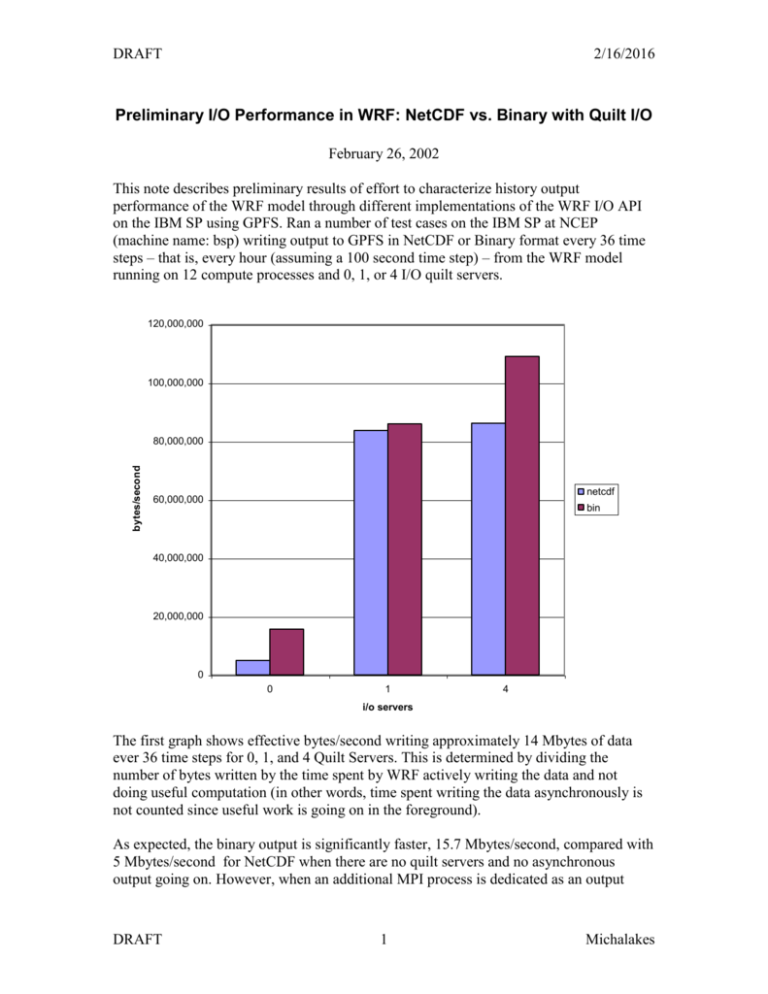

DRAFT 2/16/2016

Preliminary I/O Performance in WRF: NetCDF vs. Binary with Quilt I/O

Michalakes, February 26, 2002

This note describes preliminary results of an effort to characterize the history output performance of the WRF model across different implementations of the WRF I/O API on the IBM SP using GPFS. A number of test cases were run on the IBM SP at NCEP (machine name: bsp), writing output to GPFS in NetCDF or Binary format every 36 time steps (that is, every hour, assuming a 100-second time step) from the WRF model running on 12 compute processes with 0, 1, or 4 I/O quilt servers.

[Figure 1: effective output rate in bytes/second (axis 0 to 120,000,000) versus number of I/O servers (0, 1, 4); series: netcdf, bin.]

The first graph shows the effective bytes/second achieved when writing approximately 14 Mbytes of data every 36 time steps with 0, 1, and 4 Quilt Servers. The rate is determined by dividing the number of bytes written by the time WRF spends actively writing the data and not doing useful computation. In other words, time spent writing the data asynchronously is not counted, since useful work is going on in the foreground.

As expected, binary output is significantly faster when there are no quilt servers and no asynchronous output: 15.7 Mbytes/second, compared with 5 Mbytes/second for NetCDF. However, when one additional MPI process is dedicated as an output Quilt Server, both formats attain over 80 Mbytes/second, because there is ample computational work to hide the time spent writing the data to GPFS in either format.

With 4 Quilt Server processes, binary output is again faster, but the reason is unknown, since the number, pattern, and volume of messages between the compute processes and the Quilt Servers are identical regardless of the format used to write the data to disk from the master Quilt Server.
Perhaps there is additional message traffic internal to GPFS as a result of NetCDF moving the file pointer, and this additional communication contends with the communication among the multiple quilt servers or with the model execution itself. In any case, the difference with 4 Quilt Servers is negligible in practice: the cost of output when using four quilt servers for this 12-processor run is 1/3 of a percent of total run time with NetCDF, compared with 1/4 of a percent with Binary output.

Output performance must be considered in relation to the corresponding computational cost of a scenario. The ratio of output cost to computational cost will vary with problem size, configuration, and number of processors. The volume and frequency of output data are also variable. The relative cost of Binary and NetCDF output must be considered in this context.

[Figure 2: percentage of total run time in output (axis 0% to 80%) versus computational speed in simulated hours per hour (axis 0 to 3000); series: netcdf (0), netcdf (1), netcdf (4), binary (0), binary (1), binary (4).]

The second graph projects output cost as a percentage of run time when computational speed is increased and the volume and frequency of output are held fixed. Note that this is an extrapolation based on the 12-processor timings. The X-axis is the computational speed of the run, that is, the speed in simulated hours per hour excluding I/O cost (the 12-processor data point represents 79 simulated hours per hour). The Y-axis is the percentage of total run time that will be consumed by I/O for a run whose computational speed is X simulated hours per hour.

NetCDF without asynchronous output, “netcdf (0)”, consumes 6 percent of the cost of the run on 12 processors. It will exceed 10 percent of the cost of the run if computational speed is doubled.
On the other hand, binary output, “binary (0)”, consumes only about 2 percent of the cost of the run on 12 processors, and this cost does not exceed 10 percent until computational speed is increased 6-fold.

Using asynchronous output with Quilt Servers, the cost of output with NetCDF, “netcdf (1, 4)”, becomes indistinguishable from the cost of Binary output, “binary (1, 4)”, up through a 20-fold increase in computational speed. At 20-fold computational speed, I/O is only 6 percent of the run time regardless of whether the data is written via NetCDF or Binary. Beyond that point, the amount of computation becomes insufficient to mask the cost of writing the NetCDF output to GPFS on the Quilt Server, and the cost jumps to that of the non-asynchronous NetCDF output, with the result that the code takes twice as long to write the data as to compute it. At this point, however, the model is running at almost 3000 times real time: total time for a 48-hour simulation, I/O and computation together, is only 2 minutes anyway.

In summary:

- Binary is faster than NetCDF for WRF history output on IBM’s GPFS file system.
- The asynchronous Quilt-output mechanism built into WRF is sufficient to remove the penalty for using NetCDF.
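
The projection for the two cases without quilt servers can be approximated from the figures quoted in this note. The sketch below is a reconstruction under stated assumptions, not the calculation actually used to produce the second graph. It assumes one blocking write of about 14 Mbytes per simulated hour at the measured effective rates, takes a megabyte as 10^6 bytes, and scales only the computational speed. The names `io_fraction`, `OUTPUT_BYTES`, and `BASE_SPEED` are illustrative and do not come from WRF. The quilt-server curves are not modeled, since they depend on how much of the write is hidden behind computation.

```python
# Assumed model: each simulated hour costs t_compute seconds of computation
# plus t_io seconds blocked on one history write. Inputs are the approximate
# figures quoted in the note; the model itself is an assumption.
OUTPUT_BYTES = 14e6   # about 14 Mbytes per history write (1 Mbyte taken as 1e6 bytes)
BASE_SPEED = 79.0     # simulated hours per wall-clock hour on 12 processors
RATES = {"netcdf (0)": 5.0e6, "binary (0)": 15.7e6}  # effective bytes/second


def io_fraction(rate_bytes_per_s, speedup=1.0):
    """Fraction of total run time spent blocked on output.

    speedup multiplies the computational speed only; the volume and
    frequency of output are held fixed, as in the second graph.
    """
    t_io = OUTPUT_BYTES / rate_bytes_per_s        # seconds blocked per write
    t_compute = 3600.0 / (BASE_SPEED * speedup)   # wall seconds per simulated hour
    return t_io / (t_io + t_compute)


if __name__ == "__main__":
    for name, rate in RATES.items():
        for speedup in (1, 2, 6):
            pct = 100.0 * io_fraction(rate, speedup)
            print(f"{name} at {speedup}x computational speed: {pct:.1f}% of run time")
```

Under these assumptions the sketch gives roughly 6 percent for NetCDF and 2 percent for Binary at the 12-processor speed, with NetCDF passing 10 percent when the speed is doubled and Binary passing 10 percent between a 5-fold and a 6-fold increase, in line with the figures reported above.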