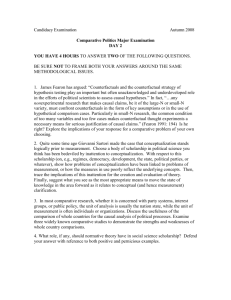

In a series of papers and a forthcoming book manuscript
