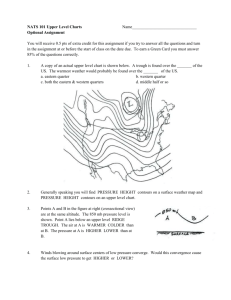

Converging Technologies for Human Progress:

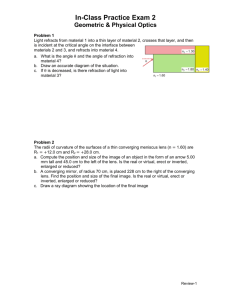
