

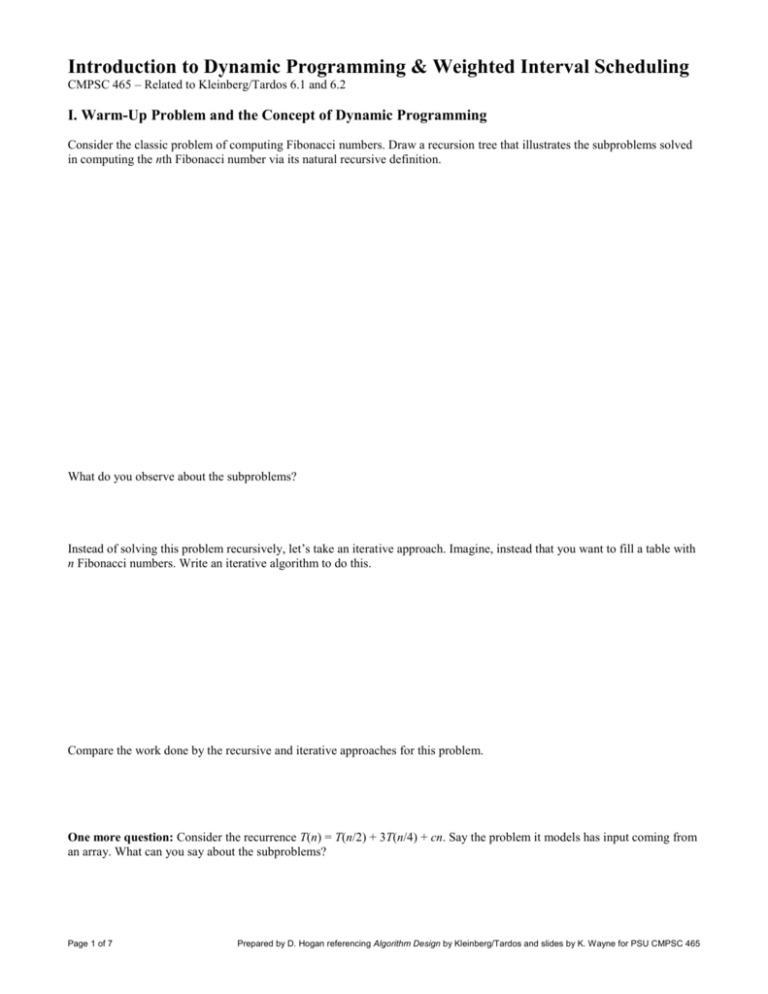

Introduction to Dynamic Programming & Weighted Interval Scheduling

CMPSC 465 – Related to Kleinberg/Tardos 6.1 and 6.2

I. Warm-Up Problem and the Concept of Dynamic Programming

Consider the classic problem of computing Fibonacci numbers. Draw a recursion tree that illustrates the subproblems solved in computing the nth Fibonacci number via its natural recursive definition.

What do you observe about the subproblems?

Instead of solving this problem recursively, let’s take an iterative approach. Imagine instead that you want to fill a table with the first n Fibonacci numbers. Write an iterative algorithm to do this.

Compare the work done by the recursive and iterative approaches for this problem.
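To make the comparison concrete, here is a small Python sketch (Python is our choice; the notes themselves use pseudocode). It assumes the convention F(0) = 0, F(1) = 1, and the function names `fib_recursive` and `fib_table` are illustrative. The recursive version counts its own calls so the two approaches can be compared.

```python
def fib_recursive(n, counter):
    """Naive recursion straight from F(n) = F(n-1) + F(n-2), with F(0) = 0, F(1) = 1.
    counter is a one-element list; counter[0] is incremented once per call."""
    counter[0] += 1
    if n < 2:
        return n
    return fib_recursive(n - 1, counter) + fib_recursive(n - 2, counter)


def fib_table(n):
    """Iterative version: fill the table F[0..n] left to right."""
    F = [0] * (n + 1)
    if n >= 1:
        F[1] = 1
    for i in range(2, n + 1):
        # Each entry needs only the two entries already in the table.
        F[i] = F[i - 1] + F[i - 2]
    return F


if __name__ == "__main__":
    calls = [0]
    print(fib_recursive(20, calls), calls[0])  # 6765 21891
    print(fib_table(20)[20])                   # 6765
```

For n = 20 the recursive version makes 21,891 calls, while the table version does 19 additions. In general the naive call count is 2F(n+1) - 1, so it grows as fast as the Fibonacci numbers themselves.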

One more question: Consider the recurrence T(n) = T(n/2) + 3T(n/4) + cn. Say the problem it models has input coming from an array. What can you say about the subproblems?


Prepared by D. Hogan referencing Algorithm Design by Kleinberg/Tardos and slides by K. Wayne for PSU CMPSC 465

These examples lead nicely to a new algorithmic design strategy called dynamic programming. This one’s easier to define!

We use dynamic programming when a problem breaks down into a number of subproblems that overlap. If we took the divide-and-conquer approach, we would end up solving the same subproblem more than once. So instead we record (or memoize) the results of the subproblems we solve, usually in a table, and use those results to build up solutions to larger subproblems.
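A minimal sketch of memoization in Python, again on Fibonacci and assuming F(0) = 0, F(1) = 1. The dictionary `memo` (an illustrative name) plays the role of the table: each subproblem is computed once and afterward only looked up.

```python
def fib_memo(n, memo=None):
    """Top-down Fibonacci (F(0) = 0, F(1) = 1) that memoizes each F(k) in a dict."""
    if memo is None:
        memo = {}
    if n < 2:
        return n
    if n not in memo:
        # First time we meet this subproblem: solve it once and record the result.
        memo[n] = fib_memo(n - 1, memo) + fib_memo(n - 2, memo)
    # Every later request for F(n) is just a table lookup.
    return memo[n]


if __name__ == "__main__":
    memo = {}
    print(fib_memo(50, memo))  # 12586269025
    print(len(memo))           # 49
```

After computing F(50), `memo` holds exactly 49 entries, one for each subproblem F(2) through F(50), so the work is linear in n rather than exponential.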

Before we go too much further, it’s worth noting that the “programming” here is not programming in the sense of writing code, but rather programming in the sense of planning. The technique was invented by Richard Bellman in the 1950s, and he tried to give it an impressive name: a name that “not even a Congressman could object to.”

II. The Weighted Interval Scheduling Problem and Some Exploration

When we recently studied greedy algorithms, we looked at the Interval Scheduling Problem. We now turn to (yet another) twist on this problem: Weighted Interval Scheduling. The basic gist is that each interval now carries a weight or value (perhaps like a priority), and our goal is to pick a set of mutually compatible intervals that maximizes the total weight.

Let’s note the parameters:

Each job i starts at time s(i) and finishes at time f(i)

Each job i has weight or value v_i

We call two intervals compatible if they do not overlap

Our goal is to find a subset S s.t. ________________ of __________________________ so as to maximize ____________.

We define some notation to help out:

p(j) =

Example: Consider the following instance of Weighted Interval Scheduling and find p(i) for each interval i:
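The definition of p(j) is left blank above for class. As a sketch, we assume the convention from Kleinberg/Tardos: intervals are numbered 1..n in order of finish time, and p(j) is the largest index i < j such that interval i is compatible with j (0 if there is none). The Python below also assumes half-open intervals, so an interval finishing exactly when another starts counts as compatible. The function name `compute_p` and the sample instance are made up for illustration; the instance is not the one from the handout's figure.

```python
from bisect import bisect_right


def compute_p(intervals):
    """intervals: list of (start, finish, value), assumed sorted by finish time.
    Returns a 1-indexed list p where p[j] is the largest i < j with f(i) <= s(j),
    or 0 if none; p[0] is a placeholder so indices match the notes' numbering."""
    finishes = [f for (_, f, _) in intervals]
    p = [0]
    for j, (s, _, _) in enumerate(intervals, start=1):
        # finishes is sorted, so the intervals with f(i) <= s(j) form a prefix 1..k,
        # and k is exactly the index of the last compatible interval.
        k = bisect_right(finishes, s)
        # min() only matters for zero-length intervals, where j could count itself.
        p.append(min(k, j - 1))
    return p


if __name__ == "__main__":
    intervals = [(0, 3, 2), (1, 5, 4), (4, 6, 4), (2, 8, 7), (6, 9, 2), (7, 10, 1)]
    print(compute_p(intervals))  # [0, 0, 0, 1, 0, 3, 3]
```

Binary search over the sorted finish times costs O(log n) per interval, so computing every p(j) takes O(n log n) time, the same as the initial sort.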


Let’s consider an arbitrary instance of the problem and think about an optimal solution O and explore it…

Interval n…

If n ∈ O, …

If n ∉ O, …

We’re looking at both optimal solutions and their values here, so we make the following notational distinction, for j ∈ [1, n]:

O_j is an optimal solution to the problem consisting of requests {1, 2, …, j}

OPT(j) is the value of an optimal solution to the problem consisting of requests {1, 2, …, j}

So then…

The solution we really want is…

OPT(0) =

OPT(j) =

We decide that request j belongs to an optimal solution on the set {1, 2, …, j} iff…

Note that this work does not find an optimal solution; rather, it finds the value of an optimal solution. Still, computing the value of an optimal solution is an achievement in and of itself.


Let’s write down an algorithm to compute OPT(j):

Compute-Opt(j)
    if j = 0
        return 0
    else
        return max(v_j + Compute-Opt(p(j)), Compute-Opt(j-1))
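A direct Python transcription of Compute-Opt, as a sketch. It assumes 1-indexed lists `v` and `p` (index 0 is a placeholder), with intervals sorted by finish time and p(j) following the convention above. The sample data is a hypothetical six-interval instance.

```python
def compute_opt(j, v, p):
    """Plain recursive Compute-Opt(j). v and p are 1-indexed lists (index 0 unused)."""
    if j == 0:
        return 0
    # Either interval j is used (gain v_j, then the best on 1..p(j))
    # or it is not (the best on 1..j-1); keep the larger.
    return max(v[j] + compute_opt(p[j], v, p), compute_opt(j - 1, v, p))


if __name__ == "__main__":
    # Hypothetical instance: six intervals already sorted by finish time.
    v = [0, 2, 4, 4, 7, 2, 1]
    p = [0, 0, 0, 1, 0, 3, 3]
    print(compute_opt(6, v, p))  # 8
```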

Let’s firm up the correctness of this algorithm.

Claim: COMPUTE-OPT(j) correctly computes OPT(j) for all j ∈ [1, n]

Proof:


III. Performance of Algorithm to Compute Optimal Value and Improving Upon It

Problem: Describe the recursion for the following instance of the problem, where all intervals have equal weight:

On this instance, just like Fibonacci, the number of calls to Compute-Opt grows exponentially, so we don’t have a polynomial-time solution.
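To see the blow-up numerically, this sketch counts calls to the plain recursion on a hypothetical family of instances in which every interval has value 1 and p(j) = j - 2 for j ≥ 2 (with p(1) = 0). That is the shape of the bad example in Kleinberg/Tardos 6.1; the code only simulates p and v rather than building actual intervals, and `count_calls` is an illustrative name.

```python
def count_calls(n):
    """Run the plain recursive Compute-Opt on the unit-value instance with
    p(j) = max(j - 2, 0) and return (OPT(n), number of calls made)."""
    calls = 0

    def compute_opt(j):
        nonlocal calls
        calls += 1
        if j == 0:
            return 0
        pj = max(j - 2, 0)
        # Two recursive calls per nonzero j, with nothing remembered between them.
        return max(1 + compute_opt(pj), compute_opt(j - 1))

    value = compute_opt(n)
    return value, calls


if __name__ == "__main__":
    for n in (10, 20, 25):
        print(n, count_calls(n))
```

For n = 10, 20 and 25 this reports 287, 35,421 and 392,835 calls. The count satisfies C(n) = C(n - 1) + C(n - 2) + 1, the same recurrence shape as the Fibonacci recursion.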

We’d really like a polynomial-time solution, and we’re not far from one. Observe that…

The recursive solution solves many subproblems, but several of them are the same

COMPUTE-OPT(n) really only solves ____________ different subproblems

The bad running time comes from …

Instead of recomputing the result of the same subproblem over and over, we will store the result of each subproblem we compute in a table and look it up whenever we need it again. This technique is called memoization. (Be careful: there is no ‘r’ in there; think of it as keeping memos of what we’ve done.)

We’ll suppose there’s a globally accessible array M[0..n] to store results, with every entry empty before we begin. Let’s modify COMPUTE-OPT to add memoization:

MEMOIZED-COMPUTE-OPT(j)
    if j = 0
        return 0
    else if M[j] is not empty
        return M[j]
    else
        M[j] = max(v_j + MEMOIZED-COMPUTE-OPT(p(j)), MEMOIZED-COMPUTE-OPT(j-1))
        return M[j]
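A Python sketch of MEMOIZED-COMPUTE-OPT. The list `M` stands in for the global array M[0..n], with `None` meaning “empty”. The wrapper `memoized_compute_opt` and its call counter are illustrative additions, and `v`, `p` are again assumed to be 1-indexed lists.

```python
def memoized_compute_opt(n, v, p):
    """Memoized Compute-Opt. Returns (OPT(n), table M, number of calls made).
    M[j] is None until OPT(j) has been computed; M[0] is never filled because
    the j = 0 case returns 0 directly."""
    M = [None] * (n + 1)
    calls = 0

    def m_compute_opt(j):
        nonlocal calls
        calls += 1
        if j == 0:
            return 0
        if M[j] is not None:
            return M[j]  # already solved: just look it up
        M[j] = max(v[j] + m_compute_opt(p[j]), m_compute_opt(j - 1))
        return M[j]

    return m_compute_opt(n), M, calls


if __name__ == "__main__":
    n = 25
    v = [0] + [1] * n                                   # unit values
    p = [0] + [max(j - 2, 0) for j in range(1, n + 1)]  # p(j) = j - 2 family
    value, M, calls = memoized_compute_opt(n, v, p)
    print(value, calls)  # 13 51
```

On the p(j) = j - 2 family with n = 25, this makes 51 = 2n + 1 calls, whereas the plain recursion makes 392,835 calls on the same instance: each of the n entries of M is filled once, and each fill makes two recursive calls. Python's default recursion limit (about 1000 frames) still caps how large n can be in this recursive form.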


Let’s analyze the running time of this new algorithm:

We assume…

In a single call to MEMOIZED-COMPUTE-OPT(n), ignoring recursion, the work is…

We look for a good measure of “progress.” The best is the number of elements of M[0..n] that are not empty. So…

Thus, the total running time is ________.

IV. Computing a Solution to Weighted Interval Scheduling

Notice that while we’ve made significant progress, we have only computed the value of an optimal solution, not an optimal solution itself. We’d like to compute that too.

Question: A natural idea would be to maintain an optimal solution for each subproblem, as we did with their values, storing the solution sets in an array S[0..n]. What does this do to the running time?

Instead, we will write an algorithm that takes only a single value j and traces back through M to find the solution:
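One way to write such a traceback, sketched in Python under the same 1-indexed conventions. It relies on the fact (Kleinberg/Tardos 6.1) that request j belongs to an optimal solution on {1, …, j} iff v_j + OPT(p(j)) ≥ OPT(j - 1). The name `find_solution` is illustrative, it is written as a loop rather than recursively, and the filled table M in the demo comes from a hypothetical six-interval instance.

```python
def find_solution(j, M, v, p):
    """Trace back through a filled table M[0..n] (with M[0] = 0) and return the
    sorted indices of one optimal set of intervals among requests 1..j."""
    chosen = []
    while j > 0:
        # Interval j is in some optimal solution on 1..j iff v_j + M[p(j)] >= M[j-1].
        if v[j] + M[p[j]] >= M[j - 1]:
            chosen.append(j)
            j = p[j]  # continue with the intervals compatible with j
        else:
            j = j - 1  # skip interval j
    return sorted(chosen)


if __name__ == "__main__":
    M = [0, 2, 4, 6, 7, 8, 8]
    v = [0, 2, 4, 4, 7, 2, 1]
    p = [0, 0, 0, 1, 0, 3, 3]
    print(find_solution(6, M, v, p))  # [1, 3, 5]
```

The chosen intervals 1, 3 and 5 have values summing to M[6] = 8. Each iteration decreases j by at least one, so the loop runs at most n times.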


We conclude by figuring out the running time:

V. Generalizing Dynamic Programming

A fundamental idea in dynamic programming is…

iterate over subproblems, rather than computing solutions recursively

We can formulate an equivalent, iterative version of the Weighted Interval Scheduling algorithm we just built that captures the essence of the dynamic programming idea:

ITERATIVE-COMPUTE-OPT(n)
    M[0] = 0
    for j = 1 to n
    {
        M[j] = max(v_j + M[p(j)], M[j-1])
    }
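ITERATIVE-COMPUTE-OPT translates almost line for line into Python. This sketch assumes the same 1-indexed `v` and `p` lists (p computed beforehand from intervals sorted by finish time); the name `iterative_compute_opt` is illustrative, and it returns the whole table so it can be traced back afterward.

```python
def iterative_compute_opt(n, v, p):
    """Bottom-up version: v and p are 1-indexed (index 0 unused).
    Returns the table M[0..n], where M[n] = OPT(n)."""
    M = [0] * (n + 1)  # M[0] = 0: the empty set of requests has value 0
    for j in range(1, n + 1):
        # p[j] < j, so M[p[j]] and M[j-1] are already filled when we reach j.
        M[j] = max(v[j] + M[p[j]], M[j - 1])
    return M


if __name__ == "__main__":
    v = [0, 2, 4, 4, 7, 2, 1]
    p = [0, 0, 0, 1, 0, 3, 3]
    print(iterative_compute_opt(6, v, p))  # [0, 2, 4, 6, 7, 8, 8]
```

Because there is no recursion, this version has no recursion-depth limit: on the unit-value p(j) = j - 2 family with n = 100,000 it fills M in one pass and gives M[n] = 50,000.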

The running time of this algorithm is ______.

We will use the remainder of our study of this algorithm design technique to look at case studies of problems that can be solved by dynamic programming. In each case, we’ll iteratively build up the solutions to subproblems, noting that there is an equivalent way to formulate the algorithms using memoized recursion.

To develop an algorithm using dynamic programming, we need a collection of subproblems derived from the original problem that satisfies the following properties:

There is a ____________________________ number of subproblems.

The solution to the original problem can be computed…

There is an ___________________________ of the subproblems ____________________________________

together with…

Homework: TBA
