USING QUESTIONS TO IMPROVE COMPREHENSION AND

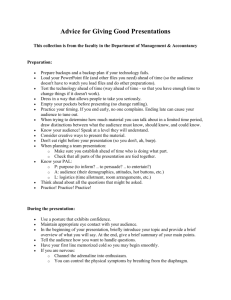
