Speech synthesis

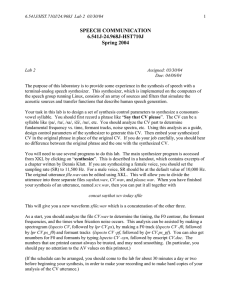


Speech synthesis concerns the generation of speech from some kind of input, e.g. a parametric representation of speech acoustics or speech production, or text. The input to the synthesizer varies with the synthesis type: rule-based synthesis (articulatory and formant synthesis) or data-based synthesis (concatenative speech synthesis). A short overview of speech synthesizers is given in the following subsections.

Articulatory synthesis

Articulatory synthesis simulates speech production. This type of synthesis requires a dynamic model of the vocal tract, which makes it possible to simulate the motions of the articulators during speech production. Another component is a glottis model, which generates the excitation signal (i.e. random noise or a quasi-periodic sequence of pulses). The synthesis also requires a method of generating and monitoring air pressure and velocity. In the source-filter model of speech production, the excitation signal is considered the source. The signal passes through the vocal tract (and nasal tract), which acts as a filter: depending on its shape, resonances (formants) occur at certain frequencies. Their location determines the identity of a sound. The lips act as a further filter, since their rounding also affects the formant frequencies.
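As a minimal illustration of the two kinds of excitation signal mentioned above, the following Python sketch generates a periodic impulse train and white noise. The impulse train stands in for the quasi-periodic glottal pulses of voiced sounds, and the noise stands in for turbulent excitation. The function names, the 16 kHz sampling rate and the use of bare unit impulses instead of a realistic glottal pulse shape are simplifying assumptions. They are not part of any particular articulatory synthesizer.

```python
import random


def pulse_train(f0_hz, duration_s, fs=16000):
    """Periodic excitation for voiced sounds: one unit impulse per glottal period.

    A bare impulse is a simplification (assumption); real glottis models produce
    shaped pulses with small period-to-period variation, hence 'quasi-periodic'.
    """
    n_samples = int(duration_s * fs)
    period = fs / f0_hz  # glottal period in samples (may be fractional)
    signal = [0.0] * n_samples
    t = 0.0
    while t < n_samples:
        signal[int(t)] = 1.0
        t += period
    return signal


def noise_source(duration_s, fs=16000, seed=0):
    """Aperiodic excitation (e.g. for fricatives or aspiration): white Gaussian noise."""
    rng = random.Random(seed)  # seeded so the output is reproducible
    return [rng.gauss(0.0, 1.0) for _ in range(int(duration_s * fs))]
```

In a source-filter synthesizer, either signal, or a mix of both for voiced fricatives, would then be passed through the vocal tract filter.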
The vocal tract is represented as a transfer function (because it transfers the excitation signal) and in terms of parameters, as illustrated and described in Figure 1.

[Figure 1: A model of the vocal tract from Kroeger (1998). Parameters: TTP tongue tip position, TTH tongue tip height, VA velic aperture, GA glottal aperture, LP lip protrusion, LA lip aperture, TH tongue height, TP tongue position.]

During synthesis, the parameters representing the vocal tract are assigned specific values over time to model the motions of the articulators. The glottis is set in a proper position by defining its aperture and the tension of the vocal cords, and lung pressure and air velocity are also defined. It also has to be determined whether or not the nasal tract functions as an additional resonance tube. Articulatory synthesis is very attractive for research on speech production and perception, but the quality of the speech it generates is far from perfect. This is mainly due to the computational and mathematical complexity of the underlying models and to insufficient knowledge of the articulatory processes involved in the production of natural speech. Articulatory synthesis is available in Praat, based on the models developed in Boersma (1998). For more literature and examples of dynamic articulatory synthesis, see http://www.haskins.yale.edu/facilities/INFO/info.html.

Formant synthesis

Formant synthesis is based on the source-filter model and on knowledge of speech acoustics. It consists of two components: a generator of the excitation signal and formant filters that represent the resonances of the vocal tract. In terms of the source-filter model, the former is the source and the latter the filter. Each formant frequency and bandwidth is modelled by means of a two-pole resonator. The number of formants varies from 3 to 6; with six formants, high-quality speech can be obtained.
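The two-pole resonator can be written as a second-order recursive filter. The sketch below uses the difference equation and coefficient formulas given by Klatt (1980): y[n] = A x[n] + B y[n-1] + C y[n-2], with C = -exp(-2π·BW·T), B = 2 exp(-π·BW·T) cos(2π·F·T) and A = 1 - B - C. Here F is the formant frequency, BW its bandwidth and T = 1/fs the sampling period. The sketch chains several resonators in series, which corresponds to a cascade formant structure. The function names and the formant values in the usage example (rough figures for an /a/-like vowel) are illustrative assumptions.

```python
import math


def resonator_coeffs(freq_hz, bw_hz, fs):
    """Coefficients (A, B, C) of a two-pole digital resonator in Klatt's (1980) form."""
    t = 1.0 / fs
    c = -math.exp(-2.0 * math.pi * bw_hz * t)
    b = 2.0 * math.exp(-math.pi * bw_hz * t) * math.cos(2.0 * math.pi * freq_hz * t)
    a = 1.0 - b - c  # normalises the gain at 0 Hz to exactly 1
    return a, b, c


def resonate(x, freq_hz, bw_hz, fs):
    """Filter x with y[n] = A*x[n] + B*y[n-1] + C*y[n-2]."""
    a, b, c = resonator_coeffs(freq_hz, bw_hz, fs)
    y1 = y2 = 0.0  # previous two output samples
    out = []
    for sample in x:
        y = a * sample + b * y1 + c * y2
        out.append(y)
        y2, y1 = y1, y
    return out


def cascade(x, formants, fs):
    """Pass x through one resonator per (frequency, bandwidth) pair, in series."""
    for freq_hz, bw_hz in formants:
        x = resonate(x, freq_hz, bw_hz, fs)
    return x


if __name__ == "__main__":
    fs = 16000
    # 100 Hz impulse train as a crude voiced source (assumption)
    source = [1.0 if n % 160 == 0 else 0.0 for n in range(fs // 2)]
    # illustrative (F, BW) pairs in Hz for an /a/-like vowel
    vowel = cascade(source, [(700, 130), (1220, 70), (2600, 160)], fs)
```

A parallel structure would instead feed the source to each resonator separately and add the amplitude-weighted outputs.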
Information about the radiation characteristics of the mouth, which influence the formant frequencies, is also necessary. Usually from a dozen or so up to a few dozen control parameters are used as input to the waveform synthesizer. Some of the control parameters vary in time, for example:
- formant frequencies, amplitudes and bandwidths;
- the frequency and bandwidth of the nasal pole/zero;
- fundamental frequency;
- the amplitudes of voicing, aspiration, friction and turbulence;
- the spectral tilt of voicing.

Other parameters remain constant, e.g. the sampling rate and the number of formants in the cascade vocal tract (Klatt 1980). Formant synthesizers can have a parallel, cascade or combined parallel-cascade structure. The best results are obtained with the last structure type. An example of a parallel-cascade synthesizer is illustrated in Figure 2.

[Figure 2: Structure of the MITalk system (Allen et al. 1987). Components: voicing source, aspiration source, laryngeal transfer function (cascade), frication source, frication transfer function (parallel), radiation characteristics, output speech.]

Formant synthesis is very useful for research in speech acoustics and phonetics and for the study of speech perception. Speech generated by formant synthesizers has a characteristic metallic sound. However, this kind of synthesis can give satisfying results if the control parameters are hand-tuned, which is impossible in a fully automatic system. Examples of formant synthesizers are KlattTalk, MITalk, DECtalk, Prose-2000, Delta and Infovox.

Concatenative synthesis

Concatenative synthesis concerns the generation of speech from an input text. The speech results from the concatenation of acoustic units stored in a database. The units are annotated for features referring to the linguistic structure and suprasegmental context in which they occur. During synthesis, the database is searched for the units that best match the target utterance.
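The search step can be sketched in a few lines. In this minimal Python example, each stored unit carries a dictionary of annotated features. The best unit for a target is the one whose features differ least from the target specification, with each mismatching feature adding its weight to the cost. The `Unit` class, the feature names and the weights are hypothetical. Real systems use many more features and also take into account how well neighbouring units join.

```python
from dataclasses import dataclass, field


@dataclass
class Unit:
    """A stored acoustic unit with its (hypothetical) linguistic annotation."""
    unit_id: str
    phone: str
    features: dict = field(default_factory=dict)


def target_cost(unit, target, weights):
    """Sum the weights of the annotated features on which unit and target differ."""
    return sum(weight for name, weight in weights.items()
               if unit.features.get(name) != target.get(name))


def best_unit(database, target, weights):
    """Return the unit of the required phone with the lowest target cost."""
    candidates = [u for u in database if u.phone == target["phone"]]
    if not candidates:
        raise LookupError(f"no unit for phone {target['phone']!r}")
    # min() keeps the first of several equally good units
    return min(candidates, key=lambda u: target_cost(u, target, weights))
```

For example, with weights `{"stressed": 2.0, "phrase_final": 1.0}`, a stressed, phrase-final target prefers a unit annotated with both features over one that matches only in stress.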
Features of the target utterance are determined by linguistic and contextual analyses carried out in the NLP (Natural Language Processing) component of a TTS system. In general, NLP includes a text preprocessing module, a morphosyntactic analyser, a phonetizer and a prosody generator (Dutoit & Stylianou 2004). The second component of a TTS system is the Digital Signal Processing (DSP) module. Acoustic units are stored in a parametric and compressed form. Techniques such as LPC (Linear Predictive Coding), TD-PSOLA (Time-Domain Pitch-Synchronous Overlap-Add) or MBROLA (Multi-Band Resynthesis Overlap-Add) are applied for this task. These techniques are also used to concatenate the units, smooth the concatenation points and manipulate prosody. Unlike rule-based speech synthesis systems, this approach does not have to deal with the problems of modelling speech production. However, other problems arise, related to:
- the choice of units (e.g. diphones, triphones, uniform units, i.e. units of the same length, or non-uniform units, i.e. units of varying length);
- the concatenation itself (how to find the units that best match specific segmental and suprasegmental contexts);
- prosody modification (i.e. manipulation of intonation and duration in order to adapt units to the target prosodic structure);
- the compression of acoustic units (Dutoit & Stylianou 2004).

The basic idea behind concatenative speech synthesis is that speech can be generated from a limited inventory of acoustic units and that their concatenation should account for coarticulation. Therefore, systems based on phonemes and words are impractical: they do not account for coarticulation, and as a result the synthetic speech sounds unnatural. Moreover, an inventory consisting of all the words (or syllables) occurring in a given language is too large: it requires too much memory and is computationally too costly.
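The inventory-size argument can be made concrete with a small Python sketch. It decomposes a phone sequence into diphones and computes an upper bound on the number of distinct diphones. Diphones are units spanning from the middle of one phone to the middle of the next, so the phone-to-phone transition is stored inside a unit. The silence symbol `"_"`, the `"a-b"` naming scheme and the phone set size in the example are assumptions for illustration.

```python
def to_diphones(phones, silence="_"):
    """Split a phone sequence into diphones, padding both ends with silence.

    Each diphone runs from the middle of one phone to the middle of the next,
    so it is named after the pair of phones it spans.
    """
    padded = [silence, *phones, silence]
    return [f"{left}-{right}" for left, right in zip(padded, padded[1:])]


def max_diphone_types(n_symbols):
    """Upper bound on distinct diphones: every ordered pair of n symbols."""
    return n_symbols * n_symbols


if __name__ == "__main__":
    print(to_diphones(["h", "e", "l", "o"]))  # ['_-h', 'h-e', 'e-l', 'l-o', 'o-_']
    print(max_diphone_types(41))              # 1681 (e.g. 40 phones plus silence)
```

The bound grows only with the square of the phone set size, and the real inventory is smaller because not every phone pair occurs in a language. A word inventory, by contrast, needs a separate entry for every word.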
The problems of phoneme- and word-based inventories do not arise if diphones or demisyllables are used as acoustic units: on the one hand they account for coarticulation, and on the other their inventories are of manageable size. However, in order to generate natural-sounding synthetic speech, the F0 and duration of the units have to be modified.

Another way to achieve this goal is to use an inventory of non-uniform units and to choose from it the longest units that best match the target utterance specifications. This method is known as unit selection speech synthesis (Hunt & Black 1996). The selection of units is based on two costs: the concatenation cost and the target cost. The former specifies the amount of discontinuity at the concatenation point between two acoustic units, whereas the latter determines to what extent a unit matches the target utterance specifications. This approach makes it possible to decrease the number of concatenation points and to significantly reduce the amount of prosodic modification. Recently, novel solutions for unit selection synthesis have been proposed that aim at improving the quality of the synthetic speech prosody. They use inventories of prosodic units that correspond to syllables (Clark & King 2006) or to phones, feet and phrases (van Santen et al. 2005), and carry out a joint selection of the segmental and prosodic units. The results are encouraging; for details, see the cited papers.
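Selecting units with both costs is commonly formulated as a dynamic-programming (Viterbi) search over a lattice of candidate units, which is how Hunt & Black (1996) describe it. The following minimal Python sketch assumes the caller supplies the candidate lists and two cost functions. The toy costs in the example are assumptions standing in for the weighted sums of sub-costs used in real systems: the mismatch of a unit's mean F0 against a target F0, and the F0 jump at the join.

```python
def select_units(targets, candidates, target_cost, join_cost):
    """Return (units, total_cost) minimising the summed target and join costs.

    targets[i] is the specification for position i and candidates[i] is the
    non-empty list of stored units that could realise it.
    """
    # best[i][j]: lowest total cost of any path that ends in candidates[i][j]
    best = [[target_cost(targets[0], u) for u in candidates[0]]]
    # back[i][j]: index of the predecessor unit on that cheapest path
    back = [[None] * len(candidates[0])]
    for i in range(1, len(targets)):
        row, ptr = [], []
        for u in candidates[i]:
            # cost of reaching u from each candidate at the previous position
            via = [best[i - 1][k] + join_cost(prev, u)
                   for k, prev in enumerate(candidates[i - 1])]
            k_best = min(range(len(via)), key=via.__getitem__)
            row.append(via[k_best] + target_cost(targets[i], u))
            ptr.append(k_best)
        best.append(row)
        back.append(ptr)
    # trace the cheapest path backwards from the last position
    j = min(range(len(best[-1])), key=best[-1].__getitem__)
    total = best[-1][j]
    path = []
    for i in range(len(targets) - 1, -1, -1):
        path.append(candidates[i][j])
        j = back[i][j]
    path.reverse()
    return path, total


# Toy costs (assumption): units are (name, f0_start_hz, f0_end_hz); targets are F0 in Hz.
def f0_target_cost(target_f0, unit):
    return abs((unit[1] + unit[2]) / 2 - target_f0)


def f0_join_cost(prev, unit):
    return abs(prev[2] - unit[1])


if __name__ == "__main__":
    targets = [100, 120]
    candidates = [[("a1", 100, 100), ("a2", 95, 110)],
                  [("b1", 120, 120), ("b2", 110, 125)]]
    units, cost = select_units(targets, candidates, f0_target_cost, f0_join_cost)
    print([u[0] for u in units], cost)  # ['a2', 'b2'] 5.0
```

Picking the best target match at each position independently would choose a1 and b1, for a total cost of 0 + 20 + 0 = 20. The joint search prefers a2 and b2, for a total of 2.5 + 0 + 2.5 = 5.0. It trades slightly worse target matches for a smooth join, which is the reason for combining the two costs.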