Consciousness and Neuromorphic Chips: A Case for Embodiment


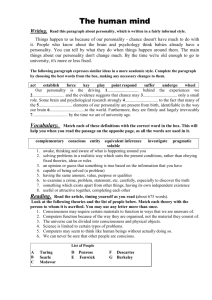

Introduction

The philosopher John Searle is often cited for his Chinese Room argument against strong-AI, in which he argues that a nonbiological medium, such as a computer, cannot be conscious simply by virtue of running a program. Searle’s argument rests on the belief that specific biological mechanisms within human brains cause conscious perception (Searle, 1984). Proponents of whole brain emulation (WBE) and “mind uploading,” on the other hand, do not regard nonbiological reproductions of human brain structure and function as a barrier to consciousness. In this paper we propose that embodiment is a necessary condition for any emulated, nonbiological brain to be conscious. Such an argument is less hostile to strong-AI than Searle’s critique, yet it holds to the ontological view that consciousness requires a minimal level of biological, brain-like structure in the computer’s hardware and software. We first address the neurobiological aspects of embodied human consciousness from both a functional and a structural standpoint, and then present the counterexample: the claim that a simulated, biophysically accurate software reconstruction of the human brain is sufficient for “virtual” consciousness. Lastly, we discuss the limitations of current von Neumann-based computers and propose neuromorphic chip technology as an alternative route to embodied, human brain-like consciousness.

The importance of embodiment

Unless you are a floating head from the television show Futurama, chances are that you possess both a brain and a body. For obvious reasons, the one requires the other. Our brain wires and rewires itself based not solely on our genetic makeup but also on our interactions with the external world.
This notion of embodiment is well accepted in biology and is also popular among robotics researchers such as Rodney Brooks, who holds that a robot can learn and update its behavioral state only through actuator and sensor feedback (Brooks, 2003). Why, then, is embodiment important for replicating human consciousness in a nonbiological system? What capacity that we have inside our own heads is missing from our current computers?

Hypothetically speaking, let us imagine a supercomputer in 2010 running a software program that has generated an accurate replica of Daniel Dennett’s brain. On this very day, the research team responsible for collecting and organizing this daunting amount of data decides to “turn on” the simulation in front of the entire world. Would the virtual, multiply realizable Dennett Version 1.0 be capable of speaking with us (given that we had supplied the proper output devices) or of giving us a qualitative report of what it is like to reside in a new physical form? We would reply “no.” Dennett Version 1.0 may meet the requirements for weak-AI, in that he shows signs of intelligence, yet, as Searle contends, he cannot describe the qualia of his new environment. In other words, Searle argues that the biology of information transfer is just as important as the information itself.

Hardware requirements aside, how can we even know that other humans are conscious? Giulio Tononi has written extensively on methods for quantifying conscious experience, relying on 1) observable reports and 2) oral reports from subjects (Tononi and Koch, 2008). Were these the only requirements for consciousness in a computer, it would probably be sufficient to say that our current computers can indeed be conscious. Unfortunately, a third prerequisite is needed: observed and measurable brain activity. Isolated regions of activity, synchrony, oscillations, and trace networks of activity are all measured from outside the agent.
We are not privy to the synchrony and oscillatory behavior of our own mental states, yet we are now able to observe these states using numerous recording methods. This raises an obvious question: if a researcher were able to probe the inner workings of a computer, would she see, for example, the oscillations thought to be necessary for conscious perception?

To distinguish between conscious and unconscious states in the human brain, we must analyze experimental work that characterizes brain activity in various states of consciousness. These states range from attentive waking behavior, often referred to as “conscious” behavior in the neuroscience literature, to sleep and pathological states such as schizophrenia, autism, and coma. Experimental studies have begun to unveil the electrophysiological correlates of brain states associated with consciousness, and with its absence. Electroencephalogram (EEG) and neuronal recordings from the cortex and the thalamus (a subcortical nuclear complex that relays sensory information to the cortex and reciprocally links cortical areas) have shown that slow, large-amplitude, and regular neural oscillations are the signatures of anesthesia and dreamless sleep. The waking state, as well as the dream state, is usually labeled as conscious and is associated with fast, small-amplitude, and irregular oscillations (Steriade et al., 1993a,b). Furthermore, the classes of brain oscillations associated with specific behaviors tend to be the same across different mammals (Destexhe and Sejnowski, 2003). These oscillations are what a measuring tool such as EEG can tell us about the interactions of billions of neurons in a large-scale network of excitatory and inhibitory cells during a specific brain state. Ultimately, these interactions determine what is processed, giving rise to the resulting qualia, introspectively associated with a conscious or unconscious state.
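A toy calculation can make the link between single-cell activity and the EEG signature concrete. The sketch below is not a model of cortex: it assumes, purely for illustration, that each of N model cells contributes one unit-amplitude sinusoid to a summed, EEG-like field, and that “sleep-like” and “waking-like” regimes differ only in whether those sinusoids are slow and in phase (1 Hz, phase 0) or fast and scattered (random frequencies between 20 and 40 Hz, random phases). The names `population_signal`, `rms`, and `dominant_frequency`, and all numeric parameters, are hypothetical choices made for this sketch.

```python
import math
import random

FS = 200          # sampling rate in Hz (toy value)
DURATION = 2.0    # seconds of simulated signal
N_CELLS = 200     # number of model cells contributing to the field


def population_signal(freqs, phases, fs=FS, duration=DURATION):
    """Sum one unit-amplitude sinusoid per cell: a crude stand-in for an EEG-like field."""
    n_samples = int(fs * duration)
    return [
        sum(math.sin(2 * math.pi * f * (i / fs) + p) for f, p in zip(freqs, phases))
        for i in range(n_samples)
    ]


def rms(signal):
    """Root-mean-square amplitude of the signal."""
    return math.sqrt(sum(x * x for x in signal) / len(signal))


def dominant_frequency(signal, fs=FS):
    """Frequency (Hz) of the largest non-DC bin of a naive discrete Fourier transform."""
    n = len(signal)
    best_k, best_power = 1, -1.0
    for k in range(1, n // 2):
        re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(signal))
        im = -sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(signal))
        power = re * re + im * im
        if power > best_power:
            best_k, best_power = k, power
    return best_k * fs / n


rng = random.Random(0)
# Synchronized regime: every cell oscillates at 1 Hz, all in phase.
sync = population_signal([1.0] * N_CELLS, [0.0] * N_CELLS)
# Desynchronized regime: each cell has its own 20-40 Hz frequency and a random phase.
desync = population_signal(
    [rng.uniform(20.0, 40.0) for _ in range(N_CELLS)],
    [rng.uniform(0.0, 2 * math.pi) for _ in range(N_CELLS)],
)

for label, sig in (("synchronized", sync), ("desynchronized", desync)):
    print(f"{label}: RMS={rms(sig):.1f}, dominant={dominant_frequency(sig):.1f} Hz")
```

Under these assumptions the in-phase population sums coherently, so its RMS amplitude grows in proportion to N (about 141 for 200 cells) and its spectrum peaks at 1 Hz, whereas the scattered population largely cancels, giving an RMS amplitude on the order of the square root of N and a peak somewhere in the 20 to 40 Hz band. The naive DFT is used only to keep the example dependency-free; a real analysis would use an FFT library and proper spectral estimation.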
What gives conscious states, including dreams, their peculiar status in our personal experience, yet makes dreamless sleep and anesthesia a “psychological vacuum” devoid of the richness of conscious experience? Steve Esser and colleagues have studied the neural basis of consciousness during sleep using transcranial magnetic stimulation (TMS) and large-scale modeling, drawing on information theory to show that information integration breaks down during sleep (Esser et al., 2009). If this account is correct, the breakdown of information integration during sleep should coincide with regularity in neural oscillations. One might be tempted to claim that fast oscillations, often labeled beta and gamma frequencies, serve to “produce” conscious states (Uhlhaas et al., 2009). This argument, however, simply shifts the problem: why does a brain expressing fast oscillations produce conscious experience while a brain expressing slow oscillations does not? What makes fast oscillations special?

In a fast oscillatory regime, for instance when reading an article or listening to a talk, neurons tend to discharge, or spike, at a high rate, which has been shown to promote the propagation of information across the cortical hierarchy and to promote synaptic plasticity, or learning. In these states, neurons in various brain areas exhibit highly non-uniform patterns of activation, which eventually give rise to the typical beta and gamma oscillatory activity recorded, for instance, with EEG. These physically embedded patterns of activation are the qualia, or the perceptual correlate, of our conscious experience.

What, then, turns subconscious (or unconscious) states into conscious ones? Networks of biological neurons do not cease to discharge during sleep or in a coma. On the contrary, oscillations caused by neuronal spikes are often more pronounced, but they tend to be slower and more synchronized.
In other words, during subconscious or unconscious states many neurons tend to spike together, which eventually produces powerful, slow, and synchronized oscillations. Slow oscillations have also been associated with weaker learning in both brain recordings and computational modeling work (Wespatat et al., 2004; Grossberg and Versace, 2008). At the microscopic level, large-scale synchronized oscillations mean that neurons tend to process, and broadcast, uniform information over time. There is no pattern; or, more precisely, the pattern is uniform. What changes between these two states is the content of what is processed. During sleep, for instance, neurons tend to discharge in sync: all neurons communicate “the same message” to the next information-processing stage.

Uniform messages have a peculiar effect on the nervous system, as a simple but powerful experiment demonstrates. In the 1930s, psychologist Wolfgang Metzger described the ganzfeld experiment (from the German for “entire field”) as part of his investigation into gestalt theory. In a typical visual ganzfeld experiment, the subject is presented with a uniform visual field by wearing translucent, monochromatic, and completely homogeneous goggles (often simply halved ping-pong balls), and experiences a “loss of vision,” often accompanied by altered states of consciousness. Why is vision “lost”? The eyes are open and light impinges on the photoreceptors, causing neural discharges in the retina that propagate to the cerebral cortex and higher association areas. Nevertheless, a brain function is “lost,” at least by the introspective accounts of subjects (Metzger, 1930). The ganzfeld experiment is a simple, easily reproducible protocol showing that an altered brain state, even the loss of a function as important as vision, can be caused by uniform stimulation.
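The claim that synchronized firing broadcasts “uniform information” can be illustrated with plain Shannon entropy. The sketch below is a simplified illustration, not Tononi’s integrated-information measure and not the analysis of Esser et al. (2009): it assumes binary model cells, compares a population in which every cell copies one shared coin flip per time step with a population of independent cells, and holds the average firing probability at 0.5 in both cases. All function names and parameters are hypothetical.

```python
import math
import random
from collections import Counter


def pattern_entropy(patterns):
    """Plug-in Shannon entropy (bits) of the observed population patterns."""
    counts = Counter(patterns)
    total = len(patterns)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def synchronized_patterns(n_cells, n_steps, p_fire, rng):
    """All cells copy one shared coin flip per step: everyone fires or no one does."""
    patterns = []
    for _ in range(n_steps):
        bit = 1 if rng.random() < p_fire else 0
        patterns.append((bit,) * n_cells)
    return patterns


def independent_patterns(n_cells, n_steps, p_fire, rng):
    """Each cell fires independently with probability p_fire at every step."""
    return [
        tuple(1 if rng.random() < p_fire else 0 for _ in range(n_cells))
        for _ in range(n_steps)
    ]


def mean_rate(patterns):
    """Average firing probability per cell per step."""
    return sum(map(sum, patterns)) / (len(patterns) * len(patterns[0]))


rng = random.Random(1)
N_CELLS, N_STEPS, P_FIRE = 8, 20_000, 0.5
sync = synchronized_patterns(N_CELLS, N_STEPS, P_FIRE, rng)
indep = independent_patterns(N_CELLS, N_STEPS, P_FIRE, rng)

for label, pats in (("synchronized", sync), ("independent", indep)):
    print(f"{label}: mean rate {mean_rate(pats):.2f}, "
          f"entropy {pattern_entropy(pats):.2f} bits/step")
```

With 8 cells, the synchronized population can only ever show two patterns (all silent or all firing), so its pattern entropy cannot exceed 1 bit per time step, while the independent population approaches the 8-bit maximum, even though the average firing rate is about the same in both.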
What determines the subjective label of conscious or unconscious experience is thus the content of neural processing: what has been taken for a lack of consciousness may instead be a state in which uniform information is processed. Figure 1 illustrates how conscious and unconscious states might be generated by the activity of an underlying neural network, linking single-neuron activation to EEG recordings.

Figure 1. Left: conscious states might be generated by the non-uniform activity of large-scale neural networks. The activation of each cell, depicted as a round gray circle, is proportional to its gray level (top). The activation in time is also non-uniform (middle), producing the characteristic irregular shape of a “conscious state” EEG. Right: during states commonly labeled “unconscious,” cells express uniform patterns of activation (top), which over time (middle) result in the typical slow, large-scale, synchronized oscillations observed in sleep, anesthesia, and coma (Steriade et al., 1993a,b).

From this brief overview of oscillatory networks, it becomes evident that the physical transmission of electrical and chemical activity from neuron to neuron within large networks is required for various conscious or unconscious states to emerge. Such states can be analyzed by way of EEG or other techniques of the kind required by our third stipulation for consciousness: observable and measurable “brain” activity. Thus, we can state that oscillations within the brain are necessary but not sufficient for conscious experience; they are the signature of the underlying physical interactions in biological brains. Such a statement would preclude the large-scale simulation work currently being explored by proponents of whole brain emulation, which we now explain in more depth.

Counterexample: whole brain emulation

Whole brain emulation (WBE) posits that consciousness can in fact arise from computer simulations or software replications of the brain.
A technical report [1] by Anders Sandberg and Nick Bostrom at the Future of Humanity Institute, Oxford University, presented a 130-page roadmap for WBE. This detailed account of one-to-one modeling of the human brain lays out the economic, scientific, technological, and ethical concerns that WBE will assuredly raise as computational neuroscience research progresses. Even though WBE aims to achieve consciousness through brain uploading, it is unclear whether this effort will be able to pass our third test for embodiment: there must be observable and measurable activity within the physical medium. In fact, given the current state of the computer industry, it is almost certain that WBE would not pass this test. Traditional von Neumann architectures would not be able to support brain activities like those described in the previous section, including, but not limited to, oscillations, localized regions of activation, networks of distributed activity, and self-organization of activity.

[1] URL: www.fhi.ox.ac.uk/reports/2008-3.pdf

Despite these limitations, several researchers have drawn up plans for simulating and emulating the human brain within the confines of a computer program. One recent example comes from IBM’s Almaden Research Center, which simulated a large-scale network with numbers of neurons and synapses on the order of those found in a cat’s cortex: 10^9 neurons and 10^13 synapses (Ananthanarayanan et al., 2009). This feat garnered a lot of attention for its computational methods and visually stunning results. However, many critics pointed out that it was little more than a pretty show, with little to do with neural physiology and even less with cognitive functioning [2]. Thus, if either the WBE proposal or the IBM project is to have any future success, it should focus more on relating high-level functional theory to low-level neural activity.
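The scale quoted in the title of the IBM paper (10^9 neurons and 10^13 synapses; Ananthanarayanan et al., 2009) also shows how far such a network is from being fully connected. The arithmetic below simply works through those two published figures; counting ordered pairs of distinct neurons as the “fully connected” baseline is our own assumption.

```python
# Figures taken from the title of Ananthanarayanan et al. (2009).
NEURONS = 10**9
SYNAPSES = 10**13

# Average number of synapses per neuron.
mean_synapses_per_neuron = SYNAPSES // NEURONS

# Hypothetical all-to-all network: every ordered pair of distinct neurons connected.
all_to_all_connections = NEURONS * (NEURONS - 1)

# Fraction of the possible connections that are actually present.
connection_density = SYNAPSES / all_to_all_connections

print(f"mean synapses per neuron: {mean_synapses_per_neuron}")
print(f"all-to-all connections:   {all_to_all_connections:.3e}")
print(f"connection density:       {connection_density:.1e}")
```

That is roughly 10^4 synapses per neuron and a connection density of about one in 100,000, which is why the network is better described as large-scale and sparse than as fully connected.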
Even if the simulation performed by IBM faithfully adhered to the biology (i.e., neural structure, connectivity, etc.), there would still be no established causal link to consciousness, nor to any other form of coherence in the neural processing output. WBE and other mind-uploading proposals assume that a computer will be able to faithfully run a model of our own brain once the “mind upload” is complete. Our simulated mind, running on a sophisticated computer of the future, should, to all intents and purposes, be a working version of our conscious selves, only virtual. The question is whether such a simulation could indeed be our conscious doppelganger. We suspect that the software/hardware distinction must collapse into a sort of embodied hardware for a computer to be capable of conscious perception and cognitive action. Furthermore, the embodied hardware must pass our third criterion for consciousness: its activity must be observable and measurable.

Embodiment in a neuromorphic chip

From a philosophical standpoint, a program running on a computer sends instructions to the CPU as numeric computations, a sort of metaphysical representation of an ontological representation of the mental state. The actual electrical processing of that numeric code is separate from what the human brain processes. In other words, a traditional computer’s physical state changes consist of alterations in electrical activity on a chip for memory storage and retrieval; this electrical activity does not correlate well with the activity seen during an active brain state, but rather with the current step in the instruction sequence that interprets that brain state. For the computer to be conscious, hardware and software should be one and the same, eliminating the distinction between numeric interpretation and the physical, electrical response of the CPU. An embodied neuromorphic chip would therefore differ from a von Neumann-based chip in that it would generate observable and measurable activity.
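The point that a von Neumann machine’s physical activity tracks the instruction sequence rather than the simulated brain state can be illustrated with a toy simulation. The sketch below assumes an uncoupled population of leaky integrate-and-fire cells, integrated with a simple Euler step and updated one cell at a time in index order, and it uses the sequence of memory slots the loop touches as a crude, hypothetical proxy for the processor’s physical activity. Names such as `simulate_lif` and all parameter values are illustrative only.

```python
import random


def simulate_lif(inputs, n_steps=50, dt=1.0, tau=10.0, v_thresh=1.0, v_reset=0.0):
    """Toy, uncoupled leaky integrate-and-fire population updated the von Neumann way.

    Every time step, the loop visits each cell's memory slot in index order and applies
    one Euler step of dv/dt = -v / tau + I. Returns (spike counts per cell, access trace),
    where the access trace lists which slot was touched at each update: our crude,
    hypothetical proxy for the processor's physical activity.
    """
    n = len(inputs)
    v = [0.0] * n
    spikes = [0] * n
    access_trace = []
    for _ in range(n_steps):
        for i in range(n):  # sequential sweep, independent of network activity
            access_trace.append(i)
            v[i] += dt * (-v[i] / tau + inputs[i])
            if v[i] >= v_thresh:  # threshold crossing: count a spike and reset
                spikes[i] += 1
                v[i] = v_reset
    return spikes, access_trace


rng = random.Random(2)
N_CELLS = 16
quiet_spikes, quiet_trace = simulate_lif([0.0] * N_CELLS)
driven_spikes, driven_trace = simulate_lif([rng.uniform(0.15, 0.3) for _ in range(N_CELLS)])

print("total spikes (quiet, driven):", sum(quiet_spikes), sum(driven_spikes))
print("same memory-access order:", quiet_trace == driven_trace)
```

Under this proxy, a silent network and a firing network produce exactly the same access sequence. A real processor’s power draw does vary somewhat with data (the spike branch, caches, and so on), so the sketch captures only the coarse point made in the text, not a measurement of actual hardware.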
An embodied neuromorphic chip would be built to adhere to neurobiological principles of neural structure, organization, and connectivity. A von Neumann chip was not designed to adhere to these biological principles but rather to achieve computational efficiency. Thus, the electrical activity of the actual neuromorphic chip should show some correlation with activity in the human brain. It should be able to self-organize into localized regions of activation and show coherent, meaningful oscillations.

[2] URL: http://spectrum.ieee.org/tech-talk/semiconductors/devices/blue-brain-project-leader-angry-about-cat-brain

Is a speculative neuromorphic chip such as the one described above sufficiently embodied to be conscious? We believe so. However, were we to completely transfer the biological Dennett to his neuromorphic twin, Dennett Version 1.0, the new iteration of Professor Dennett would quickly develop his own identity, with a very particular sort of conscious perception that would most probably differ greatly from “ordinary” human consciousness. The millisecond that a researcher uploads Dennett’s mind to the neural chip, his experience will become very different because of the change in physical substrate. Human minds are wired by their embodied experience, so whatever device an individual’s mind has been uploaded to will determine what the contents of this new mind will be. This is similar to Thomas Nagel’s argument in “What Is It Like To Be a Bat?” (Nagel, 1974). Such a chip is not purely hypothetical. In fact, the Defense Advanced Research Projects Agency (DARPA) of the United States government is currently funding a project aimed at creating such chips.
This project, Systems of Neuromorphic Adaptive Plastic Scalable Electronics (SyNAPSE) [3], aims “to develop electronic neuromorphic machine technology that scales to biological levels.” In other words, the program’s goal is to develop nanoscale devices that function like the synapse between two neurons, in the way electrical signals are passed from one to the other. Not only should these chips adhere to neurobiological architectural and functional constraints, they will also be required to perform useful behaviors, including learning and navigation in virtual environments. It seems, then, that the hypothetical embodied neuromorphic chip we have proposed is still a thing of the future, but its development may be completed sooner than one might think.

[3] URL: http://www.darpa.mil/dso/thrusts/bio/biologically/synapse/index.htm

Conclusion

Although Searle has argued that biological embodiment is necessary to cause consciousness, this paper puts forth the argument that biological embodiment is not the only embodiment that can produce consciousness. Instead, we argue that the brain is an optimal form of embodiment for giving rise to consciousness because it can produce observable reports, oral reports, and observed and measured activity. The first two qualifications for consciousness can be replicated with computer simulations, as discussed by the proponents of WBE. However, the third qualification requires a particular kind of embodiment, one that is able to self-organize and generate distinct global and local patterns of activity within its constituent elements. At present, this is achievable only within brain tissue. However, with the advancement of neural chip development, we would argue that the embodiment necessary for consciousness will become achievable in a new medium: the neuromorphic chip.

References

Ananthanarayanan, R., Esser, S.K., Simon, H.D., and Modha, D.S. (2009). The Cat is Out of The Bag: Cortical Simulations with 10^9 neurons and 10^13 synapses. Supercomputing 09: Proceedings of the ACM/IEEE SC2009 Conference on High Performance Networking and Computing, Nov 14-20, 2009, Portland, OR.
Brooks, R. (2003). Flesh and Machines: How Robots Will Change Us. London: Vintage.
Destexhe, A., and Sejnowski, T. (2003). Interactions between membrane conductances underlying thalamocortical slow-wave oscillations. Physiological Reviews, 83, 1401-1453.
Esser, S., Hill, S., and Tononi, G. (2009). Breakdown of effective connectivity during slow wave sleep: investigating the mechanism underlying a cortical gate using large-scale modeling. Journal of Neurophysiology, 102(4), 2096-2111.
Grossberg, S., and Versace, M. (2008). Spikes, synchrony, and attentive learning by laminar thalamocortical circuits. Brain Research, 1218, 278-312. [Authors listed alphabetically.]
Metzger, W. (1930). Optische Untersuchungen am Ganzfeld. II. Zur Phänomenologie des homogenen Ganzfelds. Psychologische Forschung, 13, 6-29.
Nagel, T. (1974). What Is It Like To Be a Bat? The Philosophical Review, 83(4), 435-450.
Searle, J. (1984). Minds, Brains and Science: The 1984 Reith Lectures. Harvard University Press.
Steriade, M., Nuñez, A., and Amzica, F. (1993a). A novel slow (<1 Hz) oscillation of neocortical neurons in vivo: depolarizing and hyperpolarizing components. Journal of Neuroscience, 13, 3252-3265.
Steriade, M., Nuñez, A., and Amzica, F. (1993b). Intracellular analysis of relations between the slow (<1 Hz) neocortical oscillations and other sleep rhythms of electroencephalogram. Journal of Neuroscience, 13, 3266-3283.
Tononi, G., and Koch, C. (2008). The neural correlates of consciousness: an update. Annals of the New York Academy of Sciences, 1124, 239-261.
Uhlhaas, P.J., Pipa, G., Lima, B., Melloni, L., Neuenschwander, S., Nikolić, D., and Singer, W. (2009). Neural Synchrony in Cortical Networks: History, Concept and Current Status. Frontiers in Integrative Neuroscience, 3, Article 17.
Wespatat, V., Tennigkeit, F., and Singer, W. (2004). Phase sensitivity of synaptic modifications in oscillating cells of rat visual cortex. Journal of Neuroscience, 24, 9067-9075.