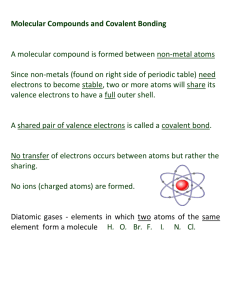

A molecular dynamics primer - Computational physics resource
