Statistical Consulting Firm


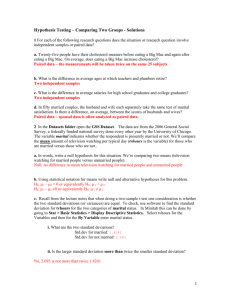

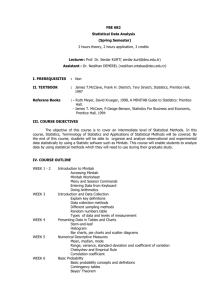

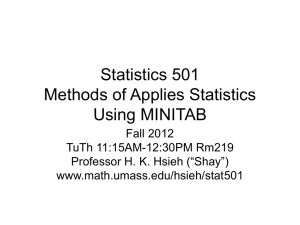

Customer’s Question: “How well does a student’s GPA at the end of the three-semester period predict whether the student remained a CS, engineering, or other science-related major?”

Firm’s Response: We explain, step by step, how we answered the customer’s question, and we interpret the statistical output that corresponds to the model.

The question poses an “either-or” scenario: either a given student remained within the warm embrace of science, or he/she decided to major in a field outside engineering or science. We can therefore model this problem with a Binary Logistic Regression, but we must first re-code the original categorical variable for major. The data given to us placed each student in one of three categories: major one indicates a student who remained a CS major at the end of the three-semester period, major two indicates a student who changed to a major in engineering or some other science, and major three indicates a student who changed to a major outside engineering or science. The students were classified as a 1, 2, or 3 according to their respective major.

We re-coded the data and classified any student that was a 1 or a 2 as a “success” (=1) and any student that was a 3 as a “failure” (=0). We named the re-coded column “remains science major” in preparation for running the Binary Logistic Regression in Minitab. We then ran the regression with “remains science major” as the response and “gpa” as the only predictor in the model.
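The re-coding step described above can be sketched in a few lines of Python. This is only an illustration of the rule (1 or 2 becomes a success, 3 becomes a failure); the function name and the sample list of major codes are hypothetical and are not taken from the customer's data set.

```python
def recode_remains_science(major: int) -> int:
    """Map the original major code (1, 2, or 3) to the binary response.

    1 = remained a CS major, 2 = moved to engineering or another science,
    3 = moved outside engineering and science.
    """
    if major not in (1, 2, 3):
        raise ValueError(f"unexpected major code: {major}")
    # Codes 1 and 2 count as "success" (=1); code 3 counts as "failure" (=0).
    return 1 if major in (1, 2) else 0


# Hypothetical major codes, used only to show the mapping.
majors = [1, 3, 2, 3, 1, 2]
remains_science = [recode_remains_science(m) for m in majors]
print(remains_science)
```

The same function with `major == 1` as the success condition would produce a response that counts only students who stayed in CS.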
The first part of the Minitab output gives us some basic statistics about the number of successes (=1) and the number of failures (=0):

Response Information

| Variable | Value | Count |
|---|---|---|
| remains science major | 1 | 156 (Event) |
| | 0 | 78 |
| | Total | 234 |

The next part of the Minitab output gives us the p-value for the omnibus test that all the coefficients are equal to zero (with the G statistic), and the p-values for the tests that the individual coefficients are equal to zero. We reject the omnibus null hypothesis as well as the null hypotheses that the individual coefficients are zero, so we conclude that the regression coefficients are not equal to zero:

Logistic Regression Table

| Predictor | Coef | SE Coef | Z | P | Odds Ratio | 95% CI Lower | 95% CI Upper |
|---|---|---|---|---|---|---|---|
| Constant | -3.12405 | 0.652317 | -4.79 | 0.000 | | | |
| gpa | 1.43270 | 0.241520 | 5.93 | 0.000 | 4.19 | 2.61 | 6.73 |

Log-Likelihood = -125.493

Test that all slopes are zero: G = 46.902, DF = 1, P-Value = 0.000

We can also determine how well this particular model fits the data by inspecting the goodness-of-fit tests in Minitab’s output. The Hosmer-Lemeshow test (P = 0.885) does not allow us to reject the null hypothesis, so by that test there is insufficient evidence to claim that the model does not fit the data adequately. The Pearson (P = 0.012) and Deviance (P = 0.001) tests, however, do reject the null hypothesis at the 0.05 level, so the evidence of fit is mixed:

Goodness-of-Fit Tests

| Method | Chi-Square | DF | P |
|---|---|---|---|
| Pearson | 164.532 | 126 | 0.012 |
| Deviance | 184.252 | 126 | 0.001 |
| Hosmer-Lemeshow | 3.672 | 8 | 0.885 |

Finally, we can determine whether our model contains any real explanatory power by observing the concordant pairs, discordant pairs, and ties under the measures of association. Each pair consists of one success and one failure; the pair is concordant when the model assigns the success the higher predicted probability, and discordant when it assigns the failure the higher one. If our model were accurately predicting the probability of success, the percentage of concordant pairs would be near 100% and the percentage of discordant pairs would be near 0%. Our model shows a relatively high percentage of concordant pairs (76.6%) and a relatively low percentage of discordant pairs (23.0%), which gives us confidence in the predictive ability of our model.
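To make the idea of concordant and discordant pairs concrete, here is a minimal Python sketch that counts them for a handful of made-up predicted probabilities. The function name and the toy values are hypothetical, and the exact way Minitab handles near-ties is not something this sketch claims to reproduce.

```python
from itertools import product


def pair_summary(probs, outcomes):
    """Count concordant, discordant, and tied (success, failure) pairs."""
    successes = [p for p, y in zip(probs, outcomes) if y == 1]
    failures = [p for p, y in zip(probs, outcomes) if y == 0]
    concordant = discordant = ties = 0
    for p_success, p_failure in product(successes, failures):
        if p_success > p_failure:
            concordant += 1   # the success received the higher probability
        elif p_success < p_failure:
            discordant += 1   # the failure received the higher probability
        else:
            ties += 1
    total = len(successes) * len(failures)
    return {
        "concordant": concordant,
        "discordant": discordant,
        "ties": ties,
        "total": total,
        # Somers' D: (concordant - discordant) / all pairs, ties included.
        "somers_d": (concordant - discordant) / total,
    }


# Toy predicted probabilities and observed outcomes (not the customer's data).
toy_probs = [0.9, 0.4, 0.6, 0.6, 0.3]
toy_outcomes = [1, 1, 0, 1, 0]
toy_result = pair_summary(toy_probs, toy_outcomes)
print(toy_result)
```

With three successes and two failures there are six pairs in total, which mirrors how the 156 successes and 78 failures in the response information give 156 x 78 = 12168 pairs.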
In addition, the Summary Measures give us similar confidence, because larger values (up to one) indicate that the model has better predictive ability.

Table of Observed and Expected Frequencies: (See Hosmer-Lemeshow Test for the Pearson Chi-Square Statistic)

| Group | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | Total |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Value 1, Obs | 6 | 9 | 12 | 13 | 17 | 19 | 20 | 19 | 21 | 20 | 156 |
| Value 1, Exp | 4.9 | 9.9 | 13.7 | 15.1 | 16.1 | 17.1 | 19.0 | 20.1 | 20.1 | 20.0 | |
| Value 0, Obs | 17 | 14 | 13 | 11 | 6 | 4 | 4 | 5 | 2 | 2 | 78 |
| Value 0, Exp | 18.1 | 13.1 | 11.3 | 8.9 | 6.9 | 5.9 | 5.0 | 3.9 | 2.9 | 2.0 | |
| Total | 23 | 23 | 25 | 24 | 23 | 23 | 24 | 24 | 23 | 22 | 234 |

Measures of Association: (Between the Response Variable and Predicted Probabilities)

| Pairs | Number | Percent |
|---|---|---|
| Concordant | 9316 | 76.6 |
| Discordant | 2794 | 23.0 |
| Ties | 58 | 0.5 |
| Total | 12168 | 100.0 |

| Summary Measures | Value |
|---|---|
| Somers' D | 0.54 |
| Goodman-Kruskal Gamma | 0.54 |
| Kendall's Tau-a | 0.24 |

In conclusion, a student’s GPA clearly holds statistically significant power in predicting whether a student remained within the “warm embrace of science” (classified as a success =1) or changed to a major outside the field of CS, science, or engineering (classified as a failure =0).

Customer’s Question: “What are the effects of the variables ‘SEX’ and ‘Maj’ on the variable ‘HSS’?”

Firm’s Response: The question involves two categorical explanatory variables (SEX and Maj) and one quantitative response variable (HSS). We can see this from the values each variable takes across the observations: “SEX” takes a value of either “1” or “2,” and “Maj” takes one of three values (“1,” “2,” or “3”), whereas the response variable “HSS” can take a wide range of values (treated as continuous). Having identified the characteristics of the variables in question, we can decide which test answers the customer’s question effectively and accurately. We can measure the effects of the explanatory variables on the response variable with a 2-Way ANOVA.
To do so, we used the Minitab software to run the test. First, we scrolled to the appropriate test (under the stats menu) and entered the variables in question into their respective slots (with “SEX” as the row factor and “Maj” as the column factor). We also made sure that the confidence level was set to 95%. The first part of the Minitab output gives us the ANOVA table:

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| sex | 1 | 12.927 | 12.9274 | 5.06 | 0.025 |
| maj | 2 | 44.410 | 22.2051 | 8.69 | 0.000 |
| Interaction | 2 | 24.855 | 12.4274 | 4.86 | 0.009 |
| Error | 228 | 582.923 | 2.5567 | | |
| Total | 233 | 665.115 | | | |

S = 1.599, R-Sq = 12.36%, R-Sq(adj) = 10.44%

From this output, we can infer that “SEX,” “Maj,” and the interaction between the two all have statistically significant effects on “HSS,” because their p-values are all below the 0.05 significance level. Immediately following this output, Minitab produces residual plots for “HSS” so that we can visually confirm that the residuals are approximately normal (apart from some left skewness). We check this because a 2-Way ANOVA is adequate only under the assumption that the residuals are independent and normally distributed.

We then created a main effects plot for “HSS” using Minitab. The graph shows that both explanatory variables have main effects on the response variable. For instance, it indicates that females had a somewhat higher mean “HSS” than males did. It also shows that students who changed to a major outside engineering or science had a noticeably lower mean than students who remained a CS major or who changed to a major in engineering or some other science. By contrast, there was essentially no difference between those who remained CS majors and those who switched to some other major inside the field of science, as shown by the nearly horizontal line connecting the two on the graph.
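The arithmetic behind the ANOVA table above can be checked directly from the degrees of freedom and sums of squares. The short Python sketch below recomputes the mean squares, F statistics, S, and both R-squared values from the reported figures; the variable names are our own, and the script only re-derives numbers already in the Minitab output rather than refitting the model.

```python
import math

# Degrees of freedom and sums of squares copied from the ANOVA table.
effects = {
    "sex": (1, 12.927),
    "maj": (2, 44.410),
    "interaction": (2, 24.855),
}
error_df, error_ss = 228, 582.923
total_df, total_ss = 233, 665.115

# Mean square error: the denominator of every F statistic.
mse = error_ss / error_df

f_stats = {}
for name, (df, ss) in effects.items():
    mean_square = ss / df
    f_stats[name] = mean_square / mse
    print(f"{name:12s} MS = {mean_square:8.4f}  F = {f_stats[name]:.2f}")

s = math.sqrt(mse)                               # residual standard deviation
r_sq = 1 - error_ss / total_ss                   # share of variation explained
r_sq_adj = 1 - mse / (total_ss / total_df)       # adjusted for model size

print(f"S = {s:.3f}, R-Sq = {r_sq:.2%}, R-Sq(adj) = {r_sq_adj:.2%}")
```

The low R-squared values are a reminder that, although all three effects are statistically significant, sex and major together account for only a modest share of the variation in “HSS.”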
[Figure: Main Effects Plot for hss (data means), with one panel for sex and one for maj; vertical axis: Mean]

[Figure: Interaction Plot for hss (data means), with maj on the horizontal axis and one line per sex; vertical axis: Mean]

After this analysis, we generated an interaction plot to view the potential interactions between the two explanatory variables in their effect on the response variable. We immediately noticed an interaction effect between gender and students who remained a CS major. This analysis shows that males and females who remained CS majors at the end of three semesters were correlated through their high school science means.

[Figure: Residual Plots for hss, with four panels: Normal Probability Plot, Versus Fits, Histogram, and Versus Order]

The residual plots for “HSS” let us confirm visually that the residuals are approximately normal (apart from some left skewness). We check this because a 2-Way ANOVA is adequate only under the assumption that the residuals are independent and normally distributed.

Customer’s Question: “What are the best predictors of whether a student remains a CS major after three semesters?”

Firm’s Response: This question offers a scenario similar to the customer’s first question. It again poses an “either-or” scenario: either a given student remained a CS major after three semesters, or he/she decided to major in engineering, some other science, or a field outside science. We can therefore model this problem with a Binary Logistic Regression, but we must first re-code the original categorical variable for major.
The data given to us placed each student in one of three categories: major one indicates a student who remained a CS major at the end of the three-semester period, major two indicates a student who changed to a major in engineering or some other science, and major three indicates a student who changed to a major outside engineering or science. The students were classified as a 1, 2, or 3 according to their respective major. We re-coded the data and classified any student that was a 1 as a “success” (=1) and any student that was a 2 or a 3 as a “failure” (=0). We named the re-coded column “remains CS major” in preparation for running the Binary Logistic Regression in Minitab.

We ran the Binary Logistic Regression with “remains CS major” as the response and “hsm,” “satm,” and “sex” as the predictors in the model. Those three predictors were chosen because they led to the strongest model, as explained with the help of Minitab’s output. The first part of the Minitab output gives us some basic statistics about the number of successes (=1) and the number of failures (=0):

Response Information

| Variable | Value | Count |
|---|---|---|
| remains CS major | 1 | 78 (Event) |
| | 0 | 156 |
| | Total | 234 |

The next part of the Minitab output gives us the p-value for the omnibus test that all the coefficients are equal to zero (with the G statistic), and the p-values for the tests that the individual coefficients are equal to zero. We reject the omnibus null hypothesis, but among the individual predictors the p-values allow us to reject the null hypothesis that the coefficient is equal to zero (no explanatory power) only for “hsm.”
Despite our inability to reject the null hypothesis that the coefficients for “satm” and “sex” are equal to zero, we are confident that these variables do offer explanatory power to our model, as seen later in the Minitab output:

Logistic Regression Table

| Predictor | Coef | SE Coef | Z | P | Odds Ratio | 95% CI Lower | 95% CI Upper |
|---|---|---|---|---|---|---|---|
| Constant | -2.77585 | 1.34368 | -2.07 | 0.039 | | | |
| hsm | 0.348232 | 0.119025 | 2.93 | 0.003 | 1.42 | 1.12 | 1.79 |
| satm | -0.0010238 | 0.0020640 | -0.50 | 0.620 | 1.00 | 0.99 | 1.00 |
| sex | -0.215419 | 0.299857 | -0.72 | 0.473 | 0.81 | 0.45 | 1.45 |

Log-Likelihood = -143.637

Test that all slopes are zero: G = 10.615, DF = 3, P-Value = 0.014

We can also determine how well this particular model fits the data by inspecting the goodness-of-fit tests in Minitab’s output. Because we are using multiple variables to predict whether a student remains a CS major, we have to account for the grouping structure of the goodness-of-fit tests, and the only test whose grouping we can control is the Hosmer-Lemeshow Test. We therefore binned our data aggressively so that the grouping would be equivalent across all combinations of the variables, which limited the test to five groups. Once we had binned the data in this way, the p-values for the Pearson and Deviance Tests dropped significantly, forcing us to focus on the p-value for the Hosmer-Lemeshow Test. That p-value does not allow us to reject the null hypothesis, so we conclude that there is insufficient evidence to claim that the model does not fit the data adequately:

Goodness-of-Fit Tests

| Method | Chi-Square | DF | P |
|---|---|---|---|
| Pearson | 183.154 | 119 | 0.000 |
| Deviance | 221.483 | 119 | 0.000 |
| Hosmer-Lemeshow | 3.233 | 3 | 0.357 |

Finally, we can determine whether our model contains any real explanatory power by observing the concordant pairs, discordant pairs, and ties under the measures of association.
If our model were accurately predicting the probability of success for a given student that is indeed a success, then the percentage of concordant pairs would be near 100% and the percentage of discordant pairs would be near 0%.

We began our search for the best predictors by examining the difference between the concordant pairs and the sum of the discordant pairs and ties (as a conservative estimate). We ranked the individual predictors of whether a student remains a CS major by this difference: “satv” was first, “satm” second, and “hsm” third. To be ranked, each single-predictor model had to fail to reject the null hypothesis of the Hosmer-Lemeshow Test, so that we could conclude that the variable fits the data adequately.

We then looked for the best combination of predictors by starting with “satv” and adding each other variable in turn to see which model contained the most predictive power. We discovered that if two more variables are added to the model with “satv,” we reject the hypothesis that the model fits the data well. We also discovered a model with the predictors “satv” and “gpa” that is comparable to our model, though it contains slightly less predictive power. After many trial-and-error binary logistic regressions, we decided upon the three predictors “satm,” “hsm,” and “sex” for our model.

Our model shows a relatively high percentage of concordant pairs (62.5%) and a relatively low percentage of discordant pairs (36.7%), which gives us confidence in the predictive ability of our model. The Summary Measures are not as close to one as we would hope, but they are still higher than those of most other models we tried.
Table of Observed and Expected Frequencies: (See Hosmer-Lemeshow Test for the Pearson Chi-Square Statistic)

| Group | 1 | 2 | 3 | 4 | 5 | Total |
|---|---|---|---|---|---|---|
| Value 1, Obs | 11 | 11 | 15 | 17 | 24 | 78 |
| Value 1, Exp | 8.3 | 13.3 | 17.2 | 18.6 | 20.7 | |
| Value 0, Obs | 35 | 36 | 34 | 29 | 22 | 156 |
| Value 0, Exp | 37.7 | 33.7 | 31.8 | 27.4 | 25.3 | |
| Total | 46 | 47 | 49 | 46 | 46 | 234 |

Measures of Association: (Between the Response Variable and Predicted Probabilities)

| Pairs | Number | Percent |
|---|---|---|
| Concordant | 7609 | 62.5 |
| Discordant | 4461 | 36.7 |
| Ties | 98 | 0.8 |
| Total | 12168 | 100.0 |

| Summary Measures | Value |
|---|---|
| Somers' D | 0.26 |
| Goodman-Kruskal Gamma | 0.26 |
| Kendall's Tau-a | 0.12 |

In conclusion, we have determined that the predictors “satm,” “hsm,” and “sex” hold the most predictive power in determining whether a given student remains a CS major. Our search for predictors focused on three areas in Minitab’s output: the p-value for the omnibus test that all the coefficients are equal to zero, the p-value associated with the Hosmer-Lemeshow Goodness-of-Fit Test, and the difference between the concordant pairs and the sum of the discordant pairs and ties (C-(D+T)). Our model rejected the omnibus null hypothesis (so the model contains predictive power), failed to reject the Hosmer-Lemeshow null hypothesis (so the model fits the data), and had the largest value of C-(D+T) among the models we tried. Overall, we are confident that the chosen three variables offer the greatest predictive power for determining whether a student remains a CS major.
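Two of the three criteria used in this conclusion can be re-derived from numbers already shown in the output. The Python sketch below recomputes the omnibus G statistic from the reported log-likelihood and the response counts, and the summary measures and C-(D+T) from the pair counts. It assumes the usual definitions (G as twice the gain in log-likelihood over an intercept-only model, and the standard formulas for Somers' D, Gamma, and Tau-a); the variable and function names are our own.

```python
import math

# Figures copied from the Minitab output for the "remains CS major" model.
n_success, n_failure = 78, 156
log_likelihood = -143.637
concordant, discordant, ties = 7609, 4461, 98

n = n_success + n_failure
total_pairs = n_success * n_failure

# Omnibus test: compare the fitted model with an intercept-only model,
# whose log-likelihood depends only on the success and failure counts.
ll_null = (n_success * math.log(n_success / n)
           + n_failure * math.log(n_failure / n))
g_stat = 2 * (log_likelihood - ll_null)


def chi2_sf_df3(x: float) -> float:
    """Upper-tail probability of a chi-square variable with 3 DF."""
    return (math.erfc(math.sqrt(x / 2))
            + math.sqrt(2 * x / math.pi) * math.exp(-x / 2))


p_value = chi2_sf_df3(g_stat)

# Summary measures of association from the pair counts.
somers_d = (concordant - discordant) / total_pairs
gamma = (concordant - discordant) / (concordant + discordant)
tau_a = (concordant - discordant) / (n * (n - 1) / 2)

# The selection criterion C-(D+T), as a percentage of all pairs.
c_minus_dt = 100 * (concordant - discordant - ties) / total_pairs

print(f"G = {g_stat:.3f}, P = {p_value:.3f}")
print(f"Somers' D = {somers_d:.2f}, Gamma = {gamma:.2f}, Tau-a = {tau_a:.2f}")
print(f"C-(D+T) = {c_minus_dt:.1f} percentage points")
```

Running the same calculation for each candidate model would give a consistent basis for the ranking described above, provided every model is fitted to the same 234 students.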