Initiative 4B-Access Infrastructure

MOS IV: Personal Productivity

INITIATIVE 4B: ACCESS INFRASTRUCTURE

Highlights

All campus connections to the CSU backbone network have been upgraded to OC-3 capacity (155 Mbps) where such service is commercially available. The backbone has been upgraded to OC-12 capacity (622 Mbps), and enhanced network management tools have been implemented.

Eight campuses have begun construction associated with the Technology Infrastructure Initiative; three more have received favorable bids and are negotiating contracts, and three anticipate receiving bids within the year.

On half of the campuses, no network outlets meet CSU telecommunications infrastructure standards for high-speed connectivity. Only 25 percent of all network outlets in the system meet these standards.

In comparison to public institutions nationally, CSU campuses are solidly ahead in three networking areas: wireless, ISP, and broadband. In other areas of networking, the CSU tends to be on par with these institutions (e.g., in classroom connections, high-speed video, ATM, gigabit Ethernet, and voice over IP).

Description of Initiative

The Technology Infrastructure Initiative (TII) is the keystone initiative upon which the Integrated Technology Strategy (ITS) rests. Students, faculty and staff are increasingly reliant on telecommunications networks to access information, to transact university business, and to communicate across campus, between campuses and globally. The TII has two major network components: inter-campus infrastructure and intra-campus infrastructure. The CSU established systemwide standards for the telecommunications infrastructure and has begun to upgrade intra-campus and inter-campus networks to meet these standards. (See Appendix B.)

Current Status

Inter-Campus Infrastructure

4CNet, the systemwide network, has enabled better communications among CSU campuses and community colleges, University of California campuses, and other educational entities. Thanks to improvements accomplished through CENIC (the Corporation for Education Network Initiatives in California) and the Digital California Project, CSU campuses can interact with a growing number of K-12 schools with the reliability and speed they now enjoy across the CSU system.

The 4CNet backbone network connects 27 CSU sites at 45 Mbps (T-3), and 125 California Community Colleges and more than 30 K-12 sites at T-1 or lower speeds. Videoconferencing facilities were installed on each CSU campus in the mid-1990s. Voice services were provided by a mix of campus-operated and externally contracted telephone networks.

The Technology Infrastructure Initiative (TII) will triple 4CNet speed/bandwidth capability, and will improve substantially the reliability and the quality of network service.

Progress in 2001/2002:

All campus connections to the backbone have been upgraded to OC-3 capacity (155 Mbps) where such service is commercially available.

The 4CNet backbone has been upgraded to OC-12 capacity (622 Mbps) and enhanced network management tools have been implemented.

All of the California Community College Campuses have received video conferencing connections and equipment.

Work is underway to upgrade the network backbone to 1 gigabit/second and to provide an infrastructure to allow campus connectivity to that backbone at similar speeds. This work is being carried out in coordination with CENIC, a consortium of the CSU, the University of California, the University of Southern California, Stanford University, and the California Institute of Technology.

Measures of Success-November 2002 Access Infrastructure: 1


Intra-Campus Infrastructure

To achieve the target level of network access, the campus inter- and intra-building infrastructures must be engineered to assure end-to-end compatibility. The scope of this activity demands unprecedented cooperation, collaboration and participative management by campuses at all levels. The buildout on each campus is being accomplished in two stages. Stage 1 requires individual campuses to construct physical plant facilities and to install inter- and intra-building media (cabling). In Stage 2, the acquisition of terminal resources (electronics) is centrally managed and involves the participation of a private-sector provider.

Stage 1 construction began in 2001/2002 for the first group of campuses. Additional groups of campuses commenced activities late in the fiscal year or are projected to begin construction in 2002/2003. Thus far, program implementation has been on track with schedule forecasts. The funding source for Stage 1 construction for the first two cohorts has been Proposition 1A capital outlay. Stage 1 construction for the third and fourth (final) cohorts will be funded by Proposition 47, a statewide ballot measure approved in November 2002.

Progress in 2001/2002:

Infrastructure designs have been initiated for all campuses. Construction documents are completed for the 14 campuses in the first two cohorts. Design work is well along for the eight campuses in the final cohort.

Eight of the 14 campuses with completed construction documents have initiated construction; three more have received favorable bids and are negotiating contracts; and the last three were anticipating bids by early fall 2002. To date, most of the successful bidders have quoted prices at or below the engineers’ estimates for the Stage 1 projects.

A contract has been negotiated with SBC to serve as the Stage 2 Systems Integrator. Detailed plans for designing, provisioning, managing and refreshing campus networks are in near final form. For campuses in the first two cohorts, the Integrator’s field engineering representatives are on a schedule consistent with ongoing Stage 1 construction activities, and are working with campus personnel. Several campuses have received network equipment as a result of the collaboration with SBC.

Through the efforts of a systemwide alliance of key technology professionals, the CSU has created an internal mechanism for defining, maintaining and updating applicable standards and requirements. One product of that effort has been a set of common baseline campus network standards. The Systems Integrator is applying these standards in campus network designs.

Outcomes and Measurements

OUTCOME: To provide students, faculty and staff with network access, which allows high-speed communications on and between campuses, other educational agencies and around the world.

Ability to contact someone at any time and from any place is the goal of an advanced communication system. Voice communication via telephone networks is close to 100 percent reliable and of very high quality, but it is heavily focused on real-time exchanges. The challenge to the CSU with respect to voice communications is to preserve (and possibly enhance) current services while reducing their costs. The goal of the Integrated Technology Strategy (ITS) is to provide a data communications network that is as reliable as the telephone system, but capable of carrying many times more information in multiple forms (text, data, images, audio) as required to meet the burgeoning academic and administrative demands of the university.

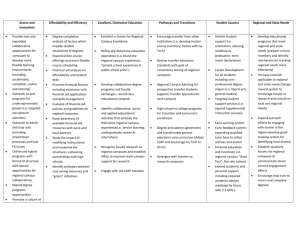

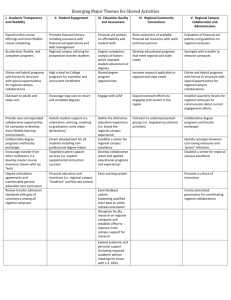

Change Data: Intra-Campus Networks

MEASUREMENT: Reduction in service interruptions on the network.

Commonly accepted industry standards for quantifying interruptions in data network service do not exist at this time. In the absence of common standards, interruptions in network connectivity are often measured in “downtime.” “Downtime” is a term used to identify periods of time (usually minutes) when users cannot send or receive information via a data network because of a problem in the network. Calculation of “downtime” is complex because a campus “network” typically consists of several local and wide area networks employing different kinds of hardware and software and operated by different units, all of which depend on local power and telephone utilities.


As used below, “downtime” is defined for the campus network as a whole; i.e., if a network user is able to send and receive information thanks to redundant capabilities, there is no “downtime,” even though a major piece of networking equipment may have failed. “Planned downtime” refers to interruptions in service scheduled to enable maintenance, upgrade or other activities related to the operation of the network. “Unplanned downtime” means unscheduled interruptions caused by a failure in some part of the network.
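The definitions above can be illustrated with a minimal sketch. It assumes each incident is logged with a duration, a flag for whether it was scheduled, and a flag for whether users actually lost the ability to send and receive; the `Incident` record and `tally_downtime` function are hypothetical names for illustration, not part of any CSU reporting tool.

```python
from dataclasses import dataclass


@dataclass
class Incident:
    minutes: int          # duration of the incident
    scheduled: bool       # True for maintenance or upgrade windows
    users_cut_off: bool   # False if redundant paths kept traffic flowing


def tally_downtime(incidents):
    """Sum planned and unplanned downtime minutes for one campus network."""
    totals = {"planned": 0, "unplanned": 0}
    for incident in incidents:
        if not incident.users_cut_off:
            # Equipment failed, but users could still send and receive:
            # under the definition above this is not downtime.
            continue
        key = "planned" if incident.scheduled else "unplanned"
        totals[key] += incident.minutes
    return totals


month = [
    Incident(minutes=30, scheduled=True, users_cut_off=True),
    Incident(minutes=45, scheduled=False, users_cut_off=False),
    Incident(minutes=10, scheduled=False, users_cut_off=True),
]
print(tally_downtime(month))  # {'planned': 30, 'unplanned': 10}
```

The 45-minute equipment failure is excluded because redundancy kept users connected, which mirrors the whole-network definition of downtime.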

Table 13.1 and Figure 13A provide a summary of “downtime” reported by CSU campuses for the best and the worst months in the twelve-month period covered in this report. Despite increases in the volume of network traffic, the amount of downtime decreased over the previous year, especially for worst months.

Table 13.1 - System Profile: Campus Network Downtime in Total/Average Downtime

| Fiscal Year | Downtime | Best Month: Total Minutes, All Campuses | Best Month: Average Minutes, All Campuses | Worst Month: Total Minutes, All Campuses | Worst Month: Average Minutes, All Campuses |
|---|---|---|---|---|---|
| 1999/2000 (Baseline Year) | Planned | 420 (7.0 hrs) | 19 (0.3 hrs) | 4,119 (68.7 hrs) | 187 (3.1 hrs) |
| 1999/2000 (Baseline Year) | Unplanned | 125 (2.1 hrs) | 6 (0.1 hrs) | 5,414 (90.2 hrs) | 246 (4.1 hrs) |
| 2000/2001 | Planned | 245 (4.1 hrs) | 11 (0.2 hrs) | 9,785 (163.1 hrs) | 425 (7.1 hrs) |
| 2000/2001 | Unplanned | 365 (6.1 hrs) | 16 (0.3 hrs) | 11,667 (194.5 hrs) | 507 (8.5 hrs) |
| 2001/2002 | Planned | 240 (4.0 hrs) | 11 (0.2 hrs) | 7,950 (132.5 hrs) | 361 (6.0 hrs) |
| 2001/2002 | Unplanned | 215 (3.6 hrs) | 10 (0.2 hrs) | 6,560 (109.3 hrs) | 298 (5.0 hrs) |

Figure 13A - System Profile: Median Campus Network Downtime

(Bar chart by fiscal year, 1999-2000 through 2001-2002. Series: Best Month; Worst Month (x10). Data labels recovered from the chart: 52, 90, 75 and 0, 0, 0.)

Campus network utilization can be expressed as a percent of bandwidth capacity. Figure 13B shows the median peak and average bandwidth utilization for CSU campus networks.


Figure 13B - System Profile: Median Campus Network Utilization

(Chart by fiscal year, 1999-2000 through 2001-2002. Series: Average; Peak. Vertical axis: 0% to 50%.)

Baseline Data: Inter-Campus Network

Currently, 4CNet provides access to the Internet for 30 sites in the California State University, each with its own set of Wide Area and Local Area Networks. CSU sites include 23 campuses, 5 off-campus centers, the Office of Government Affairs, and the Chancellor’s Office.

Determination of “downtime” for 4CNet is vastly more complicated than for a single campus. The methodology used in this report (explained below) was developed by 4CNet in 1999/2000 and was applied for the first time to measure the inter-campus network performance for 2000/2001. Dramatic improvement in network performance occurred in 2001/2002, as noted in Table 13.2. Following are the definitions of the components used to describe 4CNet performance.

“4CNet downtime” – data connectivity was unavailable between a CSU campus border router(s) or other electronics and adjacent 4CNet router, because of a 4CNet problem or circuit problem. (Note: if the campus is redundantly connected, a failure of one connection that leaves the other intact is not counted in this metric.)

“Internet connection downtime” – one or more CSU campuses, taken as a whole, could not reach the Internet, taken as a whole. (Note: if a single campus host or LAN is unable to reach the Internet, or some subset of the Internet is unreachable, the incident is not counted in this metric.)

Utilization – This is calculated, on a per-campus basis, by measuring the peak utilization on the campus’s link to 4CNet on the first business day of each month. The peak utilization is derived by measuring utilization every five minutes (or more frequently if feasible) and taking the largest measurement. If the campus is redundantly connected, only the primary link is measured. The largest of the monthly measurements is then used as the metric. The percentage is derived by dividing this measurement by the physical capacity of the connection that was in place at the time of the measurement.
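The utilization metric defined above can be sketched as follows. The sketch assumes each month contributes one list of five-minute throughput samples (in Mbps) from the first business day, paired with the link capacity in place that day; `peak_utilization_percent` and the sample figures are hypothetical and shown only to make the procedure concrete.

```python
def peak_utilization_percent(monthly_readings):
    """Return the per-campus peak utilization metric as a percentage.

    monthly_readings: list of (samples_mbps, capacity_mbps) pairs, one per
    month, where samples_mbps holds the five-minute measurements taken on
    the primary link on the first business day of that month.
    """
    # Take each month's largest sample, then the largest across months,
    # keeping the capacity that was in place when that sample was taken.
    peak_mbps, capacity_mbps = max(
        ((max(samples), capacity) for samples, capacity in monthly_readings),
        key=lambda pair: pair[0],
    )
    return 100.0 * peak_mbps / capacity_mbps


# Hypothetical campus: a T-3 link (45 Mbps) in one month, OC-3 (155 Mbps) later.
readings = [
    ([12.0, 20.5, 18.0], 45.0),
    ([40.0, 62.0, 55.0], 155.0),
]
print(round(peak_utilization_percent(readings), 1))  # 40.0
```

Pairing each peak with its own capacity matters when a link is upgraded mid-year, since the same traffic is a much smaller share of an OC-3 than of a T-3.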


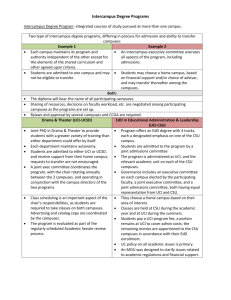

Table 13.2 – System Profile: Inter-Campus Network Performance as Measured by Minutes of Non-Availability*

| Fiscal Year | Downtime | 4CNet Downtime: Best Month | 4CNet Downtime: Worst Month | Internet Connection Downtime: Best Month | Internet Connection Downtime: Worst Month |
|---|---|---|---|---|---|
| 2000/2001 (Baseline Year) | Planned | 352 Minutes (0.027%) | 1,020 Minutes (0.079%) | 352 Minutes (0.027%) | 1,020 Minutes (0.079%) |
| 2000/2001 (Baseline Year) | Unplanned | 200 Minutes (0.015%) | 57,600 Minutes (4.444%) | 200 Minutes (0.015%) | 57,600 Minutes (4.444%) |
| 2001/2002 | Planned | 0 Minutes (0.000%) | 64 Minutes (0.005%) | 0 Minutes (0.000%) | 0 Minutes (0.000%) |
| 2001/2002 | Unplanned | 0 Minutes (0.000%) | 314 Minutes (0.024%) | 0 Minutes (0.000%) | 0 Minutes (0.000%) |

*The total number of “minutes per month” is 1,296,000 (the number of minutes in 30 days times 30, the number of CSU sites served by 4CNet). Downtime for the inter-campus network is calculated by multiplying the number of minutes per outage by the number of sites affected. For example, if three campuses experience an outage of 10 minutes, 30 minutes of downtime is logged for the network. In the above table, the portion of all minutes per month network connectivity was not available is shown as a percentage.
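The arithmetic in the note above can be checked with a short sketch. It assumes a 30-day month and the 30 sites stated in the note; the function names are illustrative only.

```python
SITES = 30                                   # CSU sites served by 4CNet
MINUTES_PER_SITE_MONTH = 30 * 24 * 60        # minutes in a 30-day month
TOTAL_MINUTES_PER_MONTH = SITES * MINUTES_PER_SITE_MONTH  # 1,296,000


def logged_downtime(outages):
    """outages: list of (minutes, sites_affected); returns minutes logged."""
    return sum(minutes * sites for minutes, sites in outages)


def percent_unavailable(downtime_minutes):
    """Share of all site-minutes in the month that were unavailable."""
    return 100.0 * downtime_minutes / TOTAL_MINUTES_PER_MONTH


print(logged_downtime([(10, 3)]))             # 30
print(round(percent_unavailable(352), 3))     # 0.027
print(round(percent_unavailable(57600), 3))   # 4.444
```

The last two lines reproduce percentages that appear in Table 13.2 for 352 and 57,600 minutes of logged downtime.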

The ability of a network to transport information is typically defined in terms of “bandwidth.” The typical link from the 4CNet backbone to a CSU campus has a bandwidth of 155 megabits/second; i.e., the link can carry up to 155 million bits each second. The share of bandwidth that is being used (utilization) is determined by measuring the actual amount of data carried on a network link in a specified unit of time (typically one second), and dividing that figure by the bandwidth of the link. If, for example, the actual amount of data carried on the link in one second is 23 megabits, the utilization of that link during that second is 23/155, or 14.8 percent. Table 13.3 shows average and peak bandwidth utilization for 4CNet.
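As a minimal sketch of this calculation (the function name and parameters are illustrative assumptions), utilization is the data actually carried divided by what the link could have carried in the same interval:

```python
def utilization_percent(megabits_carried, interval_seconds, link_mbps):
    """Percent of link capacity used over the measurement interval."""
    capacity_megabits = interval_seconds * link_mbps
    return 100.0 * megabits_carried / capacity_megabits


# 23 megabits carried in one second on a 155 Mbps link
print(round(utilization_percent(23, 1, 155), 1))  # 14.8
```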

Table 13.3 – System Profile: Inter-Campus Network Traffic as Percent of Bandwidth Utilization

| Fiscal Year | Average Bandwidth Utilization | Peak Bandwidth Utilization |
|---|---|---|
| 2000/2001 | 13.000% | 32.000% |
| 2001/2002 | 10.600% | 46.500% |

4CNet currently provides adequate capacity to CSU campuses to support inter-campus traffic and connection to the Internet. For this reason, no funding for 4CNet improvement was included in the TII upgrade. Because inter-campus and Internet connections are vital to the success of the ITS, inter-campus network performance is included in Measures of Success. Moreover, the improvements to campus technology infrastructures will increase demand for access to the 4CNet backbone network, since they will increase the number of networked users on each campus.

MEASUREMENT: Compliance of all inter-campus and intra-campus networks with CSU established standards by 2008.

The quality of network service available to students, faculty and staff is dependent in part on the physical infrastructure and in part on other factors such as the compatibility of networking equipment, protocols and operating systems, and on the effectiveness of network operations’ management. Network operations and the physical infrastructure must be seamless throughout the entire system to provide the high-speed communications envisioned in the ITS-TII. Adoption of common standards is a critical first step toward establishing a coherent telecommunications environment by the year 2008.

Change Data: Intra-Campus Network Standards

Figure 13C provides an overview of changes in the number of campus local area networks (LAN) and wide area networks (WAN) over the three-year period covered by these reports. Increases in the number of LANs are consistent with growth in the use of networked applications and online resources by all members of the university community.

The number of WANs has not increased, indicative of campus efforts to maintain a coherent and manageable environment.


Figure 13C - System Profile: Campus Network Environment

(Bar chart by fiscal year, 1999-2000 through 2001-2002. Series: LANs; WANs. Vertical axis: 0 to 80. Data labels recovered from the chart: 79, 57, 9, 67, 2, 2.)

A profile of the campus networks for 2001/2002 is presented in Figure 13D. As might be expected, the larger campuses have more networks and more complex telecommunications environments.

Figure 13D - Campus Profile: Network Environment

(Bar chart for campuses 1 through 22, grouped by Campus FTES: <5k, 5-10k, 10-15k, 15-20k, 20k+. Series: LANs (x10 over 10); WANs. Vertical axis: 0 to 30.)


Less diversity in the number of network operating systems and network protocols generally correlates with greater operational coherence and manageability. Figure 13E shows that little change has occurred over three years in the percent of campus networks employing common operating systems and common network architecture.

Figure 13E - System Profile: Campus Network Environment - Common Architecture/Operating Systems

(Chart by fiscal year, 1999-2000 through 2001-2002. Series: Arch/Protocols; Operating System. Vertical axis: 80% to 95%.)

Baseline Data: Physical Infrastructure Standards for Network Connectivity

Appendix B describes the two sets of telecommunications infrastructure standards the CSU has adopted for upgrading campus physical network infrastructures; i.e., the pathways, cabling and electronics. These transport information between the main campus “backbone” network and the instructional, office and other spaces linked by the campus network. The components behind the faceplate determine the quality and performance of all network applications. Minimum Baseline Standards apply to the network provisioning of campus buildings that were in existence or in the planning stage prior to implementation of the Integrated Technology Strategy. The Telecommunications Infrastructure Planning Standards (TIP) apply to the provisioning of buildings planned and constructed subsequent to implementing the ITS. Differences between the two sets of standards recognize constraints imposed by the higher cost of retrofitting existing buildings.

Previous reports in the series attempted to establish a base for measuring progress in implementing the physical infrastructure standards of the Minimum Baseline and the Telecommunications Infrastructure Planning Standards. The 1999/2000 Measures of Success report sought to describe the baseline campus infrastructure status in terms of type of space (instructional, laboratory, faculty and administrative office) and by type of network (data, voice and video).

This approach closely reflected the original conceptual framework that guided planning and engineering. Subsequent advances in digital technology, however, had the effect of blurring distinctions between data, voice and video networks. In recognition of these advances, the 2000/2001 Measures of Success adopted the number of network connections (outlets or jacks) meeting Minimum Baseline Standards as a more reliable indicator on which to chart progress toward the goals of the Integrated Technology Strategy - Technology Infrastructure Initiative (TII). Accordingly, campus outlet “entitlements” (i.e., the number of outlets calculated by applying the Minimum Baseline Standards to existing structures) were reported as baseline data for measuring progress of improvements to campus infrastructures.

Two problems with this approach became evident in preparing the current report. First, the 2000/2001 Measures of Success failed to take into account additions to the number of network outlets (the so-called “outlet entitlements”) that had occurred and were occurring as a result of the approval of new building construction. Since the network provisioning standards for new construction (TIP) are different from the Minimum Baseline Standards applicable to the renovation of existing buildings, adjustments in the total campus “entitlement” had to be made. Second, it became apparent that not all campuses had interpreted “outlets” in the same way. Several campus “outlet” counts did not correspond to the numbers from the preliminary engineering studies. On investigation it became clear that some campuses had understood “outlets” to mean faceplates, or sets of two to four individual network outlets (or jacks).


Other campuses counted the number of connections supported by servers, a number which did not always correspond to the outlet inventory compiled in the preliminary engineering studies.

A revised approach to defining and measuring progress toward meeting the baseline standards for the telecommunications infrastructure has been developed for this year’s Measures of Success. The new method takes into account the dynamic nature of the campus “entitlement” by including additions to the TII preliminary engineering figures resulting from the approval of new building construction. Confusion about the meaning of “outlets” was addressed by contacting campuses individually to assure that outlet counts are based on a common understanding.

The revised baseline data for the physical infrastructure for the system are displayed in Table 13.4.

Table 13.4 - System Profile: Progress Toward Meeting Minimum Baseline Telecommunications Infrastructure Standards

| No. of Campuses | Outlets at MB Standards | Outlets Below MB Standards | Approved New TIP Outlets | Total Outlet Entitlement | Total Outlets at Baseline | Percent at Baseline |
|---|---|---|---|---|---|---|
| 22 | 73,981 | 200,825 | 16,271 | 291,077 | 73,981 | 25.4% |
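The figures in Table 13.4 are related by simple arithmetic, sketched below. This assumes, as the column totals suggest, that the total entitlement is the sum of outlets at standard, outlets below standard, and approved new TIP outlets; the function name is hypothetical.

```python
def baseline_progress(at_standard, below_standard, approved_new_tip):
    """Return (total outlet entitlement, percent of entitlement at baseline)."""
    entitlement = at_standard + below_standard + approved_new_tip
    return entitlement, 100.0 * at_standard / entitlement


entitlement, percent = baseline_progress(73_981, 200_825, 16_271)
print(entitlement, round(percent, 1))  # 291077 25.4
```

Because approved new TIP outlets enter the denominator, the percentage at baseline can fall when new construction is approved even if no existing outlet changes.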

As Figure 13F makes clear, campuses differ greatly in the quality of their telecommunications infrastructure. Currently, ten campuses have essentially no network connections that meet CSU standards. At the high end, approximately 75 percent of the network connections on a campus meet CSU quantity and quality standards.

Figure 13F - Campus Profile: Progress Toward Baseline Telecommunications Infrastructure

(Bar chart for campuses 1 through 22. Vertical axis: 0% to 80%.)

OUTCOME: To provide students, faculty and staff with network access that allows information searching and retrieval and research within and beyond the CSU.

MEASUREMENT: Provision of 7 by 24 network access to all faculty, staff and students.

The ability of students, faculty and staff to use the CSU data network depends on access to appropriately equipped and configured computer workstations connected to 4CNet. It also depends on the capability of the network to meet whatever traffic demands may be placed on it at the expected level of service. Information about access to computer workstations is included in the discussion of Initiative 4A, Baseline User Hardware and Software Access. The characteristics, capacity and performance of CSU data networks were described above. This section focuses on the quality of network connectivity from the desktop to the faceplate.


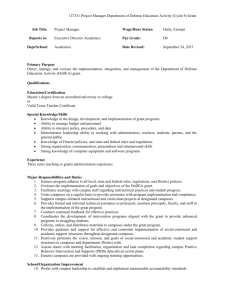

Baseline Data: Availability of High Speed Network Connectivity

To qualify as “high-speed,” a workstation must have a direct (not shared) connection to the network that enables 100-155 Mbps (million bits per second) or faster transmission from the desktop to the Internet. This is the speed required to support full-motion video, a useful indicator of the bandwidth required for applications routinely used in the sciences.

An error in the technical specifications for high-speed connectivity in the summer 2001 survey caused discrepancies that could not be corrected. Change data on the number of workstations capable of supporting high-speed network connection are reported below.

The median percent for high-speed connectivity to the workstation ranges from 50 percent for faculty and staff to 65 percent for students. The higher median for students is explained by the much smaller number of computers available for student use. (See Initiative 4A.) Figures 13G.1-3 profile high-speed network connectivity for each campus, as defined above, on university-provided workstations available for the use of full-time faculty, staff and administrators, and students.

Figure 13G.1 - Campus Profile: Network Connectivity - Faculty Workstations

(Bar chart for campuses 1 through 22. Series: +/- Median; % Hi-Speed. Vertical axis: -60% to 100%.)

Figure 13G.2 - Campus Profile: Network Connectivity - Staff and Administrator Workstations

(Bar chart for campuses 1 through 22. Series: +/- Median; % Hi-Speed. Vertical axis: -60% to 100%.)


Figure 13G.3 - Campus Profile: Network Connectivity - Workstations Available to Students

(Bar chart for campuses 1 through 22. Series: +/- Median; % Hi-Speed. Vertical axis: -80% to 100%.)

Change Data: Student, Faculty and Staff Access to and Satisfaction with Network Use

MEASUREMENT: Demonstration of user satisfaction with ease of use of the network.

Both faculty and staff report much lower satisfaction with remote access to the network than with on-campus access. Staff express greater satisfaction than faculty with both on- and off-campus access. In general, the pattern is one of increased staff use of and satisfaction with network services over results reported in the 2000 survey.

Table 13.5 – System Profile: Faculty, Staff and Student Use of and Satisfaction with Network Services

| Fiscal Year | User Group | Network Use | On Campus Satisfaction | Remote Satisfaction |
|---|---|---|---|---|
| 2000/2001 | Faculty | 98.0 | 8.51 | 6.55 |
| 2000/2001 | Staff/Administrators | 50.3 | 8.74 | 6.65 |
| 2000/2001 | Students | ---- | 7.68 | 7.54 |
| 2001/2002 | Faculty | 98.0 | 8.23 | 6.90 |
| 2001/2002 | Staff/Administrators | 60.2 | 8.86 | 7.20 |

Most faculty and staff responses showed little or no significant relationship between the quality of network connectivity on the one hand and their patterns of use of these resources on the other. However, faculty on campuses with a higher percentage of outlets that met minimum standards did tend to report slightly higher levels of satisfaction with the computing and technology resources available to them; among staff, mean satisfaction ratings were not affected.

One possible explanation for the lack of positive relationships is the “noise” introduced by workstation quality. A robust network cannot compensate for an inferior workstation. In future faculty and staff surveys, data will be gathered on the quality of individual workstations; this will permit “controlling” for workstation quality in order to assess the independent effects of the network on attitudes and behavior.


In many areas of networking, the CSU tends to be on par with, and is seldom behind, comparable institutions nationally (e.g., in classroom connections, high-speed video, ATM, gigabit Ethernet, and voice over IP). While CSU campuses are roughly even with public institutions on most indicators pertaining to the quality of network infrastructure, they appear to be solidly ahead in three areas: wireless, ISP, and broadband. For example:

91 percent of CSU campuses have functional, local area wireless networks compared to 75 percent nationally;

73 percent provide dial-up/ISP services for students compared to 51 percent nationally; the percentages for faculty are 86 and 63, respectively;

36 percent have full campus wireless networks compared to 23 percent nationally;

In DSL and broadband services, CSU campuses are ahead 18 to 7 percent for students, and 27 to 9 percent for faculty (although usually for a fee); and,

14 percent of CSU students have access to off-campus, wireless network services compared to 6 percent nationally, and the corresponding percentages for faculty are 18 and 4, respectively (usually without a fee).
