Cellular Neural Network Simulation and Modeling

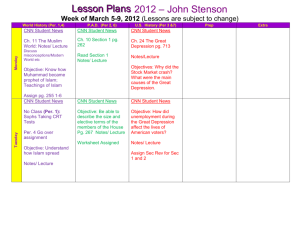

Oroszi Balázs

Introduction

Real-time image and video processing is increasingly in demand for everyday use, not only in industry and surveillance monitoring systems, but also in the consumer market. One of the main challenges for microprocessor manufacturers in the near future will be to build efficient processors and infrastructure for the real-time handling of images and videos (or time signals in general) coming from spatially distributed sources. Because both of these tasks are closely related to spatio-temporal computing, great effort has been invested in creating supercomputers capable of high-performance spatio-temporal calculations. On the one hand, these operations need to be performed in real time; on the other hand, extremely high accuracy is often not required. This opens up the possibility of using analog computation directly on signal flows instead of digital computation on bits.

Cellular Nonlinear Networks (CNNs) fully realize this concept by introducing a new paradigm for analog signal processing. This processing aspect (the highly parallel and analog nature of the CNN), combined with programmability, leads to the concept of the so-called analogic computer. Based on the large number of applications developed, CNNs can be considered a paradigm for solving nonlinear spatio-temporal wave equations (a very difficult task in the digital world) within microseconds.

In 1988, papers by Leon O. Chua introduced the concept of the Cellular Neural Network. CNNs can be defined as “2D or 3D arrays of mainly locally connected nonlinear dynamical systems called cells, whose dynamics are functionally determined by a small set of parameters which control the cell interconnection strength” (Chua).
These parameters determine the connection pattern and are collected into the so-called cloning templates, which, once determined, define the processing of the whole structure.

In this document, the main characteristics of the CNN are presented, and the problems of simulating the analog CNN architecture in a digital environment are discussed, along with a ready-to-use computer program that simulates the functional behavior of the CNN and can perform some of the most common image processing tasks realized with CNN cloning templates.

Basic characteristics of the CNN

The basic idea of the CNN is to use an array of nonlinear dynamic circuits, called cells, to process large amounts of information in real time. This concept was inspired by the Cellular Automata and Neural Network architectures. The new architecture is able to perform time-consuming tasks, such as image processing, while at the same time being suitable for VLSI implementation.

The original CNN model was introduced in 1988. Each cell is characterized by $u_{ij}$, $y_{ij}$ and $x_{ij}$, being the input, the output and the state variable of the cell, respectively. The output is related to the state by the nonlinear equation:

$$y_{ij} = f(x_{ij}) = 0.5\,\left(|x_{ij} + 1| - |x_{ij} - 1|\right)$$

The CNN can be defined as an M x N array of identical cells arranged in a rectangular grid. Each cell is locally connected to its 8 nearest surrounding neighbors. The state transition of neuron (i, j) is governed by the following differential equation:

$$\dot{x}_{i,j}(t) = -x_{i,j}(t) + \sum_{C(k,l) \in S_r(i,j)} A(i,j,k,l)\, y_{k,l}(t) + \sum_{C(k,l) \in S_r(i,j)} B(i,j,k,l)\, u_{k,l}(t) + z_{i,j}$$

where $C(i,j)$ represents the neuron at column i, row j, $S_r(i,j)$ represents the neurons within radius r of the neuron $C(i,j)$, and $z_{i,j}$ is the threshold (bias) of the cell $C(i,j)$. The coefficients $A(i,j,k,l)$ and $B(i,j,k,l)$ are known as the cloning templates. In general, they are nonlinear, time- and space-variant operators.
If they are considered linear, time- and space-invariant, they can simply be represented by matrices. This means that they are constant throughout the whole processing of the CNN. The A template controls the feedback of the output of the neighboring cells (A is called the feedback template), while the B template controls the feed-forward of the input of the neighboring cells (B is called the control template).

Operation of the CNN

We shall investigate the operation of the CNN in the special case where the cloning templates are linear, time- and space-invariant, and we shall focus on image processing and enhancement, as that is the primary objective of this document.

In image processing, although the operation of the CNN is continuous in time, it is discrete in space, so each cell essentially holds one pixel of the image. Three images are present while the CNN is working: the input (U), the state (X) and the output (Y).

At the initialization stage, an input image is fed (or uploaded) into the CNN through its input matrix. Optionally, an initial state may also be set. After this, the state-transition stage takes place (as described in the previous section), in which the CNN converges to a stable state. This convergence takes place within microseconds, which makes the CNN a remarkably powerful and extremely fast image processing architecture. At the end of the state transition, the final output is obtained from the state through the nonlinear output equation described above.

What the CNN essentially performs is a mapping from the input image U, with cloning templates A and B, to the output image Y. Typically, the cloning templates are of size 3x3. This size corresponds to the case where only the directly surrounding cells are connected.

One more thing needs to be taken care of: the boundary conditions.
Boundary cells are those at the edges of the CNN array. Beyond them are so-called irregular or virtual cells. They are not part of the regular CNN cells, so nothing is uploaded into them in the initialization phase, and the state transition is not applied to them either. This requires that the input and output of these virtual cells be assigned in some way. There are different methods for this:

– Dirichlet (fixed) conditions: the input and output of the virtual cells are set to some constant (typically 0).
– Neumann (zero-flux) conditions: the input and output of the virtual cells are set to the same values as the neighboring boundary cells.
– Toroid (periodic) conditions: the input and output of the virtual cells are set to the same values as the boundary cells on the opposite side.

Modeling and simulation of the CNN architecture

The true capabilities of CNNs for high-speed parallel processing are only fully exploited by dedicated VLSI hardware realizations. Typical CNN chips may contain up to 200 transistors per pixel. At the same time, industrial applications require sufficiently large grid sizes (around 100 x 100). Thus, CNN chip designers must confront complexity levels larger than 10^6 transistors, most of them operating in analog mode.

Simulation plays an important role in the design of the CNN cloning templates. Therefore, it has to be fast enough to allow the design of various templates to be accomplished in reasonable time. At the same time, the simulation has to be accurate enough to reflect the behavior of the analog circuitry correctly and to provide reliable information that guides the designer in making the right decisions.

In practice, the simulation of the CNN involves a trade-off between accuracy and computation time. On the one hand, high-level simulators, which focus on emulating the functional behaviour, cannot realistically reflect the underlying electronic circuitry.
Their lack of detail makes them ill-suited for reliable IC simulation. On the other hand, SPICE-type transistor-level simulators, although very accurate, are barely capable of handling more than about 10^5 transistors and may take several days of CPU time for circuit netlists containing about 10^6 transistors. Hence, these low-level tools are ill-suited for simulating large CNN chips.

In a straightforward approach, high-level simulators might be configured and programmed to achieve a more realistic simulation of the CNN hardware, but at the expense of considerable coding effort and a great increase in CPU usage, which slows down the simulation. Alternatively, the use of macromodels in SPICE-like tools decreases CPU time consumption. This results in a simplified but still accurate description of the circuit, although the simulator core still has to handle the whole network interconnection topology. Again, the limitations on computing power make this approach inefficient when dealing with large CNN chips.

Therefore, it is necessary to bridge the gap between these approaches; that is, to simulate CNN chips efficiently, an intermediate solution must be found. Even though this intermediate solution would give the best results from a design point of view, it is beyond the scope of this document. In the rest of this document we shall focus on the functional modeling of the CNN architecture.

Functional modeling of the CNN architecture

The output of a CNN model simulation is the final state reached by the network after evolving from an initial state under the influence of a specific input and boundary conditions. The following block diagram shows the state transition and output of a single cell:

[Figure: block diagram of a single cell]

In the most general case, the state of one cell can be described by the following equation, in integral or differential form:

$$x(t) = x(t_0) + \int_{t_0}^{t} f(x(\tau))\,d\tau \qquad \text{or} \qquad \dot{x}(t) = f(x(t))$$

where $f(x(t)) = dx(t)/dt$ is given as described in the previous sections.
As a closed form for the solution of the above equation cannot be given, it must be integrated numerically. To simulate such equations on a digital computer, they must be mapped onto a discrete-time system that emulates the continuous-time behavior, has similar dynamics and converges to the same final state. The error introduced by this emulation depends on the form of the discrete-time system selected, which in turn depends on the choice of integration method, i.e. the way in which the integral is calculated:

$$x(t_{n+1}) = x(t_n) + \int_{t_n}^{t_{n+1}} f(x(t))\,dt$$

There is a wide variety of integration algorithms that can be used to perform this task. However, only three of them are considered here:

– the explicit Euler formula:

$$\int_{t_n}^{t_{n+1}} f(x(t))\,dt \approx \Delta t\, f(x(t_n))$$

– the predictor-corrector algorithm:

$$\int_{t_n}^{t_{n+1}} f(x(t))\,dt \approx \frac{\Delta t}{2}\left[f(x(t_n)) + f\!\left(x(t_{n+1})^{(0)}\right)\right], \qquad x(t_{n+1})^{(0)} = x(t_n) + \Delta t\, f(x(t_n))$$

– the fourth-order Runge-Kutta method:

$$\int_{t_n}^{t_{n+1}} f(x(t))\,dt \approx \frac{1}{6}\left(k_1 + 2k_2 + 2k_3 + k_4\right)$$

$$\begin{aligned}
k_1 &= \Delta t\, f(x(t_n)) \\
k_2 &= \Delta t\, f\!\left(x(t_n) + \tfrac{1}{2}k_1\right) \\
k_3 &= \Delta t\, f\!\left(x(t_n) + \tfrac{1}{2}k_2\right) \\
k_4 &= \Delta t\, f\!\left(x(t_n) + k_3\right)
\end{aligned}$$

where $\Delta t = t_{n+1} - t_n$.

Of these, the Euler method is the fastest but gives the least accurate convergence behaviour, while Runge-Kutta gives the best results but is much slower. In the Runge-Kutta algorithm, four auxiliary components ($k_1$ to $k_4$) are computed. These are auxiliary states, which are then averaged. For applications targeting accuracy and robustness, Runge-Kutta would undoubtedly be the method of choice. In our case, however, as the primary target is a fast, working implementation of a CNN simulator as an image processor, we choose the Euler method.

Handling special cases for increasing performance

It is not uncommon for templates that extract local properties of the image (like edge detectors) to use a fully zero A template. I have discovered that by handling this special case, processing can be sped up dramatically.
Given A = 0, the state equation takes the following form:

$$\dot{X} = -X + BU + Z$$

(BU + Z) is constant, as U does not change during the process. Let:

$$BU + Z = C$$

Using Euler integration:

$$\begin{aligned}
X_1 &= X_0 + \Delta t\,\dot{X}_0 = X_0 + \Delta t\,(-X_0 + C) = X_0(1-\Delta t) + C\Delta t \\
X_2 &= X_1(1-\Delta t) + C\Delta t = [X_0(1-\Delta t) + C\Delta t](1-\Delta t) + C\Delta t \\
    &= X_0(1-\Delta t)^2 + C\Delta t(1-\Delta t) + C\Delta t \\
X_3 &= X_2(1-\Delta t) + C\Delta t = [X_0(1-\Delta t)^2 + C\Delta t(1-\Delta t) + C\Delta t](1-\Delta t) + C\Delta t \\
    &= X_0(1-\Delta t)^3 + C\Delta t(1-\Delta t)^2 + C\Delta t(1-\Delta t) + C\Delta t \\
X_4 &= X_3(1-\Delta t) + C\Delta t \\
    &= X_0(1-\Delta t)^4 + C\Delta t(1-\Delta t)^3 + C\Delta t(1-\Delta t)^2 + C\Delta t(1-\Delta t) + C\Delta t \\
    &\;\;\vdots
\end{aligned}$$

The pattern can clearly be seen by now. In each new step, $X_0(1-\Delta t)^n$ is multiplied by $(1-\Delta t)$, so its exponent increases by one. The remaining part is a geometric series, so the general equation for the n-th state is:

$$X_n = X_0(1-\Delta t)^n + C\Delta t \sum_{k=0}^{n-1} (1-\Delta t)^k$$

Using the general formula for the sum of a geometric series:

$$S_n = a_1\,\frac{q^n - 1}{q - 1}$$

the sum of the above geometric series becomes:

$$C\Delta t \sum_{k=0}^{n-1} (1-\Delta t)^k = C\Delta t\,\frac{(1-\Delta t)^n - 1}{(1-\Delta t) - 1} = C\Delta t\,\frac{(1-\Delta t)^n - 1}{-\Delta t} = -C\,[(1-\Delta t)^n - 1] = C\,[1 - (1-\Delta t)^n]$$

So the state equation becomes:

$$\begin{aligned}
X_n &= X_0(1-\Delta t)^n + C\,[1 - (1-\Delta t)^n] = X_0(1-\Delta t)^n + C - C(1-\Delta t)^n = (X_0 - C)(1-\Delta t)^n + C \\
X_n &= (X_0 - BU - Z)(1-\Delta t)^n + BU + Z
\end{aligned}$$

This result is of utmost importance for the speed of the simulation, because the number of iterations that need to be performed is reduced to 1. We can get to the final state immediately, given the input U, the B template and the bias Z. As multiple iterations over an image cause many memory accesses that cannot be served from cache (which is very slow), this improvement in the special case of A = 0 gives a huge boost in speed.

Realization of the CNN simulator

To get from theory to practice, and to implement a fast, reasonably accurate CNN simulator within a limited timeframe that is capable of demonstrating some real-life practical CNN applications in the image processing field, the right combination of software and development tools had to be chosen.
To speed up development, and to skip the time-consuming task of implementing low-level image reading, writing and displaying code, the CNN simulator is realized as an AviSynth plugin.

AviSynth (http://www.avisynth.org) is a powerful tool for video post-production. It provides almost unlimited ways of editing and processing videos. AviSynth works as a frameserver, providing instant editing without the need for temporary files. AviSynth itself does not provide a graphical user interface (GUI) but instead relies on a script system that allows advanced non-linear editing. While this may at first seem tedious and unintuitive, it is remarkably powerful and is a very good way to manage projects in a precise, consistent, and reproducible manner. Because text-based scripts are human readable, projects are inherently self-documenting. The scripting language is simple yet powerful, and complex filters can be created from basic operations to develop a sophisticated palette of useful and unique effects.

AviSynth uses a special scripting language designed specifically for video processing. Its functions are implemented under the hood as C/C++ dynamic link libraries (DLLs), which are called plugins. These plugins expose an interface towards the scripting language, from which their functions can be called. This allows very flexible use and parameterization. AviSynth itself is composed of a huge collection of plugins.

The CNN simulator is also implemented as a plugin. This makes it possible to use it from AviSynth's scripting language, effectively and easily processing any type of image or video that AviSynth can open. The programming language of choice was C++, and the plugin uses AviSynth's compiler-independent C interface.
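
The plugin's source code is not reproduced in this document. As a rough illustration of the functional model described in the earlier sections (the piecewise-linear output function, 3x3 cloning templates, the three boundary conditions and explicit Euler integration), a minimal sketch might look like the following. It is written in Python rather than C++ for brevity, and every function name and boundary label in it is hypothetical; it is not the plugin's API, and a real implementation would be heavily optimized.

```python
# Illustrative sketch only: all names here are hypothetical, not the plugin's API.

def output(x):
    """Piecewise-linear output function y = 0.5 * (|x + 1| - |x - 1|)."""
    return 0.5 * (abs(x + 1.0) - abs(x - 1.0))


def neighbor(img, i, j, boundary, fixed=0.0):
    """Value of cell (i, j); indices outside the grid are virtual cells."""
    rows, cols = len(img), len(img[0])
    if 0 <= i < rows and 0 <= j < cols:
        return img[i][j]
    if boundary == "fixed":        # Dirichlet: constant value
        return fixed
    if boundary == "zero_flux":    # Neumann: copy the nearest boundary cell
        return img[min(max(i, 0), rows - 1)][min(max(j, 0), cols - 1)]
    if boundary == "periodic":     # Toroid: wrap around to the opposite side
        return img[i % rows][j % cols]
    raise ValueError("unknown boundary condition: " + boundary)


def apply_template(t, img, i, j, boundary):
    """Weighted sum of the 3x3 neighborhood of cell (i, j) with template t."""
    total = 0.0
    for di in (-1, 0, 1):
        for dj in (-1, 0, 1):
            total += t[di + 1][dj + 1] * neighbor(img, i + di, j + dj, boundary)
    return total


def euler_step(x, u, a, b, z, dt, boundary="fixed"):
    """One explicit Euler step of dx/dt = -x + A*y + B*u + z for all cells."""
    y = [[output(v) for v in row] for row in x]
    new_x = []
    for i in range(len(x)):
        row = []
        for j in range(len(x[0])):
            dx = (-x[i][j]
                  + apply_template(a, y, i, j, boundary)
                  + apply_template(b, u, i, j, boundary)
                  + z)
            row.append(x[i][j] + dt * dx)
        new_x.append(row)
    return new_x


def simulate(u, a, b, z, dt, iterations, x0=0.0, boundary="fixed"):
    """Run the given number of Euler steps and return the output image Y."""
    x = [[x0 for _ in row] for row in u]
    for _ in range(iterations):
        x = euler_step(x, u, a, b, z, dt, boundary)
    return [[output(v) for v in row] for row in x]


# Usage: a single +1 pixel on a -1 background, edge-detection style B template.
u = [[-1.0, -1.0, -1.0], [-1.0, 1.0, -1.0], [-1.0, -1.0, -1.0]]
a = [[0, 0, 0], [0, 0, 0], [0, 0, 0]]
b = [[-1, -1, -1], [-1, 8, -1], [-1, -1, -1]]
y = simulate(u, a, b, z=-1.0, dt=1.0, iterations=1, boundary="zero_flux")
# y is 1.0 at the center cell and -1.0 everywhere else.
```

The sketch recomputes the B contribution in every step for clarity; since U is constant, it could be computed once up front.
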
The following parameters can be set from within the scripting language:

– A template
– B template
– Z bias
– X initial state (including either initial image/clip or initial value)
– U initial image, boundary conditions
– Timestep of Euler integration
– Number of iterations to perform
– Black color value
– White color value

The plugin operates in the YUY2 colorspace, which is very well suited for grayscale image processing. In the YUY2 colorspace, pixel data is separated into chrominance and luminance information and is arranged in the following way (each component being 8 bits long):

[Y][Cb][Y][Cr]

This block represents 2 pixels. Horizontal color resolution is half of that of RGB24, but luminance resolution is unchanged. Each pixel's luminance ranges between 0 and 255 (8-bit grayscale). This range is then converted to a custom range (represented by 4-byte floating point values) specified by the Black and White color value script function parameters. Care needs to be taken to adjust these parameters correctly, as each CNN template may only operate correctly in a very specific range, and it is the template designer's task to specify the value range when designing the templates.

Here is an example script that does simple black and white edge detection (it also requires the adaptive B/W conversion plugin that accompanies the CNN plugin):

    LoadCPlugin("C:\Program Files\AviSynth 2.5\plugins\c\cnn.dll")
    LoadCPlugin("C:\Program Files\AviSynth 2.5\plugins\c\adaptivebw.dll")
    DirectShowSource("C:\video.mpg")
    # --- BW edge ---
    AdaptiveBW()
    CNN( \
      "A", \
      0,0,0, \
      0,0,0, \
      0,0,0, \
      "B", \
      -1, -1, -1, \
      -1, 8, -1, \
      -1, -1, -1, \
      Z=-1.0, \
      XInitValue=0, \
      YInitValue=0, \
      timestep=1, \
      iterations=1, \
      black=-1, \
      white=1 \
    )

Real-time processing of captured video:

To achieve direct real-time processing of captured video, the ffdshow raw video DirectShow filter can be used with its built-in AviSynth post-processing capability.
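
The edge-detection script earlier in this section uses a fully zero A template with timestep=1 and iterations=1. Under the closed-form result derived for A = 0, the factor (1 - Δt)^n is then zero and the state is simply BU + Z. The sketch below illustrates this, together with one possible way of converting 8-bit luminance to the range given by the black and white parameters. The linear mapping (0 to black, 255 to white), the clamping on the way back, and all function names are assumptions made for illustration; the document does not specify how the plugin performs the conversion.

```python
# Illustrative sketch only: names and the linear mapping are assumptions.

def to_cnn_range(luma, black, white):
    """Map 8-bit luminance (0..255) linearly onto the range [black, white]."""
    return black + (white - black) * luma / 255.0


def to_luma(value, black, white):
    """Inverse mapping back to 8-bit luminance, clamped to 0..255."""
    luma = round(255.0 * (value - black) / (white - black))
    return min(max(luma, 0), 255)


def closed_form_state(x0, c, dt, n):
    """State after n Euler steps when A = 0: X_n = (X_0 - C)(1 - dt)^n + C."""
    return (x0 - c) * (1.0 - dt) ** n + c


def iterated_state(x0, c, dt, n):
    """The same state computed by n explicit Euler steps of dX/dt = -X + C."""
    x = x0
    for _ in range(n):
        x = x + dt * (-x + c)
    return x


# With dt = 1 and n = 1 the closed form collapses to C = BU + Z.
c = 15.0  # example value of BU + Z for one cell
assert closed_form_state(0.0, c, 1.0, 1) == c

# For a smaller timestep the closed form matches the step-by-step iteration.
difference = closed_form_state(0.0, c, 0.1, 30) - iterated_state(0.0, c, 0.1, 30)
assert abs(difference) < 1e-9
```

The closed form replaces n passes over the image with a single pass, which is where the speed-up described for the A = 0 case comes from.
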
Speed, accuracy and limitations of the CNN simulator plugin

As mentioned earlier, every CNN simulator has to make a trade-off between speed and accuracy. During the development of the CNN AviSynth plugin, the primary target was ordinary PCs of the kind now available in every household. As such, no specific hardware was expected to be available to aid the processing of the CNN simulator. This means that the simulator is not as robust as other, specialized CNN simulators, but it is reasonably accurate and fast. By selecting the right timestep and iteration count for a given template, very good and accurate results can be achieved.

I cannot stress enough that the most important parameters of the CNN simulator are the timestep and the iteration count (along with the cloning templates, of course). For fine results, choose a relatively small timestep (around 0.1-0.2) and a high iteration count (around 20-40). This will most likely give good results, but processing speed will drop to a crawl. For quasi real-time performance (15-25 FPS), set the iteration count to 1-5 and increase the timestep accordingly. Also, make sure not to process images with too high a resolution. Resolutions around 400x300 with an iteration count of 1-5 should give good performance.

It has to be noted that when the A template is fully 0, the modified form of the state equation is used (as presented in Handling special cases for increasing performance). The iterations parameter then no longer specifies the number of iterations, as that is always 1 in this case, but is used in a different manner. See the noted section for details.

Though the code is reasonably optimized, a great deal of floating point calculation is required, as well as many memory accesses, which has a huge impact on performance.

The simulator correctly simulates the state-transition rule using the Euler integration method. Though it is the fastest, it may not give the most accurate result.
This limitation comes from the design decision to favor speed.

An up-to-date version of this document can be found at: http://digitus.itk.ppke.hu/~oroba/cnn/ along with other useful information and the CNN simulator program itself. Make sure to check out the following directories:

http://digitus.itk.ppke.hu/~oroba/cnn/documentation/ (ppt presentation!)
http://digitus.itk.ppke.hu/~oroba/cnn/pictures/ (screenshots!)

References:

Luigi Fortuna, Paolo Arena, Dávid Bálya and Ákos Zarándy: Cellular Neural Networks: A paradigm for Nonlinear Spatio-Temporal Processing
R. Carmona, R. Domínguez Castro, S. Espejo and A. Rodríguez-Vázquez: Behavioral Modeling and Simulation of CNN Chips