
An ego-centric and tangible approach to meeting indexing and browsing

Denis Lalanne, Florian Evequoz, Maurizio Rigamonti, Bruno Dumas, Rolf Ingold

DIVA Group, Department of Informatics, University of Fribourg, Bd. de Pérolles 90

CH-1700 Fribourg, Switzerland

{firstname.lastname}@unifr.ch http://diuf.unifr.ch/diva

Abstract.

This article presents an ego-centric approach to indexing and browsing meetings. The method relies on two concepts: live annotation of meetings through intentional tangible actions, and alignment of meeting data with individuals’ personal information. The article first introduces the concept and presents a brief state of the art of tangible interaction and of document-centric multimedia browsing, in which documents serve as an interesting type of tangible object. It then presents a first application of this idea to multimedia browsing and discusses the approach in detail in the context of meeting recording, indexing and browsing.

1 Introduction

With the constant growth of the information a person owns and handles, it is particularly important to find ways to support information personalization and organization. Information is dematerializing in our daily and professional lives, and people therefore often experience the “lost in infospace” effect. Our documents are multiplying in very large file hierarchies, meeting attendance is increasing, emails are no longer organized, our pictures are no longer stored in photo albums, our CDs are taking the form of mp3 files, etc. What we often miss in our daily and professional lives are personal shortcuts to our information, either tangible or digital, such as the books on our shelves or the librarian who knew our interests.

Google and Microsoft recently tried to solve the “lost-in-infospace” issue by providing, respectively, a desktop search engine and a powerful email search engine, in order to minimize the effort people make to organize their documents and access them later by browsing. However, in order to find a file, one has to remember a set of keywords or at least remember its “virtual” existence. If one does not remember having a certain document, browsing can still be helpful. Browsing can reveal related keywords and documents that help one remember, since it works by association, as human memory does (Lalanne, 2005, Rigamonti, 2005, Whittaker, 2006). For this reason, information is generally easier to retrieve, and to “remember”, if it is associated with personal information, either digital or physical.

Meetings are a particularly interesting example where personalized access would be useful. Meetings are central to our professional lives, not only formal meetings but also meetings at the coffee machine. Numerous recent works have tried to support the meeting process with recorders, analyzers and browsers; however, most of those projects try to automate the whole process for every user, in line with the long-term goal of automating the authoring of meeting minutes. Our claim is that replaying a meeting is personal, in that it depends on each individual’s interests, and that it is hard to reach agreement on what is interesting for everybody during a meeting. For this reason, we propose in this article a complementary, ego-centric approach, which considers (a) meeting participants as potential live annotators and (b) persons who consult meetings afterwards as individuals with their own interests, derived from their personal information.

The first application is briefly presented in this article, and a larger part is dedicated to the meeting scenario. The next section gives a brief state of the art of tangible user interfaces, followed by a state of the art of past and current document-centric browsers. The third section presents the first application of our concept, i.e. a tangible multimedia browser developed at the University of Fribourg.

The fourth section presents our ego-centric approach to indexing and browsing meetings, first with tangible live annotations and then through alignment with the personal information structure. A tangible document-centric browser is then presented as an illustrative application. Finally, the last section presents our concluding remarks and the future work envisioned.

2 State of the art

We believe tangible interfaces can be useful for establishing links between our memory and information. They root abstract information in the real world through tangible reminders, i.e. tiny tagged physical objects containing a link to information sources that can be accessed by several devices. We consider them tangible hypermarks, i.e. embedded hyperlinks to abstract information attached to everyday physical objects. This section presents a brief state of the art of tangible user interfaces and of document-centric multimedia browsers, which we consider an interesting case of tangible interaction in the context of meetings. Finally, it gives a brief introduction to the domain of personal information management.

2.1 Tangible User Interfaces

Tangible User Interfaces (TUIs) are a genre of human-computer interaction that uses spatially reconfigurable physical objects as representations and controls for digital information [1]. They give a physical form to digital information: they are physical artifacts used as interfaces to control and interact with it. We present in this section several recent research projects that are related to our vision. The realization of those projects supports the technological feasibility of our project and, through their user evaluations, its usefulness.

Phidgets, or physical widgets, are building blocks that help a developer construct physical user interfaces [2][3]. The philosophy behind phidgets is: … just as widgets make GUIs easy to develop, so could phidgets make the new generation of physical user interfaces easy to develop. Phidgets arose out of a research project directed by Saul Greenberg at the Department of Computer Science, University of Calgary.

The DataTiles system allows people to physically express and manipulate database queries. It integrates the benefits of two major interaction paradigms: graphical and physical user interfaces [4]. Tagged transparent tiles are used as modular construction units. These tiles are augmented by dynamic graphical information when they are placed on a sensor-enhanced flat panel display. They can be used independently or can be combined into more complex configurations, similar to the way language can express complex concepts through a sequence of simple words. This system demonstrates ways in which tangible interfaces can manipulate larger aggregates of information. One of these query approaches has been evaluated in a user study, in which it compared favorably with a best-practice graphical interface alternative.

The mediaBlocks system enables people to use physical blocks to “copy and paste” digital media between specialized devices and general-purpose computers, and to physically compose and edit this content (e.g., to build multimedia presentations). The mediaBlocks project is the closest to our vision [5][32]. MediaBlocks is a tangible interface for physically capturing, transporting, and retrieving online digital media, as well as for physically and digitally manipulating this media. MediaBlocks do not actually store media internally. Instead, they are embedded with digital ID tags that allow them to function as “containers” for online content, or alternately expressed, as a kind of physically embodied URL. MediaBlocks interface with media input and output devices such as video cameras and projectors, allowing digital media to be rapidly “copied” from a media source and “pasted” into a media display. MediaBlocks are also compatible with traditional GUIs, providing seamless gateways between tangible and graphical interfaces. Finally, mediaBlocks are used as physical “controls” in tangible interfaces for tasks such as sequencing collections of media elements.

The DigitalDesk supports interaction with paper. Instead of trying to use computers to replace paper, the DigitalDesk takes the opposite approach: it keeps the paper, but uses computers to make it more powerful. It provides a Computer Augmented Environment for paper. The DigitalDesk is built around an ordinary physical desk and can be used as such, but it has extra capabilities. A video camera is mounted above the desk, pointing down at the work surface. The camera output is fed through a system that can detect where the user is pointing and can read documents that are placed on the desk. A computer-driven electronic projector is also mounted above the desk, allowing the system to project electronic objects onto the surface and onto real paper documents, something that cannot be done with flat display panels or rear projection. The system is called DigitalDesk because it allows pointing with fingers [6][7].

2.2 Document-Centric multimedia Browsers

Static documents have been used successfully in various browsers and interfaces as artifacts for accessing multimodal information. Most existing works and evaluations are centered on static documents used in the form of augmented paper.

This section does not summarize all of these works, but only presents recent and significant research. RASA [9] is an environment that augments paper maps with Post-it™ notes and is used in military command posts for field training exercises. Users interact with the environment through voice commands or by writing symbols on notes, which are interpreted by a gesture recognizer. RASA’s feedback is visual information projected on the map. Using augmented paper maps has various advantages, such as collaboration between users, robustness of the system, user acceptance, etc. In the Books with Voices [5] project, printed books are augmented with barcodes, which are read in order to play the corresponding oral histories on PDAs. This project is founded on the observation that several historians, although they have both the printed document and the audiotapes at their disposal, prefer to search for information in the transcript, because it contains page numbers, indexes, etc. The feedback of the historians involved in the evaluation was enthusiastic. Listen Reader [2] is another exciting experience in which users read a book while the sound environment evolves automatically. The system uses RFID technology to detect the current page and electric fields to sense the proximity of the hand to text and images, in order to adapt the audio atmosphere to the book content. Palette [10] allows preparing, reordering and controlling slideshow presentations using printed index cards, each of which contains a reproduction of the slide along with a barcode. This project has shown through user evaluation that navigation based on physical cards allows users to concentrate exclusively on the communication task. Another project augmenting printed documents aims at creating interactive paper maps for tourists [11]; users point a digital pen at an area of the map, which is linked with information grouped in categories such as entertainment, art, etc. System feedback can be either displayed on PDAs or rendered as voice in an earpiece.

This brief overview of existing projects using static documents as interfaces to multimedia information or services shows how well documents are accepted by users and how well suited they are to collaborative environments, of which meetings are a clear case.

2.3 Personal Information Management

Personal information management (PIM) supports the daily life activities people perform in order to manage information items such as documents (paper-based and digital), web pages and email messages. PIM is generally user-centered and considers the tasks and various roles of a person (e.g. employee, parent, friend, etc.). The major goal of PIM is to provide the right information in the right place, with sufficient completeness to meet the user’s needs, and no more.

Two major ways of tackling PIM have been considered, using either emails (Whittaker) or the agenda (S. Jerrold Kaplan) as hubs through which personal information transits. However, it does not seem sufficient simply to study, for example, e-mail use in isolation; a PIM tool must be assessed in the broader context of a person’s various PIM activities.

3 Managing our daily life information

The goal within this application is to design, implement and evaluate tangible reminders facilitating the control, personalization and materialization of our daily life personal multimedia information. The following scenario illustrates the basic functionalities of our system:

Sandra created a folder full of pictures from her last vacation in Corsica. She glues an RFID (Radio Frequency Identification) tag on a nice seashell she brought back from Corsica and asks the system to associate it with the picture folder. One week later she wants to show her friends a slideshow of her vacation. She places the seashell, together with a “jazz” MeModule, in a little hole next to the plasma screen, which activates the show. Denis, who enjoyed the evening and the pictures, gives his calling card to Sandra. The following day, Sandra places Denis’ calling card along with the seashell on top of the communicator and makes a brief gesture to send everything.

At the time of writing, we have implemented several prototypes of this concept for managing personal information, among which Elcano. The goal of the Elcano project [1] was to develop a simple tangible browser providing original views of the multimedia content of a storage medium, facilitating navigation using tangible sorting and filtering mechanisms and interactive visualizations. In this case, a memory stick is used as the storage medium to provide mobility to the user.

Elcano provides interactive visualizations to ease navigation through a large number of documents. The main visualization is divided into two parts. In the left part, documents are plotted along two axes and are represented by circles in the resulting scatter plot; circle colors are mapped onto file types (jpg, mp3, avi), and a circle’s size represents the size of the document it stands for. To navigate in this plot, i.e. to select a subset of documents, two physical sliders, each connected to one axis, are used to move a rectangular selection box. A special “rotator” button may also be turned to resize the selection area, or pushed to zoom in and out in a cyclic way. When zooming in, the scatter plot is rescaled to contain the selected elements only. Hence the navigation space is narrowed down through physical filtering. In the right part of the visualization, a “sunburst” represents a sample of the tree structure of the memory stick. The directories to which the selected documents belong are highlighted in the sunburst. This gives a compact preview of the selected documents’ locations in the tree structure, rather than the exact directories containing the documents, which would take up more room.
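The selection and zoom behaviour described above can be sketched in a few lines. This is only an illustration under stated assumptions, not the Elcano implementation: the names `Doc`, `SelectionBox`, `select` and `zoom_in` are hypothetical, coordinates are assumed to be normalised to [0, 1], and the box is simplified to a square whose half side is set by the rotator.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Doc:
    name: str
    x: float  # position on the horizontal axis, normalised to [0, 1]
    y: float  # position on the vertical axis, normalised to [0, 1]


@dataclass(frozen=True)
class SelectionBox:
    cx: float    # centre on the horizontal axis, driven by the first slider
    cy: float    # centre on the vertical axis, driven by the second slider
    half: float  # half side length (> 0), driven by turning the rotator

    def contains(self, doc: Doc) -> bool:
        return (abs(doc.x - self.cx) <= self.half
                and abs(doc.y - self.cy) <= self.half)


def select(docs, box):
    """Return the documents that fall inside the selection box."""
    return [d for d in docs if box.contains(d)]


def zoom_in(docs, box):
    """Keep the selected documents and rescale them so the box fills the plot."""
    side = 2 * box.half
    left, bottom = box.cx - box.half, box.cy - box.half
    return [Doc(d.name, (d.x - left) / side, (d.y - bottom) / side)
            for d in select(docs, box)]


# Hypothetical sample data
docs = [Doc("a.jpg", 0.10, 0.20), Doc("b.mp3", 0.50, 0.50), Doc("c.avi", 0.60, 0.40)]
box = SelectionBox(cx=0.5, cy=0.5, half=0.25)
selected = select(docs, box)
zoomed = zoom_in(docs, box)
```

Each zoom step shrinks the candidate set, which is the "physical filtering" effect: the sliders and the rotator only change `cx`, `cy` and `half`.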

When a satisfying subset of documents has been selected in the scatter plot, the user may switch to the documents wheel visualization by clicking on the appropriate button. In the documents wheel, document names are displayed around the perimeter. Turning the rotator button rotates the circle in order to select a particular document. Pushing the rotator button opens the selected document. Three additional buttons map onto other tasks: (1) create an album, i.e. a link between a personal object and a document, (2) read an album and (3) switch back to the scatter plot view. The technology used to attach a document to a personal object is explained below.
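The "create an album" and "read an album" tasks amount to maintaining a table from tag identifiers to documents. The sketch below is hypothetical: `AlbumRegistry`, the tag identifier and the file paths are made up for illustration, and the way Elcano actually stores these links is not described in this article.

```python
class AlbumRegistry:
    """Maps the RFID tag identifier of a personal object to bound documents."""

    def __init__(self):
        self._albums = {}

    def create_album(self, tag_id, document_path):
        """Bind a document to the personal object carrying tag_id."""
        documents = self._albums.setdefault(tag_id, [])
        if document_path not in documents:  # avoid duplicate links
            documents.append(document_path)

    def read_album(self, tag_id):
        """Return the documents bound to tag_id (empty list if unknown)."""
        return list(self._albums.get(tag_id, []))


# Hypothetical usage: a tagged seashell bound to two files
registry = AlbumRegistry()
registry.create_album("04A1B2C3", "photos/corsica/beach.jpg")
registry.create_album("04A1B2C3", "music/jazz/track01.mp3")
album = registry.read_album("04A1B2C3")
```

Because the object only carries an identifier, the documents themselves can stay on the storage medium; the physical object acts as a shortcut to them.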

Fig. 1. Tangible multimedia browsing with Elcano.

Sorting and filtering algorithms, as well as binding to personal objects, are activated using physical tokens augmented with RFID tags. Two RFID antennas are used to control the sorting algorithms of the visualization. In the scatter plot visualization, filtering and sorting mechanisms are available. Filtering reduces the number of documents displayed, while sorting algorithms applied to the axes of the scatter plot reorganize the layout of the documents accordingly. Three sorting algorithms apply to any type of file: alphabetical order, modification date and frequency of use. The remaining sorting algorithms are suited to music files: album date, author name, song title and song style. A third RFID reader allows users to associate documents with personal objects, in order to create a direct link between one’s digital memories and an object of the real world.
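As a rough illustration of token-driven sorting, the sketch below maps the tag read by one antenna to the sort key applied on the corresponding axis. The tag identifiers, the `FileInfo` record and the `axis_order` function are assumptions made for this example; only the three generic criteria named above are covered.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class FileInfo:
    name: str
    modified: date
    use_count: int


# Hypothetical tag identifiers, one physical token per sorting criterion
SORT_TOKENS = {
    "TAG-ALPHA": lambda f: f.name.lower(),  # alphabetical order
    "TAG-DATE": lambda f: f.modified,       # modification date, oldest first
    "TAG-FREQ": lambda f: -f.use_count,     # frequency of use, most used first
}


def axis_order(files, tag_id):
    """Order files along one axis according to the token on its antenna."""
    key = SORT_TOKENS.get(tag_id)
    if key is None:
        return list(files)  # unknown token: keep the current order
    return sorted(files, key=key)


# Hypothetical sample data
files = [
    FileInfo("Zebra.jpg", date(2006, 1, 5), 3),
    FileInfo("apple.mp3", date(2005, 12, 1), 10),
    FileInfo("mango.avi", date(2006, 2, 1), 1),
]
by_name = [f.name for f in axis_order(files, "TAG-ALPHA")]
by_date = [f.name for f in axis_order(files, "TAG-DATE")]
by_use = [f.name for f in axis_order(files, "TAG-FREQ")]
```

With two antennas, one such ordering would be computed per axis, and the rank of a document in each ordering would give its coordinates in the scatter plot.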

Elcano is a good example of augmented virtuality: the virtual world is augmented with physical access to information. Further, Elcano shows how tangible user interfaces can be used not only to manipulate multimedia digital data but also to allow end users to program their own links between digital information and tangible personal objects. A heuristic evaluation of Elcano has been performed by three usability experts to detect usability problems. The experts followed a list of ten themes, which guided them in discovering 30 major usability problems, mainly falling in the category “Match between system and the real world”. For example, the position of the phidgets was not found adequate. Most usability problems have been fixed, and a satisfaction evaluation conducted afterwards with 8 users showed encouraging results: users found the visualizations useful and most of them were in favor of using the system at home, although they experienced some difficulties in interacting with the tangible devices.

4 Ego-centric indexing and browsing of meetings

The Smart Meeting Room application has been built in the context of the NCCR on Interactive Multimodal Information Management (IM2). This application aims at recording meetings with audio/video devices, and further at analyzing the captured data in order to create indexes to retrieve interesting parts of meeting recordings.

In this context, the University of Fribourg was in charge of analyzing the static documents and aligning them, i.e. synchronizing them, with the other meeting media.

Within the Smart Meeting Room application, our goal is to support the following tasks:

Interacting in real time with the meeting room and the meeting documents in order to improve human/human communication during meetings, reduce the intrusiveness of devices, etc.;

Personal live annotation of meetings using tangible interaction techniques;

Browsing through multimedia meeting archives via personal information structure and tangible interactors.

Fig. 2. Fribourg Smart Meeting Room environment.

4.1 Fribourg Smart Meeting Room and intentional live tangible annotations

Roughly 30 hours of meetings have been recorded at the University of Fribourg thanks to a room equipped with 10 cameras (8 close-ups, one per participant, and 2 overviews), 8 microphones, a video projector, a camera for capturing the projection screen and several cameras for capturing documents on the table. The synchronization of camera and microphone pairs is guaranteed, and they are all plugged into a single capture PC.

4.2 Indexing and browsing meetings through personal information structure

The goal of this approach is to elicit the implicit structure of our mailbox, to organize our personal information around it, and to use this structure to browse meeting archives contextually.

Indeed, email is an important part of our personal information. A standard mailbox typically contains information about the activities, topics of interest, friends and colleagues of the owner, as well as relevant temporal features associated to activities or relationships. Therefore, it is a rich entry point into our personal information space.

Three dimensions of personal information are particularly well represented in email: the thematic, social and temporal dimensions.

Further, cross-media alignment techniques will be implemented to link documents to the elicited email structure. More specifically, the purpose is to provide solutions and techniques to index, structure and browse Personal Information (PI):

Index and link PI. Cross-media information mining techniques shall be used to generate metadata, on top of which thematic, temporal or social links shall be created, connecting information of heterogeneous types (documents, pictures, music, people, etc.) and thus building a personal information network.

Mine and structure PI. The system will provide means to organize personal information in various ways, combining automatic clustering techniques based on information features with user-assisted clustering. This method should reinforce users’ feeling of control over the organization of their personal information.

Browse and retrieve PI. Views of personal data, notably based on clusters, should allow various levels of focus, provide details on demand and filtering mechanisms, and be usable enough to invite browsing information instead of brute-force searching.

Fig. 3. Meeting archives browsing through personal information structure.

Using a similarity measure based on the co-occurrences of words in the subjects and contents of emails, a hierarchical clustering has been performed, which aims at finding a thematic organization of emails. The result of this clustering is fed into a treemap visualization. Furthermore, a social network has been built using similarity measures between people’s email addresses. The result of this analysis is a social network graph. However, no single dimension can fully grasp the complexity of one’s mailbox. Therefore, our plan is to combine and link several visualization techniques applied to each dimension to help users browse through their personal email archives.
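To make the thematic clustering step more concrete, here is a minimal sketch of agglomerative clustering over emails. It is not the method used in our prototype: the similarity is a plain Jaccard overlap between word sets, standing in for the co-occurrence-based measure, and the function names, the `threshold` parameter and the sample emails are all assumptions made for the example.

```python
import re
from itertools import combinations


def words(text):
    """Lower-cased set of alphabetic words found in an email."""
    return set(re.findall(r"[a-z]+", text.lower()))


def jaccard(a, b):
    """Share of words that two emails have in common."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def cluster(emails, threshold=0.2):
    """Greedy average-linkage agglomerative clustering.

    emails: list of strings (subject and body concatenated).
    Returns a sorted list of clusters, each a sorted list of email indices.
    """
    bags = [words(e) for e in emails]
    clusters = [[i] for i in range(len(emails))]

    def linkage(c1, c2):
        pairs = [(i, j) for i in c1 for j in c2]
        return sum(jaccard(bags[i], bags[j]) for i, j in pairs) / len(pairs)

    while len(clusters) > 1:
        # Find the most similar pair of clusters
        (a, b), best = max(
            (((a, b), linkage(clusters[a], clusters[b]))
             for a, b in combinations(range(len(clusters)), 2)),
            key=lambda item: item[1],
        )
        if best < threshold:
            break  # remaining clusters are too dissimilar to merge
        merged = sorted(clusters[a] + clusters[b])
        clusters = [c for k, c in enumerate(clusters) if k not in (a, b)] + [merged]
    return sorted(clusters)


# Hypothetical sample mailbox
emails = [
    "Meeting agenda for the project review",
    "Project review meeting moved to Friday",
    "Holiday pictures from Corsica",
    "More pictures from the Corsica holiday",
]
themes = cluster(emails)
```

Recording the order of the merges, rather than only the final groups, would give the hierarchy that a treemap needs.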

Using the AMI meeting corpus, which notably includes email data, we plan to lay the foundations of our egocentric meeting browser, taking advantage of thematic, social and temporal clustering metadata to guide the user towards the desired piece of information in meetings.

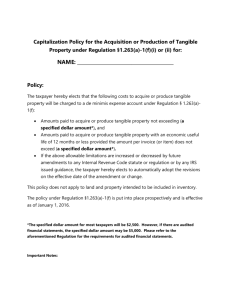

4.3 TDoc: a toy example of tangible meeting browser

In addition, we believe that physical documents, i.e. printed documents, can help achieve the same goal as tangible personal objects. Because paper documents are also more familiar and more easily handled than electronic ones, there is a good chance that a physical-document-centric browser would perform better than a purely digital-document-centric browser. From this observation emerged the idea of developing a “tangible” document-centric meeting browser, aimed at evaluating the usefulness of physical documents for accessing digital multimodal meeting information.

TDoc is a first prototype extension of the JFriDoc meeting browser that provides exclusively tangible interaction. By means of printed versions of the documents discussed or presented during a meeting, the user can access the vocal content recorded during that meeting that relates to a given paragraph. The low-fidelity prototype of TDoc shown in Fig. 4 was built in a few hours. It uses Phidgets [4] and ordinary computer speakers as its only input/output devices. No screen, keyboard or mouse is used. The tangible and audio devices are an interface to the behind-the-scenes JFriDoc meeting browser. As said above, the printed documents are the preferred entry points: as soon as the user places a document on the frame, the document is recognized by means of an RFID tag. With the help of a sliding arrow placed to the left of the frame, along the side of the chosen document, the user can then select a paragraph of interest. An IR distance sensor tracks the position of the sliding arrow in order to find the text block pointed at. A motor then rotates a graduated wheel, which shows the number of speech utterances linked to the selected text block. Using another physical slider, the user can browse through these utterances and listen to them in turn. The prototype described here will be extended in the future to improve paragraph selection, to allow more precise queries (for example to filter out the utterances of a particular speaker), etc.
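The interaction loop described above can be summarised as two lookups: from arrow position to text block, and from text block to linked utterances. The sketch below is hypothetical: the page layout, the utterance time spans and the function names are invented for illustration and do not come from TDoc or JFriDoc.

```python
from bisect import bisect_right

# Hypothetical layout: top edge of each text block, in cm from the top of the frame
BLOCK_TOPS_CM = [0.0, 4.0, 9.5, 15.0, 22.0]
PAGE_HEIGHT_CM = 29.7

# Hypothetical alignment data: text block index -> (start_s, end_s) of linked utterances
UTTERANCES = {
    0: [(12.0, 18.5)],
    1: [(40.2, 55.0), (130.0, 141.3)],
    2: [],
    3: [(300.0, 312.4), (315.0, 320.1), (900.5, 910.0)],
    4: [(1200.0, 1230.0)],
}


def block_at(distance_cm):
    """Map the arrow position measured by the distance sensor to a block index."""
    d = min(max(distance_cm, 0.0), PAGE_HEIGHT_CM)  # clamp noisy readings
    return bisect_right(BLOCK_TOPS_CM, d) - 1


def utterance_for(distance_cm, slider):
    """Return (count, utterance) for the pointed block; slider is in [0, 1].

    count is the value the graduated wheel would display.
    """
    linked = UTTERANCES.get(block_at(distance_cm), [])
    if not linked:
        return 0, None
    index = min(int(slider * len(linked)), len(linked) - 1)
    return len(linked), linked[index]


count, span = utterance_for(16.0, 0.5)
```

In a real prototype the distance readings would need calibration and smoothing before being mapped to blocks; the clamp above only guards against out-of-range values.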

Fig. 4. First prototype of the TDoc tangible document-centric meeting browser.

5 Conclusion

This article presents an ego-centric and tangible approach to meeting recording and browsing. The proposed approach benefits both from tangible bookmarking mechanisms and from the alignment of personal information with meeting archives. The systems presented in this article are still in their infancy, but the user feedback gathered on our tangible multimedia browser encourages us in that direction.

Our work on tangible document-centric browsing is a first step towards the involvement of physical documents, and more generally of tangible interactions, at several stages of meeting recording, analysis and browsing. Tangible objects could indeed be engaged in three different tasks in that context: (1) aid “live” annotation of the meeting by providing tangible and collaborative mechanisms, for instance bookmarking mechanisms or even voting cards (agreement/disagreement, request for a turn, etc.) that could be used for later browsing; (2) serve as triggers to access services provided by the meeting room; (3) help in browsing a recorded and fully annotated meeting.

Finally, this article presented a first prototype for the automatic clustering and browsing of emails, which we plan to use as a central structure for building personal access to meeting archives through the alignment of emails and meetings. Using the AMI meeting corpus, which notably includes email data, we plan to lay the foundations of our egocentric meeting browser, taking advantage of thematic, social and temporal clustering metadata to guide the user towards the desired piece of information in meetings.

References

1. Omar Abou Khaled, Denis Lalanne, Rolf Ingold, "MEMODULES as tangible shortcuts to multimedia information", Project specification, June 2005.

2. Back, M., Cohen, J., Gold, R., Harrison, S., Minneman, S.: Listen Reader: An Electronically Augmented Paper-Based Book. In proceedings of CHI 01, ACM Press, Seattle, USA (2001) 23-29

4. Greenberg, S., Fitchett, C.: Phidgets: Easy Development of Physical Interfaces through Physical Widgets. In proceedings of the ACM UIST 2001, ACM Press, Orlando, USA (2001) 209-218

5. Klemmer, S.R., Graham, J., Wolff, G.J., Landay, J.A.: Books with Voices: Paper Transcripts as a Tangible Interface to Oral Histories. In proceedings of CHI 03, ACM Press, Ft. Lauderdale, USA (2003) 89-96

8. Lalanne, D., Lisowska, A., Bruno, E., Flynn, M., Georgescul, M., Guillemot, M., Janvier, B., Marchand-Maillet, S., Melichar, M., Moenne-Loccoz, N., Popescu-Belis, A., Rajman, M., Rigamonti, M., von Rotz, D., Wellner, P.: The IM2 Multimodal Meeting Browser Family, IM2 technical report (2005)

9. McGee, D.R., Cohen, P.R., Wu, L.: Something from nothing: Augmenting a paper-based work practice with multimodal interaction. In proceedings of DARE 2000, Helsingor, Denmark (2000) 71-80

10. Nelson, L., Ichimura, S., Pedersen, E.R., Adams, L.: Palette: A Paper Interface for Giving Presentations. In proceedings of CHI 99, ACM Press, Pittsburgh, USA (1999) 354-361

11. Norrie, M.C., Signer, B.: Overlaying Paper Maps with Digital Information Services for Tourists. Proceedings of ENTER 2005, 12th International Conference on Information Technology and Travel & Tourism, Innsbruck, Austria (2005) 23-33

12. Rigamonti, M., Lalanne, D., Evéquoz, F., Ingold, R.: Browsing Multimedia Archives Through Intra- and Multimodal Cross-Documents Links. In proceedings of MLMI 05, LNCS 3869, Springer-Verlag, Edinburgh, Scotland (2006) 114-125

13. Ullmer, B.: Tangible Interfaces for Manipulating Aggregates of Digital Information. Doctoral dissertation, MIT Media Lab (2002)

14. J. Rekimoto, B. Ullmer and H. Oba. DataTiles: A Modular Platform for Mixed Physical and Graphical Interactions. Proceedings of ACM CHI 2001 Conference, pp. 269-276.

15. B. Ullmer and H. Ishii. mediaBlocks: Tangible Interfaces for Online Media. Proceedings of ACM CHI 1999 Conference (extended abstracts), pp. 31-32.

16. Pierre Wellner: Interacting with Paper on the Digital Desk. Commun. ACM 36(7): 86-96 (1993).

17. Denis Lalanne, Rolf Ingold, Didier von Rotz, Ardhendu Behera, Dalila Mekhaldi, Andrei Popescu-Belis. "Using static documents as structured and thematic interfaces to multimedia meeting archives". In Bourlard H. & Bengio S., eds. (2004), Multimodal Interaction and Related Machine Learning Algorithms, LNCS, Springer-Verlag, Berlin, ISBN: 3-540-24509-X, pp. 87-100.

18. Denis Lalanne, Dalila Mekhaldi and Rolf Ingold. "Talking about documents: revealing a missing link to multimedia meeting archives", Document Recognition and Retrieval XI, IS&T/SPIE's International Symposium on Electronic Imaging 2004, San Jose, California, January 18, 2004, SPIE Vol. 5296, ISBN / ISSN: 0-8194-5199-1, pp. 82-91.

19. Pierre Wellner, Mike Flynn, Simon Tucker, Steve Whittaker. A meeting browser evaluation test. Conference on Human Factors in Computing Systems (CHI '05), extended abstracts. Portland, OR, USA, pp. 2021-2024.