ArabicVoiceRecognition1


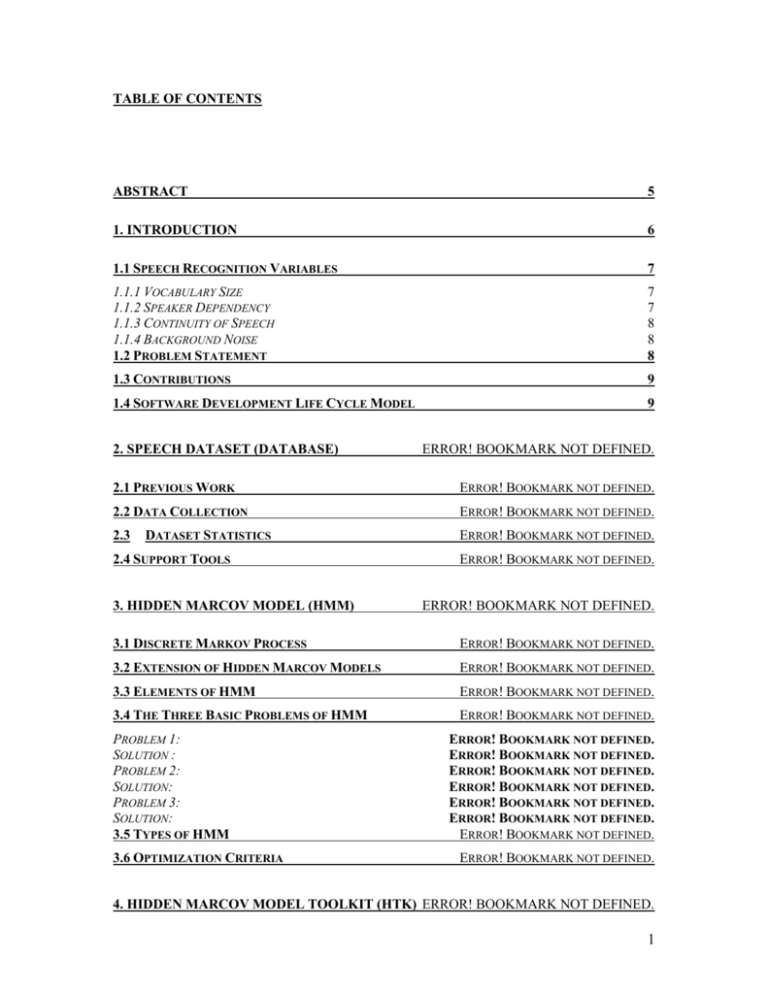

TABLE OF CONTENTS

ABSTRACT
1. INTRODUCTION
  1.1 SPEECH RECOGNITION VARIABLES
    1.1.1 VOCABULARY SIZE
    1.1.2 SPEAKER DEPENDENCY
    1.1.3 CONTINUITY OF SPEECH
    1.1.4 BACKGROUND NOISE
  1.2 PROBLEM STATEMENT
  1.3 CONTRIBUTIONS
  1.4 SOFTWARE DEVELOPMENT LIFE CYCLE MODEL
2. SPEECH DATASET (DATABASE)
  2.1 PREVIOUS WORK
  2.2 DATA COLLECTION
  2.3 DATASET STATISTICS
  2.4 SUPPORT TOOLS
3. HIDDEN MARKOV MODEL (HMM)
  3.1 DISCRETE MARKOV PROCESS
  3.2 EXTENSION OF HIDDEN MARKOV MODELS
  3.3 ELEMENTS OF HMM
  3.4 THE THREE BASIC PROBLEMS OF HMM
    PROBLEM 1
    SOLUTION
    PROBLEM 2
    SOLUTION
    PROBLEM 3
    SOLUTION
  3.5 TYPES OF HMM
  3.6 OPTIMIZATION CRITERIA
4. HIDDEN MARKOV MODEL TOOLKIT (HTK)
  4.1 SIMPLE HTK EXAMPLE
  4.2 DIFFICULTIES IN HTK
5. MODELING AND LABELING TECHNIQUES
  5.1 EXAMPLE ON WORD LEVEL LABELING USING HTK
6. SPEECH RECOGNITION DIFFERENT LABELING SCHEMES EXPERIMENTS
  6.1 LABELING ON WORD LEVEL
  6.2 PHONETIC-BASED LABELING
  6.3 LITERAL-BASED LABELING (OLD VERSION)
  6.4 LITERAL-BASED LABELING (NEW VERSION)
  6.5 COMPARISON BETWEEN THE FOUR METHODS
  DISCUSSION
7. RESULTS OF WORD-LEVEL LABELING
8. RESULTS OF LITERAL-BASED LABELING
9. RESULTS OF PHONETIC-BASED LABELING
10. CONCLUSION
  10.1 FUTURE WORK
REFERENCES

LIST OF TABLES

Table 2.1: The dataset parts
Table 2.2: The names that were used in the SR system and the number of different samples for each name
Table 4.1: Brief description of some HTK tools
Table 4.2: Files needed to build an HTK example
Table 4.3: Files generated from HTK example
Table 5.1: Results of comparing word level and letter level
Table 6.1: The classes included in the small project and the class sizes
Table 6.2: Results on multi-speaker dataset using the word-level labeling
Table 6.3: Results on single-speaker dataset using the word-level labeling
Table 6.4: Set of rules used in phonetic-based labeling
Table 6.5: Results on multi-speaker dataset using the phonetic-based labeling
Table 6.6: Results on single-speaker dataset using the phonetic-based labeling
Table 6.7: Set of rules used in literal-based labeling (old version)
Table 6.8: Results on multi-speaker dataset using the literal-based labeling (old version)
Table 6.9: Results on single-speaker dataset using the literal-based labeling
Table 6.10: Set of rules used in literal-based labeling (new version)
Table 6.11: Results on multi-speaker dataset using the literal-based labeling (new version)
Table 6.12: Results on single-speaker dataset using the literal-based labeling (new version)
Table 6.13: Comparison between different labeling techniques on the multi-speaker dataset
Table 6.14: Comparison between different labeling techniques on the single-speaker dataset
Table 7.1: Results of word-level labeling experiment
Table 8.1: Results of literal-based labeling experiment
Table 8.2: Confusion matrix of literal model on training set
Table 8.3: Confusion matrix of literal model on testing set
Table 9.1: Results of phonetic-based labeling experiment using monophones
Table 9.2: Confusion matrix of phoneme model on training set using monophones
Table 9.3: Confusion matrix of phoneme model on testing set using monophones
Table 9.4: Results of phonetic-based labeling experiment using triphones
Table 9.5: Confusion matrix of phoneme model on testing set using triphones
Table 9.6: Comparison between monophones and triphones results

TABLE OF FIGURES

FIGURE 3.1: A THREE-STATE HMM
FIGURE 3.2: ERGODIC HMM
FIGURE 3.3: LEFT TO RIGHT HMM

Abstract

Speech recognition has interested researchers for more than four decades. Arabic speech recognition has also drawn the interest of Arab researchers, but it has not reached good performance so far. This is because there is no existing speech dataset on which they can build their systems. In this project, we are going to build a speech recognition system using the HTK tool. We are also going to build a speaker-independent speech dataset that we can use to train and validate our system. This dataset can be helpful in other speech recognition research. We will also run our recognizer interactively so that it can be used in information retrieval applications.

1. Introduction

Speech recognition has interested researchers for more than four decades because of its wide range of applications. Speech recognition means recognizing the speech of a talker and transforming it into the language of the receiver [1]. For computers, automatic speech recognition means transforming human speech into text or into a command to the computer. It can be used in a wide range of applications, such as information retrieval or applications that simplify human-computer interaction.

Speech recognition is a very complex process, but we do not notice this because it comes naturally to humans. It is a large problem with many variables that can degrade recognition performance if they are ignored. Speech varies from one language to another, from one accent to another, and from one sound to another. It even varies for the same person from one time to another. Background noise can also affect recognition, as can the electrical characteristics of the telephone or any other electrical equipment [1].

The performance of automatic speech recognition has improved in the past few years because of researchers' interest in this field. This interest exists because speech is the simplest and fastest way for humans and computers to communicate. It saves users time and effort, which, besides accuracy, is the main goal of computers [1].

Applications of automatic speech recognition systems include telephone-operated services, airline reservation systems, systems that talk with an intelligent robot, learning systems, dictation systems, systems that help persons with special needs, and many others [5].

1.1 Speech Recognition Variables [1]

Automatic speech recognition has many variables that may affect its performance and increase the difficulty of building such a system.
We describe some of these variables below.

1.1.1 Vocabulary Size

The vocabulary size is the number of words that the system is expected to recognize. As the vocabulary size increases, recognition performance decreases.

1.1.2 Speaker Dependency

Based on this variable, speech recognition (SR) systems can be divided into two types:
Speaker-dependent SR system: a system that recognizes the speech of one speaker only, or of a set of speakers known to the system in advance.
Speaker-independent SR system: a system that can recognize the speech of any speaker. This is the most difficult type of SR system, and training it requires a large speech database.

1.1.3 Continuity of Speech

Based on this variable, SR systems can be divided into three types:
Isolated word recognition: recognizes only a single word at a time.
Discontinuous speech recognition: recognizes full sentences, but their words must be separated by silences.
Continuous speech recognition: recognizes natural human speech. This is the most difficult type of SR system.

1.1.4 Background Noise

As mentioned before, background noise may affect the recognition process. For this reason, some systems impose conditions on the environment, such as speaking in a quiet room, turning off the air conditioners in the room, or speaking into a special type of microphone.

The perfect speech recognition system would recognize human speech with good accuracy for an unlimited vocabulary, independently of the speaker, on continuous speech, and in any environment.

1.2 Problem Statement

Our problem in this project is to create a voice-directed phone directory system. We are going to build a speech recognition system that recognizes spoken Arabic names from any talker and then gives the user the phone number of the person asked for. Our system can then be expanded to serve other applications, because we will create the recognition system (the core).
It can be connected to any application; all that is needed is a new interface to the recognition system. Our recognition system will be an isolated-word, speaker-independent speech recognition system.

1.3 Contributions

What is new in this project is that it recognizes speech that arrives over the phone. Such speech comes with some noise and some loss of data due to the characteristics of the telephone line and its limited bandwidth. Many existing applications support dictation in Arabic, but there is so far no existing information retrieval system based on automatic speech recognition for Arabic.

1.4 Software Development Life Cycle Model

In this project, we are going to follow a development cycle model with the following phases to achieve our goal:
Speech Dataset Design and Development: we are going to design and build a very large speaker-independent speech dataset that will be used to train our recognition system. This dataset may also be used in the future to train other recognition systems.
Learning the Concept of the Hidden Markov Model (HMM) and Its Implementation Tool (HTK): we should learn the concept of the HMM, a statistical model that will be used to build the recognition models. This concept is implemented in a tool named the Hidden Markov Model Toolkit (HTK). We should learn how to use this tool and how to build a recognition system with it.
Modeling: in this step, we are going to build the recognition models and analyze the different modeling techniques available, with the advantages and disadvantages of each.
Implementation: we will implement our recognition system in this phase using HTK.
Testing: in this phase, we are going to run our recognizer, perform all the tests we need on it, and make sure that it is working well.

From each step we can cycle back to a previous step or steps to modify the system if any errors are found.
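
The separation described in Section 1.2, between the recognition core and the application that uses it, can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the names `RecognizerProfile`, `lookup_number`, and `fake_recognize` are hypothetical, the directory entries are placeholder data rather than names from the dataset, and the stand-in recognizer simply decodes bytes as text instead of calling an HTK-based model.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass(frozen=True)
class RecognizerProfile:
    """Design choices from Section 1.1 that describe a recognizer (hypothetical type)."""
    vocabulary: tuple          # closed set of words (here: names) the system knows
    speaker_independent: bool  # True: any talker, not only enrolled speakers
    continuity: str            # "isolated", "discontinuous", or "continuous"


def lookup_number(
    audio: bytes,
    recognize: Callable[[bytes], str],
    directory: Dict[str, str],
) -> Optional[str]:
    """Recognize a spoken name, then return its phone number (None if unknown)."""
    name = recognize(audio)      # the recognition core
    return directory.get(name)   # the application built around the core


# Placeholder directory: these names and numbers are invented for the example.
directory = {"ahmad": "555-0101", "fatima": "555-0102"}

# The system targeted in Section 1.2: isolated-word and speaker-independent.
profile = RecognizerProfile(
    vocabulary=tuple(directory),
    speaker_independent=True,
    continuity="isolated",
)


def fake_recognize(audio: bytes) -> str:
    # Stand-in for the real recognizer: treats the audio bytes as UTF-8 text.
    return audio.decode("utf-8")


number = lookup_number(b"fatima", fake_recognize, directory)
missing = lookup_number(b"unknown", fake_recognize, directory)
print(number, missing)
```

Because `lookup_number` receives the recognizer as a parameter, the same core could be connected to a different application by supplying a different interface around it, which is the expansion path that Section 1.2 describes.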