April 2001 - AstroGrid wiki

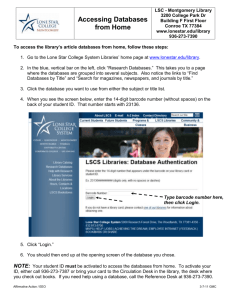

advertisement