Class Activity

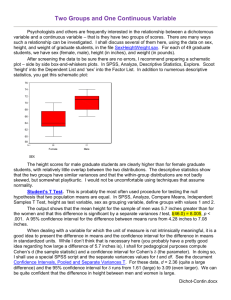

SPSS Workshop
Utilizing and implementing SPSS in our OC-Math statistics classes

My Name Is: .

Starting SPSS, entering and modifying data will be studied in this section.

□ Create a folder on the desktop under your name.
□ Then go to Start, Programs, Spss, Pasw Statistics 18 ….
□ Name variables in SPSS before entering data. Click the Variable View tab.
□ Enter a name for variable 1, then for variable 2, ...
□ Advice regarding Variable View (wait one minute) (bottom):
   1. In Variable View, you can NOT enter spaces in the name of a variable.
   2. Preferably, enter quantitative data.
   3. When you enter integer values, select 0 in Decimals.
   4. You can also enter labels. A label may contain spaces; it is the text displayed when the mouse is on that variable. Example: if you enter AgeMarr for the name of the variable, you can type Age When Got Married under labels.
   5. You can assign values to a variable, e.g. 1=female, 2=male.
□ Start typing data, or copy it from Excel or from another document.
□ Copying from Excel: mark the data and press Control-C, then go to SPSS and press Control-V.
□ Saving: click on File, Save, then browse to your folder and type example1.sav

Basic statistics will be studied in this section.

Mean, Mode, Standard Deviation (go to data window)
□ Analyze, Descriptive Statistics, Frequencies
□ Select your variables and move them into the Variable(s): pane on the right.
□ Click on Statistics and, in the new window (Frequencies Statistics), select Mean, Median, Mode and Standard Deviation, then Continue.
□ Click on Charts, select None.
□ Ok
□ In the Output Window: go to the left pane, click inside Frequency Table, and observe.

Bar, Histogram or Pie Charts (go to data window)
□ First, do Analyze, Descriptive Statistics, Frequencies. Select your variables and move them into the Variable(s): pane on the right.
□ Click on Charts, Bar Charts, click Continue, then click Ok.
□ Now do Analyze, Descriptive Statistics, Frequencies. Select your variables and move them into the Variable(s): pane on the right.
□ Click on Charts, Histograms, Show Normal Curve on Histogram, click Continue, then click Ok.
□ Finally, do Analyze, Descriptive Statistics, Frequencies, then Charts, Pie Charts, choose Frequencies or Percentages as the chart values, Continue, Ok.
□ In the Output Window: go to the left pane, click inside Frequencies, find your charts and observe.

Advanced statistics will be studied in this section.

Relationship: Correlation (go to data window)
□ Analyze, Correlate, Bivariate
□ In the Bivariate Correlations dialog window, select 2 to 5 variables.
□ Make sure Pearson, Two Tailed and Flag Signif... are checked, then Ok.
□ Output Window: Left pane: click on Correlations, then Correlations.

Relationship: Cross Tabs (go to data window)
□ Analyze, Descriptive Statistics, Crosstabs
□ Move variables from left to right, one into columns and one into rows.
□ Select Statistics, check Chi-square, then Continue, then click Ok.
□ Output Window: Left pane, almost at the bottom: click on Cross Tabs/Chi-Square.

Differences between two groups: Running the t-test, Independent Groups (go to data window)
□ Analyze, Compare Means, Independent-Samples T...
□ In Test Variable select one variable; in Grouping Variable select another. The grouping variable splits the cases into two groups so that the test can look for a difference between them.
□ Click Define Groups and either enter a Cut Point or specify the two group values (it depends on the data; e.g. 1 for female, 0 for male), then Continue, then Ok.
□ Output Window: Left pane: click on T-Test, then Independent Samples.

Linear Regression (I want to know how salary is related to GPA)
□ Analyze, Regression, Linear
□ Select the dependent and the independent variable.
□ Continue, then Ok.
□ Look at the Coefficients table in the output. Column B has a top and a bottom coefficient. For the line y=ax+b, coefficient a (the slope) is the bottom value in column B, and coefficient b (the intercept, in the Constant row) is the top value in column B.
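To make the meaning of the two values in column B concrete, the following is a minimal sketch of the same least-squares calculation done by hand in Python. The GPA and salary numbers and the function name `least_squares_line` are invented for illustration; they are not part of the workshop data, and SPSS reports many more statistics than this sketch computes.

```python
# Minimal sketch: the least-squares line y = a*x + b.
# ASSUMPTION: the GPA and salary values below are made up for illustration.

def least_squares_line(x, y):
    """Return (a, b): slope and intercept of the least-squares line."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Slope: sum of cross-deviations divided by sum of squared x-deviations.
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    a = sxy / sxx
    # Intercept: the fitted line passes through the point of means.
    b = mean_y - a * mean_x
    return a, b

gpa = [2.0, 2.5, 3.0, 3.5, 4.0]           # independent variable (x)
salary = [30.0, 34.0, 41.0, 44.0, 51.0]   # dependent variable (y), in thousands

a, b = least_squares_line(gpa, salary)
print(f"slope a = {a:.2f} (bottom value in column B)")
print(f"intercept b = {b:.2f} (top value in column B, the Constant row)")
```

With real data, the two printed numbers should agree with the bottom and top entries of column B, up to rounding.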
Linear Regression: GRAPH (I want to know how salary is related to GPA) y=ax+b
□ Graphs, Legacy Dialogs, Scatter/Dot, Simple, Define
□ Select the dependent and the independent variable.
□ Ok. Double click on the plot, be patient, then Options, then Reference line.
□ Using the coefficients obtained in the Linear Regression, under Custom Equation insert y=(coefficient a you got before)*x + (coefficient b you got before).
□ Checkbox: Attach label to. Close.
□ Also close the Chart Editor by closing the window at the top right corner.

Linear Regression: Residuals, Find Outliers
□ Analyze, Regression, Linear
□ Select the dependent and the independent variable.
□ Click Plots, then choose Zresid for the Y axis and ZPred for the X axis.
□ Continue, then Ok.
□ Double click on the plot, then Options, then Reference Line from Equation. Enter y=2.5, then Apply, Close. Also close the Chart Editor.

Advanced statistics will be studied in this section.

Testing Normality
□ Analyze, Descriptive Statistics, Q-Q plots, choose a variable.
□ Select the test distribution to be Normal, then Ok.

Kolmogorov-Smirnov Test: Test if Data are Normal or Test Randomness
□ Analyze, Nonparametric Tests, One-Sample (you may choose a variable).
□ Check Automatically Compare Observed data to hypothesized. (You could also have chosen the TEST SEQUENCE FOR RANDOMNESS to see if the sequence of values defined by some cutting point is random.) If you don't see this AUTOMATICALLY COMP… option, select Descriptive and also Exclude cases test-by-test.
□ Run
□ Output Window: Left pane: click on NonParametric Tests, then Model Viewer.

Binomial Test: When Normality fails, Test if Data are Binomial
□ Analyze, Nonparametric Tests, Binomial
□ Select a variable.
□ Cut Point: enter a value (about the mean).
□ Test Proportion: enter a value (about 50%).
□ Options, Descriptive and also Exclude cases test-by-test.
□ Ok
□ Output Window: Left pane: click on NPar Tests, then Binomial Test.
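The idea behind the Binomial Test steps (split the data at a cut point, then compare the observed split with a test proportion) can be sketched in a few lines of Python. This is only an illustration: the data values, the cut point of 3.0 and the function name `binomial_two_sided_p` are assumptions made for this example, and the way SPSS computes and reports its significance value may differ, especially when the test proportion is not 0.5.

```python
from math import comb

# Minimal sketch of an exact two-sided binomial test.
# ASSUMPTION: the data values and cut point below are invented for illustration.

def binomial_two_sided_p(successes, n, p0=0.5):
    """Sum the probabilities of all outcomes no more likely than the observed one."""
    probs = [comb(n, k) * p0**k * (1 - p0) ** (n - k) for k in range(n + 1)]
    observed = probs[successes]
    # Small relative tolerance guards against floating-point ties.
    return sum(p for p in probs if p <= observed * (1 + 1e-9))

values = [2.1, 3.4, 3.9, 2.8, 3.6, 3.7, 3.1, 3.8, 3.5, 3.3]
cut_point = 3.0
test_proportion = 0.5

# Count the cases at or below the cut point (the first group).
below = sum(1 for v in values if v <= cut_point)
p_value = binomial_two_sided_p(below, len(values), test_proportion)

print(f"{below} of {len(values)} values are at or below the cut point")
print(f"two-sided p-value = {p_value:.4f}")
```

A p-value below 0.05 would suggest that the share of cases at or below the cut point differs from the test proportion.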
Advanced statistics will be studied in this section.

Hypothesis Testing: Running a T-Test: Is the GPA=3.5?
□ Analyze, Compare Means, One-Sample T-Test
□ In Test Variable select one variable.
□ Enter the value to test in Test Value (typically a mean), then Ok.
□ Output Window: Left pane: click on T-Test, then One-Sample Test.

Chi Square: Expecting 10% Hispanic, 10% Asian, 70% White, 10% AfriAmer
□ Analyze, Nonparametric Tests, Chi-Square
□ Select a variable.
□ Expected Values: enter a value, then Add. Repeat.
□ Options, Descriptive and also Exclude cases test-by-test.
□ Ok
□ Output Window: Left pane: click on NPar Tests, then Chi-Square Test.

One-Way ANOVA (In AgeGender: 1=MaleOld, 2=MaleYoung, 3=FemaleOld, 4=FemaleYoung) (for example, AgeGender is the Factor and Salary is in the Dependent List)
□ Analyze, Compare Means, One-Way Anova
□ Select an independent variable (Factor), in the given example AgeGender. Drag a variable into the Dependent List, for example Salary.
□ Post Hoc… Select LSD, then Continue.
□ Options, Descriptive, Means Plot, Continue.
□ Ok
□ Output Window: Left pane: click on Oneway, then ANOVA.
H0 (null hypothesis): the differences in score between the groups are 0, i.e. there are no significant differences.

Explanations about how to read the output from SPSS.

□ A large standard deviation indicates that the data points are far from the mean, and a small standard deviation indicates that they are clustered closely around the mean. It is a measure of dispersion.
□ For the cross tabs, look at the Sig. of the Chi-Square value:
   A) If Sig < 0.05, then the variables are significantly related.
   B) If Sig > 0.05, then the variables are NOT significantly related.
□ For the correlation, the important value is the Pearson (r) value (take its size, ignoring the sign):
   1) r values greater than .50 indicate a strong correlation,
   2) r values around .30 indicate a moderate correlation,
   3) r values less than .20 indicate a weak correlation.
□ For the independent t-test, first look at the Sig. of Levene's Test for Equality of Variances:
   1) If Sig. < 0.05, use the output in the Equal variances NOT assumed row.
   2) If Sig. > 0.05, use the output in the Equal variances assumed row.
   Then we move to the Sig (2-tailed):
   A) If Sig < 0.05 we conclude that there was a significant difference between the two means, i.e. the difference is unlikely to be due to chance alone.
   B) If Sig > 0.05 we conclude that there was no significant difference between the two means.
□ For the paired t-test, we look at the Sig (2-tailed):
   A) If Sig < 0.05 we conclude that there was a significant difference between the two means, i.e. the difference is unlikely to be due to chance alone.
   B) If Sig > 0.05 we conclude that there was no significant difference between the two means.
□ Kolmogorov-Smirnov Test: we look at the Sig (2-tailed):
   A) If Sig < 0.05 we conclude the data are NOT normal.
   B) If Sig > 0.05 we conclude there is insufficient evidence to reject normality (we then proceed with the assumption that the data are normal).
□ P-Value: we look at the Sig (2-tailed). The p-value is the same as the Asymp. Sig.
   A) If p-value (or Sig) < 0.05 we reject the null hypothesis.
   B) If p-value (or Sig) > 0.05 we conclude there is insufficient evidence to reject the null hypothesis (we then proceed as if the null hypothesis were true, although this does not prove it).
□ Zero P-Value: Let mean1 = the hypothesized mean (the test value we input in our t-test) and mean2 = the mean from our sample of size N. SPSS displays very small p-values as .000, which means less than 0.0005 rather than exactly zero. Such a p-value indicates that there is almost no chance that a random sample of size N from a population with mean1 would, purely by chance, produce a sample mean as far from mean1 as mean2.
If this is the case, we say that it is unlikely that mean2 has occurred by chance, and it is more likely that the population mean is not the hypothesized mean1.
□ Hypothesis testing is all about gathering evidence to suggest the null is not true; the lack of such evidence warrants a "Do not reject" decision. Remember, not guilty doesn't mean the person is innocent; it means the jury did not find enough evidence to condemn the person.
□ Binomial Test: When normality fails, we use a weaker test, the Binomial test. We have a cutting point and a proposed proportion or percentage. We look at the Sig (2-tailed):
   A) If Sig < 0.05 we reject the null hypothesis: the proportion of our variable on one side of the cutting point differs from the proposed proportion.
   B) If Sig > 0.05 there is insufficient evidence to conclude that the proportion differs from the proposed proportion.
□ For the Q-Q Plot, if the dots lie close to the straight line, we conclude the data look close to a normal distribution; otherwise we conclude that the data are far from a normal distribution.
□ One-Way Anova: If p (Sig) < 0.05 we can conclude as follows: One-way ANOVA revealed that there were significant differences in the variable of interest between these groups. If p (Sig) > 0.05 we can conclude as follows: According to the ANOVA table, there is insufficient evidence to reject the null; in this case, we did not find enough evidence to conclude that there are significant differences in the variable of interest among these groups.
□ The linear regression line is the straight line that best fits the data (it minimizes the sum of the squares of the vertical distances from the points to the line).

You can also save the output by going to the output window, clicking Save, then browsing to your folder and typing example1.spv (PASW Statistics 18 saves output as .spv; older SPSS versions used .spo).

http://wwwstage.valpo.edu/other/dabook/ch8/c8-3.htm
DATAPLOT (Visual), R (Fav), OPENSTAT (Soc. Sci.), INSTAT (Climat. Data)

□ Linear Regression Note: It is often very useful to look at the standardized residual versus standardized predicted plot in order to look for outliers and to check for homogeneity of variance. The ideal situation is to see no observations beyond the reference lines, which means that there are no outliers. Also, we would like the points on the plot to be distributed randomly, which suggests that the systematic variance has been explained by the model.
□ Anova: As with the t-test, ANOVA also tests for significant differences between groups. But while the t-test is limited to the comparison of only two groups, one-way ANOVA can be used to test differences in three or more groups. The ANOVA procedure produces an F statistic, a value whose probability enables the researcher to reject or retain the null hypothesis, i.e., to conclude whether the differences in the scores on the dependent variable are statistically significant or may be due to chance.
□ Two-Way Anova: Factorial ANOVA improves on one-way ANOVA in that the researcher can simultaneously assess the effects of two (or more) independent variables on a single dependent variable within the same analysis. Thus, factorial ANOVA yields the same information that two one-way ANOVAs would, but it does so in one analysis. But that's not all. Factorial ANOVA also allows the investigator to determine the possible combined effects of the independent variables. That is, it also assesses the ways in which these variables interact with one another to influence scores on the dependent variable.
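
As a companion to the Anova note above, here is a minimal Python sketch of how the one-way F statistic is built from between-group and within-group variation. The three salary groups and the function name `one_way_anova_f` are invented for illustration; the sketch does not compute the Sig. value, which SPSS obtains from the F distribution with the two degrees of freedom shown.

```python
# Minimal sketch: the F statistic of a one-way ANOVA.
# ASSUMPTION: the group data below are made up for illustration.

def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of groups of numbers."""
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]
    # Between-group sum of squares: how far each group mean is from the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Within-group sum of squares: spread of the values around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between = len(groups) - 1
    df_within = n_total - len(groups)
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within

# Hypothetical salaries (in thousands) for three groups of the factor variable.
salary_by_group = [
    [31.0, 32.0, 33.0],
    [32.0, 33.0, 34.0],
    [35.0, 36.0, 37.0],
]

f_stat, df_between, df_within = one_way_anova_f(salary_by_group)
print(f"F({df_between}, {df_within}) = {f_stat:.2f}")
```

A large F means the group means differ by much more than the values vary inside each group, which is the situation in which the ANOVA table reports a small Sig.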