DATA PROCESSING IN FSD - FORSDATA


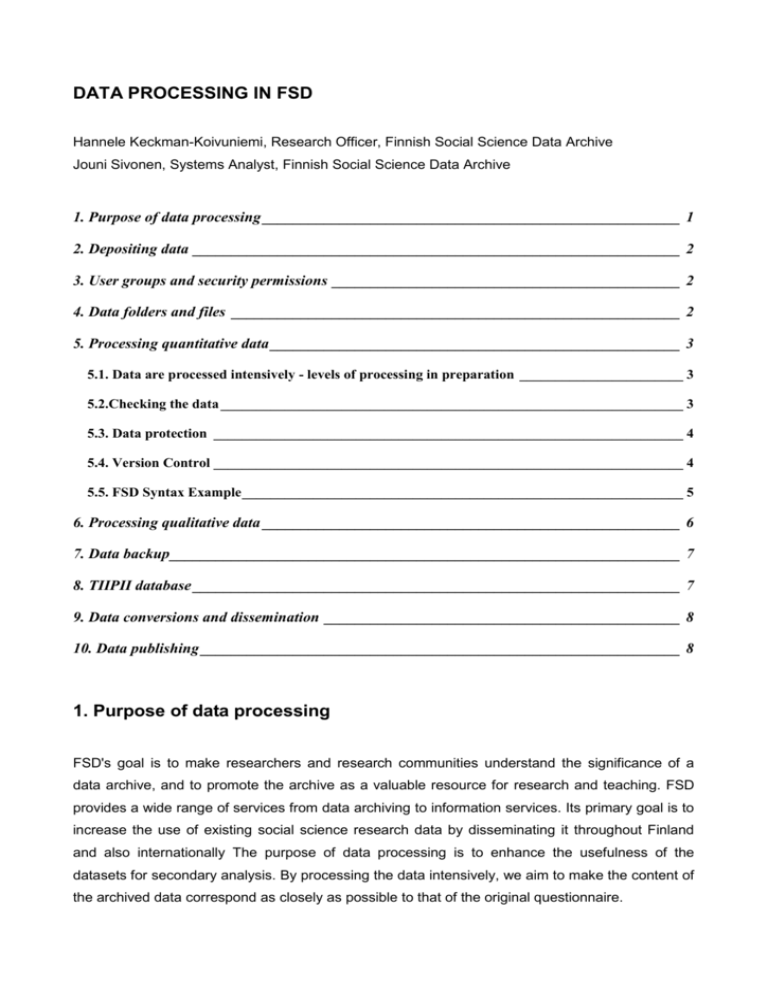

DATA PROCESSING IN FSD

Hannele Keckman-Koivuniemi, Research Officer, Finnish Social Science Data Archive

Jouni Sivonen, Systems Analyst, Finnish Social Science Data Archive

1. Purpose of data processing

2. Depositing data

3. User groups and security permissions

4. Data folders and files

5. Processing quantitative data

5.1. Data are processed intensively - levels of processing in preparation

5.2. Checking the data

5.3. Data protection

5.4. Version Control

5.5. FSD Syntax Example

6. Processing qualitative data

7. Data backup

8. TIIPII database

9. Data conversions and dissemination

10. Data publishing

1. Purpose of data processing

FSD's goal is to make researchers and research communities understand the significance of a data archive, and to promote the archive as a valuable resource for research and teaching. FSD provides a wide range of services from data archiving to information services. Its primary goal is to increase the use of existing social science research data by disseminating them throughout Finland and internationally. The purpose of data processing is to enhance the usefulness of the datasets for secondary analysis. By processing the data intensively, we aim to make the content of the archived data correspond as closely as possible to that of the original questionnaire.

2. Depositing data

The archiving process begins when the depositor delivers machine-readable data to FSD. Virus checks are carried out automatically on all received material. The data are most often received in SPSS or Excel format and occasionally in SAS or ASCII format. Depositors are asked to give detailed information about the collection procedure, resulting publications and the research project in general. FSD prefers to obtain the supplementary documentation in both electronic and paper formats.

The completed and signed material description (http://www.fsd.uta.fi/english/forms/description.pdf) and the material deposit agreement forms (http://www.fsd.uta.fi/english/forms/deposit.pdf) are necessary as well.

3. User groups and security permissions

FSD controls who can do what to documents and data folders through user groups. The archive staff has been divided into user groups with appropriate user rights, depending on the work they do. The current user groups are administrators, technical staff, data administrators, data processors, data documentators, data readers and others. Each group has only the security permissions (read, write, modify) that its members need in their daily work.
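The group-based scheme can be illustrated with a minimal Python sketch. The group names and the three permission types come from the text above; which permissions each group actually holds is not stated here, so the assignments below are purely hypothetical, as is the helper name `is_allowed`.

```python
# Hypothetical permission assignments: the text names the groups and the
# permission types, but not which group holds which permission.
GROUP_PERMISSIONS = {
    "administrators": {"read", "write", "modify"},
    "data processors": {"read", "write", "modify"},
    "data documentators": {"read", "write"},
    "data readers": {"read"},
    "others": set(),
}


def is_allowed(group, action):
    """Return True if the given user group holds the requested permission.

    Unknown groups get no permissions at all (least privilege).
    """
    return action in GROUP_PERMISSIONS.get(group, set())
```

For example, `is_allowed("data readers", "read")` is true while `is_allowed("data readers", "modify")` is false. Defaulting unknown groups to an empty set keeps the sketch in line with the principle that each group has only the permissions it needs.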

4. Data folders and files

All electronic information about a dataset is preserved in a dataset folder named FSDXXXX, where XXXX is FSD's dataset identification number. The main folder of a dataset contains the following files:

- codebook (cbFXXXX.pdf)

- DDI documentation (study and document description = meFXXXX.xml and variable description = vaFXXXX.xml)

- questionnaire (quFXXXX_fin.pdf)

- and other document files.

The main folder has a subfolder called Original, which contains all the original material received from the depositor. Another subfolder, called Data, holds all the data files attached to the dataset:

- daFXXXX.por

- syFXXXX.sps

- and possibly source code for programs needed to convert the original data into an SPSS-readable data format.
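The naming convention above can be summarised in a short Python sketch that derives the expected folder and file names from a dataset number. The function name `dataset_layout` is hypothetical, and the sketch assumes that XXXX is a four-digit, zero-padded number, which the text implies but does not state.

```python
def dataset_layout(number):
    """Return the expected folder and file names for one dataset.

    Assumption: the identification number is zero-padded to four digits.
    """
    n = f"{number:04d}"
    return {
        "folder": f"FSD{n}",
        "main": [
            f"cbF{n}.pdf",      # codebook
            f"meF{n}.xml",      # DDI study and document description
            f"vaF{n}.xml",      # DDI variable description
            f"quF{n}_fin.pdf",  # questionnaire
        ],
        "subfolders": {
            "Original": [],     # depositor's material, names not prescribed
            "Data": [f"daF{n}.por", f"syF{n}.sps"],
        },
    }
```

A layout like this could be compared against a directory listing to spot missing or misnamed files.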

5. Processing quantitative data

5.1. Data are processed intensively - levels of processing in preparation

FSD uses SPSS in data processing and preserves data in portable format. We have chosen SPSS because it provides, at least at present, the best option for preserving data and identification information (that is, labels) in the same file. Since SPSS is widely used, converting material to future formats should be manageable. All national studies acquired by FSD are processed intensively, which is a very time-consuming undertaking. There are two reasons for doing this. First, the number of archived studies so far is manageable. Second, researchers do not usually offer their data. Rather, FSD staff identify studies from, for example, scientific journals and then request the data from the researchers. This way all archived studies are presumed to be "important" and to deserve intensive processing. However, a three-level categorisation is under construction. The process described below is a "level one" process.

5.2. Checking the data

The original data file is preserved without modification as long as necessary for the archiving

process. The original datasets are preserved so that data protection rules are not violated. A copy

of the original file is used to produce a version suitable for secondary use. We focus on the

content, not the "look and feel" of the studies.

During the checking process, mistakes are corrected, and verifications, amendments and additions are made in the syntax file. We confirm that the numbers of variables and cases match the documentation supplied. We check frequencies to verify variables and their valid and invalid values (e.g. missing data, not applicable responses). Variables are renamed to correspond to question numbers. Background variables without their own question numbers are renamed bv1, bv2 etc. FSD has standardised the labels of some background variables (e.g. original question: How old are you? --> variable label: Respondent's age). Variable and value labels are constructed based on the questionnaire. Long question texts are shortened, but variable labels still often become quite long. We try to keep the technical naming conventions for variable names and labels consistent within studies of the same series.
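The count check described above could look roughly like the following Python sketch. It is only an illustration: at FSD the checks are made in SPSS, and the function name `check_counts` and the representation of cases as dictionaries are assumptions made for the example.

```python
def check_counts(cases, expected_cases, expected_variables):
    """Compare a data file with the documented numbers of cases and variables.

    `cases` is a list of dictionaries mapping variable names to values.
    Returns a list of mismatch messages, which is empty if everything matches.
    """
    problems = []
    if len(cases) != expected_cases:
        problems.append(
            f"cases: found {len(cases)}, documented {expected_cases}"
        )
    # Collect every variable name that occurs in at least one case.
    variables = set()
    for case in cases:
        variables.update(case)
    if len(variables) != expected_variables:
        problems.append(
            f"variables: found {len(variables)}, documented {expected_variables}"
        )
    return problems
```

An empty result means the file agrees with the documentation. Any message returned is a reason to go back to the depositor's material.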

Questionnaires often include questions that are directed only at respondents who meet certain

requirements. The archive checks these filter conditions. If the data include answers from people

who do not belong to the specified target group, the responses are classified as missing data.
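As a hedged illustration of the filter check, the Python sketch below sets follow-up answers to missing for respondents who fall outside the target group. The variable names `q5` and `q6`, the function name `apply_filter` and the use of `None` for a missing value are all assumptions made for the example. They are not taken from an FSD dataset.

```python
MISSING = None  # stands in for a missing value in this sketch


def apply_filter(cases, filter_var, allowed_values, dependent_vars):
    """Classify off-target answers as missing data.

    A case belongs to the target group when its value on `filter_var`
    is in `allowed_values`. For all other cases, every variable in
    `dependent_vars` is set to MISSING. The input list is not modified.
    """
    cleaned = []
    for case in cases:
        row = dict(case)  # copy so the original data stay untouched
        if row.get(filter_var) not in allowed_values:
            for var in dependent_vars:
                row[var] = MISSING
        cleaned.append(row)
    return cleaned
```

If, say, a hypothetical `q6` was directed only at respondents who answered 1 on `q5`, then `apply_filter(cases, "q5", {1}, ["q6"])` keeps `q6` for those respondents and blanks it for everyone else. Working on a copy mirrors the principle that the original file is preserved without modification.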

In order to make sure that the content of the archived data corresponds as closely as possible to that of the questionnaire, some variables may have to be dropped or added. A variable is dropped if it is undefined or if data security aspects so require. Constructed variables, such as combined variables and sum variables, are usually dropped. However, those constructed variables that are integral to the usability of the data, especially weight variables, are kept, provided that the documentation supplied is explicit enough. Nowadays we add three variables to every dataset processed in FSD: fsd_no, fsd_vr and fsd_id. Other new variables are added only if usability so requires.

5.3. Data protection

Confidentiality aspects require that personal data are deleted. It is recommended that depositors

remove these types of data (names, addresses, birthdays etc.) before delivering the material to the

archive. Under certain circumstances, FSD stores materials which contain personal data. In these

cases, the archive anonymizes the data before dissemination according to its own guidelines and

depositors' instructions.

Variables indicating place of residence and business are also problematic. On the one hand, there is always a risk that a single respondent might be identified. On the other hand, deleting these types of variables prevents secondary users from conducting regional comparisons, especially if no other regional variables are used. Usually these types of variables are dropped. If necessary, they can be restored. Variables of larger regional units (provinces, districts) are kept.

5.4. Version Control

We aim to process the datasets only once. Still, some of them have to be reprocessed. Sometimes depositors give additional information about variables, errors are detected or processing procedures are updated. For example, datasets processed in the early days of FSD already need repairing.

The first final version of a dataset is called version 1.0. If subsequent changes are more or less

cosmetic (typing errors etc.) the new version will be called 1.1. In the case of significant changes

(e.g. a variable added) the new version will be named 2.0.
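The numbering rule can be written down as a small Python sketch. The function name `next_version` and the boolean flag are hypothetical, and deciding whether a change counts as significant remains a judgment made by the archive staff.

```python
def next_version(current, significant):
    """Return the next version number for a dataset.

    Cosmetic changes (e.g. typing errors) raise the minor number.
    Significant changes (e.g. a variable added) raise the major number
    and reset the minor number to zero.
    """
    major, minor = (int(part) for part in current.split("."))
    if significant:
        return f"{major + 1}.0"
    return f"{major}.{minor + 1}"
```

Starting from version 1.0, a cosmetic fix gives `next_version("1.0", False)`, that is "1.1", and adding a variable afterwards gives `next_version("1.1", True)`, that is "2.0".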

We add these alterations to the end of the same SPSS syntax file used in processing the dataset

in the first place. This is not a long-term solution but has worked so far.

5.5. FSD Syntax Example

* FSD Finnish Social Science Data Archive.

* FSDXXXX Study Title Year.

* SPSS-syntax.

* Version 1.0: month year (research officer).

* Version 2.0: date month year (research officer).

* Warnings:.

* Check the saving paths before running the syntax! .

*.

* The original data file is g-zipped.

* Unzip it by double clicking the filename.

IMPORT FILE='X:\Data\FsdXXXX\original\nnnn.por'.

EXECUTE.

* or.

* GET FILE= 'X:\Data\FsdXXXX\original\nnnn.sav'.

* EXECUTE.

SAVE OUTFILE='C:\Temp\daFXXXX.sav'.

GET FILE='C:\Temp\daFXXXX.sav'.

EXECUTE.

COMPUTE fsd_no = XXXX.

FORMATS fsd_no (F5.0).

EXECUTE.

COMPUTE fsd_vr = 1.0.

FORMATS fsd_vr (F5.1).

EXECUTE.

COMPUTE fsd_id = $CASENUM.

FORMATS fsd_id (F9.0).

EXECUTE.

RENAME VARIABLES (asuin1=q1)

(sukup2=q2)

(ika3=q3)

(perhes4=q4).

EXECUTE.

VARIABLE LABELS fsd_no "[fsd_no] FSD study number"

/fsd_vr "[fsd_vr] FSD edition number"

/fsd_id "[fsd_id] FSD case id"

/q1 "[q1] Respondent's municipality of residence"

/q2 "[q2] Respondent's sex"

/q3 "[q3] Respondent's age"

/q4 "[q4] Respondent's marital status".

EXECUTE.

VALUE LABELS q1

1 "Kajaani"

2 "Laihia"

3 "Loviisa"

4 "Muonio"

5 "Puumala"

6 "Valkeakoski"

/q2

1 "male"

2 "female"

/q4

1 "single"

2 "married or cohabiting"

3 "widowed or separated".

EXECUTE.

RECODE q8_1 (8=SYSMIS).

EXECUTE.

RECODE q11 ('?'=' ').

EXECUTE.

MISSING VALUES q8_1 (0).

EXECUTE.

* Keep the FSD variables together with the questionnaire variables.

SAVE OUTFILE='C:\Temp\daFXXXX.sav' /KEEP=fsd_no fsd_vr fsd_id q1 TO q58.

EXECUTE.

GET FILE='C:\Temp\daFXXXX.sav'.

EXECUTE.

*.

* ## Syntax of version 1.0 ends here.

*.

* Syntax of version 2.0 starts here.

* Commands and comments needed here.

*.

* ## Syntax of version 2.0 ends here.

*.

EXPORT OUTFILE='X:\Data\FsdXXXX\data\daFXXXX.por'.

EXECUTE.

6. Processing qualitative data

FSD started to archive qualitative data in spring 2003. The received data are mainly in electronic format, usually transcribed speech or interaction. We usually preserve the anonymized data in rich text (.rtf), unformatted text (.txt) or HTML format. Hard copy material is scanned and preserved as TIFF images.

7. Data backup

Data are stored on a network drive, and the hard disk partition available for datasets is currently 40 gigabytes. To ensure preservation in case of a hard disk crash, the whole disk is mirrored to another hard disk with software RAID (Redundant Array of Independent/Inexpensive Disks, Level 1). In addition, modified data are copied every night (on working days) to a third hard disk and backed up to DDS-3 (Digital Data Storage) tapes. Backup tapes are rotated after 12 days. We also store 12 monthly backup tapes (taken on the first working day of each month). Backup tapes are stored in a locked fireproof safe. Twice a year a data backup tape is sent to the Finnish National Archives.
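The tape rotation can be sketched in Python as follows. This is an illustration under several assumptions that the text does not confirm: working days are taken to be Monday to Friday with public holidays ignored, the monthly tape is assumed to take the place of the daily tape on the first working day of the month, and the tape labels and the `cycle_start` parameter are invented for the example.

```python
from datetime import date, timedelta

DAILY_TAPES = 12  # from the text: backup tapes are rotated after 12 days


def is_working_day(d):
    """Monday to Friday; public holidays are ignored (assumption)."""
    return d.weekday() < 5


def first_working_day(year, month):
    """Return the first Monday-to-Friday date of the given month."""
    d = date(year, month, 1)
    while not is_working_day(d):
        d += timedelta(days=1)
    return d


def tape_label(d, cycle_start):
    """Return the (hypothetical) label of the tape written on date d.

    Returns None when no backup is taken, i.e. on weekends.
    """
    if not is_working_day(d):
        return None
    if d == first_working_day(d.year, d.month):
        return f"monthly-{d.month:02d}"
    # Count the working days that have passed since the cycle started.
    elapsed = sum(
        1
        for i in range((d - cycle_start).days)
        if is_working_day(cycle_start + timedelta(days=i))
    )
    return f"daily-{elapsed % DAILY_TAPES + 1:02d}"
```

With twelve daily tapes, a given tape is overwritten only after twelve working days, while the twelve monthly tapes together cover a full year.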

8. TIIPII database

FSD has used a relational database called Tiipii for storing operational information from the very beginning. The first prototype was built with MS Access 97, and new functions have been added about every six months. A project to create a new user interface and change the database system started in fall 2002. The new user interface was created with Java and the database was changed to PostgreSQL. Use of the new version started this summer. The Tiipii2 database has information on all important data archive functions:

1. Archived dataset: name, number, type, level of processing, version, history, publishing date, depositor(s), conditions for use, series information, stage of data processing and documentation, files connected to the dataset

2. Access agreements: research project, financier, purpose of use, delivery format, information on data user(s)

3. Dissemination material: date, type, list of files, CD-ROM covers, covering letter

4. Access conditions: permissions from depositors

5. Customer database: contact information

6. Contacts to FSD and made by FSD: phone calls, e-mails, date, contact person, contact chain

7. Visits to FSD and visits made by FSD: name of the event, dates and times, number of people attending, contact information, related visits

8. Folders: paper materials related to a dataset

More information and screenshots at:

http://www.iassistdata.org/conferences/2004/presentations/C3_sivonen.ppt

9. Data conversions and dissemination

Most of our quantitative data customers are familiar with SPSS. About 90% of researchers wish to have data in this format. The rest wish to have data delivered in Excel or SAS format. Conversions between different statistical packages are done with Stat/Transfer software. In addition, we have one Macintosh computer for conversions from Mac file systems.

Qualitative datafiles are disseminated in RTF, TXT and HTML formats. Software programs for

qualitative analysis require text formats, but HTML files (with style sheet documents) are more

illustrative for the researcher. We deliver hard copy materials as PDF files.

10. Data publishing

Using tools created in the archive, FSD’s data documentation is published on the web both in

Finnish and in English. DDI format XML files are converted to HTML (www) and PDF files

(codebook), and copied to the www server.

Our data documentation is also published in the NESSTAR system. Two DDI XML files containing Document and Study Descriptions (meFXXXX.xml) plus File & Variable Descriptions (vaFXXXX.xml) are combined into one XML file and copied to the Nesstar server. We use NSD's command line tools NSDImpNesstar and XMLGenerator for extracting variable information from SPSS portable files. In the near future Nesstar Publisher will probably replace the command line tools and text editor based DDI documenting.

See also

http://www.fsd.uta.fi/~matti/prujut/Koln2001.pdf

http://www.fsd.uta.fi/~matti/prujut/Bukarest2002.pdf