Introduction to Fault

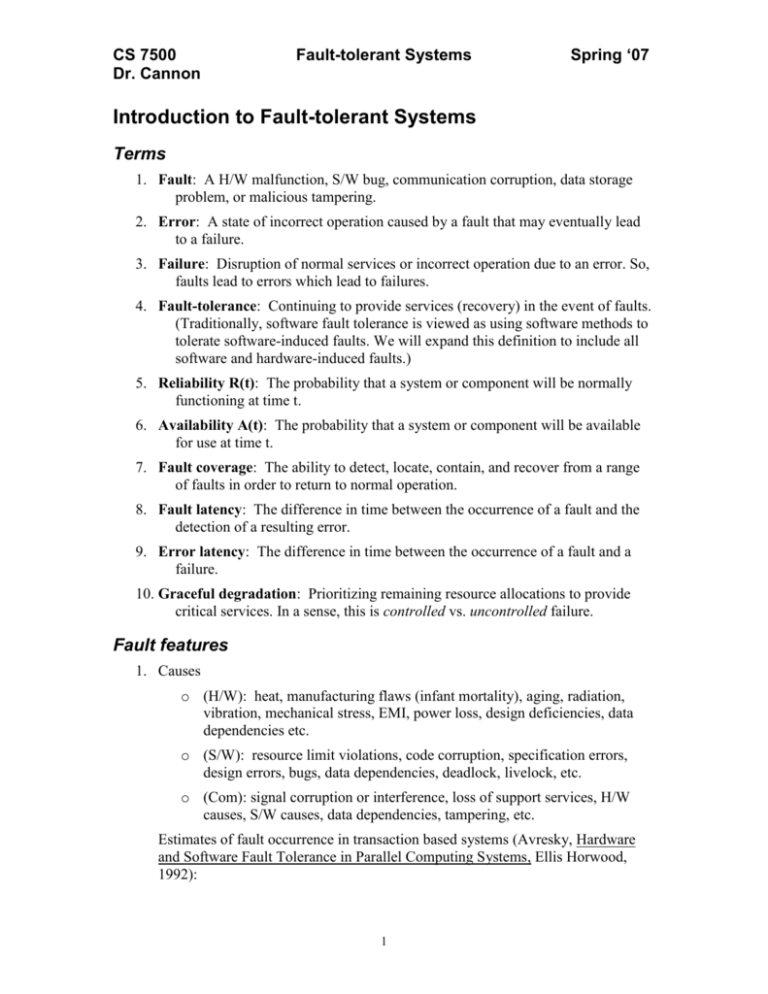

CS 7500 Dr. Cannon Fault-tolerant Systems Spring ‘07

Introduction to Fault-tolerant Systems

Terms

1. Fault: A H/W malfunction, S/W bug, communication corruption, data storage problem, or malicious tampering.
2. Error: A state of incorrect operation caused by a fault that may eventually lead to a failure.
3. Failure: Disruption of normal services or incorrect operation due to an error.
   So, faults lead to errors, which lead to failures.
4. Fault-tolerance: Continuing to provide services (recovery) in the event of faults. (Traditionally, software fault tolerance is viewed as using software methods to tolerate software-induced faults. We will expand this definition to include all software and hardware-induced faults.)
5. Reliability R(t): The probability that a system or component will be functioning normally at time t.
6. Availability A(t): The probability that a system or component will be available for use at time t.
7. Fault coverage: The ability to detect, locate, contain, and recover from a range of faults in order to return to normal operation.
8. Fault latency: The difference in time between the occurrence of a fault and the detection of a resulting error.
9. Error latency: The difference in time between the occurrence of a fault and a failure.
10. Graceful degradation: Prioritizing remaining resource allocations to provide critical services. In a sense, this is controlled vs. uncontrolled failure.

Fault features

1. Causes
   o (H/W): heat, manufacturing flaws (infant mortality), aging, radiation, vibration, mechanical stress, EMI, power loss, design deficiencies, data dependencies, etc.
   o (S/W): resource limit violations, code corruption, specification errors, design errors, bugs, data dependencies, deadlock, livelock, etc.
   o (Com): signal corruption or interference, loss of support services, H/W causes, S/W causes, data dependencies, tampering, etc.
   Estimates of fault occurrence in transaction-based systems (Avresky, Hardware and Software Fault Tolerance in Parallel Computing Systems, Ellis Horwood, 1992):

   Fault cause           Percentage
   H/W                   40%
   Environment           5%
   S/W                   30%
   Data and operation    25%

2. Fault Modes
   o Fail-silent – a component ceases to operate or perform.
   o Fail-babbling – a component continues to operate, but produces meaningless output or results.
   o Fail-Byzantine – a component continues to operate as if no fault occurred, producing meaningful but incorrect output or results.
3. Fault Types
   o Transient (soft)*
   o Intermittent
   o Permanent (hard)
   *There is an important difference between transient and intermittent faults. An intermittent fault is due to repeated environmental conditions, hardware limitations, software problems, or poor design. As such, intermittent faults are potentially detectable and thus repairable. Transient faults are due to transient conditions, such as natural alpha or gamma radiation. Since they do not re-occur and hardware is not damaged, they are generally not repairable.

Importance of Fault-tolerant systems

o Providing services that are critical
o Providing services when the cost of repair is high
o Providing services when access for repair is difficult
o Providing services when high availability is important

System characteristics

1. Single computer systems
   o Low redundancy of needed components
   o High reliability
   o Simple detection, location, and containment of faults
2. Distributed and Parallel systems
   o High redundancy of needed components
   o Low reliability – the more components in a system, the higher the probability that any one component will fail
   o Difficult detection, location, and containment of faults
   *A parallel system of N nodes, each with reliability Rn(t), will have a system R(t) < prod(Rn(t)) due to communications, synchronization, and exclusion failures.
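To make the effect of component count on reliability concrete, the sketch below computes the product of per-node reliabilities, prod(Rn(t)), at one fixed time t. It is only an illustration under assumptions not stated in these notes: node failures are treated as independent, and every node is assumed to be needed for the system to function. The function name and the sample values are hypothetical. Per the note above, the product is an upper bound: the real system R(t) is lower once communication, synchronization, and exclusion failures are counted.

```python
import math


def reliability_upper_bound(node_reliabilities):
    """Return prod(Rn(t)) for the given per-node reliabilities at one time t.

    Assumption (not from the notes): nodes fail independently and all of
    them are required, so the product bounds the system R(t) from above.
    """
    for r in node_reliabilities:
        if not 0.0 <= r <= 1.0:
            raise ValueError(f"reliability must be in [0, 1], got {r}")
    return math.prod(node_reliabilities)


# Hypothetical example: the bound shrinks as identical nodes are added.
for n in (1, 10, 100):
    bound = reliability_upper_bound([0.99] * n)
    print(f"N = {n:3d}  prod(Rn(t)) = {bound:.4f}")
```

With ten nodes at 0.99 each, the bound is already only about 0.904, which is the sense in which more components mean lower system reliability.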
A distributed system of N nodes will have a lower system R(t) than N equivalent single-computer systems, due to the same communications, synchronization, and exclusion failures.

Software Systems Design objectives

1. Design a system with a low probability of failure.
2. Add software methodologies for adequate fault coverage.

Limitations and Practicality of Dependability Strategies

1. Dependable systems require attention to faults at four levels:
   a. fault avoidance through proper design
   b. fault detection and diagnosis
   c. fault forecasting
   d. fault tolerance
2. All these strategies are limited, imperfect, and themselves subject to faults. As a consequence, these strategies are typically applied in a recursive manner. In addition, tools and methods should be applied at each stage of the “fault → error → failure” progression.
3. Strategies that are the most effective, or that have the least impact and overhead, are typically more specific to a given application, data domain, and system than strategies that are general and abstract.
4. Building dependability into a system usually increases the budget by a factor of more than 2 (in both time and money).
5. Fault-tolerance methods with greater coverage may mask faults that could easily be corrected.
6. Failures may be the result of cascaded faults, errors, and previously undetected failures. A method or tool may be attacking the leaves of a problem and ignoring the root.