The Consistency of Arithmetic

Storrs McCall

The paper presents a proof of the consistency of Peano Arithmetic (PA) that does not consist in deducing its consistency as a theorem in an axiomatic system. PA's consistency cannot be proved in PA, and to deduce its consistency in some stronger system PA+ is self-defeating. Instead, a semantic proof is constructed which demonstrates consistency not relative to the consistency of some other system but in an absolute sense. Some philosophical consequences of consistency for the thesis which identifies minds with machines are explored.

Is Peano arithmetic (PA) consistent? This paper contains a proof that it is: a proof, moreover, that does not consist in deducing its consistency as a theorem in a system with axioms and rules of inference. Gödel's second incompleteness theorem states that the consistency of PA cannot be proved in PA, and to deduce its consistency in some stronger system PA+ that includes PA is self-defeating, since if PA+ is itself inconsistent the proof of PA's consistency is worthless. In an inconsistent system everything is deducible, and therefore nothing is provable. If there is to be a genuine proof of PA's consistency, it cannot be a proof relative to the consistency of some other, stronger system; it must be an absolute proof, such as the proof of consistency of two-valued propositional logic using truth-tables. In this paper I construct a proof of PA's consistency based not on the manipulation of symbols, i.e. the syntactical derivation of a consistency-theorem from axioms, but on a formal semantics for PA. An essential element of any semantics is a nonlinguistic component, a domain of objects that is necessarily absent from pure syntax. To my knowledge, no semantic consistency proof of Peano arithmetic has yet appeared in the literature. In the last part of the paper I briefly explore some of the philosophical consequences of the argument.

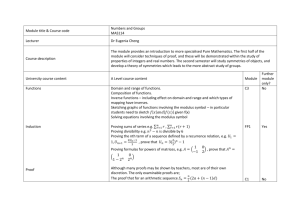

1. The system PA

1.1 Primitive symbols

Logical symbols: &, ~, =, plus brackets

Arithmetic symbols: 0, S, +, ×

Variables: x, y, z, ...

1.2 Rules of formation

1.2.1 For terms:

(i) All variables, plus 0, are terms

(ii) If X and Y are terms, then so are SX, X+Y, and X×Y

(iii) Nothing else is a term.

1.2.2 For wffs:

(i) If X and Y are terms, then X = Y is an atomic wff.

(ii) If A is a wff and x is a variable, then (x)A is a wff.

(iii) If A and B are wffs, then so are ~A and A&B.

(iv) Nothing else is a wff.

1.3 Definitions

A => B =df ~(A&~B)

A v B =df ~(~A & ~B)

(Ex)A =df ~(x)~A

1 =df S0, 2 =df SS0, etc.

1.4 Axioms

Axioms and rules for first-order logic plus the following (Mendelson (1964), p. 103; Goodstein (1965), p. 46):

A1. (x=y) => (x=z => y=z)

A2. ~(Sx = 0)

A3. (x=y) => (Sx=Sy)

A4. (Sx=Sy) => (x=y)

A5. x+0 = x

A6. x+Sy = S(x+y)

A7. x×0 = 0

A8. x×Sy = (x×y)+x

Induction rule. From |- F(0) and |- (x)(Fx => F(Sx)) infer |- (x)Fx.
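Reading 0, S, + and × as zero, successor, addition and multiplication on the natural numbers (the interpretation that section 2 makes precise), the non-logical axioms A1-A8 can be spot-checked mechanically. This Python sketch tests every assignment of x, y and z drawn from a finite range; the names implies, succ, AXIOMS and check_axioms and the choice of bound are our own assumptions. A passing check is evidence only, not a proof of validity, since validity concerns all natural numbers.

```python
from itertools import product


def implies(p: bool, q: bool) -> bool:
    """A => B =df ~(A & ~B), as in section 1.3."""
    return not (p and not q)


def succ(n: int) -> int:
    """Intended reading of S: the successor function."""
    return n + 1


# A1-A8 as Boolean functions of the values assigned to x, y, z.
AXIOMS = {
    "A1": lambda x, y, z: implies(x == y, implies(x == z, y == z)),
    "A2": lambda x, y, z: not (succ(x) == 0),
    "A3": lambda x, y, z: implies(x == y, succ(x) == succ(y)),
    "A4": lambda x, y, z: implies(succ(x) == succ(y), x == y),
    "A5": lambda x, y, z: x + 0 == x,
    "A6": lambda x, y, z: x + succ(y) == succ(x + y),
    "A7": lambda x, y, z: x * 0 == 0,
    "A8": lambda x, y, z: x * succ(y) == (x * y) + x,
}


def check_axioms(bound: int) -> dict[str, bool]:
    """For each axiom, test every assignment of x, y, z from range(bound)."""
    return {
        name: all(axiom(x, y, z) for x, y, z in product(range(bound), repeat=3))
        for name, axiom in AXIOMS.items()
    }
```

Calling check_axioms(15) checks 3,375 assignments per axiom and reports True for each of A1-A8.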

2. Formal semantics for PA

2.1 A model M consists of a domain D, in this case the set of natural numbers including 0, and an assignment function v, which assigns to each term of PA a member of D. The function v is defined inductively.

2.1.1 (Basis) v(0) = 0. Also, where x is a variable, v(x) is a member of D.

2.1.2 (Induction step) Where X and Y are terms, assume that v(X) and v(Y) have already been defined. Then

(i) v(SX) = v(X) + 1

(ii) v(X+Y) = v(X) + v(Y)

(iii) v(X×Y) = v(X) × v(Y)
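As a worked illustration of 2.1.1-2.1.2 (the term S0×(x+SS0) and the assignment v(x) = 3 are our own choices, not the paper's), the value of a term is computed from the values of its parts:

```latex
\begin{aligned}
v(S0 \times (x + SS0)) &= v(S0) \times v(x + SS0) && \text{by (iii)} \\
  &= (v(0) + 1) \times \bigl(v(x) + v(SS0)\bigr) && \text{by (i) and (ii)} \\
  &= (0 + 1) \times \bigl(3 + (v(S0) + 1)\bigr) && \text{by 2.1.1, } v(x) = 3 \text{, and (i)} \\
  &= 1 \times \bigl(3 + ((v(0) + 1) + 1)\bigr) && \text{by (i)} \\
  &= 1 \times (3 + 2) = 5 && \text{by 2.1.1}
\end{aligned}
```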

2.2 The valuation function vM with respect to a model M is a function which takes wffs of PA into the set of truth-values {T, F}. It too is defined inductively.

2.2.1 (Basis) Where A is an atomic wff X=Y, vM(X=Y) = T iff v(X) = v(Y).

2.2.2 (Induction step) Assume vM(A) and vM(B) have already been defined. Then:

(i) vM(~A) = T iff vM(A) = F

(ii) vM(A&B) = T iff vM(A) = T and vM(B) = T

(iii) vM((x)A) = T iff for all models M' which differ from M at most in assignment to the variable x, vM'(A) = T.

2.3 Truth-in-a-model. We say that the formula A is true in a model M iff vM(A) = T.

2.4 Validity. A valid formula is a formula which is true in all models M.

It is not difficult to show that the axioms A1-A8 are valid. For the induction rule, if in every model F(0) is true, and if in every model in which Fx is true, F(Sx) is also true, then in every model Fx is true. In the domain of natural numbers, either (i) all members of the domain have a certain property F, or (ii) 0 lacks F, or (iii) there is a pair n, n+1 such that n has F and n+1 does not. There is no fourth alternative not covered by these three. Hence the induction rule preserves validity. (By an element of the domain "having a property F" is understood "having an arithmetic property expressible using only the functions S, + and ×, the relation =, truth-functions and quantifiers". An example is the property "being a prime number". Such properties do not include set-theoretical properties like "being equal to the number of the set of all subsets of the natural numbers". They do, however, include properties that no number has, such as that expressed by the open formula (y)y=x.)
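The three-way case split behind the induction rule can be illustrated on a finite initial segment of the domain. This Python sketch is ours: the function induction_case and its labels are hypothetical, F must be a decidable property supplied as a Python predicate, and the search covers only 0, 1, ..., bound-1, so case (i) is confirmed only up to the bound, not for the whole domain.

```python
from typing import Callable, Optional


def induction_case(F: Callable[[int], bool], bound: int) -> tuple[str, Optional[int]]:
    """Place F, restricted to 0..bound-1 (bound >= 1), in one of the three cases."""
    if not F(0):
        return ("zero_lacks", 0)        # case (ii): 0 lacks F
    for n in range(bound - 1):
        if F(n) and not F(n + 1):
            return ("break", n)         # case (iii): n has F, n+1 does not
    # F(0) holds and F never fails between neighbours, so every n < bound has F.
    return ("all", None)                # case (i), up to the bound


def is_prime(n: int) -> bool:
    """'Being a prime number', the paper's example of an arithmetic property."""
    return n >= 2 and all(n % d != 0 for d in range(2, int(n ** 0.5) + 1))
```

For example, induction_case(lambda n: n < 7, 20) returns ("break", 6), and induction_case(is_prime, 20) returns ("zero_lacks", 0). Reaching the final return means F held at 0 and passed from each n to n+1, which is the reasoning the paper applies to the whole domain.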

The remaining axioms and rules of inference of PA belong to first-order predicate logic, and it is well known that these axioms are valid in the formal Tarski-style semantics above and that the rules preserve validity. Therefore all theorems of PA are valid. But since no two formulae A and ~A can both be valid (by 2.2.2(i), no model assigns T to both), it follows that PA is consistent.
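This final step has the same shape as the truth-table consistency proof for two-valued propositional logic mentioned in the introduction: every axiom is true under every valuation, the rule of inference preserves this, and no valuation makes both A and ~A true. A minimal Python sketch of that propositional case, assuming Łukasiewicz's three axiom schemes with modus ponens as the rule (our choice; the paper does not specify a system). Unlike the PA case, here every check is over a finite truth table.

```python
from itertools import product

VALUES = (True, False)


def implies(p: bool, q: bool) -> bool:
    """A => B =df ~(A & ~B), as in section 1.3."""
    return not (p and not q)


# Axiom schemes of one standard Hilbert system for propositional logic
# (Lukasiewicz's; our choice for illustration), as truth functions of A, B, C.
SCHEMES = {
    "A => (B => A)": lambda a, b, c: implies(a, implies(b, a)),
    "(A => (B => C)) => ((A => B) => (A => C))":
        lambda a, b, c: implies(implies(a, implies(b, c)),
                                implies(implies(a, b), implies(a, c))),
    "(~A => ~B) => (B => A)":
        lambda a, b, c: implies(implies(not a, not b), implies(b, a)),
}


def is_tautology(f) -> bool:
    """True iff f takes the value T under every assignment of T/F to A, B, C."""
    return all(f(a, b, c) for a, b, c in product(VALUES, repeat=3))


def modus_ponens_preserves_truth() -> bool:
    """In every row of the truth table, if A and A => B are true, so is B."""
    return all(implies(a and implies(a, b), b) for a, b in product(VALUES, repeat=2))


def contradiction_ever_true() -> bool:
    """Does any row make both A and ~A true?"""
    return any(a and not a for a in VALUES)
```

All three schemes pass is_tautology, modus ponens preserves truth, and contradiction_ever_true() returns False, so no pair A, ~A can both be theorems of this propositional system.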

3. Philosophical consequences of semantic consistency

In a frequently cited paper written almost 50 years ago, Hilary Putnam says the following in connection with Gödel's incompleteness theorem:

"It has sometimes been contended ... that a Turing machine cannot serve as a model for the human mind, but this is simply a mistake.

"Let T be a Turing machine which 'represents' me in the sense that T can prove just the mathematical statements I can prove. Then the argument ... is that by using Gödel's technique I can discover a proposition that T cannot prove, and moreover I can prove this proposition. This refutes the assumption that T 'represents' me, hence I am not a Turing machine. The fallacy is a misapplication of Gödel's theorem, pure and simple. Given an arbitrary machine T, all I can do is find a proposition U such that I can prove:

(3) If T is consistent, U is true,

where U is undecidable by T if T is in fact consistent. However, T can perfectly well prove (3) too! And the statement U, which T cannot prove (assuming consistency), I cannot prove either (unless I can prove that T is consistent, which is unlikely if T is very complicated)!" (Putnam (1960/1975), p. 366)

If T is a Peano-arithmetic-proving machine, capable of outputting all and only the theorems of PA, then Putnam's observation is unassailable. A human being can prove nothing other than what can be proved in Peano arithmetic unless the human being can prove that PA is consistent. Despite his warning, however, many attempts have been made since 1960 to do what Putnam asserted cannot be done, namely to use Gödel's theorem to argue that human minds are not Turing machines and that mechanism is false.¹ I include myself in this list of what Putnam would classify as "failed attempts", having argued that, whether PA is consistent or not, the set of propositions a human being can know to be true is different from the set of theorems a Turing machine can prove.²

The argument goes as follows:

Let G denote the Gödel sentence, which says of itself that it is unprovable in Peano arithmetic. There are two alternatives. (1) If PA is consistent, then G is unprovable but true. (2) If PA is inconsistent, then G is provable but false. Either way, provability and truth part company, and human beings can make judgments of truth and falsehood which elude the theorem-proving capacities of PA-machines.

In reply, a supporter of mechanism could maintain, with Putnam, that a Turing machine can prove the conditional PA sentences that we accept as true:

(1) If Cons(PA) then ~Bew[G]

(2) If ~Cons(PA) then Bew[G]

("Bew" is the PA predicate "beweisbar", provable.) The result for the mechanist is a draw, neither humans nor machines being entitled to claim victory. But the picture changes if PA is shown to be consistent.

Although PA cannot prove its own consistency using axioms and rules of inference, as we have seen there exists a semantic consistency proof which we humans can accept but which PA-machines cannot reproduce. The reason why a Turing machine cannot deal with a semantic proof is that the machine recognizes only symbols on its input tape, and its response is confined to outputting more symbols. But a semantic proof contains as an essential component a nonlinguistic domain of elements which a Turing machine cannot access except through the medium of symbols. To "fully formalize" a semantic proof would require symbolizing the domain and axiomatizing its structure, that is to say, converting the semantic proof into a syntactic proof. But as Gödel showed, the consistency of PA cannot be proved in PA, and if proved in a stronger axiomatic system it is contingent upon the consistency of that system. It follows that a conclusive, watertight consistency proof of arithmetic must necessarily be a semantic one. A human being can go through such a proof, detach the consequent of the conditional (1) above, and know for certain that the Gödel sentence is true. A Turing machine can't do this. Conclusion: human beings are not machines.

Footnotes

1 See Lucas (1961), (1996); Penrose (1989), (1994); Redhead (2004); Wright (1994). For criticism see Benacerraf (1967); Boolos (1990); Davis (1993); Dummett (1994); Raatikainen (2005a), (2005b); Shapiro (1998); and numerous short comments in Behavioral and Brain Sciences 13 (1990), pp. 655-92.

2 See McCall (1999), (2001). Critics of this approach include George and Velleman (2000); Tennant (2001); Raatikainen (2002) and (2005a), pp. 523-4.

References

Benacerraf, P. (1967) "God, the Devil, and Gödel", The Monist 51, pp. 9-32.

Boolos, G. (1990) "On 'seeing' the truth of the Gödel sentence", Behavioral and Brain Sciences 13, pp. 655-56.

Davis, M. (1993) "How subtle is Gödel's theorem? More on Roger Penrose", Behavioral and Brain Sciences 16, pp. 611-12.

Dummett, M. (1994) "Reply to Wright", in The Philosophy of Michael Dummett, ed. McGuinness and Oliveri, pp. 329-38.

George, A. and Velleman, D. (2000) "Leveling the playing field between mind and machine: a reply to McCall", The Journal of Philosophy 97, pp. 456-61.

Goodstein, R. L. (1965) Mathematical Logic.

Lucas, J. R. (1961) "Minds, machines, and Gödel", Philosophy 36, pp. 112-27.

Lucas, J. R. (1996) "Minds, machines, and Gödel: A retrospect", in Machines and Thought. The Legacy of Alan Turing, vol. 1, ed. Millican and Clark, pp. 103-24.

McCall, S. (1999) "Can a Turing machine know that the Gödel sentence is true?", The Journal of Philosophy 96, pp. 525-32.

McCall, S. (2001) "On 'seeing' the truth of the Gödel sentence", Facta Philosophica 3, pp. 25-29.

Mendelson, E. (1964) Introduction to Mathematical Logic.

Penrose, R. (1989) The Emperor's New Mind.

Penrose, R. (1994) Shadows of the Mind.

Putnam, H. (1960/1975) "Minds and machines", in Dimensions of Mind, ed. Sidney Hook; reprinted in Putnam's Mind, Language and Reality, 1975, pp. 362-85.

Raatikainen, P. (2002) "McCall's Gödelian argument is invalid", Facta Philosophica 4, pp. 167-69.

Raatikainen, P. (2005a) "On the philosophical relevance of Gödel's incompleteness theorems", Revue Internationale de Philosophie 59, pp. 513-34.

Raatikainen, P. (2005b) "Truth and provability -- A comment on Redhead", British Journal for the Philosophy of Science 56, pp. 611-13.

Redhead, M. (2004) "Mathematics and the mind", British Journal for the Philosophy of Science 55, pp. 731-37.

Shapiro, S. (1998) "Incompleteness, mechanism, and optimism", The Bulletin of Symbolic Logic 4, pp. 273-302.

Tennant, N. (2001) "On Turing machines knowing their own Gödel-sentences", Philosophia Mathematica 9, pp. 72-79.

Wright, C. (1994) "About 'The philosophical significance of Gödel's theorem': Some issues", in The Philosophy of Michael Dummett, ed. McGuinness and Oliveri, pp. 167-202.