Chapter 4

4.1 Properties of Discrete Distributions

The Poisson Approximation to the Binomial

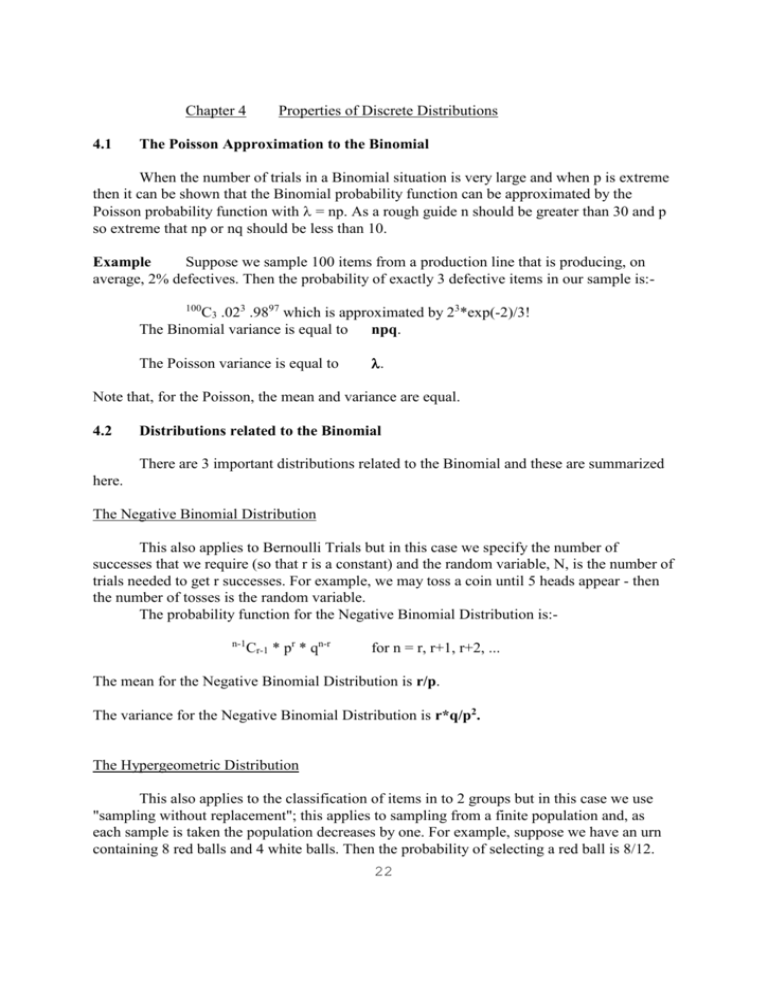

When the number of trials, n, in a Binomial situation is very large and p is extreme (very close to 0 or 1), it can be shown that the Binomial probability function can be approximated by the Poisson probability function with $\lambda = np$. As a rough guide, n should be greater than 30 and p so extreme that np or nq is less than 10, where $q = 1 - p$. When p is close to 1, the approximation is applied to the number of failures, with $\lambda = nq$.

Example

Suppose we sample 100 items from a production line that is producing, on average, 2% defectives. Then the probability of exactly 3 defective items in our sample is

$${}^{100}C_{3}\,(0.02)^{3}\,(0.98)^{97},$$

which is approximated by $2^{3} e^{-2} / 3!$, since $np = 100 \times 0.02 = 2$.
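The example above can be checked numerically. The following is a minimal sketch using only the Python standard library; the function names `binomial_pmf` and `poisson_pmf` are illustrative choices, not part of the original notes.

```python
from math import comb, exp, factorial

def binomial_pmf(r, n, p):
    """Exact Binomial probability of r successes in n trials."""
    return comb(n, r) * p**r * (1 - p)**(n - r)

def poisson_pmf(r, lam):
    """Poisson probability of r events when the mean is lam."""
    return lam**r * exp(-lam) / factorial(r)

n, p = 100, 0.02           # sample size and defective rate from the example
lam = n * p                # Poisson mean used in the approximation

exact = binomial_pmf(3, n, p)
approx = poisson_pmf(3, lam)

print(f"Binomial (exact):   {exact:.4f}")
print(f"Poisson (approx.):  {approx:.4f}")
```

The two values agree to about two decimal places, which is the kind of accuracy the rough guide (n greater than 30, np less than 10) suggests.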

The Binomial variance is equal to $npq$.

The Poisson variance is equal to $\lambda$, the Poisson mean.

Note that, for the Poisson, the mean and variance are equal.
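As a rough numerical illustration, the sketch below sums the Poisson probability function directly to recover its mean and variance, and compares the result with the Binomial variance npq for the production-line example (n = 100, p = 0.02). The truncation of the sum at 60 terms is an assumption made for convenience; the omitted tail is negligible for a mean of 2.

```python
from math import exp, factorial

def poisson_pmf(r, lam):
    """Poisson probability of r events when the mean is lam."""
    return lam**r * exp(-lam) / factorial(r)

n, p = 100, 0.02
q = 1 - p
lam = n * p                      # Poisson mean, equal to np

support = range(60)              # truncated support; the tail beyond this is negligible
poisson_mean = sum(r * poisson_pmf(r, lam) for r in support)
poisson_var = sum((r - poisson_mean) ** 2 * poisson_pmf(r, lam) for r in support)
binomial_var = n * p * q         # npq

print(f"Poisson mean:      {poisson_mean:.4f}")
print(f"Poisson variance:  {poisson_var:.4f}")
print(f"Binomial variance: {binomial_var:.4f}")
```

Because q is close to 1 when p is small, npq is close to np, which is why the two variances nearly coincide here.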

4.2 Distributions related to the Binomial

There are 3 important distributions related to the Binomial and these are summarized here.

The Negative Binomial Distribution

This also applies to Bernoulli Trials but in this case we specify the number of successes that we require (so that r is a constant) and the random variable, N, is the number of trials needed to get r successes. For example, we may toss a coin until 5 heads appear; the number of tosses is then the random variable.

The probability function for the Negative Binomial Distribution is:

$$P(N = n) = {}^{n-1}C_{r-1}\; p^{r}\, q^{n-r} \quad \text{for } n = r,\ r+1,\ r+2,\ \ldots$$

where $q = 1 - p$.

The mean for the Negative Binomial Distribution is r/p.

The variance for the Negative Binomial Distribution is $rq/p^{2}$.
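The coin-tossing example (r = 5 heads, p = 0.5) gives a convenient check of these formulas. The sketch below is illustrative only: the function name `negative_binomial_pmf` and the cutoff of 400 trials for the numerical sums are assumptions, chosen so that the neglected tail is far below floating-point precision.

```python
from math import comb

def negative_binomial_pmf(n, r, p):
    """P(N = n): probability that the r-th success occurs on trial n."""
    if n < r:
        return 0.0               # at least r trials are needed for r successes
    return comb(n - 1, r - 1) * p**r * (1 - p) ** (n - r)

r, p = 5, 0.5                    # toss a fair coin until 5 heads appear
q = 1 - p

trials = range(r, 400)           # truncated support n = r, r+1, ...
total = sum(negative_binomial_pmf(n, r, p) for n in trials)
mean = sum(n * negative_binomial_pmf(n, r, p) for n in trials)
var = sum((n - mean) ** 2 * negative_binomial_pmf(n, r, p) for n in trials)

print(f"Sum of probabilities: {total:.6f}")
print(f"Mean:     {mean:.6f}   (r/p     = {r / p})")
print(f"Variance: {var:.6f}   (rq/p^2  = {r * q / p**2})")
```

For this example both the mean and the variance come out at 10 tosses, matching r/p and rq/p^2.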

The Hypergeometric Distribution

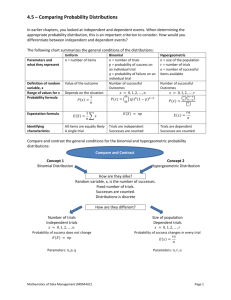

This also applies to the classification of items into 2 groups but in this case we use "sampling without replacement"; this applies to sampling from a finite population and, as each item is sampled, the population decreases by one. For example, suppose we have an urn containing 8 red balls and 4 white balls. Then the probability of selecting a red ball is 8/12.

We select a ball at random and it happens to be red, and we do not return it to the urn. The probability of the next ball sampled being red is now 7/11, and so the trials are not independent: the probability of red at the second drawing is affected by the result of the first drawing.

In general, if we have a population of N items made up of R "successes" and (N-R) "failures", then the probability that a random sample of n contains r successes is given by the Hypergeometric probability function,

$$\frac{{}^{R}C_{r}\; {}^{N-R}C_{n-r}}{{}^{N}C_{n}}, \qquad a \le r \le b,$$

where $a = \max(0,\ n-N+R)$ and $b = \min(n,\ R)$.

The mean for the Hypergeometric Distribution is nR/N.

The variance for the Hypergeometric Distribution is

$$\frac{nR(N-R)(N-n)}{(N-1)N^{2}}.$$
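The urn example (N = 12 balls, R = 8 red) can be used to check the probability function and the two moment formulas. The sketch below uses exact fractions from the standard library; the function name `hypergeometric_pmf` and the sample size n = 3 used for the moment check are assumptions made for illustration.

```python
from fractions import Fraction
from math import comb

def hypergeometric_pmf(r, N, R, n):
    """Probability that a sample of n (without replacement) contains r successes."""
    a = max(0, n - N + R)        # fewest successes possible in the sample
    b = min(n, R)                # most successes possible in the sample
    if r < a or r > b:
        return Fraction(0)
    return Fraction(comb(R, r) * comb(N - R, n - r), comb(N, n))

N, R = 12, 8                     # urn with 8 red and 4 white balls

# Two reds in two draws: should equal (8/12) * (7/11) from the sequential argument.
two_reds = hypergeometric_pmf(2, N, R, 2)
print("P(2 reds in 2 draws) =", two_reds)

n = 3                            # illustrative sample size (not from the text)
support = range(max(0, n - N + R), min(n, R) + 1)
mean = sum(r * hypergeometric_pmf(r, N, R, n) for r in support)
var = sum((r - mean) ** 2 * hypergeometric_pmf(r, N, R, n) for r in support)

print("Mean     =", mean, " formula:", Fraction(n * R, N))
print("Variance =", var, " formula:", Fraction(n * R * (N - R) * (N - n), (N - 1) * N**2))
```

Working with `Fraction` avoids rounding, so the summed moments can be compared with the closed-form expressions exactly.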

The Multinomial Distribution

This applies to the classification of items into k groups and in this case we use "sampling with replacement"; this also applies to sampling from an infinite population. It is a direct generalization of the Binomial from 2 to many classifications.

Let us suppose that we have k groups with classification probabilities $p_1, p_2, \ldots, p_k$ and a random sample of n items is taken. Then the random variables are $R_1, R_2, \ldots, R_k$, representing the counts of items falling into each class.

Note that $R_1 + R_2 + \cdots + R_k = n$.

Then the probability function for the Multinomial distribution is the probability that $R_1 = r_1, R_2 = r_2, \ldots, R_k = r_k$, which can be shown to be:

$$\frac{n!}{r_1!\, r_2! \cdots r_k!}\; p_1^{r_1}\, p_2^{r_2} \cdots p_k^{r_k}$$

where all the r's range from 0 to n subject to $r_1 + r_2 + \cdots + r_k = n$.

The mean for $R_1$ is $np_1$, etc.

The variance for $R_1$ is $np_1 q_1$, where $q_1 = 1 - p_1$, etc.

Note that the marginal distribution of each of the R's is Binomial and that the Binomial is a special case of the Multinomial when k = 2.
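The claim about Binomial marginals can be checked by brute force for a small case. The sketch below is illustrative only: the function name `multinomial_pmf`, the choice of k = 3 classes, the probabilities (0.5, 0.3, 0.2) and the sample size n = 4 are all assumptions, not values from the text.

```python
from itertools import product
from math import comb, factorial, prod

def multinomial_pmf(counts, probs):
    """Probability of observing the given class counts in n = sum(counts) draws."""
    coeff = factorial(sum(counts))
    for r in counts:
        coeff //= factorial(r)   # exact: each partial quotient is an integer
    return coeff * prod(p**r for p, r in zip(probs, counts))

probs = (0.5, 0.3, 0.2)          # hypothetical classification probabilities
n = 4                            # hypothetical sample size
k = len(probs)

# All count vectors (r1, ..., rk) with r1 + ... + rk = n.
outcomes = [c for c in product(range(n + 1), repeat=k) if sum(c) == n]
total = sum(multinomial_pmf(c, probs) for c in outcomes)

# Marginal distribution of R1, obtained by summing over the other counts.
marginal = [sum(multinomial_pmf(c, probs) for c in outcomes if c[0] == r1)
            for r1 in range(n + 1)]
binomial = [comb(n, r1) * probs[0] ** r1 * (1 - probs[0]) ** (n - r1)
            for r1 in range(n + 1)]

print(f"Sum over all outcomes: {total:.6f}")
for r1 in range(n + 1):
    print(f"r1 = {r1}: marginal = {marginal[r1]:.6f}, Binomial = {binomial[r1]:.6f}")
```

Setting `probs = (p, 1 - p)` in the same function reproduces the Binomial probability function directly, which is the k = 2 special case.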
