Factor Analysis: Data Reduction & Structure Detection

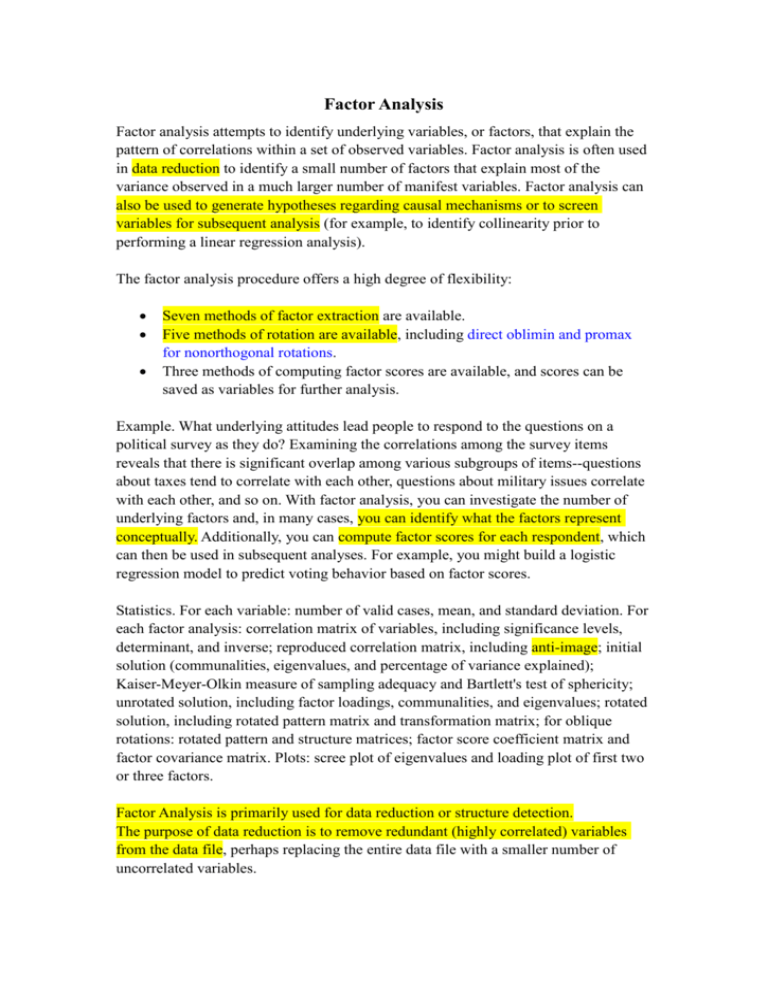

Factor analysis attempts to identify underlying variables, or factors, that explain the pattern of correlations within a set of observed variables. Factor analysis is often used in data reduction to identify a small number of factors that explain most of the variance observed in a much larger number of manifest variables. Factor analysis can also be used to generate hypotheses regarding causal mechanisms or to screen variables for subsequent analysis (for example, to identify collinearity prior to performing a linear regression analysis).

The factor analysis procedure offers a high degree of flexibility. Seven methods of factor extraction are available. Five methods of rotation are available, including direct oblimin and promax for nonorthogonal rotations. Three methods of computing factor scores are available, and scores can be saved as variables for further analysis.

Example. What underlying attitudes lead people to respond to the questions on a political survey as they do? Examining the correlations among the survey items reveals that there is significant overlap among various subgroups of items: questions about taxes tend to correlate with each other, questions about military issues correlate with each other, and so on. With factor analysis, you can investigate the number of underlying factors and, in many cases, you can identify what the factors represent conceptually. Additionally, you can compute factor scores for each respondent, which can then be used in subsequent analyses. For example, you might build a logistic regression model to predict voting behavior based on factor scores.

Statistics. For each variable: number of valid cases, mean, and standard deviation.
For each factor analysis: correlation matrix of variables, including significance levels, determinant, and inverse; reproduced correlation matrix, including anti-image; initial solution (communalities, eigenvalues, and percentage of variance explained); Kaiser-Meyer-Olkin measure of sampling adequacy and Bartlett's test of sphericity; unrotated solution, including factor loadings, communalities, and eigenvalues; rotated solution, including rotated pattern matrix and transformation matrix; for oblique rotations: rotated pattern and structure matrices; factor score coefficient matrix and factor covariance matrix.

Plots: scree plot of eigenvalues and loading plot of first two or three factors.

Factor Analysis is primarily used for data reduction or structure detection. The purpose of data reduction is to remove redundant (highly correlated) variables from the data file, perhaps replacing the entire data file with a smaller number of uncorrelated variables. The purpose of structure detection is to examine the underlying (or latent) relationships between the variables.

The Factor Analysis procedure has several extraction methods for constructing a solution.

For Data Reduction. The principal components method of extraction begins by finding a linear combination of variables (a component) that accounts for as much variation in the original variables as possible. It then finds another component that accounts for as much of the remaining variation as possible and is uncorrelated with the previous component, continuing in this way until there are as many components as original variables. Usually, a few components will account for most of the variation, and these components can be used to replace the original variables. This method is most often used to reduce the number of variables in the data file.

For Structure Detection.
Other Factor Analysis extraction methods go one step further by adding the assumption that some of the variability in the data cannot be explained by the components (usually called factors in other extraction methods). As a result, the total variance explained by the solution is smaller; however, the addition of this structure to the factor model makes these methods ideal for examining relationships between the variables.

With any extraction method, the two questions that a good solution should try to answer are "How many components (factors) are needed to represent the variables?" and "What do these components represent?"

An industry analyst would like to predict automobile sales from a set of predictors. However, many of the predictors are correlated, and the analyst fears that this might adversely affect her results. This information is contained in the file car_sales.sav. Use Factor Analysis with principal components extraction to focus the analysis on a manageable subset of the predictors.

Select Vehicle type through Fuel efficiency as analysis variables. Click Extraction. Select Scree plot. Click Continue. Click Rotation in the Factor Analysis dialog box. Select Varimax in the Method group. Click Continue. Click Scores in the Factor Analysis dialog box. Select Save as variables and Display factor score coefficient matrix. Click Continue. Click OK in the Factor Analysis dialog box.

These selections produce a solution using principal components extraction, which is then rotated for ease of interpretation. Components with eigenvalues greater than 1 are saved to the working file.

Communalities indicate the amount of variance in each variable that is accounted for. Initial communalities are estimates of the variance in each variable accounted for by all components or factors. For principal components extraction, this is always equal to 1.0 for correlation analyses.
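The statement that initial communalities equal 1.0 under principal components extraction can be checked by hand in the simplest case. The sketch below is illustrative only: it assumes two standardized variables with a made-up correlation of 0.6 (not taken from car_sales.sav), for which the principal component loadings have a closed form, and the function names are hypothetical. A communality is computed as the sum of a variable's squared loadings over the components that are kept.

```python
import math


def two_variable_loadings(r):
    """Principal component loadings for two standardized variables with
    correlation r (0 <= r < 1).

    The correlation matrix [[1, r], [r, 1]] has eigenvalues 1 + r and 1 - r.
    Each loading is an eigenvector entry (1/sqrt(2), up to sign) multiplied
    by the square root of the matching eigenvalue.
    """
    first = math.sqrt((1 + r) / 2)
    second = math.sqrt((1 - r) / 2)
    # Rows are variables, columns are components.
    return [[first, second],
            [first, -second]]


def communalities(loadings, n_components):
    """Sum of squared loadings over the first n_components, per variable."""
    return [sum(value ** 2 for value in row[:n_components]) for row in loadings]


# Hypothetical correlation, chosen only for illustration.
loadings = two_variable_loadings(0.6)
initial = communalities(loadings, 2)     # every component kept
extracted = communalities(loadings, 1)   # only the first component kept

print([round(c, 3) for c in initial])    # [1.0, 1.0]
print([round(c, 3) for c in extracted])  # [0.8, 0.8]
```

Keeping both components reproduces all of each variable's variance, while keeping only the first component leaves 20% of it unaccounted for in this made-up case.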
Extraction communalities are estimates of the variance in each variable accounted for by the components. The communalities in this table are all high, which indicates that the extracted components represent the variables well. If any communalities are very low in a principal components extraction, you may need to extract another component.

The variance explained by the initial solution, extracted components, and rotated components is displayed. The first section of the table shows the Initial Eigenvalues. The Total column gives the eigenvalue, or amount of variance in the original variables accounted for by each component. The % of Variance column gives the ratio, expressed as a percentage, of the variance accounted for by each component to the total variance in all of the variables. The Cumulative % column gives the percentage of variance accounted for by the first n components. For example, the cumulative percentage for the second component is the sum of the percentage of variance for the first and second components. For the initial solution, there are as many components as variables, and in a correlation analysis, the sum of the eigenvalues equals the number of components. You have requested that eigenvalues greater than 1 be extracted, so the first three principal components form the extracted solution.

The second section of the table shows the extracted components. They explain nearly 88% of the variability in the original ten variables, so you can considerably reduce the complexity of the data set by using these components, with only a 12% loss of information.

The rotation maintains the cumulative percentage of variation explained by the extracted components, but that variation is now spread more evenly over the components. The large changes in the individual totals suggest that the rotated component matrix will be easier to interpret than the unrotated matrix.

The scree plot helps you to determine the optimal number of components.
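The Total, % of Variance, and Cumulative % columns described above are simple arithmetic on the eigenvalues. As a rough sketch, using five made-up eigenvalues rather than the car sales output and a hypothetical function name, the columns and the eigenvalue-greater-than-1 extraction rule could be computed like this:

```python
def variance_table(eigenvalues, cutoff=1.0):
    """Return (rows, n_extracted) for eigenvalues sorted largest first.

    Each row is (total, percent_of_variance, cumulative_percent). For a
    correlation analysis the eigenvalues sum to the number of variables.
    """
    total_variance = sum(eigenvalues)
    rows = []
    cumulative = 0.0
    for eigenvalue in eigenvalues:
        percent = 100 * eigenvalue / total_variance
        cumulative += percent
        rows.append((eigenvalue, percent, cumulative))
    # Components whose eigenvalue exceeds the cutoff form the extracted solution.
    n_extracted = sum(1 for eigenvalue in eigenvalues if eigenvalue > cutoff)
    return rows, n_extracted


# Hypothetical eigenvalues for five variables (they sum to 5).
rows, n_extracted = variance_table([2.5, 1.5, 0.6, 0.3, 0.1])
for total, percent, cumulative in rows:
    print(f"{total:5.2f} {percent:6.1f} {cumulative:6.1f}")
print("components extracted:", n_extracted)
```

With these illustrative values, the first two components have eigenvalues above 1 and together account for 80% of the variance.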
The eigenvalue of each component in the initial solution is plotted. Generally, you want to extract the components on the steep slope. The components on the shallow slope contribute little to the solution. The last big drop occurs between the third and fourth components, so using the first three components is an easy choice.

The rotated component matrix helps you to determine what the components represent. The first component is most highly correlated with Price in thousands and Horsepower. Price in thousands is a better representative, however, because it is less correlated with the other two components. The second component is most highly correlated with Length. The third component is most highly correlated with Vehicle type. This suggests that you can focus on Price in thousands, Length, and Vehicle type in further analyses, but you can do even better by saving component scores.

For each case and each component, the component score is computed by multiplying the case's original variable values by the component's score coefficients. The resulting three component score variables are representative of, and can be used in place of, the ten original variables with only a 12% loss of information. Using the saved components is also preferable to using Price in thousands, Length, and Vehicle type because the components are representative of all ten original variables, and the components are not linearly correlated with each other.

Although the linear correlation between the components is guaranteed to be 0, you should look at plots of the component scores to check for outliers and nonlinear associations between the components.

To produce a scatterplot matrix of the component scores, from the menus choose: Graphs > Scatter/Dot... Select Matrix Scatter. Click Define. Select REGR factor score 1 for analysis 1 through REGR factor score 3 for analysis 1 as the matrix variables.
Click OK.

You can reduce the size of the data file from ten variables to three components by using Factor Analysis with a principal components extraction. Note that the interpretation of further analyses is dependent upon the relationships defined in the rotated component matrix. This step of "translation" complicates things slightly, but the benefits of reducing the data file and using uncorrelated predictors outweigh this cost.

A telecommunications provider wants to better understand service usage patterns in its customer database. If services can be clustered by usage, the company can offer more attractive packages to its customers. A random sample from the customer database is contained in telco.sav. Use Factor Analysis to determine the underlying structure in service usage.

Select Long distance last month through Wireless last month and Multiple lines through Electronic billing as analysis variables. Click Descriptives. Select Anti-image and KMO and Bartlett's test of sphericity. Click Continue. Click Extraction in the Factor Analysis dialog box. Select Principal axis factoring from the Method list. Select Scree plot. Click Continue. Click Rotation in the Factor Analysis dialog box. Select Varimax in the Method group. Select Loading plot(s) in the Display group. Click Continue. Click OK in the Factor Analysis dialog box.

These selections produce a solution using Principal axis factoring extraction, which is then given a Varimax rotation.

This table shows two tests that indicate the suitability of your data for structure detection. The Kaiser-Meyer-Olkin Measure of Sampling Adequacy is a statistic that indicates the proportion of variance in your variables that might be caused by underlying factors. High values (close to 1.0) generally indicate that a factor analysis may be useful with your data. If the value is less than 0.50, the results of the factor analysis probably won't be very useful.
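As a rough illustration of what the Kaiser-Meyer-Olkin statistic measures, the overall value is commonly defined by comparing squared correlations with squared partial correlations, where the partial correlations are obtained from the inverse of the correlation matrix. The sketch below follows that standard definition in plain Python. The helper names and the two small correlation matrices are made up for illustration and are not taken from telco.sav, and the code is not claimed to be the procedure's own implementation.

```python
def invert(matrix):
    """Invert a square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(matrix)
    # Augment the matrix with the identity matrix on the right.
    aug = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [v - factor * p for v, p in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def kmo(corr):
    """Overall Kaiser-Meyer-Olkin measure computed from a correlation matrix."""
    n = len(corr)
    inv = invert(corr)
    sum_r2 = 0.0  # squared off-diagonal correlations
    sum_p2 = 0.0  # squared off-diagonal partial correlations
    for i in range(n):
        for j in range(n):
            if i != j:
                partial = -inv[i][j] / (inv[i][i] * inv[j][j]) ** 0.5
                sum_r2 += corr[i][j] ** 2
                sum_p2 += partial ** 2
    return sum_r2 / (sum_r2 + sum_p2)


# Hypothetical matrix: four variables that all correlate 0.8 with each other.
shared = [[1.0 if i == j else 0.8 for j in range(4)] for i in range(4)]
# Hypothetical matrix: variables 1 and 3 are related only through variable 2.
chain = [[1.0, 0.5, 0.0],
         [0.5, 1.0, 0.5],
         [0.0, 0.5, 1.0]]

print(round(kmo(shared), 3))  # 0.871
print(round(kmo(chain), 3))   # 0.391
```

In the first matrix the correlations are large relative to the partial correlations, so the value is close to 1.0. In the second the partial correlations dominate and the value falls below 0.50.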
Bartlett's test of sphericity tests the hypothesis that your correlation matrix is an identity matrix, which would indicate that your variables are unrelated and therefore unsuitable for structure detection. Small values (less than 0.05) of the significance level indicate that a factor analysis may be useful with your data.

Initial communalities are, for correlation analyses, the proportion of variance accounted for in each variable by the rest of the variables. Extraction communalities are estimates of the variance in each variable accounted for by the factors in the factor solution. Small values indicate variables that do not fit well with the factor solution and should possibly be dropped from the analysis. The extraction communalities for this solution are acceptable, although the lower values of Multiple lines and Calling card show that they don't fit as well as the others.

The leftmost section of this table shows the variance explained by the initial solution. Only three factors in the initial solution have eigenvalues greater than 1. Together, they account for almost 65% of the variability in the original variables. This suggests that three latent influences are associated with service usage, but there remains room for a lot of unexplained variation.

The second section of this table shows the variance explained by the extracted factors before rotation. The cumulative variability explained by these three factors in the extracted solution is about 55%, roughly 10 percentage points less than in the initial solution. Thus, about 10 percentage points of the variation explained by the initial solution are lost due to latent factors unique to the original variables and variability that simply cannot be explained by the factor model.

The rightmost section of this table shows the variance explained by the extracted factors after rotation. The rotated factor model makes some small adjustments to factors 1 and 2, but factor 3 is left virtually unchanged.
Look for changes between the unrotated and rotated factor matrices to see how the rotation affects the interpretation of the first and second factors.

The scree plot confirms the choice of three components.

The relationships in the unrotated factor matrix are somewhat clear.

The factor transformation matrix describes the specific rotation applied to your factor solution. This matrix is used to compute the rotated factor matrix from the original (unrotated) factor matrix. Smaller off-diagonal elements correspond to smaller rotations. Larger off-diagonal elements correspond to larger rotations.

The third factor is largely unaffected by the rotation, but the first two are now easier to interpret. Thus, there are three major groupings of services, as defined by the services that are most highly correlated with the three factors. Given these groupings, you can make the following observations about the remaining services. Because of their moderately large correlations with both the first and second factors, Wireless last month, Voice mail, and Paging service bridge the "Extras" and "Tech" groups. Calling card last month is moderately correlated with the first and third factors, so it bridges the "Extras" and "Long Distance" groups. Multiple lines is moderately correlated with the second and third factors, so it bridges the "Tech" and "Long Distance" groups.

This suggests avenues for cross-selling. For example, customers who subscribe to extra services may be more predisposed to accepting special offers on wireless services than Internet services.

If you think the relationships between your variables are nonlinear, the Bivariate Correlations procedure offers correlation coefficients that are more appropriate for nonlinear associations. If your analysis variables are not scale variables, you can try Hierarchical Cluster Analysis on the variables as an alternative to Factor Analysis for structure detection.
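To illustrate why a rank-based coefficient can suit a nonlinear association better than the usual linear coefficient, the sketch below compares the Pearson coefficient with Spearman's rank correlation on made-up data that follow a monotonic but nonlinear relationship. Spearman's rho is used here only as one example of such a coefficient; the data and function names are illustrative assumptions.

```python
def pearson(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cov / (ss_x * ss_y) ** 0.5


def ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        average_rank = (start + end) / 2 + 1
        for k in range(start, end + 1):
            result[order[k]] = average_rank
        start = end + 1
    return result


def spearman(x, y):
    """Spearman's rho: the Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


# Hypothetical data: y grows with x, but along a curve rather than a line.
x = [1, 2, 3, 4, 5, 6, 7, 8]
y = [value ** 3 for value in x]

print(round(pearson(x, y), 3))   # 0.932
print(round(spearman(x, y), 3))  # 1.0
```

The rank-based coefficient registers the perfectly monotonic relationship, while the linear coefficient understates it.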