advertisement

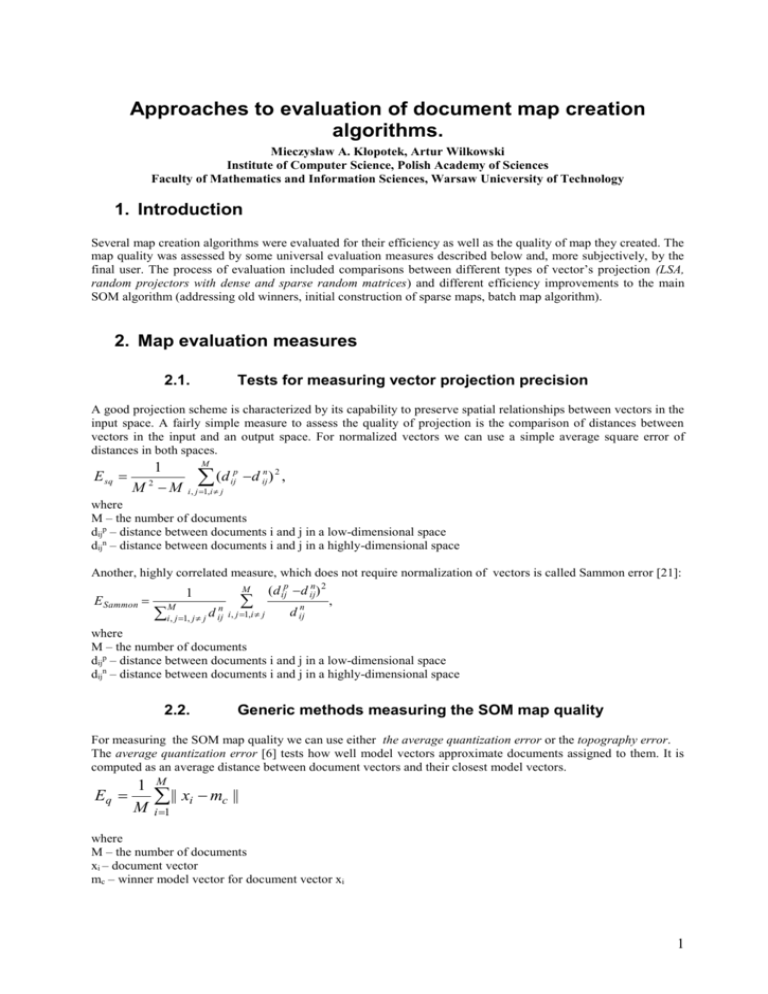

Approaches to evaluation of document map creation algorithms

Mieczysław A. Kłopotek, Artur Wilkowski
Institute of Computer Science, Polish Academy of Sciences
Faculty of Mathematics and Information Sciences, Warsaw University of Technology

1. Introduction

Several map creation algorithms were evaluated for their efficiency as well as for the quality of the maps they created. Map quality was assessed by the universal evaluation measures described below and, more subjectively, by the final user. The evaluation included comparisons between different types of vector projection (LSA, random projectors with dense and sparse random matrices) and different efficiency improvements to the main SOM algorithm (addressing old winners, initial construction of sparse maps, the batch map algorithm).

2. Map evaluation measures

2.1. Tests for measuring vector projection precision

A good projection scheme is characterized by its capability to preserve the spatial relationships between vectors of the input space. A fairly simple measure of projection quality is the comparison of distances between vectors in the input and in the output space. For normalized vectors we can use a simple average square error of distances in both spaces:

$$E_{sq} = \frac{1}{M^2} \sum_{i,j=1,\, i \neq j}^{M} \left( d_{ij}^{p} - d_{ij}^{n} \right)^2$$

where M is the number of documents, $d_{ij}^{p}$ is the distance between documents i and j in the low-dimensional space and $d_{ij}^{n}$ is the distance between documents i and j in the high-dimensional space.

Another, highly correlated measure, which does not require normalization of vectors, is the Sammon error [21]:

$$E_{Sammon} = \frac{1}{\sum_{i,j=1,\, i \neq j}^{M} d_{ij}^{n}} \sum_{i,j=1,\, i \neq j}^{M} \frac{\left( d_{ij}^{p} - d_{ij}^{n} \right)^2}{d_{ij}^{n}}$$

with M, $d_{ij}^{p}$ and $d_{ij}^{n}$ defined as above.

2.2. Generic methods measuring the SOM map quality

For measuring SOM map quality we can use either the average quantization error or the topography error.
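Returning briefly to the two projection-quality measures of Section 2.1, both can be computed directly from the documents' coordinates in the two spaces. The sketch below is a minimal illustration, not the authors' code: it assumes Euclidean distances (the text does not fix the distance function) and plain Python lists in which index i refers to the same document in both spaces; the function names are illustrative only.

```python
import math
from itertools import combinations


def euclidean(a, b):
    """Euclidean distance between two equal-length vectors (assumed metric)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def projection_errors(high, low):
    """Return (E_sq, E_Sammon) for documents given in the original (high)
    and projected (low) spaces; both lists hold vectors in the same order."""
    m = len(high)
    sq_sum = 0.0       # sum over ordered pairs i != j of (d^p - d^n)^2
    sammon_num = 0.0   # sum of (d^p - d^n)^2 / d^n
    sammon_den = 0.0   # sum of d^n
    for i, j in combinations(range(m), 2):
        d_n = euclidean(high[i], high[j])
        d_p = euclidean(low[i], low[j])
        diff2 = (d_p - d_n) ** 2
        sq_sum += 2 * diff2          # pair (j, i) contributes the same term
        if d_n > 0:                  # skip identical documents to avoid 0/0
            sammon_num += diff2 / d_n
            sammon_den += d_n
    e_sq = sq_sum / m ** 2
    # Both Sammon sums run over i != j; using unordered pairs doubles
    # numerator and denominator alike, so the ratio is unchanged.
    e_sammon = sammon_num / sammon_den if sammon_den > 0 else 0.0
    return e_sq, e_sammon
```

For example, two documents at distance 5 in the original space and 10 after projection give E_sq = 2 · 25 / 4 = 12.5 and a Sammon error of (25 / 5) / 5 = 1.0.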
The average quantization error [6] tests how well model vectors approximate the documents assigned to them. It is computed as the average distance between document vectors and their closest model vectors:

$$E_q = \frac{1}{M} \sum_{i=1}^{M} \lVert x_i - m_c \rVert$$

where M is the number of documents, $x_i$ is a document vector and $m_c$ is the winner model vector for document vector $x_i$.

The other measure is the topography error [19]. It measures map orderliness, i.e. how well the map topology reflects the similarity relationships between clusters. To this end, the proportion of documents for which the 2 best matching model vectors do not lie in adjacent map units is calculated:

$$E_t = \frac{1}{M} \sum_{i=1}^{M} u(x_i)$$

where M is the document count and $u(x_i) = 1$ when the 2 best matching model vectors of document $x_i$ are not neighbours, and $u(x_i) = 0$ otherwise.

Both measures depend on the data set used. The proportion between them reflects the compromise between the local ordering of the data (their assignment to particular map units) and the approximation of data density by the model vectors. This proportion depends on the final width of the neighbourhood function, the diversity of the documents being mapped and the size of the map. Generally, the greater the final neighbourhood width, the lower the topography error and the greater the average quantization error.

Additionally, we used a very simple map smoothness measure to check how similar adjacent model vectors on the map are (map smoothness is one of the goals of the SOM algorithm). It is obtained by calculating the average distance between the model vectors of adjacent map units over the whole map.

In order to compare the map quality achieved by several modified SOM algorithms, a good idea is to create a reference map using the basic algorithm first and then match the outcomes of the other algorithms against it. However, several experiments revealed that it is extremely hard to compare two maps created even by the same algorithm.
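The average quantization error, the topography error and the smoothness measure described above can be sketched as follows. This is an illustration under stated assumptions: the map is a dictionary from (row, column) grid positions to model vectors, distances are Euclidean, and two units count as adjacent when they touch, diagonals included (the text does not specify the adjacency rule). The brute-force winner search is for clarity only.

```python
import math


def dist(a, b):
    """Euclidean distance between two vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def adjacent(u, v):
    """Assumed adjacency rule: distinct units that touch, diagonals included."""
    return max(abs(u[0] - v[0]), abs(u[1] - v[1])) == 1


def ranked_units(x, model):
    """Map units ordered by the distance of their model vector to document x."""
    return sorted(model, key=lambda u: dist(x, model[u]))


def quantization_error(docs, model):
    """Average distance between each document and its winner's model vector."""
    return sum(dist(x, model[ranked_units(x, model)[0]]) for x in docs) / len(docs)


def topography_error(docs, model):
    """Share of documents whose two best-matching units are not adjacent."""
    errors = 0
    for x in docs:
        best, second = ranked_units(x, model)[:2]
        if not adjacent(best, second):
            errors += 1
    return errors / len(docs)


def smoothness(model):
    """Average distance between the model vectors of adjacent units."""
    pairs = [(u, v) for u in model for v in model if u < v and adjacent(u, v)]
    return sum(dist(model[u], model[v]) for u, v in pairs) / len(pairs)
```

On a well-ordered one-row map with model values 0, 1 and 2 the topography error is 0; swapping in a "twisted" middle unit makes the two best matches of some documents non-adjacent, which is exactly what the topography error counts.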
The SOM map as a whole behaves in a highly chaotic way: even a slight disturbance of the data or the introduction of some random factor (which is always the case, since document vectors are presented in random order and model vectors are initialized randomly) can make the resulting maps dramatically different, although both may be of the same quality. Different parts of the map can be rotated, scaled and twisted in many ways, yet they may still reflect the specific clustering structure enforced by the data set and the algorithm principles. Although sophisticated measures for comparing two SOM maps exist [20], for the purpose of the tests we developed a simplified one that seems sufficient provided that the maps are not very large (as it only takes into consideration the immediate neighbours of a map unit). This measure computes the proportion of pairs of documents that are neighbours on one map and are situated farther apart on the other:

$$E_{map} = \frac{1}{M^2} \sum_{i,j=1,\, i \neq j}^{M} \mathrm{testDist}\left(d_{ij}^{I}, d_{ij}^{II}\right)$$

$$\mathrm{testDist}\left(d_{ij}^{I}, d_{ij}^{II}\right) = \begin{cases} 1 & \text{if } \left(d_{ij}^{I} < 1.5 \wedge d_{ij}^{II} > 1.5\right) \vee \left(d_{ij}^{II} < 1.5 \wedge d_{ij}^{I} > 1.5\right) \\ 0 & \text{otherwise} \end{cases}$$

where M is the number of documents, $d_{ij}^{I}$ is the distance (in map units) between documents i and j on the first map and $d_{ij}^{II}$ is the distance between them on the second map.

2.3. Data-set specific methods for measuring map quality

An efficient measure of map quality using a priori knowledge about the structure of the data set was proposed by Kohonen in [6]. Although the categorization of web pages, with which this work is concerned, may not be as obvious as the categorization of scientific document abstracts, it is still possible. For this purpose we explored the portal republika.pl, which has the advantage of cataloguing a variety of web pages manually organized into a topical structure.
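The simplified map-comparison measure of Section 2.2 needs only the grid position of each document's winner unit on the two maps. The sketch below assumes Euclidean distances between (row, column) positions and the 1.5-unit neighbourhood threshold from the definition; the function names are illustrative. Because it looks only at pairwise unit distances, it is unaffected when one map is a mirrored or rotated copy of the other, which is the kind of variation between runs described in that section.

```python
import math
from itertools import combinations


def unit_distance(u, v):
    """Euclidean distance between two map units given as (row, column)."""
    return math.hypot(u[0] - v[0], u[1] - v[1])


def map_difference(units_a, units_b, threshold=1.5):
    """Share of ordered document pairs (i != j) that are neighbours on one map
    but farther apart on the other. units_a[i] / units_b[i] is the winner unit
    of document i on the first / second map."""
    m = len(units_a)
    mismatches = 0
    for i, j in combinations(range(m), 2):
        near_a = unit_distance(units_a[i], units_a[j]) < threshold
        near_b = unit_distance(units_b[i], units_b[j]) < threshold
        if near_a != near_b:
            mismatches += 2   # (i, j) and (j, i) both count, as in the sum over i != j
    return mismatches / m ** 2
```

For instance, mirroring every position of a 14 x 14 map as (13 - row, 13 - column) leaves the measure at 0, while moving one of two neighbouring documents far away on the second map produces a mismatch.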
What is more, the hierarchical structure of the catalogue can easily be established from the URL addresses of the web pages. We used this property to determine the degree of expected similarity between web pages (without in fact performing any categorization). As a measure of topical similarity we took the distance between documents in the directory structure: two documents in the same directory were assigned distance 0, those from directories in a parent-child relationship distance 1, those from sibling directories distance 2, and so on. Having thus established a metric of category distance, we directly used a cluster purity measure similar to the one suggested by Kohonen. For each map unit, the number of documents belonging to the dominant category is calculated; documents from other categories are treated as errors. The average is then computed over the whole map.

The cluster purity measure adopted in this work is only a very imperfect approximation of a measure based on manually established categories; for instance, it does not take overlapping categories into consideration. During the tests several problems also stemmed from the fact that even topically similar web pages were assigned to completely different categories (for instance, web pages devoted to horses could appear both in sport/horseriding and in hobby/animals), and therefore some of them were classified as errors. However, the strong correlation we observed between the use of simplified map generation algorithms and the decline in the measure value indicates that this measure generally evaluates the quality of the generated map clusters well. Bearing in mind that similar documents are not bound to occupy single map units but may also occupy adjacent units (a map cluster may contain several map units), we also developed measures that calculate the average distances between documents belonging to categories of different degrees of similarity (e.g.
the average distance between documents belonging to the same category, between documents with a degree of similarity of 1, 2 and so on, according to the explicit "category distance" measure described above). Note that this measure must be used together with other measures, as it assigns the lowest values (which, for low category distances, are considered the best) to a map where all documents are located in a single map unit.

3. The evaluation of documents' vector projection

The main projection experiment was carried out on a corpus of 1000 documents collected from the servers of the web portal republika.pl. Of the 33450 keywords that remained after filtering with stop-word lists and word stemming, we removed those that appeared in fewer than three documents of the corpus. This left us with an input space of 4599 dimensions. Apart from applying direct measures for assessing the quality of the projected vectors (such as the Sammon error), for every configuration we ran the basic SOM algorithm (using the Kohonen learning rule) for 20 major iterations (about 100 iterations per map unit) to evaluate the accuracy of classification for different projection settings. The trained map consisted of 196 map units (a rectangular map of 14 x 14 units). The test was carried out for output vector dimensions 20, 30, 50, 100 and 200. As the projection results for dimension 200 proved comparable to those obtained using fully-dimensional vectors, we abandoned tests for higher dimensions. Of the projection methods described in the Methodology chapter, we evaluated the normally distributed random projector, the random projector with bipolar entries, the sparse random projector with the number of nonzero elements ranging from 1 to 5, and the projector based on SVD decomposition (LSA projector) computed with the Lanczos algorithm. The maps generated using the different projections were compared against maps created using the fully-dimensional space.
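The three kinds of random projector compared in this experiment can be sketched as follows. Their exact construction is given in the Methodology chapter, which is not part of this section, so the details here are assumptions: Gaussian entries drawn from N(0, 1), bipolar entries drawn uniformly from {-1, +1}, and, for the sparse projector, a fixed number of entries equal to 1 at random rows of each column. The LSA projector (SVD computed with the Lanczos algorithm) is not sketched.

```python
import random


def gaussian_projector(n_in, n_out, rng):
    """Dense n_out x n_in matrix with N(0, 1) entries (assumed distribution)."""
    return [[rng.gauss(0.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]


def bipolar_projector(n_in, n_out, rng):
    """Dense n_out x n_in matrix with entries drawn uniformly from {-1, +1}."""
    return [[rng.choice((-1.0, 1.0)) for _ in range(n_in)] for _ in range(n_out)]


def sparse_projector(n_in, n_out, nonzero, rng):
    """Sparse matrix stored by column: for each input term, the `nonzero`
    distinct output rows whose entry is 1 (assumed value); all others are 0."""
    return [rng.sample(range(n_out), nonzero) for _ in range(n_in)]


def project_dense(matrix, doc):
    """y = R x for a dense matrix given as a list of rows."""
    return [sum(w * x for w, x in zip(row, doc)) for row in matrix]


def project_sparse(columns, doc, n_out):
    """y = R x for the column-wise sparse matrix; only the document's nonzero
    terms are visited, each touching `nonzero` output entries."""
    out = [0.0] * n_out
    for term, weight in enumerate(doc):
        if weight:
            for row in columns[term]:
                out[row] += weight
    return out
```

In the setting above, n_in would be 4599 and n_out 200 (or 100), with between 1 and 5 nonzero entries per column. Storing the sparse matrix column-wise makes the projection cost grow with the number of nonzero entries, which is in line with the projection times reported in Figure 2.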
One instance of the fully-dimensional map was also used as the reference map for direct map comparisons. To increase statistical accuracy, each test consisted of six consecutive algorithm runs. The whole test took about 20 hours. All tests were completed on a Celeron 600 computer with 192 MB RAM.

Projection | Sammon error | Cluster purity (%) | Average quantization error | Topography error | Category distance (0) | Category distance (1) | Distance to the reference map | Projection time (s) | SOM algorithm time (s)
Full-size vectors | - | 67,4 ± 0,8 | 0,3596 ± 0,0005 | 0,0180 ± 0,0070 | 0,67 ± 0,06 | 2,57 ± 0,08 | 0,0250 ± 0,0010 | - | 1076,0 ± 7,0
LSA | 0,0168 | 68,4 ± 1,4 | 0,2850 ± 0,0011 | 0,0300 ± 0,0100 | 0,65 ± 0,08 | 2,50 ± 0,20 | 0,0254 ± 0,0009 | 19,990 ± 0,180 | 410,9 ± 1,9
Random pr. Gaussian | 0,0032 ± 0,0015 | 66,2 ± 1,3 | 0,3620 ± 0,0100 | 0,0302 ± 0,0060 | 0,89 ± 0,09 | 2,63 ± 0,18 | 0,0314 ± 0,0013 | 8,121 ± 0,005 | 409,4 ± 0,6
Random pr. bipolar | 0,0050 ± 0,0020 | 66,5 ± 1,3 | 0,3460 ± 0,0160 | 0,0308 ± 0,0050 | 0,98 ± 0,05 | 2,90 ± 0,30 | 0,0311 ± 0,0009 | 8,159 ± 0,008 | 410,5 ± 1,5
Random pr. sparse (5 nonzero) | 0,0027 ± 0,0004 | 66,3 ± 0,7 | 0,3540 ± 0,0080 | 0,0220 ± 0,0050 | 0,86 ± 0,10 | 2,65 ± 0,16 | 0,0301 ± 0,0014 | 0,820 ± 0,050 | 345,0 ± 3,0

Figure 1. Comparison of different types of projection for model vector dimension 200 (standard deviations are taken as error margins)

The most distinct observation from the results in Figure 1 is the very good performance of the LSA projector. Its classification accuracy, measured by cluster purity, is even slightly better than that obtained using full-size model vectors. This is also reflected in lower values of the average quantization error. The other measures, except for the topography error, are comparable to those obtained using fully-dimensional vectors, particularly the average distance between documents belonging to the same category (category distance (0)). It must also be noted that the degree of similarity with the reference map is virtually the same for the LSA projection and for full-size document vectors.
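The cluster purity and category distance figures rest on the directory-based metric of Section 2.3. The sketch below is one possible reading of that description, under stated assumptions: a document's category is the directory part of its URL path, the category distance is the number of edges between two directories in the directory tree (which yields 0 for the same directory, 1 for parent and child and 2 for siblings, as in the text), and cluster purity is pooled over all documents rather than averaged per unit. The function names are illustrative only.

```python
from collections import Counter
from urllib.parse import urlparse


def directory(url):
    """Directory components of a URL path (the last component is assumed
    to be a file name unless the path ends with '/')."""
    path = urlparse(url).path
    return tuple(p for p in path[: path.rfind("/")].split("/") if p)


def category_distance(a, b):
    """Edges between two directories in the tree:
    same directory 0, parent-child 1, siblings 2, and so on."""
    common = 0
    for x, y in zip(a, b):
        if x != y:
            break
        common += 1
    return len(a) + len(b) - 2 * common


def cluster_purity(assignments):
    """assignments: list of (map_unit, category) pairs. Share of documents
    belonging to the dominant category of their map unit."""
    per_unit = {}
    for unit, cat in assignments:
        per_unit.setdefault(unit, Counter())[cat] += 1
    dominant = sum(c.most_common(1)[0][1] for c in per_unit.values())
    return dominant / len(assignments)
```

For instance, for the hypothetical URL "http://example.org/sport/horseriding/a.html", directory() returns ('sport', 'horseriding'), whose distance to ('hobby', 'animals') is 4. This also illustrates the horse-pages problem noted in Section 2.3: topically close pages can end up far apart in the directory tree.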
The strong LSA results can, of course, be partly attributed to possible deficiencies of the evaluation methods adopted (which only aim at approximating human perception of the map), but they may also demonstrate the ability of the LSA algorithm to remove noise from the data and to emphasize important relationships, as suggested in [7]. The second important point about the Lanczos algorithm is its fairly good efficiency compared with the running time of the basic SOM algorithm used subsequently. Moreover, the projection performed using the Lanczos algorithm proved to be only a little more than 2 times slower than the random projection using a completely filled random matrix. However, the efficiency comparison with the sparse-matrix random projection is much in favour of the latter (20 s vs. 0,8 s). Similarly to the results obtained in [6], the random projectors as a group performed very well: their classification accuracy was only about 1 percentage point lower than that achieved with full-size document vectors. With regard to the other measures, the random projector family also seems to work very well. Sparse random projectors even tend to give a better topological structure (measured by the topography error) than any other projection scheme. However, the degree of similarity to the reference map is much lower for randomly projected documents than for the LSA projection. (It is worth noting at this point that the measure directly comparing the spatial relationships of the original and projected vectors, the Sammon error, does not necessarily reflect the quality of projection: the LSA projection, although it scores worse in this test, performs better on most of the other measures.) It is also interesting to observe that the map quality measures are to a large extent independent of the sparsity of the random projection matrix. The projection results for different numbers of nonzero elements in a matrix column are presented in Figure 2.
Number of nonzero elements in a column | Cluster purity (%) | Projection time (s) | SOM algorithm time (s)
1 | 64,0 ± 0,2 | 0,430 ± 0,030 | 196,0 ± 3,0
2 | 64,0 ± 2,0 | 0,550 ± 0,040 | 266,0 ± 4,0
3 | 67,0 ± 1,1 | 0,636 ± 0,019 | 307,0 ± 4,0
4 | 65,5 ± 1,7 | 0,720 ± 0,040 | 331,0 ± 3,0
5 | 66,3 ± 0,7 | 0,820 ± 0,050 | 345,0 ± 3,0
200 | 66,5 ± 1,3 | 8,159 ± 0,008 | 410,5 ± 1,5

Figure 2. Relationships between the number of nonzero elements in a column of a random projector, cluster purity, and the projection and SOM algorithm durations. Document vector dimension 200 (standard deviations are taken as error margins)

As can easily be noted, on the basis of the number of nonzero elements in the random matrix alone it is quite difficult to distinguish a better or a worse projection. What is more, for output dimensionality 200 the sparse random projector with as few as one nonzero element in each column performed not much worse than the random projection with a completely filled matrix. This could be attributed to the quite small number of documents involved in the experiment compared with the output dimensionality. This allows a direct mapping of one element of the high-dimensional space to one element of the destination space without a great risk of confusing two different words represented by the same unit of the output vector. Therefore, when a large number of varied documents is involved, it is better to ensure that every word is mapped onto a large enough pattern of entries in the output space. The number of nonzero elements in the random matrix affects not only the projection time but also the running time of the main SOM algorithm (by up to a factor of 2 when the two extremes of matrix density are compared). This is a consequence of the structure of the projected vectors: the sparser the random projection matrix, the sparser the resulting vectors.
This, of course, makes the calculation of inner products between documents during the winner search phase much more efficient, provided that the search is optimized for sparse document vectors. For this reason it does not seem reasonable to invest in a dense random matrix: it greatly increases the computational overhead, while the quality decline caused by a sparse matrix is almost insignificant. In [6] the authors give 5 as the number of nonzero elements in each random matrix column, which should be sufficient even for large document collections. It should be mentioned that the LSA projector also produces dense output vectors, so the algorithm efficiency cannot be boosted by quick inner product computations. In this case the opportunity for improvement lies rather in its quality potential, namely in a further reduction of vector sizes (the cluster purity for 100-dimensional vectors and the LSA algorithm was close to 65%!).

During the tests both vector sizes 200 and 100 performed well in terms of map quality. For lower dimensionalities there was a more rapid decrease in cluster purity and in the other quality measures. Although vector sizes 100 and 200 work well with this specific document collection, this need not hold for all document collections, especially much larger ones, topically more varied ones or ones with a larger vocabulary. In [6] the authors adopted the value of 500 as sufficient even for a document collection counted in millions of documents. The cluster purity as a function of the projected vector dimensionality (for LSA and "dense" random projection) is displayed in Figure 3.

[Figure 3 (plot): Cluster Purity (0% to 80%) versus Dimension (0 to 250); series: Random Projection, LSA.]
Figure 3. Relations between the cluster purity and the size of document vectors used

4. The evaluation of the sped-up SOM creation methods

In order to evaluate the performance of different algorithms of fast SOM map creation against the main algorithm, we carried out a series of small-scale experiments on a text corpus containing 1000 web pages from the domain republika.pl. The documents were placed on a map of 14 x 14 units. Beforehand, the documents of dimensionality 4599 (after removing words appearing in fewer than 3 documents) were projected onto a 100-dimensional space using a random projector with 5 nonzero entries in each column of the matrix. With the basic SOM algorithm the map was trained for 20 major iterations, in each of which all documents from the corpus were presented to the neural network in random order. This gave more than 100 iterations per map unit. During the learning process the learning coefficient and the neighbourhood size were gradually reduced: quickly at the beginning and slowly at the end of the process. During the final stages about 11% of map units were updated in each step (which meant a final neighbourhood size of about 3 units).

The basic algorithm was compared with modified SOM methods. The speed-up was obtained by initially creating a sparse map approximating the large map and then fine tuning it. The fine-tuning algorithms we tested were the SOM algorithm based on the Kohonen learning rule and the Batch Map algorithm described in the Methodology chapter. Taking the unit length of the resulting map as 1, the initially created sparse maps were tested with unit lengths 1.7, 2.4, 2.7, 3.3, 3.7 and 5.3. We chose these unit lengths purposefully to avoid integer multiples of the resulting map unit length. We noticed that in such cases, especially for small unit lengths (2, 3) and with the batch map algorithm, the clusters "inherited" from the sparse map (created around map points directly underlying their counterparts on the sparse map) tend to remain stable and "dissolve" only very slightly into adjacent map points.
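For reference, the basic online SOM used as the baseline can be sketched as below. The text only states that the learning coefficient and the neighbourhood size shrink quickly at first and slowly later; the exponential schedule, the bubble (hard cut-off) neighbourhood, the random initialisation and the default parameter values are assumptions of this sketch, not the authors' settings. With 20 major iterations over 1000 documents on a 14 x 14 map, each unit wins on average 20 × 1000 / 196 ≈ 102 times, which appears to be what "more than 100 iterations per map unit" refers to. Away from the map border, an end radius of 2.5 covers 21 units, about 11% of the 196 units.

```python
import random


def train_som(docs, rows, cols, epochs=20, alpha=(0.5, 0.02),
              radius=(7.0, 2.5), seed=0):
    """Basic online SOM (Kohonen learning rule) on a rows x cols grid.
    alpha and radius are (start, end) values, decayed exponentially so that
    they shrink fast at the beginning and slowly at the end (assumed schedule)."""
    rng = random.Random(seed)
    dim = len(docs[0])
    units = [(r, c) for r in range(rows) for c in range(cols)]
    model = {u: [rng.random() for _ in range(dim)] for u in units}  # random init
    steps = epochs * len(docs)
    t = 0
    for _ in range(epochs):                      # one "major iteration"
        order = list(range(len(docs)))
        rng.shuffle(order)                       # documents in random order
        for i in order:
            x = docs[i]
            frac = t / max(steps - 1, 1)
            a = alpha[0] * (alpha[1] / alpha[0]) ** frac
            rad = radius[0] * (radius[1] / radius[0]) ** frac
            # Exhaustive winner search (squared Euclidean distance).
            win = min(units, key=lambda u: sum((m - v) ** 2
                                               for m, v in zip(model[u], x)))
            for u in units:                      # bubble neighbourhood update
                if (u[0] - win[0]) ** 2 + (u[1] - win[1]) ** 2 <= rad ** 2:
                    model[u] = [m + a * (v - m) for m, v in zip(model[u], x)]
            t += 1
    return model
```

A call such as train_som(docs, 14, 14) would mirror the map size used here; since every update moves a model vector part of the way towards a document, model vectors stay inside the range spanned by the initialisation and the data.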
The persistence of such inherited clusters could be addressed by reducing the final neighbourhood size, but this results in worse overall map ordering and an increase in the topography error. Fortunately, the problem turned out to be much less relevant when non-integer sparse map unit sizes were applied, so we adopted the latter approach.

For the tests of the batch map algorithm the sparse map was carefully trained for 20 major iterations, then a dense map was approximated and the batch map fine-tuning algorithm was applied for 5 iterations (the number of iterations suggested in [6]). The tests of fine tuning with the simple Kohonen rule comprised training the sparse map for 5, 10 or 15 major iterations and fine tuning the map for the remaining 15, 10 or 5 iterations with the Kohonen rule. For each set of parameters we conducted a series of 6 experiments, which as a whole took about 12 hours. All tests were completed on a Celeron 600 computer with 192 MB RAM.

For fine tuning lasting more than 10 iterations, the performance of fine tuning with the Kohonen rule, measured by the topography error, the average quantization error and cluster purity, was the same as or very close to that of the basic SOM algorithm. However, the speed-up factor was less than 2 in this case. The remaining methods proved more promising as far as efficiency gain is concerned. The relations between algorithm duration and cluster purity are presented in Figure 4.

[Figure 4 (plot): Classification accuracy (50% to 64%) versus Time (200 to 0); series: Basic Kohonen, Kohonen 15 5, Batch Map - 5.]
Figure 4. The comparison of the classification accuracy for the basic Kohonen algorithm, the Kohonen algorithm with 15 main iterations and 5 fine-tuning Kohonen steps, and the Kohonen algorithm with 5 batch map fine-tuning steps. Subsequent points on the graph represent increasing unit sizes of the sparse maps (obviously, the greater the unit size, the shorter the duration but also the lower the map precision).
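The densening step, in which a trained sparse map is used to approximate the full map before fine tuning, can be sketched as follows. The actual approximation is described in the Methodology chapter; bilinear interpolation between the four surrounding sparse-map units is a swapped-in, plausible choice and not necessarily the authors' method. Under this sketch's convention, a 14 x 14 map with sparse unit length 2.7 needs a 6 x 6 sparse map, and only the first row and column of the dense map coincide exactly with sparse units, whereas with unit length 2 every second row and column does. This is one way to picture why integer unit lengths favour the "inherited" clusters mentioned above.

```python
import math


def coarse_size(dense_size, unit):
    """Sparse-map units per side needed to cover dense_size dense units."""
    return math.ceil((dense_size - 1) / unit) + 1


def densen(coarse, dense_rows, dense_cols, unit):
    """Approximate a dense map from a sparse map whose units lie `unit` dense
    units apart, by bilinear interpolation (an assumed scheme).
    coarse[(i, j)] is the model vector at dense coordinates (i*unit, j*unit)."""
    dense = {}
    for r in range(dense_rows):
        for c in range(dense_cols):
            y, x = r / unit, c / unit        # position in sparse-map coordinates
            i, j = int(y), int(x)            # top-left sparse neighbour
            fy, fx = y - i, x - j            # fractional offsets
            corners = [((i, j), (1 - fy) * (1 - fx)), ((i + 1, j), fy * (1 - fx)),
                       ((i, j + 1), (1 - fy) * fx), ((i + 1, j + 1), fy * fx)]
            vec = None
            for key, w in corners:
                if w == 0:
                    continue                 # such a corner may lie outside the grid
                term = [w * v for v in coarse[key]]
                vec = term if vec is None else [a + b for a, b in zip(vec, term)]
            dense[(r, c)] = vec
    return dense
```

Because bilinear interpolation reproduces linear trends exactly, a sparse map whose model values grow linearly across the grid yields a dense map with the same linear trend, which is a convenient sanity check.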
Figure 4 shows that fine tuning with the simple Kohonen rule outperforms batch mapping for the same sparse map unit sizes. The difference is quite small: for the cluster purity measure it does not exceed 2 percentage points. The batch map algorithm dominates slightly in terms of efficiency, creating the map about 10% faster than the module using the Kohonen rule. It is interesting to note that the cluster purity values for the batch map algorithm stabilize a little above 55% even for very sparse initial maps. This indicates that 5 batch map steps (unlike 5 corresponding Kohonen major iterations) suffice to perform local ordering of the data even for very coarse map approximations. These good results may be deceptive, however, so we should not rely on them when judging the map as a whole. Figure 5, which visualises the relationship between algorithm duration and the topography error, shows a quick rise of the topography error in all cases for larger sparse map unit sizes (the turning point lies close to the map unit size 2.7).

[Figure 5 (plot): Topography error (0 to 0,1) versus Time (200 to 0); series: Basic Kohonen, Kohonen 15 5, Batch map 5.]
Figure 5. The comparison of the topography error for the basic Kohonen algorithm, the Kohonen algorithm with 15 main iterations and 5 fine-tuning Kohonen steps, and the Kohonen algorithm with 5 batch map fine-tuning steps.

This illustrates well that tuning methods (even the Batch Map with 5 steps for a small neighbourhood size), although able to improve the map locally, can do very little to make up for poor global ordering of the map. For this measure, too, fine tuning with the Kohonen rule seems to give the best quality, but it takes a relatively larger amount of time than the Batch Map algorithm.
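A single Batch Map iteration, as used for fine tuning in these tests, can be sketched as follows. This is the textbook form of the algorithm: each model vector becomes the mean of the documents whose winners lie in its neighbourhood. The bubble neighbourhood and the brute-force winner search are assumptions, since the exact variant is described in the Methodology chapter. Because there is no learning rate, a few iterations are enough to pull each unit onto the local data mean, which may help explain why 5 batch steps give good local ordering but cannot repair poor global ordering.

```python
def batch_map_step(docs, model, radius):
    """One Batch Map iteration: assign documents to winners, then set every
    model vector to the mean of the documents whose winner lies within
    `radius` of that unit (bubble neighbourhood, an assumption)."""
    units = list(model)
    dim = len(docs[0])
    sums = {u: [0.0] * dim for u in units}   # per-winner sum of documents
    counts = {u: 0 for u in units}
    for x in docs:
        win = min(units, key=lambda u: sum((m - v) ** 2
                                           for m, v in zip(model[u], x)))
        counts[win] += 1
        sums[win] = [s + v for s, v in zip(sums[win], x)]
    new_model = {}
    for u in units:
        total, n = [0.0] * dim, 0
        for w in units:
            if (u[0] - w[0]) ** 2 + (u[1] - w[1]) ** 2 <= radius ** 2:
                total = [t + s for t, s in zip(total, sums[w])]
                n += counts[w]
        # Units with no documents in their neighbourhood keep their old vector.
        new_model[u] = [t / n for t in total] if n else list(model[u])
    return new_model
```

For example, with two units at 0 and 10 and documents 1, 3, 9 and 11, a radius of 0 moves the units to the cluster means 2 and 10, while a radius of 1 pulls both to the global mean 6.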
For the next tests we chose the sparse map unit length 2,7, as it offers a considerable speed-up and still lies before the "steep slope" of the topography error function.

In the next stage of the experiment we evaluated the principle of addressing winners from previous iterations in order to speed up the winner search process. During preliminary tests we noticed that the hit ratio of finding the next winner by starting from the previous one is very high near the end of the learning phase (over 99%) and quite low at the beginning (about 50%). Therefore we decided that, rather than applying a full winner search at a fixed interval, a better solution would be to adjust the number of iterations between full winner searches to the stage of the learning process. We related this number to the current size of the neighbourhood since, intuitively, the hit ratio largely depends on whether the map is updated globally or only locally. Thus the number of iterations between full winner searches is increased in an inversely linear manner, ever more slowly, approaching a pre-specified constant value (for the experiment we chose a maximum of 4 old-winner-search iterations between consecutive full winner searches).

Map creation algorithm | Cluster purity (%) | Average quantization error | Topography error | Category distance (0) | Category distance (1) | Distance to the reference map | SOM algorithm time (s)
Kohonen - full-size vectors | 67,4 ± 0,8 | 0,3596 ± 0,0005 | 0,018 ± 0,007 | 0,67 ± 0,06 | 2,57 ± 0,08 | 0,0250 ± 0,0010 | 1076 ± 7
Kohonen - projection dim 100 | 63,4 ± 1,6 | 0,3470 ± 0,0160 | 0,036 ± 0,006 | 1,30 ± 0,30 | 3,10 ± 0,30 | 0,0360 ± 0,0010 | 197 ± 3
Kohonen - 15-5 | 57,4 ± 1,4 | 0,3600 ± 0,0200 | 0,033 ± 0,007 | 1,22 ± 0,06 | 3,20 ± 0,20 | 0,0359 ± 0,0017 | 68 ± 1
Kohonen - 15-5, old winner search | 56,5 ± 2,3 | 0,3630 ± 0,0080 | 0,058 ± 0,007 | 1,39 ± 0,17 | 3,40 ± 0,30 | 0,0357 ± 0,0011 | 44 ± 1
Batch map (5 iterations) (dim 100) | 55,5 ± 1,6 | 0,3620 ± 0,0190 | 0,039 ± 0,009 | 1,27 ± 0,04 | 3,30 ± 0,20 | 0,0362 ± 0,0013 | 66 ± 1
Batch map (5 iterations) (dim 100), old winner search | 55,5 ± 1,6 | 0,3630 ± 0,0100 | 0,046 ± 0,008 | 1,29 ± 0,10 | 3,60 ± 0,40 | 0,0359 ± 0,0014 | 41 ± 1

Figure 6. Comparison of efficiency and accuracy of basic and sped-up algorithms of map creation for the random projection algorithms (2,7 is used as the unit of the initial sparse map; standard deviations are taken as errors)

As could be expected, the impact of the old winner search on the cluster purity of the map or on the average quantization error was very small, or none at all in the case of the batch map algorithm (see Figure 6). On the other hand, the values of the topography error increased noticeably. This results directly from the fact that the lack of a full winner search in each iteration affects only the global organisation of the map, which can still be very well ordered locally. It is worth noting that the decline in topographical accuracy was more prominent for the simple Kohonen algorithm than for the batch map rule. This, together with a significant time saving (from over 60 to about 40 seconds), makes the old winner search an especially effective supplement to the batch map algorithm.

Map creation algorithm | Cluster purity (%) | Average quantization error | Topography error | Category distance (0) | Category distance (1) | Distance to the reference map | SOM algorithm time (s)
Kohonen - full-size vectors | 67,4 ± 0,8 | 0,3596 ± 0,0005 | 0,018 ± 0,007 | 0,67 ± 0,06 | 2,57 ± 0,08 | 0,0250 ± 0,0010 | 1076 ± 7
Kohonen - LSA projection dim 100 | 64,8 ± 1,2 | 0,2355 ± 0,0009 | 0,041 ± 0,010 | 0,63 ± 0,03 | 2,42 ± 0,16 | 0,0251 ± 0,0018 | 211 ± 1
Kohonen - LSA 15-5 iterations | 56,8 ± 1,3 | 0,2407 ± 0,0017 | 0,044 ± 0,017 | 0,75 ± 0,07 | 2,70 ± 0,30 | 0,0259 ± 0,0019 | 73 ± 1
Kohonen - LSA 15-5 iterations, old winner search | 57,7 ± 1,3 | 0,2407 ± 0,0016 | 0,040 ± 0,011 | 0,72 ± 0,08 | 2,60 ± 0,30 | 0,0254 ± 0,0001 | 48 ± 1
Batch map - LSA (5 iterations) (dim 100) | 58,2 ± 2,2 | 0,2381 ± 0,0018 | 0,032 ± 0,005 | 0,69 ± 0,06 | 2,51 ± 0,20 | 0,0260 ± 0,0020 | 72 ± 1
Batch map - LSA (5 iterations) (dim 100), old winner search | 57,7 ± 1,4 | 0,2366 ± 0,0010 | 0,044 ± 0,007 | 0,71 ± 0,04 | 2,55 ± 0,15 | 0,0266 ± 0,0011 | 44 ± 1

Figure 7. Comparison of efficiency and accuracy of basic and sped-up algorithms of map creation for the LSA projection algorithm (2,7 is used as the unit of the initial sparse map; standard deviations are taken as errors)

To finish off the tests we performed another experiment with the same choice of parameters but for document vectors projected using the LSA algorithm (Figure 7). In most of the tests the methods using LSA projection show an advantage of about 1 to 3 percentage points in cluster purity over the random projection methods (the Kohonen 15-5 variant being the exception). They also show much lower quantization error values; however, this measure may not allow a direct comparison of methods, as it is data-set dependent (and therefore projection dependent). The more important fact, which can be measured more reliably, is that all the algorithms based on LSA projection preserve a very low average distance between documents from the same category (category distance (0): about 0,7 for LSA and 1,3 for random projection) and a very high degree of similarity to the reference map created with the simple Kohonen rule using full-size vectors (the measured distance was about 0,026 for LSA-based algorithms and about 0,036 for random projection based algorithms).
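The old-winner search evaluated in Figures 6 and 7 can be sketched as a local search that starts from a document's previous winner and moves to a closer neighbouring unit until none is closer, together with a schedule deciding how many major iterations may pass between full searches. The text specifies neither the local search rule nor the exact schedule, so the hill-climbing rule and the `full_search_interval` formula below are hypothetical; only the cap of 4 comes from the experiment. The map is again assumed to be a dictionary from (row, column) to model vectors, with diagonal neighbours included.

```python
def dist2(a, b):
    """Squared Euclidean distance (sufficient for comparing candidates)."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def neighbours(u, model):
    """Existing units touching u, diagonals included (assumed adjacency)."""
    r, c = u
    cand = [(r + dr, c + dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1) if dr or dc]
    return [v for v in cand if v in model]


def local_winner(x, model, start):
    """Old-winner search: start from the previous winner and keep moving to
    the closest neighbouring unit while that improves the match."""
    best, best_d = start, dist2(model[start], x)
    while True:
        cands = neighbours(best, model)
        if not cands:
            return best
        cand = min(cands, key=lambda v: dist2(model[v], x))
        d = dist2(model[cand], x)
        if d >= best_d:
            return best          # no closer neighbour: accept (possibly local) winner
        best, best_d = cand, d


def full_winner(x, model):
    """Exhaustive winner search over all units."""
    return min(model, key=lambda u: dist2(model[u], x))


def full_search_interval(radius, initial_radius, max_interval=4):
    """Hypothetical schedule: major iterations between full searches grow
    roughly as 1 / radius and are capped at max_interval (4 in the experiment)."""
    return min(max_interval, max(1, int(initial_radius / radius)))
```

The need for periodic full searches is easy to see on a poorly ordered map: on a one-row map with model values 0, 5 and 1, a document with value 1 whose previous winner was (0, 0) stays there, although the true winner is (0, 2).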
The topography error values for the simple algorithm versions were slightly greater for the LSA-projected vectors (accurate comparisons are difficult owing to the quite high standard deviations of the results). The use of the sped-up versions of the algorithms did not increase this parameter as it did for the random projection vectors, so for the sped-up algorithms (the batch map and the batch map with old winner search) the results were comparable or even a little in favour of the LSA algorithm. The LSA-based algorithms are about 10% slower than the corresponding random projection based algorithms (with a sparse projection matrix) because of denser document vectors and slower winner vector computation. If we sum the projection and SOM algorithm times, this ratio increases to about 25%. However, this still seems a reasonable computational overhead, considering the quality advantages of the LSA algorithm. This makes LSA projection with the batch map and old winner speed-ups an interesting alternative to random projection based solutions. Both of the last two figures demonstrate that the batch map algorithm and the simple SOM algorithm based on the principle of map densening are about 3 times faster than the SOM algorithm working on a full-size map from the very beginning. The application of the old winner search increased this ratio to about 4,8. As a result, the fastest version of the algorithm is 24-26 times faster than the basic algorithm working on full-size document vectors. According to [6], this proportion is likely to change in favour of the sped-up algorithms for larger maps. This is because the unit size of the sparse map should be adjusted to the final neighbourhood size in the learning process. For larger maps, where larger neighbourhood sizes are used, a larger sparse map unit can be used and a significantly greater efficiency gain obtained.
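The speed-up factors quoted above follow directly from the SOM algorithm times reported in Figures 6 and 7. The short check below only recomputes those ratios from the published times; it adds no new measurements.

```python
# SOM algorithm times in seconds, copied from Figures 6 and 7.
BASIC_FULL = 1076                            # basic Kohonen, full-size vectors
times = {                                    # (random projection, LSA), dim 100
    "Kohonen, no densening": (197, 211),
    "Kohonen 15-5 (map densening)": (68, 73),
    "Batch map": (66, 72),
    "Batch map + old winners": (41, 44),
}


def speedups(times, basic_full):
    """For each method: speed-up vs. the non-densened map with the same
    projection (RP, LSA) and vs. the full-vector baseline (RP, LSA)."""
    ref_rp, ref_lsa = times["Kohonen, no densening"]
    return {name: (ref_rp / rp, ref_lsa / lsa, basic_full / rp, basic_full / lsa)
            for name, (rp, lsa) in times.items()}


for name, (rp, lsa, full_rp, full_lsa) in speedups(times, BASIC_FULL).items():
    print(f"{name:30s} x{rp:4.1f} (RP) x{lsa:4.1f} (LSA)   "
          f"vs full vectors: x{full_rp:5.1f} / x{full_lsa:5.1f}")
```

The batch map rows give ratios of about 3 (197 / 66 and 211 / 72), adding the old winner search gives about 4,8, and against the full-vector baseline the fastest variants reach about 26 (random projection) and 24 (LSA).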
For larger maps several map densening steps can also be taken, so that the time-consuming work on a full-size map is reduced to a minimum while sufficient map quality is preserved.

5. The manual evaluation of the sped-up map creation methods

The parametric results are obviously not the most important factor of map creation. What really counts is how users perceive the maps created: how concise and logical they find the cluster structure displayed on the map. In order to compare different map creation algorithms using the "human factor", two people were independently asked to assess the quality of several maps as well as the accuracy and usefulness of the search facility for the given maps. The test procedure was as follows. In the first test the users were asked to manually assess the purity of map units (a measure similar to cluster purity but performed in a more intuitive and "intelligent" manner). In the second test the users were asked to assess the general map organisation: to evaluate the extent to which similar documents tend to lie in nearby map units and whether document clusters lying close to one another are topically very different (this measure reflects the topographic quality of the map). Both tests were marked on a scale of 0-10. In addition to these two tests, after some preliminary map exploration the users were asked to choose 10 words at random (5 words each). Then, in subsequent experiments, the users were asked to query the map for these words and evaluate the quality of the response. Each query result was assessed on a scale of 0-1 (depending on the relevance to the query of each of the subsequent best matches marked on the map).
Manual tests were performed for the simple SOM algorithm working on document vectors projected to a 100-dimensional space by LSA and by the various random projectors, for the same algorithm with the dense (Gaussian) random projector and a 300-dimensional space, and for the batch map algorithm with the old winner search principle and 100-dimensional LSA-projected vectors (all other settings for all algorithms were the same as in the previous experiment). The average results of the experiments are shown in Figure 8.

Map creation algorithm | Cluster purity (0-10) | Topography quality (0-10) | Query results
LSA - 100 | 9,5 | 8,5 | 10
Random pr. Gaussian - 100 | 7 | 4,5 | 1,75
Random pr. bipolar - 100 | 7 | 6 | 1,5
Random pr. sparse - 100 | 7 | 7 | 2,5
Random pr. Gaussian - 300 | 9 | 9 | 5
LSA - 100 + map densening, batch map + old winners | 8,5 | 9,5 | 9,5

Figure 8. User evaluation of different map creation techniques

As Figure 8 clearly shows, the testers rated the map created from vectors projected with the LSA algorithm very highly. When random projection is used (for the same model vector dimension of 100), both users agree that the maps are poorer than the map based on the LSA projection algorithm. However, they still score quite high (in most cases about 7), which means that they are still regarded as reflecting the structure of the document collection well. It turned out that, in order to achieve map quality with random projection comparable to that obtained with the LSA algorithm, larger model vector sizes must be used. In the experiment the random projector with model vector dimension 300 gave results very similar to those of the LSA algorithm with dimensionality 100. It must be noted that the computational complexity of the SOM algorithm for vectors with 300 entries is 3 times that for vectors with just 100 entries.
At this point one must ask whether it is worthwhile to use fast random projection algorithms, bearing in mind that the not much slower LSA algorithm can give results of much higher quality. The answer in favour of the LSA algorithm is very straightforward for small document collections and low model vector dimensionalities. When the dimensionality of the model vectors is larger, the LSA projector may not prove as effective as at lower dimensionalities, since its major strength is its ability to choose the most important factors of the document-by-term matrix. Therefore, for model vector dimensionalities above 500, the results of SVD decomposition and of random projection might be similar to a large extent.

Figure 8 also shows that the main SOM algorithm speedups (map densening, the batch map algorithm, old-winner search), although they greatly shorten the running time of the algorithm, do not have much negative impact on the map quality as perceived by the users. This means that the fast approximation of the SOM algorithm suggested in [6], when applied to information retrieval problems, gives results almost indistinguishable from the outcome of the original algorithm.

The difference in projection quality between random projection and SVD decomposition is best depicted by the difference in query accuracy. For the LSA algorithm, querying accuracy is almost perfect in both tested configurations, while for the random projection algorithms it is very poor. The latter improves only when 300-dimensional vectors are used, and even then it reaches only about half of the accuracy of the LSA algorithm.

Finally, it is interesting to observe that the user-perceived quality of the map was to some extent correlated with the distance between categories measure. The poorly marked solutions have a distance between categories measure above 1.0, while all positively marked algorithms (LSA and random projection with dimension 300) scored significantly below 1.0.

6. Conclusion

It has been shown in this work that a SOM-created map can be a useful and attractive way of presenting Internet document collections to the user. Moreover, applying the SOM algorithm can also reveal the hidden structure of the collection. Thanks to its integrated browsing and searching properties, the SOM map can not only fill the gap between purely search-based and purely browse-based engines, but can even set a new standard for web exploration.

It has also been shown that a basic, unimproved version of the Websom algorithm is sufficient for processing data from small document collections. However, when the number of documents and gathered keywords increases, several improvements, including vector projection and more advanced methods of map creation, must be applied to keep the computational complexity of the algorithm within reasonable bounds.

During the evaluation it was established that the use of a language recognition tool, stop-word lists and word stemming is essential for maintaining good quality of the document vectors, which directly influences the map quality, and especially map labelling and the search facility. It has also been shown that the choice of an appropriate vector projection algorithm can drastically boost the efficiency of the SOM algorithm; however, this choice should be backed up by a careful analysis of the possible speedups and of the possible decline in map quality. Finally, it has been shown that the improved SOM map creation algorithms (including map densening, the batch map algorithm and old-winner search) are useful and even desirable alternatives to the basic SOM algorithm in the domain of information retrieval, especially in terms of efficiency and of applying the SOM algorithm to larger Internet document collections.

7. Bibliography

[1] S. Brin, L. Page, The Anatomy of a Large-Scale Hypertextual Web Search Engine, http://citeseer.nj.nec.com/brin98anatomy.html, 2000
[2] K. Lagus, Text Mining with the Websom, Acta Polytechnica Scandinavica, Computing Series No. 110, 2000
[3] M. Porter, The Porter Stemming Algorithm, http://www.tartarus.org/~martin/PorterStemmer/, April 2003
[4] M. Porter, The English (Porter2) Stemming Algorithm Web Page, http://snowball.tartarus.org/english/stemmer.html, April 2003
[5] The Lovins Stemming Algorithm, http://snowball.tartarus.org/lovins/stemmer.html, April 2003
[6] T. Kohonen, S. Kaski, K. Lagus, J. Salojärvi, J. Honkela, V. Paatero, A. Saarela, Self Organization of a Massive Document Collection, IEEE Transactions on Neural Networks, vol. 11, no. 3, May 2000
[7] S. Deerwester, S. T. Dumais, G. W. Furnas, T. K. Landauer, R. Harshman, Indexing by Latent Semantic Analysis, http://citeseer.nj.nec.com/deerwester90indexing.html, 1990
[8] T. Hofmann, Probabilistic Latent Semantic Analysis, UAI, Stockholm, http://citeseer.nj.nec.com/hofmann99probabilistic.html, 1999
[9] A. Ultsch, G. Guimaraes, D. Korus, H. Li, Knowledge Extraction from Artificial Neural Networks and Applications, World Transputer Congress 93, Springer, 1993
[10] K. Lagus, S. Kaski, Keyword Selection Method for Characterizing Text Document Maps, Proceedings of ICANN'99, 1999
[11] D. Cohn, T. Hofmann, The Missing Link – A Probabilistic Model of Document Content and Hypertext Connectivity, http://citeseer.nj.nec.com/cohn01missing.html, 2001
[12] T. Dunning, Statistical Identification of Language, March 1994
[13] D. Weiss, Polish Stemmer, http://www.cs.put.poznan.pl/dweiss/index.php/projects/lametyzator/index.xml?lang=en, September 2003
[14] T. Kowaltowski, C. L. Lucchesi, J. Stolfi, Finite Automata and Efficient Lexicon Implementation, Technical Report IC-98-2, January 1998
[15] J. Daciuk, Finite State Utilities, http://www.eti.pg.gda.pl/~jandac/fsa.html, September 2003
[16] W. B. Cavnar, J. M. Trenkle, N-Gram-Based Text Categorization, Environmental Research Institute of Michigan, http://citeseer.nj.nec.com/68861.html, 1997
[17] R. Ganesan, A. T. Sherman, Statistical Techniques for Language Recognition: An Introduction and Guide for Cryptanalysts, http://citeseer.nj.nec.com/ravi93statistical.html, February 1993
[18] M. Berry, T. Do, G. O’Brien, V. Krishna, S. Varadhan, SVDPACKC (Version 1.0) User’s Guide, http://citeseer.nj.nec.com/9643.html, October 1993
[19] K. Kiviluoto, Topology Preservation in Self-Organizing Maps, Proceedings of ICNN 96, IEEE International Conference on Neural Networks, 1996
[20] S. Kaski, K. Lagus, Comparing Self-Organizing Maps, Proceedings of ICANN 96, International Conference on Artificial Neural Networks, Lecture Notes in Computer Science vol. 1112, pp. 809–814, Springer, Berlin, 1996
[21] Sammon Mapping, http://www.eng.man.ac.uk/mech/merg/research/datafusion.org.uk/techniques/sammon.html, November 2003
[22] A. Wilkowski, M.Sc. Thesis, Warsaw University of Technology (supervised by M. A. Kłopotek)

Research partially supported by the KBN research project 4 T11C 026 25 "Maps and intelligent navigation in WWW using Bayesian networks and artificial immune systems".

Approaches to evaluation of document map creation algorithms
1. Introduction
2. Map evaluation measures
2.1. Tests for measuring vector projection precision
2.2. Generic methods measuring the SOM map quality
2.3. Data-set specific methods for measuring map quality
3. The evaluation of documents’ vector projection
4. The evaluation of the sped-up SOM creation methods
5. The manual evaluation of the sped-up map creation methods
6. Conclusion
7. Bibliography