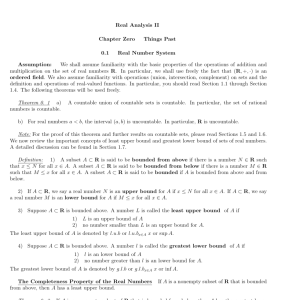

Central Limit Theorem


CENTRAL LIMIT THEOREM


Central Limit Theorem

If $X_1, X_2, X_3, \ldots$ is a sequence of independent random variables, each taken as an observation from the same population, and if the population mean is $\mu$ and the standard deviation is $\sigma$ (finite), then the random variable sequence $Z_1, Z_2, Z_3, \ldots$, where

$$Z_n = \sqrt{n}\,\frac{\bar{X}_n - \mu}{\sigma}$$

and $\bar{X}_n$ is the average of $X_1, X_2, \ldots, X_n$, converges in distribution to $N(0, 1)$.
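The statement can be explored by simulation. The sketch below is only an illustration under assumptions that are not part of the theorem: the population is taken to be Exponential(1), so that $\mu = \sigma = 1$, and the sample size, number of replications, seed, and the function name `standardized_means` are arbitrary choices.

```python
import numpy as np

def standardized_means(n, reps, rng):
    """Draw `reps` samples of size n from an Exponential(1) population
    (assumed here; mean 1, standard deviation 1) and return Z_n for each."""
    mu, sigma = 1.0, 1.0
    x = rng.exponential(scale=1.0, size=(reps, n))
    xbar = x.mean(axis=1)                      # one sample average per row
    return np.sqrt(n) * (xbar - mu) / sigma    # Z_n = sqrt(n) (Xbar_n - mu) / sigma

rng = np.random.default_rng(0)
z = standardized_means(n=50, reps=100_000, rng=rng)

print("mean of Z_n:", z.mean())                # N(0, 1) has mean 0
print("sd of Z_n:", z.std())                   # N(0, 1) has standard deviation 1
print("P(Z_n <= 1):", np.mean(z <= 1.0))       # compare with Phi(1), about 0.8413
```

The population is deliberately skewed, so agreement with the normal values reflects the averaging rather than the shape of the population itself.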

Some notes:

1. This can be restated as $\{Z_n\} \xrightarrow{d} N(0, 1)$. The symbol $\xrightarrow{d}$ should be read as “converges in distribution.”

2. Convergence in distribution is a statement about the cumulative distribution functions $F_1$ (for $Z_1$), $F_2$ (for $Z_2$), $F_3$ (for $Z_3$), and so on, and also $F$ (for the limiting random variable).

3. Consider the limiting cumulative distribution function $F$, and let $u$ be a continuity point of $F$. If $F$ is continuous, then every $u$ is a continuity point. If $F$ is not continuous, then $u$ cannot be one of the jump points of $F$.

4. Consider the sequence of numbers $F_1(u), F_2(u), F_3(u), \ldots$. Check that this sequence of numbers converges to $F(u)$.

5. If every $u$ that satisfies (3) also satisfies (4), then the sequence $\{F_n\}$ converges in distribution to $F$. Even though convergence in distribution is a property of cumulative distribution functions, as noted in (2), it is common to say also that the corresponding sequence of random variables converges in distribution.
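Notes 2 through 5 can be collected into a single formal statement. The display below is a compact restatement in standard notation; the symbol $C(F)$ for the set of continuity points is introduced here for convenience and is not part of the notes.

```latex
% F_n(u) = P(Z_n <= u) and F(u) = P(Z <= u) are the cumulative distribution functions.
\[
  Z_n \xrightarrow{d} Z
  \quad\Longleftrightarrow\quad
  \lim_{n \to \infty} F_n(u) = F(u)
  \ \text{ for every } u \in C(F),
\]
\[
  C(F) = \{\, u \in \mathbb{R} : F \text{ is continuous at } u \,\}.
\]
```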

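6. In this discussion of convergence in distribution, there is no mention of independence. Independence is an assumption of the Central Limit Theorem, not part of the definition of convergence in distribution.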

7. Here is an example that justifies the concern about continuity points in (3). Suppose that the random variable $Z_n$ has the property $P\left(Z_n = \frac{1}{n}\right) = 1$. Its cumulative distribution function is $F_n(u) = I\left(\frac{1}{n} \le u\right)$. The sequence of random variables clearly converges to the constant zero, corresponding to the cumulative distribution function $F(u) = I(0 \le u)$. The function $F$ has a jump at 0, so that 0 is not a continuity point. For every nonzero $u$, it happens that $\{F_n(u)\} \to F(u)$. This convergence does not happen at 0, as $F_n(0) = 0$ for every $n$. Then $\{F_n(0)\} \to 0$, while $F(0) = 1$.

8. The previous example may be unsatisfying in that all the random variables are degenerate (non-random constants). Thus, it seems to be a statement about numbers rather than about random variables. Try replacing $Z_n$ by a uniform random variable on the interval $\left(0, \frac{1}{n}\right)$; the same demonstration will work.
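The two examples in notes 7 and 8 can be checked numerically. The sketch below writes out the cumulative distribution functions directly; the function names and the particular values of $n$ and $u$ are illustrative choices, not part of the notes.

```python
def F_point_mass(n, u):
    """CDF of the constant random variable Z_n = 1/n, that is I(1/n <= u)."""
    return 1.0 if 1.0 / n <= u else 0.0

def F_uniform(n, u):
    """CDF of a uniform random variable on the interval (0, 1/n)."""
    return min(max(n * u, 0.0), 1.0)

def F_limit(u):
    """CDF of the constant zero, that is I(0 <= u)."""
    return 1.0 if 0.0 <= u else 0.0

ns = [1, 10, 100, 1000, 10000]
for u in (-0.5, 0.0, 0.002, 0.5):
    row_pm = [F_point_mass(n, u) for n in ns]
    row_un = [F_uniform(n, u) for n in ns]
    print(f"u = {u:6}: point mass {row_pm}, uniform {row_un}, limit {F_limit(u)}")
```

At each nonzero $u$ both rows eventually agree with the limit, while at $u = 0$ both rows stay at 0 and the limit is 1. This is the only point where convergence fails, and it is the jump point of $F$.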

9. There are many, many variants on the Central Limit Theorem. This result can be proved under much weaker assumptions than those we have used here.

10. The result can be proved, using the assumptions we have stated here, by characteristic functions. If you are willing to assume that the moment generating function exists in a neighborhood of zero (a stronger condition than finiteness of all moments), then moment generating functions can provide a proof.
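The characteristic function argument can be outlined in a few lines. This is a sketch rather than a complete proof. The symbols $Y_i$ and $\varphi$ are introduced here, and the final step relies on Lévy's continuity theorem, which these notes do not state.

```latex
% Standardize each observation: Y_i = (X_i - \mu)/\sigma, so E(Y_i) = 0 and Var(Y_i) = 1.
\[
  Z_n = \sqrt{n}\,\frac{\bar{X}_n - \mu}{\sigma}
      = \frac{1}{\sqrt{n}} \sum_{i=1}^{n} Y_i .
\]
% Let \varphi(t) = E(e^{itY_1}). Independence and identical distribution give
\[
  \varphi_{Z_n}(t) = \left[ \varphi\!\left( \frac{t}{\sqrt{n}} \right) \right]^{n} .
\]
% A finite second moment gives \varphi(s) = 1 - s^2/2 + o(s^2) as s -> 0, so for fixed t
\[
  \varphi_{Z_n}(t)
  = \left[ 1 - \frac{t^{2}}{2n} + o\!\left( \frac{1}{n} \right) \right]^{n}
  \longrightarrow e^{-t^{2}/2}
  \quad \text{as } n \to \infty .
\]
% e^{-t^2/2} is the characteristic function of N(0, 1); pointwise convergence of
% characteristic functions implies convergence in distribution.
```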

11. For the assumptions used here, most people will say that the approximation to normality is adequate if $n \ge 30$. That is, a sample size of 30 or more will be enough to invoke the Central Limit Theorem. This is, of course, a practical statement which cannot possibly be theoretically rigorous. The Central Limit Theorem works very well for nearly all real data problems if $n \ge 10$. This seems to be a secret.
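One way to examine such rules of thumb is to measure how far the distribution of $Z_n$ is from $N(0, 1)$ at several sample sizes. The sketch below does this by simulation for one assumed population, Exponential(1), which is strongly skewed. The distance used (the largest gap between the simulated and normal cumulative distribution functions), the function names, and the seed are choices made for this illustration, and other populations will give different numbers.

```python
import math
import numpy as np

def normal_cdf(z):
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def max_cdf_gap(n, reps, rng):
    """Largest gap between the empirical CDF of Z_n and the N(0, 1) CDF,
    for samples of size n from an Exponential(1) population (mu = sigma = 1)."""
    x = rng.exponential(scale=1.0, size=(reps, n))
    z = np.sort(np.sqrt(n) * (x.mean(axis=1) - 1.0) / 1.0)
    phi = np.array([normal_cdf(v) for v in z])
    upper = np.arange(1, reps + 1) / reps   # empirical CDF at each sorted point
    lower = np.arange(0, reps) / reps       # empirical CDF just below each point
    return max(np.max(np.abs(upper - phi)), np.max(np.abs(phi - lower)))

rng = np.random.default_rng(1)
gaps = {n: max_cdf_gap(n, reps=50_000, rng=rng) for n in (2, 10, 30)}
for n, gap in gaps.items():
    print(f"n = {n:2d}: max CDF gap = {gap:.4f}")
```

The gap shrinks as $n$ grows. Whether the gap at $n = 10$ is small enough is a practical judgment, which is the point of the note.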

12. The Central Limit Theorem should be used with suspicion if the resulting probability is extremely large or extremely small. That is, you might not want to trust the Central Limit Theorem in cases in which it provides an answer like 0.001 or 0.999.
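The concern about extreme probabilities can be seen in a case where the exact answer is available. The sketch below assumes an Exponential(1) population, for which the sum of $n$ observations has an Erlang distribution with a closed-form tail probability. The choice of population, the value $n = 30$, and the function names are assumptions made for this illustration.

```python
import math

def erlang_upper_tail(n, x):
    """Exact P(S > x) for S = sum of n independent Exponential(1) variables."""
    return sum(math.exp(-x + k * math.log(x) - math.lgamma(k + 1)) for k in range(n))

def normal_upper_tail(z):
    """P(Z > z) for Z ~ N(0, 1)."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))

n = 30                                   # sample size; population has mu = sigma = 1
results = {}
for z in (0.0, 1.0, 2.0, 3.0, 4.0):
    x = n + z * math.sqrt(n)             # the event {Z_n > z} is the event {S > x}
    exact = erlang_upper_tail(n, x)
    approx = normal_upper_tail(z)
    results[z] = (exact, approx)
    print(f"z = {z}: exact = {exact:.6f}, normal = {approx:.6f}, "
          f"ratio = {approx / exact:.3f}")
```

Near the center the normal value is close to the exact one in relative terms. Far in the tail the absolute error is tiny, but the ratio of the two probabilities drifts well away from 1.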

13. The Central Limit Theorem is most often applied to $\bar{X}$, the average of a sample $X_1, X_2, \ldots, X_n$ from a population with mean $\mu$ and standard deviation $\sigma$. It will usually be noted that $E(\bar{X}) = \mu$ and $\mathrm{SD}(\bar{X}) = \frac{\sigma}{\sqrt{n}}$. These statements are correct, but they are not the Central Limit Theorem. The Central Limit Theorem is about the limiting distribution of the standardized random variable $\sqrt{n}\,\frac{\bar{X} - \mu}{\sigma}$.
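For reference, the two moment statements follow from linearity of expectation and, for the variance, from independence of the observations. The short derivation below is standard. It holds for every $n$ and says nothing about the shape of the distribution of $\bar{X}$, which is why it is not the Central Limit Theorem.

```latex
\[
  E(\bar{X}) = \frac{1}{n} \sum_{i=1}^{n} E(X_i) = \frac{1}{n}\, n \mu = \mu ,
\]
\[
  \operatorname{Var}(\bar{X})
  = \frac{1}{n^{2}} \sum_{i=1}^{n} \operatorname{Var}(X_i)
  = \frac{\sigma^{2}}{n},
  \qquad
  \operatorname{SD}(\bar{X}) = \frac{\sigma}{\sqrt{n}} .
\]
```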

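14. Sample averages do not always have limiting normal distributions. For example, the average of a sample of Cauchy random variables will not have a limiting normal distribution. The Cauchy distribution has no finite mean or standard deviation, so the assumptions of the theorem fail; in fact, the average of $n$ independent standard Cauchy random variables has the standard Cauchy distribution for every $n$.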
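The Cauchy case is easy to see by simulation. The sketch below measures the spread of the sample average by its interquartile range, since the standard deviation does not exist. The sample sizes, number of replications, seed, and function name are arbitrary choices for this illustration.

```python
import numpy as np

def iqr_of_sample_means(n, reps, rng):
    """Interquartile range of `reps` sample means, each from n standard Cauchy draws."""
    means = rng.standard_cauchy(size=(reps, n)).mean(axis=1)
    q25, q75 = np.percentile(means, [25, 75])
    return q75 - q25

rng = np.random.default_rng(2)
iqrs = {n: iqr_of_sample_means(n, reps=20_000, rng=rng) for n in (1, 10, 200)}
for n, iqr in iqrs.items():
    # The standard Cauchy quartiles are -1 and 1, so its interquartile range is 2.
    print(f"n = {n:3d}: IQR of sample mean = {iqr:.3f}")
```

The spread of the average does not shrink as $n$ grows, in contrast to the $\sigma / \sqrt{n}$ behavior seen for populations with a finite standard deviation.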


gs2011