
S

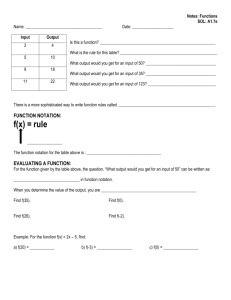

Conditional Probability

P(A/B )=

𝑃(𝐴∩𝐵)

A

A∩𝐵

B

𝑃(𝐵)

- Knowing that event B has occurred, S is reduced to B

Figure1

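- Using relative frequencies over n repetitions of the experiment, where n_B and n_{A∩B} count the trials in which B and A∩B occur:

$$\frac{n_{A\cap B}/n}{n_B/n} \;\longrightarrow\; \frac{P(A\cap B)}{P(B)} = P(A \mid B)$$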
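A small simulation makes the relative-frequency argument concrete. This is an illustrative sketch only: it assumes a fair die and the hypothetical events A = "outcome is even" and B = "outcome ≥ 4", for which P(A|B) = (2/6)/(3/6) = 2/3, and estimates P(A|B) as the ratio n_{A∩B}/n_B. The function name `estimate_conditional` is introduced here for illustration.

```python
import random

def estimate_conditional(n_trials: int, seed: int = 0) -> float:
    """Estimate P(A | B) for a fair die as n_{A∩B} / n_B.

    Hypothetical events: A = "outcome is even", B = "outcome >= 4".
    """
    rng = random.Random(seed)
    n_b = 0        # trials in which B occurred
    n_a_and_b = 0  # trials in which both A and B occurred
    for _ in range(n_trials):
        outcome = rng.randint(1, 6)
        if outcome >= 4:
            n_b += 1
            if outcome % 2 == 0:
                n_a_and_b += 1
    # (n_{A∩B}/n) / (n_B/n) simplifies to n_{A∩B} / n_B
    return n_a_and_b / n_b

print(estimate_conditional(100_000))  # close to 2/3
```

The common factor 1/n cancels, which is why the code never divides by n_trials.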

Note:

$$P(A \cap B) = P(A \mid B)\,P(B) = P(B \mid A)\,P(A)$$

Similarly, for three events:

$$P(A \cap B \cap C) = P(A \mid B \cap C)\,P(B \cap C) = P(A \mid B \cap C)\,P(B \mid C)\,P(C)$$
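The three-event multiplication rule P(A∩B∩C) = P(A|B∩C)P(B|C)P(C) can be checked exactly on a small finite sample space. This sketch assumes two fair dice with all 36 ordered outcomes equally likely; the events A, B, C and the helpers `prob` and `cond` are hypothetical, chosen only for illustration.

```python
from fractions import Fraction
from itertools import product

# Uniform sample space: ordered outcomes of two fair dice (hypothetical example).
S = list(product(range(1, 7), repeat=2))

def prob(event) -> Fraction:
    """P(event) for a predicate over the uniform sample space S."""
    return Fraction(sum(1 for s in S if event(s)), len(S))

def cond(event, given) -> Fraction:
    """P(event | given) = P(event ∩ given) / P(given)."""
    return prob(lambda s: event(s) and given(s)) / prob(given)

A = lambda s: s[0] + s[1] >= 8   # sum at least 8
B = lambda s: s[0] % 2 == 0      # first die even
C = lambda s: s[1] > 2           # second die greater than 2

direct = prob(lambda s: A(s) and B(s) and C(s))
chain = cond(A, lambda s: B(s) and C(s)) * cond(B, C) * prob(C)
print(direct, chain)  # 2/9 2/9
```

Using `Fraction` keeps the comparison exact, so equality holds without floating-point tolerance.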

Example:

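In a binary communication system, let A_i be the event that input i is sent and B_j the event that output j is received. Suppose that P(A0) = 1 − p, P(A1) = p and P(error) = ε. Find

$$P(A_i \cap B_j), \qquad i, j = 0, 1.$$

Sol.

An error means the output differs from the input, so

$$P(\text{error}) = \varepsilon = P(B_0 \mid A_1) = P(B_1 \mid A_0), \qquad P(B_0 \mid A_0) = P(B_1 \mid A_1) = 1 - \varepsilon.$$

$$P(A_0 \cap B_0) = P(B_0 \mid A_0)\,P(A_0) = (1-\varepsilon)(1-p)$$

$$P(A_0 \cap B_1) = P(B_1 \mid A_0)\,P(A_0) = \varepsilon(1-p)$$

$$P(A_1 \cap B_0) = P(B_0 \mid A_1)\,P(A_1) = \varepsilon p$$

$$P(A_1 \cap B_1) = P(B_1 \mid A_1)\,P(A_1) = (1-\varepsilon)p$$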
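All four joint probabilities of the binary channel follow one pattern: P(B_j|A_i) is 1 − ε when i = j and ε otherwise, multiplied by the prior P(A_i). A minimal sketch, where `joint_probabilities` is a hypothetical name and p = P(A1):

```python
def joint_probabilities(p: float, eps: float) -> dict:
    """Return {(i, j): P(A_i ∩ B_j)} for a binary channel.

    p   -- P(A1), probability that input 1 is sent
    eps -- error probability, P(B1 | A0) = P(B0 | A1)
    """
    prior = {0: 1 - p, 1: p}
    joint = {}
    for i in (0, 1):
        for j in (0, 1):
            likelihood = 1 - eps if i == j else eps  # P(B_j | A_i)
            joint[(i, j)] = likelihood * prior[i]    # P(B_j | A_i) P(A_i)
    return joint

print(joint_probabilities(0.5, 0.1))
```

With p = 0.5 and ε = 0.1 the diagonal entries are 0.45 and the off-diagonal entries 0.05; for any p and ε the four values sum to 1.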

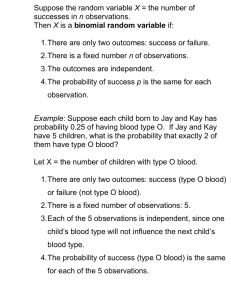

Total Probability theorem

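Let S = B1 ∪ B2 ∪ … ∪ Bn, where the Bi are mutually exclusive events. B1, B2, …, Bn are then called a partition of S.

Any event A can be written as

$$A = A \cap S = A \cap (B_1 \cup B_2 \cup \dots \cup B_n) = (A \cap B_1) \cup (A \cap B_2) \cup \dots \cup (A \cap B_n).$$

Since the events A∩Bi are mutually exclusive,

$$P(A) = P(A \mid B_1)\,P(B_1) + \dots + P(A \mid B_n)\,P(B_n).$$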

This is useful when an experiment can be viewed as a sequence of two sub-experiments, as shown in Figure 2.

Figure 2: event A overlapping the partition B1, B2, B3, …, Bn−1, Bn of S.
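The total probability theorem is a weighted sum over the partition. A minimal sketch, assuming the likelihoods P(A|Bi) and priors P(Bi) are supplied as equal-length sequences; `total_probability` is a hypothetical helper name, and the three-event partition in the usage line is made-up data.

```python
from math import isclose

def total_probability(likelihoods, priors) -> float:
    """P(A) = sum_i P(A | B_i) P(B_i) over a partition B_1, ..., B_n.

    likelihoods -- sequence of P(A | B_i)
    priors      -- sequence of P(B_i); must sum to 1 for a partition
    """
    if len(likelihoods) != len(priors):
        raise ValueError("need one likelihood per partition event")
    if not isclose(sum(priors), 1.0):
        raise ValueError("P(B_i) must sum to 1 for a partition")
    return sum(l * b for l, b in zip(likelihoods, priors))

# Hypothetical partition with P(B_i) = 0.5, 0.3, 0.2
print(total_probability([0.1, 0.4, 0.8], [0.5, 0.3, 0.2]))  # about 0.33
```

The check on the priors is a design choice: it catches inputs that are not a partition before they silently give a wrong P(A).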

Example:

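A factory produces a mix of good and bad chips. The lifetime of good chips follows an exponential law with rate α, and the lifetime of bad chips follows an exponential law with rate 1000α.

Suppose a fraction 1 − p of the chips are good. Find the probability that a randomly selected chip is still functioning after time t.

Sol.

G = "chip is good"

B = "chip is bad"

C = "chip is functioning after time t"

For an exponential lifetime with rate λ, the probability of surviving beyond time t is $e^{-\lambda t}$, so

$$P(C \mid G) = e^{-\alpha t}, \qquad P(C \mid B) = e^{-1000\alpha t}.$$

Find P(C) using the total probability theorem with the partition {G, B}:

$$P(C) = P(C \cap G) + P(C \cap B) = P(C \mid G)\,P(G) + P(C \mid B)\,P(B) = e^{-\alpha t}(1-p) + e^{-1000\alpha t}\,p$$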
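The closed-form answer is easy to evaluate numerically. This sketch assumes hypothetical values α = 10⁻⁴ per hour, p = 0.1 and t = 100 hours, none of which come from the text; `prob_functioning` is an illustrative name.

```python
import math

def prob_functioning(t: float, alpha: float, p: float) -> float:
    """P(C) = e^{-alpha t} (1 - p) + e^{-1000 alpha t} p.

    alpha -- failure rate of good chips (bad chips fail at rate 1000 * alpha)
    p     -- fraction of bad chips, so P(G) = 1 - p and P(B) = p
    """
    survive_good = math.exp(-alpha * t)         # P(C | G)
    survive_bad = math.exp(-1000 * alpha * t)   # P(C | B)
    return survive_good * (1 - p) + survive_bad * p

# Hypothetical values: alpha = 1e-4 per hour, p = 0.1, t = 100 hours
print(round(prob_functioning(100.0, 1e-4, 0.1), 4))  # 0.891
```

At this t the bad-chip term contributes only about 5 × 10⁻⁶, so P(C) is essentially (1 − p)e^{−αt}: almost all bad chips have already failed.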

Bayes’ Rule

Example:

Let S = B1 ∪ B2 ∪ … ∪ Bn be a partition. Suppose that event A occurs; what is the probability of Bj?

Sol.

$$P(B_j \mid A) = \frac{P(A \cap B_j)}{P(A)} = \frac{P(A \mid B_j)\,P(B_j)}{\sum_{k=1}^{n} P(A \mid B_k)\,P(B_k)}$$

Bayes' rule is useful for finding the "a posteriori" probability P(Bj|A) in terms of the "a priori" probabilities P(Bj), known before the experiment is performed, and the occurrence of A.
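Bayes' rule reuses the total probability theorem as its denominator. A minimal sketch, with `posterior` a hypothetical name and the numbers in the usage line made up for illustration:

```python
def posterior(likelihoods, priors):
    """Return [P(B_j | A) for each j] via Bayes' rule.

    likelihoods -- P(A | B_k) for each event of the partition
    priors      -- P(B_k), the a priori probabilities
    """
    joint = [l * b for l, b in zip(likelihoods, priors)]  # P(A | B_k) P(B_k)
    p_a = sum(joint)                                      # P(A), total probability
    if p_a == 0:
        raise ValueError("P(A) = 0: the a posteriori probabilities are undefined")
    return [j / p_a for j in joint]

# Hypothetical partition: P(B_k) = 0.5, 0.3, 0.2 and P(A | B_k) = 0.1, 0.4, 0.8
print([round(x, 3) for x in posterior([0.1, 0.4, 0.8], [0.5, 0.3, 0.2])])
# [0.152, 0.364, 0.485]
```

Observing A shifts probability toward B3, the event under which A is most likely, even though B3 had the smallest a priori probability.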

Example:

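In a binary communication system, find which input is more probable given that the receiver output is 1. Assume P(A0) = P(A1) = 1/2 and P(error) = ε.

Sol.

$$P(A_0 \mid B_1) = \frac{P(B_1 \mid A_0)\,P(A_0)}{P(B_1)} = \frac{P(B_1 \mid A_0)\,P(A_0)}{P(B_1 \mid A_0)\,P(A_0) + P(B_1 \mid A_1)\,P(A_1)} = \frac{\varepsilon \cdot \frac{1}{2}}{\varepsilon \cdot \frac{1}{2} + (1-\varepsilon)\cdot\frac{1}{2}} = \varepsilon$$

$$P(A_1 \mid B_1) = \frac{P(B_1 \mid A_1)\,P(A_1)}{P(B_1)} = \frac{(1-\varepsilon)\cdot\frac{1}{2}}{\varepsilon \cdot \frac{1}{2} + (1-\varepsilon)\cdot\frac{1}{2}} = 1-\varepsilon$$

If ε < 1/2, then A1 is more probable.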
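The same computation can be written as a small decision rule that picks the input with the larger a posteriori probability. This is a sketch: the names `channel_posteriors` and `most_probable_input` are hypothetical, and the optional prior `p1` is an assumption that generalizes beyond the equiprobable case of the example (its default 0.5 reproduces it).

```python
def channel_posteriors(eps: float, p1: float = 0.5):
    """Return (P(A0 | B1), P(A1 | B1)) for a binary channel.

    eps -- error probability, P(B1 | A0) = P(B0 | A1)
    p1  -- prior P(A1); 0.5 matches the equiprobable inputs of the example
    """
    joint0 = eps * (1 - p1)      # P(B1 | A0) P(A0)
    joint1 = (1 - eps) * p1      # P(B1 | A1) P(A1)
    p_b1 = joint0 + joint1       # P(B1) by the total probability theorem
    return joint0 / p_b1, joint1 / p_b1

def most_probable_input(eps: float, p1: float = 0.5) -> int:
    """Input (0 or 1) with the larger a posteriori probability given output 1."""
    post0, post1 = channel_posteriors(eps, p1)
    return 1 if post1 > post0 else 0

print(channel_posteriors(0.1))   # approximately (0.1, 0.9)
print(most_probable_input(0.1))  # 1, since eps < 1/2
```

Ties (ε = 1/2 with equal priors) are broken toward input 0 here; that choice is arbitrary.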

Independence of events

Events A and B are independent if

$$P(A \cap B) = P(A)\,P(B),$$

or, equivalently when P(B) > 0, if P(A|B) = P(A).

Example: Two numbers x and y are selected at random between 0 and 1. Let A = {x > 0.5}, B = {y < 0.5} and C = {x > y}.

Are A and B independent?

Are A and C independent?

Sol.

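P(A) = P(B) = P(C) = 0.5

Computing the probabilities as areas in the unit square:

$$P(A \cap B) = \tfrac{1}{2}\cdot\tfrac{1}{2} = \tfrac{1}{4}, \qquad P(A \cap C) = \int_{0.5}^{1} x\,dx = \tfrac{3}{8}, \qquad P(B \cap C) = \int_{0}^{0.5} (1-y)\,dy = \tfrac{3}{8}$$

P(A∩B) = 1/4 = P(A)P(B), so A and B are independent.

P(A∩C) = 3/8 ≠ P(A)P(C) = 1/4, so A and C are not independent.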
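A Monte Carlo check of the example, as a sketch: it draws x and y uniformly from [0, 1) with Python's `random` module and compares the estimated P(A∩B) and P(A∩C) with the products of the marginals. The function name `check_independence`, the sample size and the seed are arbitrary choices.

```python
import random

def check_independence(n: int = 200_000, seed: int = 1) -> dict:
    """Estimate P(A), P(B), P(C), P(A∩B), P(A∩C) for x, y uniform on [0, 1)."""
    rng = random.Random(seed)
    count = {"A": 0, "B": 0, "C": 0, "AB": 0, "AC": 0}
    for _ in range(n):
        x, y = rng.random(), rng.random()
        a, b, c = x > 0.5, y < 0.5, x > y
        count["A"] += a
        count["B"] += b
        count["C"] += c
        count["AB"] += a and b
        count["AC"] += a and c
    return {k: v / n for k, v in count.items()}

est = check_independence()
print(est["AB"], est["A"] * est["B"])  # both close to 0.25
print(est["AC"], est["A"] * est["C"])  # about 0.375 versus about 0.25
```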

Notes

(1) If P(A) > 0, P(B) > 0, and A and B are mutually exclusive (disjoint), then A and B cannot be independent.

(2) If A and B are independent and mutually exclusive, then P(A) = 0 or P(B) = 0.

(3) A, B and C are independent if:

P(A∩B) = P(A)P(B)

P(A∩C) = P(A)P(C)

P(B∩C) = P(B)P(C)

P(A∩B∩C) = P(A)P(B)P(C)

The first three conditions alone define pairwise independence; all four are required for A, B and C to be independent.

(4) Independence is often assumed when events have no obvious physical relation.
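Note (3) matters because pairwise independence does not imply the fourth condition. A standard illustration, assumed here rather than taken from these notes: two fair coin flips with A = "first flip is heads", B = "second flip is heads" and C = "exactly one head". The sketch checks the conditions exactly with fractions; all helper names are hypothetical.

```python
from fractions import Fraction
from itertools import product

# Sample space: two fair coin flips, all four outcomes equally likely.
S = list(product("HT", repeat=2))

def prob(event) -> Fraction:
    """P(event) for a predicate over the uniform sample space S."""
    return Fraction(sum(1 for s in S if event(s)), len(S))

def intersect(*events):
    """Intersection of events given as predicates."""
    return lambda s: all(e(s) for e in events)

def pairwise_independent(a, b, c) -> bool:
    return all(prob(intersect(x, y)) == prob(x) * prob(y)
               for x, y in ((a, b), (a, c), (b, c)))

def mutually_independent(a, b, c) -> bool:
    return (pairwise_independent(a, b, c)
            and prob(intersect(a, b, c)) == prob(a) * prob(b) * prob(c))

A = lambda s: s[0] == "H"    # first flip heads
B = lambda s: s[1] == "H"    # second flip heads
C = lambda s: s[0] != s[1]   # exactly one head

print(pairwise_independent(A, B, C), mutually_independent(A, B, C))  # True False
```

Here A∩B∩C is empty, so P(A∩B∩C) = 0 while P(A)P(B)P(C) = 1/8.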

Sequential experiments

- Many experiments can be decomposed into sequences of simpler sub-experiments, which may or may not be independent.

For a sequence of independent experiments: if A1, A2, …, Ak are events associated with the outcomes of k independent sub-experiments S1, S2, …, Sk, then

$$P(A_1 \cap A_2 \cap \dots \cap A_k) = P(A_1)\,P(A_2) \cdots P(A_k).$$

Example:

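Suppose 10 numbers are selected at random from [0, 1]. Find the probability that the first 5 numbers are less than 1/4 and the last 5 are greater than or equal to 1/2.

Sol.

Each selection is an independent sub-experiment, so

$$\text{Probability} = \left(\tfrac{1}{4}\right)^{5}\left(\tfrac{1}{2}\right)^{5} = 2^{-15} \approx 3.05 \times 10^{-5}$$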
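For independent sub-experiments the joint probability is just a product, which can be computed exactly. A minimal sketch using `fractions.Fraction` and `math.prod` (Python 3.8+); `prob_all` is a hypothetical helper name.

```python
from fractions import Fraction
from math import prod

def prob_all(probs):
    """P(A1 ∩ ... ∩ Ak) = P(A1) P(A2) ... P(Ak) for independent sub-experiments."""
    return prod(probs, start=Fraction(1))

# First 5 numbers < 1/4 (probability 1/4 each), last 5 >= 1/2 (probability 1/2 each)
p = prob_all([Fraction(1, 4)] * 5 + [Fraction(1, 2)] * 5)
print(p, float(p))  # 1/32768 3.0517578125e-05
```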

The binomial law

A Bernoulli trial involves performing an experiment once and noting whether event A occurs ("success") or not ("failure").

Theorem: Let k be the number of successes in n independent Bernoulli trials, each with success probability p. Then the probability of k successes is

$$P_n(k) = \binom{n}{k} p^{k} (1-p)^{n-k}, \qquad k = 0, 1, \dots, n.$$

Example:

Consider a group of 8 independent speakers, each active with probability 1/3. Find the probability that the number of active speakers is greater than 6.

Sol.

Let k = number of active speakers.

$$P(k > 6) = P(k = 7) + P(k = 8) = \binom{8}{7}\left(\tfrac{1}{3}\right)^{7}\left(\tfrac{2}{3}\right)^{1} + \binom{8}{8}\left(\tfrac{1}{3}\right)^{8}\left(\tfrac{2}{3}\right)^{0} = \tfrac{17}{6561} \approx 0.00259$$
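The binomial law translates directly into code using `math.comb` (Python 3.8+). A minimal sketch; `binomial_pmf` is a hypothetical helper name, and the usage line reproduces the active-speaker example.

```python
from math import comb

def binomial_pmf(k: int, n: int, p: float) -> float:
    """P_n(k) = C(n, k) p^k (1 - p)^(n - k)."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)

# Active-speaker example: n = 8, p = 1/3, P(k > 6) = P(7) + P(8)
p_more_than_6 = sum(binomial_pmf(k, 8, 1 / 3) for k in (7, 8))
print(round(p_more_than_6, 5))  # 0.00259
```

Summing `binomial_pmf(k, 8, 1 / 3)` over k = 0, …, 8 gives 1 up to rounding, a quick sanity check on the formula.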

The multinomial probability law

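Let B1, B2, …, Bm be a partition of S with P(Bj) = pj. Let the experiment be repeated n times independently, and let Bj occur kj times, so that k1 + k2 + … + km = n. Then the probability of the vector (k1, k2, …, km) is

$$P(k_1, k_2, \dots, k_m) = \frac{n!}{k_1!\,k_2!\cdots k_m!}\,p_1^{k_1} p_2^{k_2} \cdots p_m^{k_m}$$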

Example:

Pick 10 phone numbers at random and note the last digit of each. What is the probability that each of the digits 0 to 9 is obtained exactly once (in any order)?

$$\text{Probability} = \frac{10!}{1!\,1!\cdots 1!}\,(0.1)^{10} \approx 3.6 \times 10^{-4}$$
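The multinomial law can be evaluated with an exact integer coefficient followed by a floating-point product. A minimal sketch; `multinomial_pmf` is a hypothetical helper name, and the usage line reproduces the phone-number example.

```python
from math import factorial, prod

def multinomial_pmf(counts, probs) -> float:
    """n!/(k1! ... km!) * p1^k1 ... pm^km, with n = k1 + ... + km.

    counts -- occurrences k_j of each outcome B_j
    probs  -- probabilities p_j of the outcomes (one per count)
    """
    if len(counts) != len(probs):
        raise ValueError("need one probability per count")
    coeff = factorial(sum(counts))
    for k in counts:
        coeff //= factorial(k)   # exact integer multinomial coefficient
    return coeff * prod(p**k for p, k in zip(probs, counts))

# Phone-number example: each digit 0..9 exactly once, p_j = 0.1
print(f"{multinomial_pmf([1] * 10, [0.1] * 10):.3e}")  # 3.629e-04
```

Dividing the factorial step by step keeps the coefficient an exact integer, so only the final product introduces rounding.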

The Geometric probability law

- Here, the outcome is the number of independent Bernoulli trials until the first success occurs, so S = {1, 2, …}.

The probability P(m) that m trials are required is the probability that the first m − 1 trials result in failures and the m-th trial is a success. Let Ai = "success in the i-th trial", and let $A_i^c$ denote its complement (failure). Then

$$P(m) = P(A_1^c \cap A_2^c \cap \dots \cap A_{m-1}^c \cap A_m) = (1-p)^{m-1}\,p, \qquad m = 1, 2, \dots$$

The probability that more than k trials are required before a success, with q = 1 − p:

$$P[\{m > k\}] = p \sum_{m=k+1}^{\infty} q^{m-1} = p\,q^{k} \sum_{j=0}^{\infty} q^{j} = p\,q^{k}\,\frac{1}{1-q} = q^{k}$$
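The closed-form tail P(m > k) = q^k can be checked against a truncated sum of the geometric probabilities. A sketch with hypothetical helper names `geometric_pmf` and `geometric_tail`; p = 0.3 and k = 3 are arbitrary test values.

```python
def geometric_pmf(m: int, p: float) -> float:
    """P(m) = (1 - p)^(m - 1) p for m = 1, 2, ..."""
    if m < 1:
        raise ValueError("m must be a positive integer")
    return (1 - p) ** (m - 1) * p

def geometric_tail(k: int, p: float) -> float:
    """P(m > k) = q^k with q = 1 - p."""
    return (1 - p) ** k

# Compare the closed form with a truncated sum of the pmf
p, k = 0.3, 3
truncated = sum(geometric_pmf(m, p) for m in range(k + 1, 500))
print(truncated, geometric_tail(k, p))  # both approximately 0.343
```

Truncating at m = 499 drops a remainder of q^499, which is far below floating-point precision here.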

Example:

Computer A sends a message to computer B. The probability of error is 0.1, and if an error occurs, B requests that A resend the message. What is the probability that the message is transmitted more than twice?

Sol.

Each transmission is a Bernoulli trial with P(success) = 0.9 and P(failure) = 0.1, so

$$P(m > 2) = q^{2} = (1 - 0.9)^{2} = (0.1)^{2} = 0.01$$
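A short simulation of the retransmission scheme, as a sketch: each transmission is modeled as an independent Bernoulli trial with success probability 0.9, and the fraction of messages needing more than two transmissions should approach q² = 0.01. The helper name, sample size and seed are arbitrary.

```python
import random

def transmissions_until_success(p_success: float, rng: random.Random) -> int:
    """Count transmissions up to and including the first success."""
    m = 1
    while rng.random() >= p_success:  # failure with probability 1 - p_success
        m += 1
    return m

rng = random.Random(42)
n = 200_000
more_than_two = sum(transmissions_until_success(0.9, rng) > 2 for _ in range(n))
print(more_than_two / n)  # close to (0.1) ** 2 = 0.01
```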