Final Report


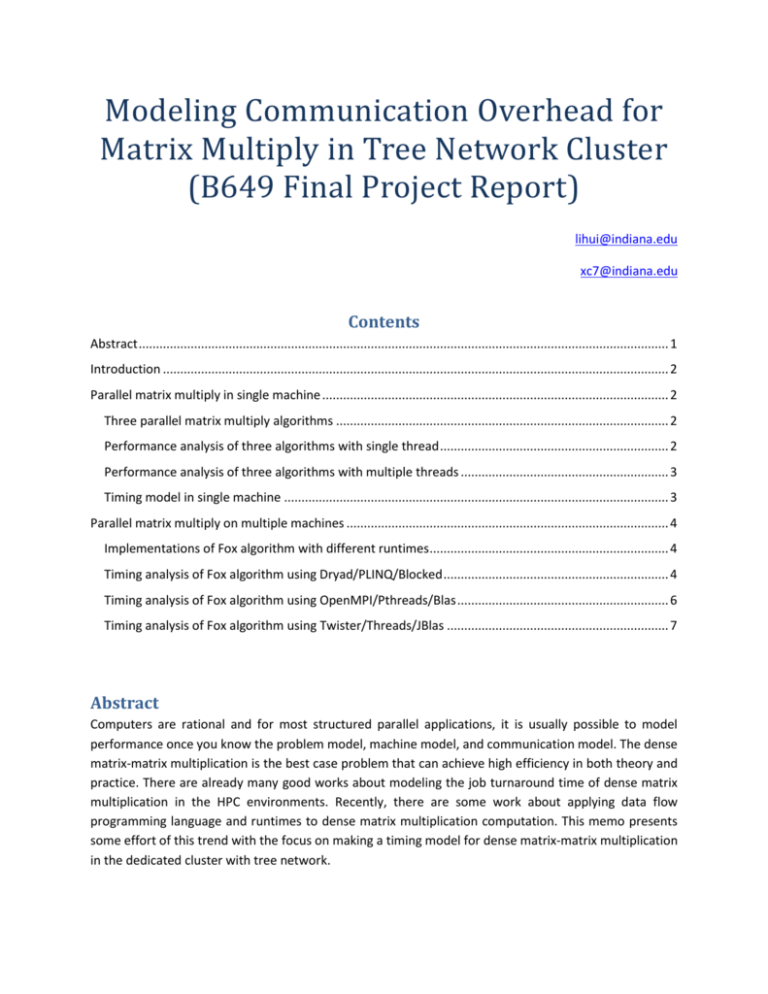

Modeling Communication Overhead for Matrix Multiply in Tree Network Cluster
(B649 Final Project Report)

lihui@indiana.edu
xc7@indiana.edu

Contents

Abstract
Introduction
Parallel matrix multiply in single machine
    Three parallel matrix multiply algorithms
    Performance analysis of three algorithms with single thread
    Performance analysis of three algorithms with multiple threads
    Timing model in single machine
Parallel matrix multiply on multiple machines
    Implementations of Fox algorithm with different runtimes
    Timing analysis of Fox algorithm using Dryad/PLINQ/Blocked
    Timing analysis of Fox algorithm using OpenMPI/Pthreads/Blas
    Timing analysis of Fox algorithm using Twister/Threads/JBlas

Abstract

Computers are rational, and for most structured parallel applications it is usually possible to model performance once the problem model, machine model, and communication model are known. Dense matrix-matrix multiplication is a best-case problem that can achieve high efficiency in both theory and practice. Much good work already exists on modeling the job turnaround time of dense matrix multiplication in HPC environments. More recently, there has been some work on applying data flow programming languages and runtimes to dense matrix multiplication. This memo contributes to that trend, with a focus on building a timing model for dense matrix-matrix multiplication in a dedicated cluster with a tree network.

Introduction

The linear algebra problems studied in this memo have the form $C = \alpha A B + \beta C$. To simplify the problem model, we assume that matrices A and B are square dense matrices and that $\alpha = 1.0$, $\beta = 0.0$. We implemented several parallel dense matrix-matrix multiplication algorithms using state-of-the-art runtimes in a cluster with a tree network. These algorithms can be classified according to their communication patterns, such as how the steps of the communication pipeline are overlapped and how computation and communication are overlapped. Among the parallel matrix multiplication algorithms, we mainly study the Fox algorithm, which is also called the broadcast-multiply-roll (BMR) algorithm. To obtain reasonably general results that apply to a range of situations, we simplify the machine model by assuming it has one CPU and one shared memory space, with a processing speed of $T_{flops}$. Lastly, we assume the jobs run in a cluster with a tree network, which is very common in data centers, with a communication speed of $T_{comm}$. The goal of this study is to model $T_{comm}/T_{flops}$, the communication overhead per double-precision floating-point operation of matrix multiply.
The difficulty of our work lies in modeling the communication overhead of the Fox algorithm implemented with specific runtimes in a cluster with a tree network.

Parallel matrix multiply in single machine

Three parallel matrix multiply algorithms

1) Naïve algorithm (3 loops approach)
2) Blocked algorithm (6 loops approach)
3) Blas

Performance analysis of three algorithms with single thread

Figure 1. Mflops for three algorithms with Java
Figure 2. Mflops for three algorithms with C

As shown in Figure 1, the Java Blas and blocked matrix multiply perform better than the naïve approach because the first two approaches have better cache locality. The Jblas version is much faster than the Java blocked algorithm because Jblas invokes Fortran code via JNI to execute the computation. Figure 2 shows that both cblas and the blocked algorithm perform better than the naïve version, but cblas is a little slower than the blocked version we implemented. The reason is that the current cblas library is not optimized for the CPU on Quarry. We are writing to UITS about a vendor-provided blas_dgemm, such as Intel lapack.

Performance analysis of three algorithms with multiple threads

Figure 3. Threaded CBlas code in bare metal in FG
Figure 4. Threaded JBlas code in bare metal in FG
Figure 5. Threaded CBlas code in VM in FG
Figure 6. Threaded JBlas code in VM in FG

Figures 3, 4, 5, and 6 show the job turnaround time of the threaded CBlas and JBlas programs for various numbers of threads and matrix sizes on bare metal and in VMs in the FutureGrid (FG) environment.

Timing model in single machine

We build a timing model of matrix multiply on a single machine:
$$T = f \cdot t_f + m \cdot t_m = (2N^3)\, t_f + (3N^2)\, t_m$$

1) $N$ = order of the square matrix
2) $f$ = number of arithmetic operations ($f = 2N^3$)
3) $m$ = number of elements read from or written to memory ($m = 3N^2$)
4) $t_m$ = time per memory read/write operation
5) $t_f$ = time per arithmetic operation

Parallel matrix multiply on multiple machines

Implementations of Fox algorithm with different runtimes

Figure 7 shows the relative parallel efficiency of the Fox algorithm implemented with different runtimes. This section analyzes the timing model of the Fox algorithm in Dryad and MPI.

Figure 7. Fox algorithms with different runtimes

Timing analysis of Fox algorithm using Dryad/PLINQ/Blocked

To analyze the experimental results above theoretically, we build a timing model for the Fox-Hey algorithm on Tempest. Assume that the $M \times M$ matrix multiplication job is partitioned and run on a mesh of $\sqrt{N} \times \sqrt{N}$ nodes. The subblock held by each node has size $m \times m$, where $m = M/\sqrt{N}$. The "broadcast-multiply-roll" cycle of the algorithm is repeated $\sqrt{N}$ times.

For each such cycle: since the network topology of Tempest is simply a star rather than a mesh, it takes $\sqrt{N} - 1$ steps to broadcast the A submatrix to the other $\sqrt{N} - 1$ nodes in the same row of the processor mesh. In each step, the overhead of transferring data between two processes includes 1) the startup time (latency), 2) the network time to transfer the data, and 3) the disk IO time for writing the data to local disk and reloading it from disk into memory.

Note: the extra disk IO overhead is common in cloud runtimes such as Hadoop. In Dryad, data transfer usually goes through a file pipe over NTFS.
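The broadcast-multiply-roll cycle described above can be illustrated with a small serial simulation. This is only a sketch in plain Python, not the Dryad, MPI, or Twister code used in the experiments: the helper names (`split_blocks`, `join_blocks`, `fox_multiply`) are hypothetical, the "nodes" are entries of a q x q grid of blocks held in one process (q plays the role of $\sqrt{N}$), and the broadcast and roll are modeled as list operations rather than network transfers.

```python
def matmul(a, b):
    """Plain triple-loop product of two square blocks (lists of rows)."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def split_blocks(mat, q):
    """Cut an M x M matrix into a q x q grid of m x m blocks, m = M / q."""
    m = len(mat) // q
    return [[[row[bj * m:(bj + 1) * m] for row in mat[bi * m:(bi + 1) * m]]
             for bj in range(q)] for bi in range(q)]


def join_blocks(blocks):
    """Reassemble a q x q grid of m x m blocks into one M x M matrix."""
    q, m = len(blocks), len(blocks[0][0])
    return [[blocks[bi][bj][r][c] for bj in range(q) for c in range(m)]
            for bi in range(q) for r in range(m)]


def fox_multiply(a, b, q):
    """Simulate the Fox (broadcast-multiply-roll) algorithm on a q x q grid."""
    m = len(a) // q
    A, B = split_blocks(a, q), split_blocks(b, q)
    C = [[[[0.0] * m for _ in range(m)] for _ in range(q)] for _ in range(q)]
    for step in range(q):                       # the cycle repeats q times
        for i in range(q):
            # Broadcast: one A block per mesh row goes to every node in that row.
            a_bcast = A[i][(i + step) % q]
            for j in range(q):
                # Multiply: each node accumulates a_bcast times its current B block.
                prod = matmul(a_bcast, B[i][j])
                for r in range(m):
                    for c in range(m):
                        C[i][j][r][c] += prod[r][c]
        # Roll: every node takes the B block of the node below it (cyclically).
        B = [[B[(i + 1) % q][j] for j in range(q)] for i in range(q)]
    return join_blocks(C)
```

For example, with a 6 x 6 input and q = 3 (nine simulated nodes, 2 x 2 blocks), `fox_multiply(a, b, 3)` should agree with `matmul(a, b)` on the full matrices.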
Given these per-step costs, the time to broadcast the A submatrix is:

$$(\sqrt{N} - 1)\,\bigl(T_{lat} + m^2 (T_{io} + T_{comm})\bigr)$$

(Note: in a good implementation, pipelining will remove the factor $(\sqrt{N} - 1)$ from the broadcast time.)

As the "roll" of the B submatrix can be parallelized and run within one step, its time overhead is:

$$T_{lat} + m^2 (T_{io} + T_{comm})$$

The time to actually compute the submatrix product (including the multiplications and additions) is:

$$2 m^3\, T_{flops}$$

The total computation time of the Fox-Hey matrix multiplication is:

$$T = \sqrt{N}\,\Bigl(\sqrt{N}\,\bigl(T_{lat} + m^2 (T_{io} + T_{comm})\bigr) + 2 m^3\, T_{flops}\Bigr) \tag{1}$$

$$T = N\, T_{lat} + M^2 (T_{io} + T_{comm}) + 2\,\frac{M^3}{N}\, T_{flops} \tag{2}$$

The last term in equation (2) is the expected "perfect linear speedup", while the other terms represent communication overheads. In the following paragraphs, we investigate $T_{flops}$ and $T_{io} + T_{comm}$ in actual timing results.

$$T_{16 \times 1} = 21\,\mathrm{s} + 3.24\,\mu\mathrm{s} \cdot M^2 + 1.33 \times 10^{-3}\,\mu\mathrm{s} \cdot M^3 \tag{4}$$

$$T_{16 \times 24} = 61\,\mathrm{s} + 1.55\,\mu\mathrm{s} \cdot M^2 + 4.96 \times 10^{-5}\,\mu\mathrm{s} \cdot M^3 \tag{5}$$

Equation (4) gives the timings for the Fox-Hey algorithm running with one core per node on 16 nodes. Equation (5) gives the timings for the Fox-Hey/PLINQ algorithm running with 24 cores per node on 16 nodes.

Equation (6) is the value of $T_{flops,\,single\ core} / T_{flops,\,24\ cores}$ for large matrix sizes. It verifies the correctness of the cubic term coefficients of equations (4) and (5), as 26.8 is near 24, the number of cores in each node. Equation (7) is the value of $(T_{comm} + T_{io})_{single\ core} / (T_{comm} + T_{io})_{24\ cores}$ for large matrix sizes. The value is 2.08, while the ideal value would be 1.0. The difference can be reduced by fitting the function to results for larger matrix sizes. The intercept in equations (3), (4), and (5) is the cost of initializing the computation, such as runtime startup and allocating memory for the matrices.
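As a quick numerical check of the algebra above, the following sketch evaluates the per-cycle form (1) and the expanded form (2) and confirms that they agree, then recomputes the coefficient ratios from the fitted equations (4) and (5). The function names and the parameter values used in the check are illustrative assumptions, not measurements from the experiments; only the fitted coefficients come from the text.

```python
import math


def fox_time_eq1(M, N, t_lat, t_io, t_comm, t_flops):
    """Equation (1): sqrt(N) cycles of broadcast + roll + multiply."""
    q = math.isqrt(N)                    # mesh is q x q, so N must be a perfect square
    m = M / q                            # submatrix order per node
    per_step = t_lat + m**2 * (t_io + t_comm)
    broadcast = (q - 1) * per_step       # q - 1 sequential sends (no pipelining)
    roll = per_step                      # all nodes roll B in one parallel step
    compute = 2 * m**3 * t_flops
    return q * (broadcast + roll + compute)


def fox_time_eq2(M, N, t_lat, t_io, t_comm, t_flops):
    """Equation (2): the same total, expanded in terms of M and N."""
    return N * t_lat + M**2 * (t_io + t_comm) + 2 * (M**3 / N) * t_flops


def fitted_time(M, intercept_s, quad_us, cubic_us):
    """Fitted curves such as (4) and (5); coefficients in microseconds, result in seconds."""
    return intercept_s + (quad_us * M**2 + cubic_us * M**3) * 1e-6


# Coefficients copied from equations (4) and (5).
FLOPS_RATIO = 1.33e-3 / 4.96e-5      # cubic terms: single core vs. 24 cores
IO_COMM_RATIO = 3.24 / 1.55          # quadratic terms: single core vs. 24 cores
```

With the rounded coefficients shown in the text, `FLOPS_RATIO` comes out at about 26.8, and `IO_COMM_RATIO` at roughly 2.09, close to the 2.08 quoted for the quadratic terms.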
$$\frac{T_{flops,\,single\ core}}{T_{flops,\,24\ cores}} = \frac{1.33 \times 10^{-3}}{4.96 \times 10^{-5}} \approx 26.8 \tag{6}$$

$$\frac{(T_{comm} + T_{io})_{single\ core}}{(T_{comm} + T_{io})_{24\ cores}} = \frac{3.24}{1.55} \approx 2.08 \tag{7}$$

$$\frac{T_{io}}{T_{comm}} \approx 5 \tag{8}$$

Equation (8) gives the value of $T_{io}/T_{comm}$ for large submatrix sizes. The value shows that although the disk IO cost contributes more to the communication overhead than the network cost does, the two are of the same order for large submatrix sizes, so we use their sum as the coefficient of the quadratic term in equation (2). In addition, one must bear in mind that the so-called communication and IO overhead actually includes other overheads, such as string parsing and index initialization, which depend on how the code is written.

Fig. 8. Parallel overhead $(1/\varepsilon - 1)$ vs. $(N \cdot 10^3)/(2M)$. Series: real curve, approximate curve, and linear fit to the approximate curve, $y = 2.2172x - 0.4099$.

Fig. 8 plots the parallel overhead $(1/\varepsilon - 1)$ against $(N \cdot 10^3)/(2M)$ (note: the red curve is the fitting function), with $\varepsilon$ calculated directly from equations (9), (3), and (5). This experiment investigates the overhead term $\frac{N}{2M} \cdot \frac{T_{comm} + T_{io}}{T_{flops}}$ for small $(N \cdot 10^3)/(2M)$. The linear approximate curve (large matrix sizes) shows that the functional form of equation (9) is correct. Equation (10) is the value of the linear coefficient of $\frac{N}{2M}$.

$$\varepsilon = \frac{1}{N} \cdot \frac{\text{time on 1 processor}}{\text{time on } N \text{ processors}} \approx \frac{1}{1 + \dfrac{N}{2M} \cdot \dfrac{T_{comm} + T_{io}}{T_{flops}}} \tag{9}$$

$$\frac{T_{comm} + T_{io}}{T_{flops}} = 2.217 \times 10^3 \tag{10}$$

Timing analysis of Fox algorithm using OpenMPI/Pthreads/Blas

Environment: OpenMpi 1.4.1/Pthreads/RedHat Enterprise 4/Quarry

Fig. 9. MPI/Pthreads
Fig. 10. Relative parallel efficiency

$$T_{9 \times 8} = 0.971898 + 5.61299 \times 10^{-8}\, x^2 + 1.69192 \times 10^{-10}\, x^3 \tag{1}$$

$$T_{16 \times 8} = 0.475334 + 1.3172 \times 10^{-8}\, x^2 + 9.89912 \times 10^{-11}\, x^3 \tag{2}$$

$$T_{25 \times 8} = 0.129674 + 4.38066 \times 10^{-8}\, x^2 + 6.21113 \times 10^{-11}\, x^3 \tag{3}$$

Analysis of $T_{flops}$, the cubic term coefficient of equations (1), (2), and (3):

$$\frac{16.9}{6.2} = 2.72 \approx \frac{25}{9} = 2.77$$

$$\frac{9.8}{6.2} = 1.58 \approx \frac{25}{16} = 1.56$$

Note: the quadratic term coefficients ($T_{comm} + T_{lat}$) of equations (1), (2), and (3) are not consistent, because performance fluctuated during the experiments. In addition, when the assigned nodes are located in different racks, ($T_{comm} + T_{lat}$) changes due to the increased number of hops.

Todo:
(1) Run more experiments to eliminate the performance fluctuation.
(2) Ask the system admin about the network topology of Quarry.
(3) Study whether MPI broadcast uses poly-algorithms that adjust to different network topologies.

Analysis: Fox/MPI/Pthread scale-out for large matrix sizes and different numbers of compute nodes

Fig. 11. Parallel overhead vs. 1/sqrt(grain size) for the 16-node (4*4) and 25-node (5*5) cases (note: the x axes are not consistent)

Timing analysis of Fox algorithm using Twister/Threads/JBlas

Performance comparison between Jblas and Blocked version (note: replace with absolute performance)

Figure 12. Relative parallel efficiency vs. size of submatrix per node (various task granularities) for the Jblas and blocked algorithms on 16 nodes.

As Figure 12 clearly indicates, the parallel efficiency degraded dramatically after porting to Jblas. The reason is that computation takes up a smaller proportion of the total time in the Jblas version than in the blocked version. In addition, we found that, for the same problem size, running the job on 25 nodes is even slower than running it on just 16 nodes.
As a result, communication overhead has become the bottleneck for the Fox/Twister/Threads/Jblas implementation. The current implementation uses only a single Naradabroker instance, but with the peer-to-peer data transfer function turned on.

Figure 13. Parallel overhead vs. 1/sqrt(grain size) in Fox/Twister/Thread/Jblas, for 16 nodes and 25 nodes.
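The overhead plots in this report share one construction, which the sketch below makes explicit. It computes the parallel efficiency and the parallel overhead $1/\varepsilon - 1$ from a pair of timings, and shows why the overhead predicted by equation (9) is linear in 1/sqrt(grain size). Two things here are assumptions rather than statements from the experiments: the function names are hypothetical, and "grain size" is taken to mean the $m^2$ elements of one submatrix per node with $m = M/\sqrt{N}$. The numbers used in any check are placeholders, not measured values.

```python
import math


def parallel_efficiency(t_one, t_n, n_nodes):
    """epsilon = (1/N) * (time on 1 processor) / (time on N processors)."""
    return t_one / (n_nodes * t_n)


def parallel_overhead(t_one, t_n, n_nodes):
    """Parallel overhead, 1/epsilon - 1."""
    return 1.0 / parallel_efficiency(t_one, t_n, n_nodes) - 1.0


def inv_sqrt_grain(M, n_nodes):
    """1/sqrt(grain size), ASSUMING grain size = m*m with m = M/sqrt(N)."""
    m = M / math.sqrt(n_nodes)
    return 1.0 / m


def modeled_overhead(M, n_nodes, comm_over_flops):
    """Overhead implied by equation (9): (N/(2M)) * (Tcomm+Tio)/Tflops."""
    return n_nodes / (2.0 * M) * comm_over_flops
```

Because $N/(2M) = (\sqrt{N}/2) \cdot (1/m)$, the modeled overhead for a fixed node count is a straight line through the origin when plotted against `inv_sqrt_grain`, with slope $(\sqrt{N}/2) \cdot (T_{comm}+T_{io})/T_{flops}$. Under this assumption the slope depends on the number of nodes, so curves for 16 and 25 nodes would not be expected to coincide even in the ideal model.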