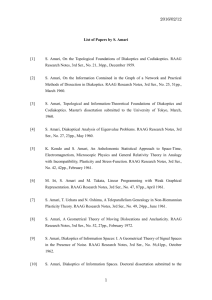

Information Geometry and Machine Learning: An Invitation

Prof. Jun Zhang (University of Michigan Ann Arbor)

Part A (Information Geometry): 3 lectures
Part B (Machine Learning Application): 2 lectures
Optional (Open Research Questions): 1 lecture

Information geometry is the differential geometric study of the manifold of probability density functions (or probability distributions on discrete support). From a geometric perspective, a parametric family of probability density functions on a sample space is modeled as a differentiable manifold, where points on the manifold represent the density functions themselves and coordinates represent the indexing parameters. Information geometry is seen as an emerging tool for providing a unified perspective on many branches of information science, including coding, statistics, machine learning, and inference and decision.

This lecture series will provide an introduction to the fundamental concepts of information geometry as well as a sample application to machine learning. Each lecture will be 2 hours.

Part A will introduce the foundations of information geometry, including topics such as: Kullback-Leibler divergence and Bregman divergence, Fisher-Rao metric, conjugate (dual) connections, alpha-connections, statistical manifold, curvature, dually flat manifold, exponential family, natural parameter/expectation parameter, affine immersion, equiaffine geometry, centro-affine immersion, alpha-Hessian manifold, and symplectic, Kähler, and Einstein-Weyl structures of information systems.

Part B will start with the regularized learning framework, introducing reproducing kernel Hilbert space, semi-inner product, reproducing kernel Banach space, representer theorem, feature map, kernel trick, support vector machine, and l1-regularization and sparsity. Application of information geometry to kernel methods will be discussed at the end of this mini-course, as will other open research questions.

Students at advanced undergraduate and graduate levels are welcome. The instructor looks forward to recruiting motivated mathematics students to work in this exciting new area of applied mathematics.

PREREQUISITE: A first course in differential geometry is expected for Part A. Real analysis or functional analysis is expected for Part B.

MATERIALS:

(A.1) S. Amari and H. Nagaoka (2000). Methods of Information Geometry. AMS Translations of Mathematical Monographs, vol. 191. Oxford University Press.

(A.2) U. Simon, A. Schwenk-Schellschmidt, and H. Viesel (1991). Introduction to the Affine Differential Geometry of Hypersurfaces. Science University of Tokyo Press.

(A.3) J. Zhang (2004). Divergence function, duality, and convex analysis. Neural Computation, vol. 16, 159-195.

(B.1) S. Amari and S. Wu (1999). Improving support vector machine classifiers by modifying kernel functions. Neural Networks, vol. 12 (no. 6), 783-789.

(B.2) F. Cucker and S. Smale (2001). On the mathematical foundations of learning. Bulletin of the American Mathematical Society, vol. 39 (no. 1), 1-49.

(B.3) T. Poggio and S. Smale (2003). The mathematics of learning: Dealing with data. Notices of the American Mathematical Society, vol. 50 (no. 5), 534-544.