Computational Methods - Operations Research and Financial



CHAPTER 3


COMPUTATIONAL METHODS


The computational methods of a reliability monitoring system are responsible for ensuring data quality, computing travel times from different sources, generating reliability information, and analyzing the relationship between travel time variability and the causes of congestion. At one level, the computational engine can be thought of as a travel time processing unit: it takes the raw data in the tables outlined in Chapter 2, cleans it and fills in gaps, computes travel times and error estimates, and stores the results in summary tables. It also serves as a reliability computation unit: upon request from an external system, it assembles historically archived travel times into distributions and calculates reliability measures from them. Finally, it supports analysis of the causes of unreliability by modeling the relationship between travel time variability and various congestion factors.

This chapter is broken into six subsections that detail the functional requirements of the travel time reliability monitoring system:

Ensuring Data Quality describes the process of evaluating the quality of the raw data and performing imputation for missing or invalid data.

Calculating Travel Times details the steps and computations for transforming the raw data compiled from various sources into link travel times.

Validating Travel Times gives guidance on prioritizing facilities for travel time validation and on how the validation can be performed.

Assembling Travel Time Distributions describes how historical travel times are formed into probability density functions.

Reporting Reliability describes the message exchange between the reliability monitoring system and external systems used to communicate reliability information in response to requests.

Analyze Effects of Non-Recurring Events describes the regression model that can be used to form statistical relationships between travel time variability and its causes.

As discussed in Chapter 1, both demand-side and supply-side perspectives on travel time reliability need to be kept in mind when reading this material. The demand-side perspective focuses on individual travelers and the travel times they experience in making trips. Here, observations of individual vehicle (trip) travel times are important. The travel time density functions of interest describe the travel times experienced by individual travelers at a specific point in time, and the percentiles of those travel times (e.g., the 15th, 50th, and 85th percentiles) describe the travel times of those travelers. The supply-side perspective focuses on consistency in the average (or median) travel times for segments or routes. In this case, the observations are the average (or median) travel times on these segments or routes in specific time periods (e.g., 5-minute intervals). The overall average is the mean of these 5-minute average travel time observations, and the probability density function captures the variation in the average travel time across some span of time. The percentiles therefore pertain to specific points in the probability density function of these observed average travel time values.

Both perspectives are important. The first relates to the demand side of the travel marketplace. Individual travelers want information that helps them determine when they need to leave to arrive on time, or how long a given trip is likely to take with some degree of confidence in the arrival time. The second serves the supply side: system operators who want to understand the consistency of the segment- and route-level travel times provided, and to make capital investments and operational changes that enhance that consistency. The text that follows strives to make clear which perspective applies in each part of the material. The word "average" is retained wherever average travel times are referenced, even where it may seem redundant, so that the reader does not mistakenly assume that actual trip travel times are being discussed.
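A small sketch makes the distinction concrete. It uses made-up trips, and the data, the 5-minute binning by departure time, and the helper names are assumptions for illustration. The demand-side view takes percentiles directly over individual trip travel times. The supply-side view first averages the trips within each 5-minute interval and then takes percentiles over those interval averages.

```python
from collections import defaultdict
from statistics import mean


def percentile(values, p):
    """Percentile (p in [0, 100]) with linear interpolation between closest ranks."""
    xs = sorted(values)
    if not xs:
        raise ValueError("no values")
    pos = (len(xs) - 1) * p / 100.0
    lo = int(pos)
    hi = min(lo + 1, len(xs) - 1)
    return xs[lo] + (xs[hi] - xs[lo]) * (pos - lo)


def interval_averages(trips, interval_s=300):
    """Group (departure_time_s, travel_time_s) trips into fixed intervals by
    departure time and return the mean travel time of each non-empty interval."""
    bins = defaultdict(list)
    for depart, tt in trips:
        bins[depart // interval_s].append(tt)
    return [mean(v) for _, v in sorted(bins.items())]


# Hypothetical trips: (departure time in seconds, trip travel time in seconds)
trips = [(0, 600), (60, 660), (120, 900), (310, 620), (400, 640),
         (500, 1000), (610, 610), (700, 630), (850, 700)]

# Demand side: percentiles of individual trip travel times
demand_side = [percentile([tt for _, tt in trips], p) for p in (15, 50, 85)]
# Supply side: percentiles of the 5-minute average travel times
supply_side = [percentile(interval_averages(trips), p) for p in (15, 50, 85)]
print("individual trips:", demand_side)
print("5-min averages:  ", supply_side)
```

With these numbers, the interval averages span a narrower range than the individual trips, because averaging within each interval smooths out individual delays.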

ENSURING DATA QUALITY

The most prevalent type of roadway data currently available to agencies comes from point sensors, such as loop and radar detectors. While these data sources provide valuable information about roadway performance, they have two main drawbacks: (1) detectors frequently malfunction, and (2) detectors provide data only at fixed points over time, whereas travel times require data over spatial segments. The first issue is best dealt with through an imputation process, which fills in the data holes left by bad detectors. The second issue is discussed in the Calculating Travel Times section of this chapter. It can be resolved by extrapolating speeds along the roadway segments located between point detectors.

An alternative approach to improving the quality of infrastructure-based data is to calibrate detector-based travel time estimates with travel times from AVI/AVL probe data. Research has been performed on this approach, but its benefits are uncertain. For example, past research attempted to use transit AVL data to calibrate loop detector speeds, but found that it is impossible to determine the probe vehicle's speed at the loop location (11.9). The approach also assumes that agencies will have both infrastructure-based and vehicle-based sensors deployed on the same route, which is not guaranteed. Agencies that do have both types of resources are advised to rely on the vehicle-based estimates when sufficient samples are available, because those sensors measure travel time more accurately. Alternatively, agencies can employ a fusion method that combines inputs from both data sources to estimate a representative travel time. Both of these approaches are preferable to calibrating point sensor data with vehicle-based data.

As such, this section focuses on the critical issue of imputation, describing best practices for identifying bad detectors and imputing data for infrastructure-based sources. Imputation for vehicle-based (AVI/AVL) sources is less crucial to producing accurate reliability estimates. Even so, the section also describes general approaches for imputing their data where sensors are broken or vehicle samples are insufficient.

Infrastructure-Based Sensors

Rigorous imputation is a two-step process. First, the system needs to systematically determine which detectors are malfunctioning. Then, the data from the broken detectors must be discarded and the resulting holes filled in with imputed values.

Identifying Bad Detectors

Detectors can experience a wide range of errors. There can be problems with the detector itself or its roadside controller cabinet hardware, or a failure somewhere in the chain of communications infrastructure that brings a detector's data from the individual lane to a centralized location. Each of these errors produces distinctive, diagnosable signs of distress in the data that the detector transmits. There are two broad categories of methods for identifying bad detectors based on their data: (1) real-time data quality measurement and (2) post-hoc data quality measurement. This section briefly describes real-time data quality measurement, but post-hoc methods are recommended for implementation in a reliability monitoring system.

Real-time data quality measurement methods run algorithms on each sample transmitted by an individual detector, so a detector health diagnosis is made for each 30-second data sample. Because traffic conditions can vary so widely from one 30-second period to another, however, it is difficult to define "acceptable" ranges within which a detector can be considered good and outside of which it can be assumed to be broken. Additionally, detectors record different levels of traffic depending on the time of day, the day of week, and other factors such as facility type, geographic location, and lane number. As a result, this method requires calibrating diagnostics for each individual detector, making it impractical for large-scale deployments.

The best practice for reliability monitoring is to use post-hoc data quality measurement methods. These methods make diagnostic determinations based on the time series of a detector's data, that is, the data submitted over an entire day (2). This approach works because, once detectors go bad, they typically stay bad until the problem is resolved, so they are usually bad for multiple days at a time. Under this framework, a detector can be marked as bad for a given day if its data show any of the following four symptoms:

Too many samples with zero occupancy and zero flow;

Too many samples with non-zero occupancy and zero flow;

Too many samples with very high occupancy; or

Too many samples with constant occupancy and flow values.

Agencies are advised to run detector health algorithms only on data collected during hours when the facility carries significant traffic volumes (for example, between 5:00 AM and 10:00 PM). Otherwise, low-volume samples collected during the early morning hours could cause a working detector to be falsely diagnosed as bad, particularly under the first symptom listed above. Additionally, agencies should establish threshold levels for each symptom that reflect the unique traffic patterns in their regions. For instance, thresholds should be set differently for detectors in urban and rural areas. Working detectors in rural areas may submit many samples that fall into the first category simply because there is little traffic. Conversely, working detectors on congested urban facilities may submit many samples that fall into the third category because of queuing.
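A minimal sketch of the post-hoc daily test described above follows. It assumes one day of 30-second samples per detector, each given as (seconds since midnight, flow, occupancy as a fraction). It counts how many samples between 5:00 AM and 10:00 PM show each of the four symptoms, and it flags the detector when any count exceeds its threshold. The threshold numbers, the "very high occupancy" cutoff, the definition of a constant sample (identical to the previous one), and the function names are placeholder assumptions, not the values in Exhibit 11-1. Agencies would calibrate their own.

```python
from dataclasses import dataclass


@dataclass
class Thresholds:
    # Hypothetical daily limits on the number of symptomatic samples; calibrate locally.
    zero_flow_zero_occ: int = 1200
    occ_without_flow: int = 50
    high_occ: int = 200
    constant: int = 300
    high_occ_cutoff: float = 0.35  # occupancy fraction treated as "very high" (assumed)


def diagnose_day(samples, th=Thresholds(), start_s=5 * 3600, end_s=22 * 3600):
    """samples: iterable of (seconds_since_midnight, flow, occupancy) for one detector-day.
    Returns the symptoms whose counts exceed their thresholds ([] means healthy)."""
    counts = {"zero_flow_zero_occ": 0, "occ_without_flow": 0, "high_occ": 0, "constant": 0}
    prev = None
    for t, flow, occ in samples:
        if not (start_s <= t < end_s):
            continue  # skip low-volume night hours to avoid false "bad" diagnoses
        if flow == 0 and occ == 0:
            counts["zero_flow_zero_occ"] += 1
        elif flow == 0 and occ > 0:
            counts["occ_without_flow"] += 1
        if occ > th.high_occ_cutoff:
            counts["high_occ"] += 1
        if prev is not None and (flow, occ) == prev:
            counts["constant"] += 1  # same values as the previous in-window sample
        prev = (flow, occ)
    return [name for name, n in counts.items() if n > getattr(th, name)]


# A detector stuck at flow 7 and occupancy 8% all day is flagged as constant.
stuck = [(t, 7, 0.08) for t in range(0, 86400, 30)]
print(diagnose_day(stuck))
```

Keeping the thresholds in one object makes it straightforward to hold separate urban and rural settings, as recommended above.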

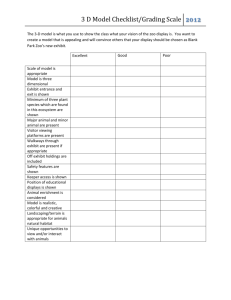

To serve as an example, the thresholds listed in Exhibit 11-1 can be used to detect

malfunctioning detectors in urban areas. In addition, Chapter 4 describes the methodology used

to identify bad detectors that were stuck or not collecting data in the Northern Virginia case

study.

41

Imputing Data

42

43

44

Once the system has classified infrastructure-based detectors as good or bad, it must

remove the data transmitted by the bad detectors so that invalid travel times are not incorporated

into the reliability computations. However, removing bad data points will leave routes and

Exhibit 11-1

PeMS Urban Detector Health Thresholds

7

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

facilities with incomplete data sets for time periods where one or more detectors were

malfunctioning. Since travel time computations for infrastructure-based sensors rely on the

aggregation and extrapolation of values from every detector on the route, travel time

computations for a route would be compromised each time a detector failed (which, given the

nature of detectors, will occur frequently). For this reason, imputation is required to fill in the

data holes and thus enable the calculation of travel times and variability. Exhibit 11-2 shows the

high-level concept of imputation.

Exhibit 11-2

Imputation

From a data management perspective, agencies are advised to store and archive both the raw data and the imputed data in parallel, rather than discarding the raw data sent by detectors diagnosed as bad. This ensures that, when imputation methods change, the data can be re-imputed where necessary. It also gives system users the ability to critically examine the results of imputation where desired.

When imputation is needed for infrastructure-based data, it must begin at the detector level. The strength of an imputation regime is determined by the quality and quantity of data available for imputation. The best imputations are derived from local data, such as from neighboring sensors or from historical patterns observed when the detector was working. Modest imputations can also be performed in areas that have good global information, by forming general regional relationships between detectors in different lanes and configurations. It is important to have an imputation regime that makes it possible to impute data for any broken detector, regardless of its characteristics. As such, the best practice is to implement a series of imputation methods, employed in order of the accuracy of their estimates. In other words, when the best imputation method is not possible for a certain detector and time period, the next-best approach can be attempted. That way, data that can be imputed by the most accurate method will be, but imputation will also be possible for detectors that cannot support the most rigorous methods.

The following imputation methods are recommended, where possible, in this order, to fill in data holes for bad detectors (2). The first two methods make use of real-time data from neighboring detectors, while the third and fourth rely on historically archived values.

Linear Regression from Neighbors Based on Local Coefficients

This imputation method is the most accurate because the occupancies and volumes of detectors in adjacent locations are highly correlated. Infrastructure-based detectors can be considered neighbors if they are at the same location in different lanes, or if they are at adjacent locations upstream or downstream. In this approach, an offline regression analysis is used to continuously determine the relationship between each pair of neighbors in the system. Then, when a detector is broken, its flow and occupancy values can be estimated from those of its working neighbors. The regression equations can take the following form:

Equation 11-1

$$q_i(t) = \alpha_0(i,j) + \alpha_1(i,j)\, q_j(t)$$

Equation 11-2

$$k_i(t) = \beta_0(i,j) + \beta_1(i,j)\, k_j(t)$$

where (i,j) is a pair of detectors, q is flow, k is occupancy, and t is a specified time period (for example, 5 minutes). The terms $\alpha_0(i,j)$, $\alpha_1(i,j)$, $\beta_0(i,j)$, and $\beta_1(i,j)$ are parameters estimated between each pair of loops using five days of historical data. These parameters can be determined for any pair of loops that report data to a historical database.
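A minimal sketch of Equations 11-1 and 11-2 follows. It assumes each detector's history is an aligned list of 5-minute flows (or occupancies). Coefficients for each neighbor pair are fitted by ordinary least squares. When several neighbors are working, the sketch combines their estimates with a median. That combination rule and the names `fit_pair` and `impute_from_neighbors` are assumptions for illustration; the text above specifies only the pairwise regression.

```python
from statistics import median


def fit_pair(x, y):
    """Ordinary least squares for y = a0 + a1 * x (detector i on neighbor j).
    Returns (a0, a1)."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length series with at least 2 points")
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("neighbor series is constant; slope is undefined")
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    a1 = sxy / sxx
    return my - a1 * mx, a1


def impute_from_neighbors(coeffs, neighbor_now):
    """coeffs: {neighbor_id: (a0, a1)} fitted offline from historical data.
    neighbor_now: {neighbor_id: current value} for neighbors that are working.
    Returns the median of the per-neighbor estimates, or None if none are usable."""
    estimates = [a0 + a1 * neighbor_now[j]
                 for j, (a0, a1) in coeffs.items() if j in neighbor_now]
    return median(estimates) if estimates else None


# Hypothetical historical 5-minute flows for neighbor j and broken detector i
hist_j = [100, 120, 150, 90, 200]
hist_i = [55, 65, 80, 50, 105]
coeffs = {"j": fit_pair(hist_j, hist_i)}  # (5.0, 0.5)
print(impute_from_neighbors(coeffs, {"j": 140}))
```

The global-coefficient variant described next would use the same fitting step, keyed by configuration rather than by a specific pair of loops.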

Linear Regression from Neighbors Based on Global Coefficients

This imputation method is similar to the first. It is needed because some loops are always broken and never report data, which makes it impossible to compute local regression coefficients for them. For these loops, it is possible to generate a set of global parameters that express the relationship between pairs of loops in different configurations, where configuration refers to location on the freeway and lane number. A linear model is developed for each possible combination of configurations and takes the following form:

Equation 11-3

$$q_i(t) = \alpha_0^*(\delta, l_i, l_j) + \alpha_1^*(\delta, l_i, l_j)\, q_j(t)$$

Equation 11-4

$$k_i(t) = \beta_0^*(\delta, l_i, l_j) + \beta_1^*(\delta, l_i, l_j)\, k_j(t)$$

where (i,j) is a pair of detectors; $\delta = 0$ if i and j are at the same location and 1 otherwise; $l_i$ is the lane number of detector i; and $l_j$ is the lane number of detector j. The $\alpha^*$ and $\beta^*$ terms are derived using locally available data for each of the different configurations. Exhibit 11-3 shows an example of this type of imputation routine.

Exhibit 11-3

Imputation Routine Example

Temporal Medians

The two linear regression methods above can break down during time periods when adjacent detectors are not reporting data themselves (for example, during a widespread communication outage). In this case, temporal medians can be used as imputed values. A temporal median is determined by looking at the data samples transmitted by the detector for the same day of week and time of day over the previous ten weeks. The imputed value is the median of those historical values.

Cluster Medians

This is the imputation method of last resort, for those detectors and time periods where none of the above three methods is possible. A cluster is defined as a group of detectors that have the same macroscopic flow and occupancy patterns over the course of a typical week. An example cluster is all of the detectors monitoring a freeway into the downtown area of a city. These detectors would all have comparable macroscopic patterns, in that their AM and PM peak data would be similar because of commuting traffic. Clusters can be defined within each region. For each detector in the cluster, the joint vector of aggregate hourly flow and occupancy values can be assembled. From these, the median time-of-day and day-of-week profile for each cluster can be calculated.
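A minimal sketch of building a cluster's median weekly profile follows. It assumes that cluster membership has already been decided (the text does not prescribe a clustering method). It also assumes each detector's history is a list of (day_of_week, hour, flow, occupancy) hourly aggregates. For each day-of-week and hour slot, the sketch takes the median flow and the median occupancy across all of the cluster's records. Taking the two medians separately is one simple reading of the "joint vector" profile described above, and it is an assumption, as are the function names.

```python
from collections import defaultdict
from statistics import median


def cluster_profile(histories):
    """histories: {detector_id: [(day_of_week, hour, flow, occupancy), ...]} for one cluster.
    Returns {(day_of_week, hour): (median_flow, median_occupancy)} across its detectors."""
    flows, occs = defaultdict(list), defaultdict(list)
    for records in histories.values():
        for dow, hour, flow, occ in records:
            flows[(dow, hour)].append(flow)
            occs[(dow, hour)].append(occ)
    return {key: (median(flows[key]), median(occs[key])) for key in flows}


def impute_from_cluster(profile, dow, hour):
    """Last-resort imputation: look up the cluster's median values for this slot."""
    return profile.get((dow, hour))


# Hypothetical hourly aggregates for three detectors on a downtown-bound freeway
histories = {
    "d1": [(0, 8, 1800, 0.12), (0, 9, 1500, 0.10)],
    "d2": [(0, 8, 2000, 0.14)],
    "d3": [(0, 8, 1900, 0.20)],
}
profile = cluster_profile(histories)
print(impute_from_cluster(profile, 0, 8))
```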


Once values have been imputed for all broken detectors, the data set within the system will be complete, albeit composed of both raw data and imputed values. It is important that the quantity and quality of imputed data be tracked. That is, the database should store statistics on what percentage of the data behind a travel time estimate, and thus behind a travel time reliability measure, is imputed, and which methods were used to impute it. This allows analysts to make their own judgments about what percentage of good raw data must underlie a measure for it to be usable in analysis.
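The ordered fallback and the bookkeeping recommended above can be sketched as follows. Each imputation method is passed in as a function that returns a value, or None when it cannot be applied. The first method that succeeds supplies the value, and its name is recorded alongside it, so that the share of imputed data and the methods used can be reported for any estimate. The `temporal_median` function implements the rule stated earlier (same day of week and time of day over the previous ten weeks). The slot-numbering scheme, the function names, and the lambda stand-ins for the regression and cluster methods are assumptions.

```python
from statistics import median
from typing import Callable, Optional

Method = Callable[[str, int], Optional[float]]  # (detector_id, slot) -> value or None


def temporal_median(history, weeks=10, slots_per_week=7 * 288) -> Method:
    """history: {(detector_id, slot): non-imputed value}, where slot counts 5-minute
    periods from a fixed epoch. The returned method takes the median of the same
    day-of-week/time-of-day slot over the previous `weeks` weeks."""
    def method(det, slot):
        past = [history[(det, slot - w * slots_per_week)]
                for w in range(1, weeks + 1)
                if (det, slot - w * slots_per_week) in history]
        return median(past) if past else None
    return method


def impute(det, slot, raw, methods):
    """raw: {(detector_id, slot): value} from good detectors only.
    methods: ordered [(name, Method)], most accurate first.
    Returns (value, source): source is 'raw', a method name, or 'missing'."""
    if (det, slot) in raw:
        return raw[(det, slot)], "raw"
    for name, method in methods:
        value = method(det, slot)
        if value is not None:
            return value, name
    return None, "missing"


def imputed_share(results):
    """Fraction of (value, source) results that did not come from raw data."""
    return sum(src != "raw" for _, src in results) / len(results) if results else 0.0


W = 7 * 288  # 5-minute slots per week
s = 20 * W + 100  # the slot being imputed
history = {("d1", s - w * W): 400 + 10 * w for w in range(1, 11)}
methods = [
    ("local_regression", lambda d, t: None),   # stand-in: no usable neighbors
    ("global_regression", lambda d, t: None),  # stand-in: no usable neighbors
    ("temporal_median", temporal_median(history)),
    ("cluster_median", lambda d, t: 380.0),    # stand-in cluster profile lookup
]
print(impute("d1", s, {}, methods))  # falls through to the temporal median
```

Storing the `source` label next to each value is what makes the percentage-imputed statistics described above cheap to compute later.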

Vehicle-Based Sensors

The biggest quality control issue with vehicle-based sensor data is filtering out unrepresentative travel times collected from travelers who make a stop or take a different route. This issue is discussed in the Calculating Travel Times section of this chapter. This section describes methods for identifying broken AVI sensors. It also describes methods for imputing data where sensors are broken or too few vehicle samples were obtained in a given time period.

Identifying Broken AVI Sensors

There are two scenarios in which an AVI sensor might transmit no data: (1) the sensor is broken, or (2) no vehicle equipped for sampling passed by during the time period. It is therefore important to implement a method for distinguishing between these two cases, so that broken sensors can be detected and fixed. A simple test is to flag a sensor as broken if it does not report any samples throughout an entire day.

The system computes travel times for segments defined by two sensors positioned at the segment's beginning and ending points. For example, sensors A and B define segment A-B. The numbers of vehicles measured at each sensor can be written as $n_A$ and $n_B$, respectively, and the number of vehicles matched over segment A-B as $n_{A\text{-}B}$. As a rule:

Equation 11-5

$$n_{A\text{-}B} \le \min(n_A, n_B)$$

If either sensor is broken, or there is little to no traffic equipped for sampling, then $n_A \approx 0$ or $n_B \approx 0$, and therefore $n_{A\text{-}B} \approx 0$. There are thus three scenarios in which a vehicle-based sensor pair would report no travel time data:

Either or both of sensors A and B are broken;

No vehicle equipped for sampling passed by A or B during the time period; or

No vehicle was matched between A and B during the time period.

For the calculation of travel times, a broken sensor has the same consequence as a sensor that sees few or no equipped vehicles: there are not enough data points to calculate the travel time summary statistics for the segment during the time period.

As with the individual sensor malfunction test, a segment can be considered broken on day d if its sensors do not report any samples throughout the day. This "missing" data case is straightforward to detect. It is more difficult to handle the case where there are too few data points to construct meaningful travel time trends, even with the running-median method discussed in the Calculating Travel Times section of this chapter. For example, if only one data point per hour is observed over a 24-hour period, detailed 5-minute or hourly travel time trends cannot be recovered. Such data sparseness is expected at night, when traffic volumes are genuinely low, and does not cause problems during these free-flow periods. During peak hours, however, it is not acceptable. As such, the system should employ a test for data sparseness: a segment A-B can be considered to have sparse data on day d if the total number of samples throughout the day is less than a specified threshold (for example, 200 samples per day).

This approach looks at an entire day of data to determine whether sensors are broken and whether data are sparse. More granular approaches are also possible, such as running the above tests for individual hourly periods. However, more granular tests would have to use thresholds that vary by time of day and day of week to fit varying demand patterns.
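A minimal sketch of the daily tests for one AVI segment follows. It assumes the system has already counted, for day d, the vehicles read at each sensor ($n_A$, $n_B$) and the vehicles matched between them ($n_{A\text{-}B}$). The 200-sample sparseness threshold is the example value from the text. The function name, the treatment of "broken" as either sensor reporting nothing, and the ordering of the checks are assumptions.

```python
def classify_segment_day(n_a, n_b, n_ab, sparse_threshold=200):
    """Classify segment A-B for one day.
    n_a, n_b: vehicles read at sensors A and B; n_ab: vehicles matched between them.
    Returns 'broken', 'sparse', or 'ok'."""
    if n_ab > min(n_a, n_b):
        raise ValueError("matched count cannot exceed either sensor count (Equation 11-5)")
    if n_a == 0 or n_b == 0:
        return "broken"  # at least one sensor reported nothing all day
    if n_ab < sparse_threshold:
        return "sparse"  # too few matched travel times to recover detailed trends
    return "ok"


print(classify_segment_day(0, 500, 0))         # sensor A silent all day
print(classify_segment_day(800, 600, 150))     # reads at both ends, but few matches
print(classify_segment_day(5000, 4200, 1300))  # enough matched samples
```

An hourly version would call the same function per hour with a `sparse_threshold` looked up by time of day and day of week.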

Imputing Missing or Bad Vehicle-Based Sensor Data

A broken sensor has different consequences for vehicle-based and infrastructure-based sensors. For infrastructure-based sensors, missing data reduces the accuracy of route-level travel time estimates. For vehicle-based sensors, the effect is different. Suppose sensor B on segment A-B breaks, and there is a sensor C downstream of sensor B. It may no longer be possible to measure the travel time over A-B, but it is still possible to measure the travel time over segment A-C. Thus, with vehicle-based sensors, malfunctions affect the spatial granularity of reported travel times, not their accuracy.

If agencies do wish to impute data for missing vehicle-based travel time samples, a few options are available, outlined below in order of preference. Where the first option is not possible, the second can be used, and so on until imputation is achieved.

Infrastructure-Based Imputation

Where infrastructure-based detection is present, the best alternative is to use the observed detector speed values to estimate travel times for the missing time periods. If no infrastructure-based detection is present, or if the detectors are broken, another imputation method should be used.

Linear Regression from "Super Routes"

For segment A-B when sensor A is broken, the super routes are all routes that end at B and pass through A. For segment A-B when sensor B is broken, the super routes are all routes that start at A and pass through B. Exhibit 11-4 shows examples of super route assignments for two broken AVI sensors and paths. Using these definitions, local coefficient relationships can be developed over time through linear regression between a route's travel time and the travel times of its super routes. The local coefficients from the super route with the strongest relationship to the route (the "optimal super route") can then be used to impute missing travel times for the route. The process is akin to the first infrastructure-based imputation method (linear regression from neighbors based on local coefficients).
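A minimal sketch of the super-route approach follows. It assumes aligned historical travel times (one value per time period) are available for the target route and for each candidate super route. It fits a least-squares line from each super route to the route and selects as the "optimal super route" the candidate with the highest coefficient of determination (R²). It then uses that fit to impute the route's missing travel time. Using R² as the measure of "strongest relationship", and all function and route names here, are assumptions.

```python
def fit_with_r2(x, y):
    """OLS fit of y = a0 + a1 * x; returns (a0, a1, r_squared). x must not be constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((v - mx) ** 2 for v in x)
    syy = sum((v - my) ** 2 for v in y)
    sxy = sum((u - mx) * (v - my) for u, v in zip(x, y))
    a1 = sxy / sxx
    # If the route's history is constant, a flat line (a1 == 0) fits it exactly.
    r2 = sxy * sxy / (sxx * syy) if syy > 0 else 1.0
    return my - a1 * mx, a1, r2


def impute_via_super_route(route_hist, super_hists, super_now):
    """route_hist: historical travel times of the route (e.g., A-B), aligned by period.
    super_hists: {super_route_id: aligned historical travel times}.
    super_now: {super_route_id: travel time observed in the period being imputed}.
    Returns (imputed_travel_time, chosen_super_route) or (None, None)."""
    best = None
    for sid, hist in super_hists.items():
        if sid not in super_now or len(hist) != len(route_hist) or len(set(hist)) < 2:
            continue  # no current observation, misaligned, or unusable history
        a0, a1, r2 = fit_with_r2(hist, route_hist)
        if best is None or r2 > best[0]:
            best = (r2, sid, a0, a1)
    if best is None:
        return None, None
    _, sid, a0, a1 = best
    return a0 + a1 * super_now[sid], sid


# Hypothetical travel times (seconds) for route A-B and two super routes ending at B
route_ab = [300, 320, 360, 400]
supers = {"Z-B": [500, 520, 560, 600], "Y-B": [700, 760, 720, 800]}
print(impute_via_super_route(route_ab, supers, {"Z-B": 580, "Y-B": 750}))
```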

Temporal Median

If neither of the above two options is possible, a temporal median approach can be used, equivalent to the third infrastructure-based imputation method. A temporal median is the median of the historical, non-imputed travel time values reported for that segment for the same day of week and time of day over the most recent ten weeks.

Exhibit 11-4

Super Route Examples

CALCULATING TRAVEL TIMES

This section focuses on developing average travel times from the data available from a particular measurement technology. Accurate average travel time reliability measures can only be generated from valid average travel times, and the process for transforming raw data into segment average travel times depends on the technology used for data collection.

Infrastructure-based sensors measure data only at discrete points on the roadway. They capture every vehicle, but can report only spot (time-mean) speeds, that is, the average speed of vehicles passing one fixed point on the roadway. For these detectors, calculating segment average travel times from raw data requires (1) estimating spot speeds from the raw data and (2) transforming these speed estimates into segment travel times. In contrast, vehicle-based sensors directly report individual vehicle travel times, but only for some of the roadway's travelers. In this case, the main computational steps are to (1) associate the vehicle travel times with specific segments and/or routes; (2) filter out non-representative travel times, such as those involving a stop; and (3) determine whether enough valid travel time observations remain to compute an accurate representative average travel time for the time period. Chapter 4 shows how segment and route regimes for freeway and arterial networks were defined and identified in the San Diego case study.

An important aside is that the median travel time is actually a more useful and stable statistic, because it is less sensitive to outliers, although it is not often employed. Almost all estimation methodologies today compute means rather than medians, for two reasons. The process is much simpler: one divides the sum of the observations by the number of observations. In addition, averages add across segments while medians may not. The effect of outliers on the mean can be diminished by removing observations that deviate significantly from the rest of the data. However, this can make it more difficult to observe incidents and other disruptive events. The material presented here focuses principally on averages, although one can think in terms of medians as well.
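A small worked illustration shows why averages are easier to combine across segments. It uses made-up travel times for the same five vehicles on two consecutive segments. The mean route travel time equals the sum of the segment means, but the median route travel time need not equal the sum of the segment medians.

```python
from statistics import mean, median

# Hypothetical travel times (seconds) of the same five vehicles on two consecutive segments
seg1 = [60, 62, 65, 70, 200]   # one vehicle is delayed on segment 1
seg2 = [200, 50, 55, 58, 60]   # a different vehicle is delayed on segment 2
route = [a + b for a, b in zip(seg1, seg2)]

print(mean(route), mean(seg1) + mean(seg2))        # means add across segments
print(median(route), median(seg1) + median(seg2))  # medians need not
```

The same data also show the outlier sensitivity noted above: on segment 1, the single delayed vehicle pulls the mean (91.4 s) well above the median (65 s).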

Infrastructure-Based Sensors

Estimating Spot (Time-Mean) Speeds

Most infrastructure-based sensors transmit some combination of flow, occupancy, and speed data; observations for individual vehicles are not available. Where sensors do not directly measure speeds (which is the case for most loop detectors), average speeds must be estimated in order to calculate average travel times. This is typically done by using a "g-factor", which represents the average length of a vehicle, to estimate speeds from flow and occupancy data using the equation below:

Equation 11-6

$$v(t) = \frac{q(t)}{T \cdot k(t) \cdot g(t)}$$

Here, v(t) is the estimated average speed in time period t, q(t) is the observed flow (vehicle count) in the period, k(t) is the observed occupancy, and T is the duration of the period. The quantity 1/g is the average effective vehicle length, which is the sum of the actual vehicle length and the width of the loop detector. At a given loop, the average vehicle length will vary by time of day and day of the week due to truck traffic patterns. For example, trucks make up a large share of traffic in the early morning hours, while automobile traffic dominates during commute hours. Vehicle length also depends on the lane the loop is in. Loops in the right-most lanes will see longer average vehicle lengths than those in the left-hand lanes, since trucks usually travel in the right lanes. Despite this variability, most agencies use a constant g-factor that is applied across all loops and all time periods in a region. Analysis of this approach has shown that it can introduce errors of up to 50% into speed estimates (4). Average travel time computations require the aggregation and extrapolation of multiple loop detector speed estimates, so these errors have major ramifications for the accuracy of calculated average travel times. As such, agencies are advised to implement methods that account for the time and location dependency of the g-factor.

G-factors can be estimated for each time of day and each individual detector using a week's worth of detector data. The above equation can be rearranged so that speed is the known variable and the g-factor is the unknown. With this rearrangement, g-factors can be estimated for all free-flow time periods. Free-flow conditions can be identified in the data as time periods where the occupancy falls below 15%. For these conditions, free-flow speeds can be approximated, ideally based on speed data obtained from the field for different lanes and facility types. An example of speeds by facility type, lane, and number of lanes derived from double-loop detectors in the San Francisco Bay Area is shown in Exhibit 11-5.

Exhibit 11-5

Measured Speeds by Lane, San Francisco Bay Area

Using the assumed free-flow speeds, g-factors can be calculated for all free-flow time periods according to the equation below:

Equation 11-7

$$g(t) = \frac{q(t)}{T \cdot k(t) \cdot V_{free}}$$

where g(t) is the g-factor at time of day t (a 5-minute period), k(t) is the observed occupancy at t, q(t) is the observed flow at t, T is the duration of the time period (for example, 5 minutes), and $V_{free}$ is the assumed average free-flow speed.

Running this equation over the data transmitted by a loop detector over a typical week yields a number of g-factor estimates at various hours of the day for each day of the week. These estimates can be formed into a scatterplot of g-factor versus time. To obtain g-factors for every 5-minute period of each day of the week, the scatterplot data points can be fitted with a regression curve, resulting in a function that gives a complete set of g-factors for each loop detector. An example of this analysis is shown in Exhibit 11-6. In the figure, the dots represent g-factors directly estimated during free-flow conditions, and the line shows the smooth regression curve (5). The 5-minute g-factors from the regression function can then be stored in a database for each loop detector and used in real time to estimate speeds from flow and occupancy values.

Exhibit 11-7 shows an example of the g-factor functions for two detectors on a freeway in Oakland, California: one in the left-most lane (Lane 1) and one in the right-most lane (Lane 5). As is evident from the plot, the g-factors in the left lane are relatively stable across times of day and days of the week, but the g-factors in the right lane vary widely over time.

G-factors should be updated periodically to account for seasonal variations in vehicle lengths or changes in truck traffic patterns. It is recommended that new g-factors be generated at least every three months.
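A minimal sketch of the g-factor procedure above for one detector follows. It assumes 5-minute records of (minute of day, flow count, occupancy as a fraction) pooled over a typical week, T = 5 minutes expressed in hours, and a single assumed free-flow speed in mph. It applies Equation 11-7 to free-flow samples (occupancy below 15%). It then smooths the scattered estimates over time of day with a Gaussian kernel-weighted average and uses Equation 11-6 to estimate speed. The kernel smoother is a stand-in for the unspecified "regression curve". The bandwidth, units, and names are assumptions. This sketch treats time of day linearly; a fuller version would also condition on day of week and wrap around midnight.

```python
import math

T_HOURS = 5 / 60  # duration of each period (5 minutes), in hours


def g_from_free_flow(records, v_free_mph, occ_cutoff=0.15):
    """Equation 11-7 applied to free-flow samples only.
    records: [(minute_of_day, flow_count, occupancy_fraction), ...]
    Returns [(minute_of_day, g)], with g in 1/miles (1/g = effective length in miles)."""
    return [(m, q / (T_HOURS * k * v_free_mph))
            for m, q, k in records if 0 < k < occ_cutoff and q > 0]


def smooth_g(points, minute, bandwidth_min=60.0):
    """Gaussian kernel-weighted average of the g estimates around `minute`
    (a stand-in for fitting a regression curve through the scatterplot)."""
    weights = [math.exp(-0.5 * ((m - minute) / bandwidth_min) ** 2) for m, _ in points]
    total = sum(weights)
    if total == 0:
        raise ValueError("no g-factor estimates near this time of day")
    return sum(w * g for w, (_, g) in zip(weights, points)) / total


def speed_mph(flow_count, occupancy, g):
    """Equation 11-6: v = q / (T * k * g)."""
    return flow_count / (T_HOURS * occupancy * g)


# Hypothetical detector: 20-ft effective length (g = 264 per mile), 65 mph free flow,
# plus one congested sample at 8:00 AM that the occupancy test excludes.
k0 = 100 / (T_HOURS * 264 * 65)
records = [(m, 100, k0) for m in range(0, 1440, 5)] + [(480, 150, 0.30)]
points = g_from_free_flow(records, v_free_mph=65)
g_8am = smooth_g(points, 480)
print(round(g_8am, 3), round(speed_mph(120, 0.10, g_8am), 1))
```

Rerunning `g_from_free_flow` and `smooth_g` on fresh data, for example every three months as recommended above, keeps the stored factors current.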


Exhibit 11-6

5-minute G-Factor Estimates at a Detector

Exhibit 11-7

G-Factors at a Detector on I-880 North, Oakland, CA

Estimating Average Travel Times

The steps in the previous subsection produce average spot (time-mean) speed estimates at multiple loop detector locations. Nothing is known about individual or average vehicle speeds between the fixed detectors. A process is therefore needed for using the spot speeds along a segment or route to estimate average segment or route travel times. The most common way to do this is to assume that the average spot speed measured at each detector applies to half the distance to the next upstream and downstream detectors.
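A minimal sketch of the half-distance rule follows. It assumes detectors are given as (milepost, average spot speed in mph) pairs sorted in the direction of travel, and that the segment runs from the first detector to the last. Each detector's speed is applied from the midpoint with its upstream neighbor to the midpoint with its downstream neighbor; the two end detectors cover only the inward half. The function name and units are assumptions.

```python
def segment_travel_time_min(detectors):
    """detectors: [(milepost_mi, spot_speed_mph), ...] sorted by milepost.
    Returns the estimated average travel time (minutes) from the first to the last detector."""
    if len(detectors) < 2:
        raise ValueError("need at least two detectors")
    total_h = 0.0
    for i, (pos, speed) in enumerate(detectors):
        # Zone covered by this detector: halfway to each neighbor
        start = pos if i == 0 else (detectors[i - 1][0] + pos) / 2
        end = pos if i == len(detectors) - 1 else (pos + detectors[i + 1][0]) / 2
        total_h += (end - start) / speed  # hours spent in this detector's zone
    return total_h * 60


# 0.5 mi at 60 mph + 1.0 mi at 30 mph + 0.5 mi at 60 mph = 0.5 + 2.0 + 0.5 minutes
print(segment_travel_time_min([(0.0, 60.0), (1.0, 30.0), (2.0, 60.0)]))
```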

After the average spot speeds have been used to approximate how average speeds vary along the roadway, average segment or route travel times can be computed. Where average travel times must be computed in real time (to be posted on a variable message sign, or VMS, for example), this can be done by summing the average segment travel times that are available when the trip starts. However, this method is inaccurate for long trips, especially during peak periods, because speeds are likely to change as congestion levels fluctuate while the vehicle is traveling through the network.

The best way to compute an average travel time from infrastructure-based sensor speeds is to: (1) calculate the average travel time for the first route segment using that segment's average travel time at the trip's departure time; (2) for the next segment, use the average speed at the time the vehicle is expected to arrive at that segment, as estimated from the average travel times already calculated for the preceding segments; and (3) repeat step (2) until the average travel time for the entire route has been computed.

Exhibit 11-8, which shows detected average spot speeds along a route and its route

sections, can be used to illustrate the difference between the two methods. For real-time display

purposes, for a trip departing at time t=0, the average route travel time can be calculated as

follows:

Equation 11-8

$$tt_{total,\,t=0} = tt_{1,\,t=0} + tt_{2,\,t=0} + tt_{3,\,t=0} + tt_{4,\,t=0}$$

For all other purposes, by using average segment travel times stored in an archival

database, the average route travel time can be calculated as follows:

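Equation 11-9

$$tt_{total,\,t=0} = tt_{1,\,t=0} + tt_{2,\,t=tt_1} + tt_{3,\,t=tt_1+tt_2} + tt_{4,\,t=tt_1+tt_2+tt_3}$$

where each $tt_k$ in a time subscript is the travel time already computed for segment $k$ along the trip.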

Exhibit 11-8

Route Section Travel Times
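The difference between summing travel times at the departure time and following the vehicle through time, as in the three-step procedure above, can be sketched with archived segment travel times stored per 5-minute departure interval. The data layout, the function names, and the choice to hold the last interval when the trip runs past the end of the data are assumptions of this sketch.

```python
import math
from typing import List

INTERVAL_MIN = 5.0  # archived segment travel times are stored per 5-minute interval


def instantaneous_route_tt(seg_tt: List[List[float]], depart_idx: int) -> float:
    """Real-time style: sum every segment's travel time at the departure interval."""
    return sum(tt[depart_idx] for tt in seg_tt)


def trajectory_route_tt(seg_tt: List[List[float]], depart_idx: int) -> float:
    """Archive style: look up each segment at the interval in which the vehicle is
    expected to arrive there, based on the travel time accumulated so far."""
    elapsed = 0.0
    for tt in seg_tt:
        idx = depart_idx + math.floor(elapsed / INTERVAL_MIN)
        idx = min(idx, len(tt) - 1)  # assumption: hold the last interval past the data
        elapsed += tt[idx]
    return elapsed
```

In a hypothetical four-segment route where segments 2 and 3 congest shortly after departure, the two methods can diverge substantially:

```python
seg_tt = [[6, 6, 6, 6, 6], [3, 6, 6, 6, 6], [3, 3, 8, 8, 8], [2, 2, 2, 2, 2]]
instantaneous_route_tt(seg_tt, 0)  # 14 minutes
trajectory_route_tt(seg_tt, 0)     # 22 minutes
```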


Vehicle-Based Sensors


Generation of Passage Time Pairs for Bluetooth Readers (BTR)


The first step in calculating segment travel time PDFs for a roadway is calculating segment travel times for individual vehicles. A vehicle's segment travel time is the difference between its passage times at the destination and origin BTRs. Passage time is defined as the single point in time selected to represent when a vehicle passed through a BTR's detection zone. Chapter 7 describes a methodology for identifying passage times, which is applied in the Lake Tahoe case study in Chapter 15 and Appendix N.

Vehicles commonly generate multiple sequential visits at each BTR, and these may be interwoven in time with visits at other BTRs (see Exhibit 11-9). At BTRs with a significant mean zone traversal time, vehicles commonly generate multiple visits close together in time. The motivation for grouping visits is evident in Exhibit 11-10, where the vehicle was at the origin BTR multiple times (rows 1-3) before traveling to the destination BTR (row 4). Based on these data, three different travel times could be calculated: 1 to 4, 2 to 4, or 3 to 4. Which pair or pairs represent the most likely trip? The benefit of a more complex analysis of visits is that many likely false trips can be eliminated, improving the quality of the calculated travel times. Three methods of identifying segment trips are discussed below and applied in the Lake Tahoe case study in Appendix N.

Exhibit 11-9

Relationship Between Maximum Speed Error & BTR-to-BTR Distance with ΔT > 0

Exhibit 11-10

Visits for a Single Vehicle Between Two BTRs

| Row | Origin BTR Visit | Destination BTR Visit | Time | Observations per Visit |
|---|---|---|---|---|
| 1 | 1 | | Fri Jan 28 13:07:19 2011 | 7 |
| 2 | 2 | | Fri Jan 28 16:24:06 2011 | 13 |
| 3 | 3 | | Fri Jan 28 17:41:50 2011 | 25 |
| 4 | | 1 | Fri Jan 28 22:07:36 2011 | 3 |
| 5 | 4 | | Sat Jan 29 15:07:40 2011 | 4 |
| 6 | | 2 | Sun Jan 30 10:49:03 2011 | 129 |
| 7 | 5 | | Sun Jan 30 12:15:33 2011 | 3 |
| 8 | 6 | | Mon Jan 31 13:05:54 2011 | 10 |

Method 1: The first potential method for identifying segment trips is simple: create an origin-destination pair for every possible permutation of visits, except those that generate negative travel times (Exhibit 11-11). For example, the visits in Exhibit 11-10 include 6 origin visits and 2 destination visits, resulting in 12 possible pairs. Five of these pairs can be discarded because they generate negative travel times. Even so, this approach generates many passage time pairs that do not represent actual trips. Using this method, 243,777 travel times were generated between one pair of BTRs over a three-month period.
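Method 1 can be sketched directly on the visits in Exhibit 11-10. The encoding of each visit as (row, reader, timestamp), with "O" for the origin BTR and "D" for the destination BTR, and the function names are illustrative assumptions.

```python
from datetime import datetime

TIME_FORMAT = "%a %b %d %H:%M:%S %Y"

# Visits from Exhibit 11-10 as (row, reader, timestamp): "O" = origin, "D" = destination.
VISITS = [
    (1, "O", "Fri Jan 28 13:07:19 2011"),
    (2, "O", "Fri Jan 28 16:24:06 2011"),
    (3, "O", "Fri Jan 28 17:41:50 2011"),
    (4, "D", "Fri Jan 28 22:07:36 2011"),
    (5, "O", "Sat Jan 29 15:07:40 2011"),
    (6, "D", "Sun Jan 30 10:49:03 2011"),
    (7, "O", "Sun Jan 30 12:15:33 2011"),
    (8, "O", "Mon Jan 31 13:05:54 2011"),
]


def all_permutation_pairs(visits):
    """Method 1: pair every origin visit with every destination visit, dropping
    pairs whose travel time would be zero or negative."""
    parsed = [(row, kind, datetime.strptime(ts, TIME_FORMAT)) for row, kind, ts in visits]
    origins = [(row, ts) for row, kind, ts in parsed if kind == "O"]
    dests = [(row, ts) for row, kind, ts in parsed if kind == "D"]
    return [(o_row, d_row)
            for o_row, o_ts in origins
            for d_row, d_ts in dests
            if d_ts > o_ts]
```

Of the 6 × 2 = 12 candidate pairs, 5 are dropped and 7 remain: (1, 4), (1, 6), (2, 4), (2, 6), (3, 4), (3, 6), and (5, 6).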


Exhibit 11-11

Trips Generated from All Visit Permutations

Method 2: The second potential method for identifying segment trips is also simple, but it improves on the first method. It creates an origin-destination pair for every origin visit and the closest (in time) subsequent destination visit, as shown in Exhibit 11-12. Multiple origin visits may therefore be paired with a single destination visit. Applying this method to the data in Exhibit 11-10 generates 4 pairs: 1-4, 2-4, 3-4, and 5-6. On the full data set, this method generated 60,537 travel times between the origin and destination BTRs, eliminating 183,240 (75%) of the potential trips produced by the first method.
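Method 2 can be sketched on the same Exhibit 11-10 visits. The visit encoding and function name are illustrative assumptions; "closest in time" is interpreted here as the earliest destination visit that follows the origin visit.

```python
from datetime import datetime

TIME_FORMAT = "%a %b %d %H:%M:%S %Y"

# Visits from Exhibit 11-10 as (row, reader, timestamp): "O" = origin, "D" = destination.
VISITS = [
    (1, "O", "Fri Jan 28 13:07:19 2011"),
    (2, "O", "Fri Jan 28 16:24:06 2011"),
    (3, "O", "Fri Jan 28 17:41:50 2011"),
    (4, "D", "Fri Jan 28 22:07:36 2011"),
    (5, "O", "Sat Jan 29 15:07:40 2011"),
    (6, "D", "Sun Jan 30 10:49:03 2011"),
    (7, "O", "Sun Jan 30 12:15:33 2011"),
    (8, "O", "Mon Jan 31 13:05:54 2011"),
]


def first_destination_pairs(visits):
    """Method 2: pair each origin visit with the earliest destination visit that
    follows it; origin visits with no later destination visit stay unpaired."""
    parsed = [(row, kind, datetime.strptime(ts, TIME_FORMAT)) for row, kind, ts in visits]
    dests = sorted((ts, row) for row, kind, ts in parsed if kind == "D")
    pairs = []
    for o_row, kind, o_ts in parsed:
        if kind != "O":
            continue
        later = [row for ts, row in dests if ts > o_ts]
        if later:
            pairs.append((o_row, later[0]))
    return pairs
```

Origin visits in rows 7 and 8 have no later destination visit, so only 1-4, 2-4, 3-4, and 5-6 are produced.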

Exhibit 11-12

Trips Generated from All Origin Visits to First Destination Visit

Method 3: Vehicles frequently make multiple visits to an origin BTR before traveling to a

destination BTR. The third method of eliminating invalid segment trips aggregates those origin

visits that would otherwise be interpreted incorrectly as multiple trips between the origin and

destination readers. This method can be described as aggregating visits at the BTR network level.

This is an additional level of aggregation beyond aggregating individual observations into visits,

as discussed in the previous section. Logically, a single visit represents a vehicle's continuous

presence within a BTR's detection zone. In contrast, multiple visits aggregated into a single

grouping represent a vehicle's continuous presence within the geographic region around the

BTR, as determined by the distance to adjacent BTRs. This method is an example of using

knowledge of network topology to identify valid trips.

This method can be applied to the data in Exhibit 11-10, which shows 3 origin visits in rows 1, 2, and 3. The question is whether any of these visits can be aggregated or whether each should be considered a valid origin departure.

Exhibit 11-13

Trips Generated from Aggregating Origin Visits

The distance from the origin (BTR #7) to the destination (BTR #10) is 50 miles (100 miles round trip). Even at the maximum reasonable speed for that road segment (anything over 80 mph is unreasonable), a vehicle would take 76 minutes to make the round trip. Therefore, if the time between visits at the origin is less than 76 minutes, the visits can be aggregated and treated as a single visit. In Exhibit 11-10, visits 2 and 3 (rows 2 and 3) meet this criterion and can therefore be aggregated (Exhibit 11-13). This eliminates 1 of the 3 origin visits that could potentially be paired with the destination visit in row 4. Again, the idea is to identify when the vehicle was continuously within the geographic region around the origin BTR and to eliminate departure visits wherever possible. When this method was applied to the data set, it generated 39,836 travel times, eliminating 20,701 (34%) of the potential trips produced by the second method.
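The aggregation rule of Method 3 can be sketched as follows. This is an illustration under stated assumptions, not the case study's implementation: visits are given as hypothetical (start, end) times in minutes, sorted by start; the gap is measured from the end of one visit to the start of the next; and the last visit in each aggregated group is kept as the candidate departure.

```python
from typing import List, Tuple

Visit = Tuple[float, float]  # (start, end) of an origin visit, in minutes


def round_trip_threshold_min(distance_mi: float, max_speed_mph: float) -> float:
    """Shortest plausible time (minutes) to drive to the destination BTR and back."""
    return 2 * distance_mi / max_speed_mph * 60


def aggregate_origin_visits(visits: List[Visit], threshold_min: float) -> List[List[int]]:
    """Group consecutive origin visits (sorted by start) whose gap, from the end of
    one visit to the start of the next, is below the round-trip threshold."""
    groups: List[List[int]] = []
    for i, (start, _end) in enumerate(visits):
        if groups and start - visits[groups[-1][-1]][1] < threshold_min:
            groups[-1].append(i)  # too soon to have made the round trip: same stay
        else:
            groups.append([i])
    return groups


def candidate_departures(visits: List[Visit], threshold_min: float) -> List[int]:
    """Keep one departure per group: its last visit (an assumption of this sketch)."""
    return [group[-1] for group in aggregate_origin_visits(visits, threshold_min)]
```

With a hypothetical 40-mile link and an 80 mph cap, the threshold is 60 minutes; visits at minutes (0, 10), (200, 215), (260, 270), and (600, 610) collapse to three candidate departures because the second and third visits are only 45 minutes apart.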

Additional filters could be used to identify and eliminate greater numbers of trips. For

example, an algorithm could take advantage of graph topology and interspersed trips to other

BTRs to aggregate larger numbers of visits. In addition, the algorithm could potentially track


which destination visits had previously been paired with origin visits, eliminating unlikely trips.

If PDFs are developed based on historical data, selection among multiple competing origin visits

paired with a single destination visit could be probabilistic. These are potential topics for future

research.


Associating Travel Times with a Route


Automated Vehicle Identification (AVI) technologies report timestamps, reader IDs, and vehicle IDs for every equipped vehicle that passes a sensor. As such, it is simple to calculate average travel times or travel time density functions between two sensors, but there may be some uncertainty about the route the vehicle traveled between them. This can be important in arterial networks, where, depending on sensor placement, there can be more than one viable route between two sensors or sequences of sensors. The best way to address this issue is to place and space sensors so as to reduce the uncertainty in a vehicle's path. An example application is shown in Exhibit 11-9, where there are two possible paths between intersection A and intersection C: Path A-C and Path A-B-C. If only AVI data from A and C are available, it is difficult to tell which travel times correspond to which path. To resolve the issue, a third AVI sensor can be deployed in the middle of Path A-B-C to distinguish between the travel times of vehicles taking Path A-B-C and those taking Path A-C.

Exhibit 11-9

AVI Sensor Placement

Automated Vehicle Location (AVL) technologies track vehicles as they travel, but these data still need to be matched to specific paths and segments in the study network. One way to do this is through map-matching algorithms, which match location data received from vehicle-based sensors (longitude, latitude, point speed, bearing, and timestamps) to routes in the study network. Map matching is one of the core data processing algorithms for associating AVL-based travel time measurements with a route. A typical GPS map-matching algorithm uses the latitude, longitude, and bearing of a probe vehicle to search nearby roads. It then determines which route the vehicle is traveling on and the resulting link travel time. In many cases, as shown in Exhibit 11-10, there are multiple possible map-matching results. Thus, a number of GPS data mining methods have been developed to find the closest or most probable match. Map-matching algorithms for transportation applications can be divided into four categories: (1) geometric; (2) topological; (3) probabilistic; and (4) advanced (6). Geometric algorithms use only the geometry of the link, while topological algorithms also use the connectivity of the network. In probabilistic approaches, an error region is first used to determine matches, and then the topology is used when multiple links or link segments lie within the created error region. Advanced algorithms include Kalman filtering, Bayesian inference, belief theory, and fuzzy logic.
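A minimal geometric matcher that also checks the probe's bearing is sketched below. It assumes GPS points have already been projected to planar coordinates in meters; the link representation, the 30 m search radius, and the 45° heading tolerance are hypothetical choices, and the sketch does not use network topology or probabilities.

```python
import math
from typing import Dict, Optional, Tuple

Point = Tuple[float, float]  # planar (x, y) in meters, e.g., after projecting lat/lon


def point_segment_distance(p: Point, a: Point, b: Point) -> float:
    """Euclidean distance from point p to the line segment a-b."""
    ax, ay = a
    bx, by = b
    px, py = p
    dx, dy = bx - ax, by - ay
    seg_len2 = dx * dx + dy * dy
    if seg_len2 == 0:
        return math.hypot(px - ax, py - ay)
    u = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / seg_len2))
    return math.hypot(px - (ax + u * dx), py - (ay + u * dy))


def segment_bearing(a: Point, b: Point) -> float:
    """Compass-style bearing in degrees (0 = +y/north, 90 = +x/east)."""
    return math.degrees(math.atan2(b[0] - a[0], b[1] - a[1])) % 360


def match_point(p: Point, heading_deg: float, links: Dict[str, Tuple[Point, Point]],
                max_dist_m: float = 30.0, max_heading_diff: float = 45.0) -> Optional[str]:
    """Return the nearest link within max_dist_m whose direction agrees with the
    probe's heading, or None if no link qualifies."""
    best, best_d = None, max_dist_m
    for link_id, (a, b) in links.items():
        diff = abs((segment_bearing(a, b) - heading_deg + 180) % 360 - 180)
        d = point_segment_distance(p, a, b)
        if diff <= max_heading_diff and d <= best_d:
            best, best_d = link_id, d
    return best
```

A point equidistant from a northbound and an eastbound link is resolved by its heading, which is the role bearing plays in the typical algorithm described above.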

Another technique sometimes employed by AVL systems is to create "monument-to-monument" travel time records. Monuments are virtual locations defined by the system, akin to the end points of TPC segments, to and from which vehicles travel. When an AVL-equipped vehicle passes each monument, it creates a message packet indicating the monument it just passed, the associated timestamp, the previous monument passed and its timestamp, and the next monument in the path. Using this technique, data records akin to AVI detector-to-detector records are created and can be used to compute segment- and route-specific travel times.


Moreover, in some systems the path followed is also included in the data packet, so the route

followed is known as well.
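Turning monument packets into segment travel times can be sketched as below. The packet carries the fields listed above; the field names, the use of seconds, and the grouping by (previous monument, current monument) are assumptions of this sketch.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class MonumentPacket:
    """Fields described in the text; the names themselves are hypothetical."""
    vehicle_id: str
    monument: str
    timestamp_s: float
    prev_monument: Optional[str]
    prev_timestamp_s: Optional[float]
    next_monument: Optional[str]


def segment_travel_times(packets: List[MonumentPacket]) -> Dict[Tuple[str, str], List[float]]:
    """Group monument-to-monument travel times (seconds) by (from, to) segment."""
    out: Dict[Tuple[str, str], List[float]] = {}
    for pkt in packets:
        if pkt.prev_monument is None or pkt.prev_timestamp_s is None:
            continue  # first monument of a trip: no completed segment yet
        out.setdefault((pkt.prev_monument, pkt.monument), []).append(
            pkt.timestamp_s - pkt.prev_timestamp_s)
    return out
```

Each completed segment yields one record, much like an AVI reader pair, so the same downstream filtering can be applied.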


Filtering Unrepresentative Travel Times


Exhibit 11-10

GPS Points, Underlying Road Network, and Map Matching Results

The output of the previous step is, for each segment or route in the system, data for n trips that occurred over some time frame, labeled i = 1, …, n. Each observation i has a departure ("from") time s_i and a travel time t_i, the difference between its "to" and "from" times. These travel times can be processed to yield an average travel time and a travel time density function that represent the true experience of vehicles traversing the segment or route during the observed time period. Because some vehicles are likely to make stops or other detours along their path, those observations need to be identified and removed. Moreover, to guard against the impact of outliers, the median travel time is a better statistic to use than the mean.

The state of the practice in this area is to filter out invalid travel times by comparing the individual travel times measured in the current time period with the estimate computed for the previous time period. In systems in Houston and San Antonio, for example, if a travel time reported by an individual vehicle differs by more than 20% from the average travel time reported for the previous period, that vehicle's travel time is removed from the data set (7). In a reliability monitoring context, however, this could reduce the system's ability to capture large, sudden increases in travel times due to an incident or other event, and thus underestimate travel time variability. For this reason, the research team developed two other approaches that agencies can implement to ensure that final median travel time estimates for each time period are robust and representative of actual traffic conditions: (1) a simple time-period median approach; and (2) running-percentile smoothing. Both approaches use the median of observed travel times, rather than the mean, as the final estimate, since median values are less affected by outliers. Before either approach is applied, it is recommended that very high travel times (for example, those greater than five times the free-flow travel time) be removed from the data set.
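The two filters discussed above can be contrasted in a short sketch. The 20% tolerance and the five-times-free-flow cap come from the text; the function names and example values are hypothetical.

```python
from typing import List


def drop_extreme(travel_times: List[float], free_flow_tt: float,
                 factor: float = 5.0) -> List[float]:
    """Recommended pre-filter: drop travel times above factor x free-flow travel time."""
    return [t for t in travel_times if t <= factor * free_flow_tt]


def previous_period_filter(travel_times: List[float], prev_avg: float,
                           tol: float = 0.20) -> List[float]:
    """Houston/San Antonio-style rule: keep only travel times within +/- tol of
    the previous period's average travel time."""
    return [t for t in travel_times if abs(t - prev_avg) <= tol * prev_avg]
```

If an incident raises a 10-minute free-flow trip to 17 to 19 minutes, the previous-period rule discards those genuine observations (keeping only a 10.5-minute trip), whereas the five-times cap keeps them and removes only implausible values such as 55 or 60 minutes.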

The simpler of the two methods is called the "simple time-period median" method. For time period j (which could be 5 minutes or 1 hour), the median travel time T_j is initially calculated as the median of all t_i's whose departure time s_i falls in time period j. As long as more than half of the observed trips do not stop or take a different route, the estimate will not be affected by these "outliers." Once the travel time series T_j is obtained, geometric or exponential smoothing can be performed over the current and previous time periods to smooth the trend over time, giving more weight to the most recent T_j and to those T_j's with larger sample sizes, and thus higher accuracy. The drawback of this method is that if the current time period has too few data points, the median can still be affected by outliers. An example is shown in Exhibit 11-11, which shows the simple time-period median method applied to a day's worth of FasTrak toll tag data in Oakland, CA. As can be seen in the top figure (hourly median travel times) and the bottom figure (5-minute median travel times), the median travel time estimates appear to be too high in the early morning hours, when there are few vehicles to sample.
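The simple time-period median, followed by exponential smoothing that weights larger samples more heavily, can be sketched as follows. The weighting w_j = n_j / (n_j + n0) and the constant n0 are hypothetical choices made for this sketch, not values from the text.

```python
import statistics
from collections import defaultdict
from typing import Dict, List, Tuple


def period_medians(obs: List[Tuple[float, float]],
                   period_min: float) -> Dict[int, Tuple[float, int]]:
    """obs holds (s_i, t_i): departure time in minutes and travel time.
    Returns {period index j: (median travel time T_j, sample size n_j)}."""
    bins: Dict[int, List[float]] = defaultdict(list)
    for s, t in obs:
        bins[int(s // period_min)].append(t)
    return {j: (statistics.median(ts), len(ts)) for j, ts in sorted(bins.items())}


def smooth(medians: Dict[int, Tuple[float, int]], n0: float = 5.0) -> Dict[int, float]:
    """Exponential smoothing over consecutive non-empty periods. The weight on the
    current T_j, w_j = n_j / (n_j + n0), grows with its sample size n_j."""
    smoothed: Dict[int, float] = {}
    prev = None
    for j, (tj, n) in medians.items():
        w = n / (n + n0)
        prev = tj if prev is None else w * tj + (1 - w) * prev
        smoothed[j] = prev
    return smoothed
```

In a 5-minute period containing travel times of 10, 12, and 30 minutes, the median is 12, so the single outlier has no effect; with only one or two observations in a period, it would.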


The second method is "running-percentile smoothing," a smoothing over the observed scatter plot of the individual (s_i, t_i) pairs. The running pth-percentile travel time estimate with bin width k at time s is given by:

Equation 11-10

$$T_p(s) = p\text{th percentile of the } k \text{ most recent travel times } t_i \text{ at } s$$

When p = 50, the method becomes the running median and can be used to estimate the median travel time for a given time period. As long as more than half of the observed trips do not stop or take a different route, the estimate will not be affected by these outliers. This method requires more computation than the simple median above, but each estimate T_p(s) has a guaranteed sample size of k, and therefore the desired accuracy. It is not affected by outliers or by too small a sample size in a given time period. Therefore, the running median approach is the more desirable of the two alternatives, provided there are enough computational and storage resources.
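Equation 11-10 can be sketched as a sliding window over observations sorted by departure time. Using the nearest-rank percentile definition (one of several common definitions) and emitting estimates only once k observations are available are assumptions of this sketch; re-sorting each window is simple but not the most efficient choice.

```python
import math
from typing import List, Tuple


def percentile_nearest_rank(values: List[float], p: float) -> float:
    """p-th percentile (0 < p <= 100) by the nearest-rank rule."""
    ordered = sorted(values)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def running_percentile(obs: List[Tuple[float, float]], k: int,
                       p: float = 50) -> List[Tuple[float, float]]:
    """For each observation time s (once k observations exist), the p-th percentile
    of the k most recent travel times t_i with s_i <= s, so every estimate uses
    exactly k samples."""
    obs = sorted(obs)
    out = []
    for idx in range(k - 1, len(obs)):
        window = [t for _, t in obs[idx - k + 1: idx + 1]]
        out.append((obs[idx][0], percentile_nearest_rank(window, p)))
    return out
```

With k = 3, a single 40-minute outlier among 10- to 13-minute trips never appears in the running median.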


Estimating Error


The sample sizes of vehicle-based travel times will vary from location to location and hour to hour, which means that the accuracy of each median travel time estimate will vary. The more travel time samples that are collected, the more likely it is that the samples represent the true travel time distribution along the route. An estimate of accuracy can be obtained for a given sample size using standard statistical methods. The recommended way of quantifying the accuracy of a sample is through the standard error, which is proportional to 1/√n, where n is the number of samples collected during the specific time period (for example, 8:00 AM - 8:05 AM). Through this computation, each agency can set an acceptable level of error for travel time estimates to be considered accurate enough for use in reliability calculations.
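A short sketch of the standard-error computation and of the sample size it implies. The median approximation uses the large-sample factor √(π/2), which holds only if travel times are roughly normal within the period; that is an assumption, since travel time distributions are often skewed. Function names are hypothetical.

```python
import math
import statistics
from typing import List


def standard_error_of_mean(samples: List[float]) -> float:
    """s / sqrt(n): sample standard deviation divided by the square root of n."""
    return statistics.stdev(samples) / math.sqrt(len(samples))


def approx_standard_error_of_median(samples: List[float]) -> float:
    """Large-sample approximation under normality: sqrt(pi / 2) * s / sqrt(n)."""
    return math.sqrt(math.pi / 2) * standard_error_of_mean(samples)


def min_samples_for_error(sd: float, max_error: float) -> int:
    """Smallest n such that sd / sqrt(n) <= max_error."""
    return math.ceil((sd / max_error) ** 2)
```

Because the error scales with 1/√n, halving the acceptable error requires four times as many samples: a 2-minute standard deviation needs 16 samples for a 0.5-minute standard error, and 64 for 0.25 minutes.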


VALIDATING TRAVEL TIMES


Agencies need to have confidence in the travel time and reliability data that are computed and reported. As previously discussed, infrastructure-based sensors are likely to be a key source of data for many monitoring systems because of their widespread existing deployment. While they are a convenient resource to leverage, calculating segment and route travel times from the spot speed data they provide introduces potential inaccuracies in the reported travel times. Validating point sensor-based average travel times against average travel times computed from AVI or AVL data is standard practice for small-scale studies, but it is resource-intensive to do comprehensively for all monitored facilities. This section guides agencies in selecting the most important paths on which to validate travel times and in choosing an appropriate validation method.


Selecting Critical Paths


A path can be considered a critical route for travel time validation if it meets any of the following criteria, listed in the recommended order of importance.

Exhibit 11-11

Simple Median Method Applied to FasTrak Data in the San Francisco Bay Area


1. Paths with known travel time variability issues. Since the purpose of the system is to accurately measure and monitor reliability, it is important to ensure that computations for these facilities accurately capture the variability that is known to exist there and needs to be monitored.

2. Paths along arterials that are being monitored with infrastructure-based detection sources. Because traffic control devices influence arterial travel times, these travel times are complicated to estimate from point sources alone. Where arterial travel times are computed from infrastructure-based sources, they should be validated with AVI- or AVL-based estimates.

3. Paths where data are obtained from private sources and the accuracy of the data is uncertain. Where agencies want to increase confidence in purchased private-source data, they can independently verify travel time estimates through validation.

4. Paths that consistently carry high traffic volumes, as these are the locations where unreliable travel times will affect the most users. Since these paths are likely to draw high levels of interest from the traveler user group, it is important that reported measures reflect actual conditions so that the driving public can have confidence in system outputs.

5. Paths that serve key origin-destination pairs, especially those on which a high percentage of trips are time-sensitive. An example is a route from a suburb to a downtown business district, where a high percentage of morning traffic consists of commuters who need to arrive at work on time.


Validating


Practices for validating infrastructure-based travel times with other sources are well understood. This section describes several methods that agencies can deploy, depending on their existing infrastructure and resources.

One option commonly used for validating loop detector travel times is to conduct GPS probe runs on selected routes. If this option is pursued, probe runs should be conducted during both peak and off-peak hours to gauge the accuracy of infrastructure-based travel times under varying levels of congestion. Runs should also be conducted for the same hours of the day over multiple days to ensure that the infrastructure-based estimates accurately capture the day-to-day variability in travel times on critical paths. The downside of this method is that it becomes resource-intensive if agencies want to validate travel times on many paths.

An alternative that reduces the time investment of validation is to deploy AVI sensors at the origins and destinations of key routes to directly measure travel times from a sample of vehicles. One such option is Bluetooth MAC address readers, which register the unique MAC addresses of vehicles carrying Bluetooth-enabled devices. The benefit of this technology is that the readers can easily be moved from one location to another, meaning that the same sensors can be used to validate travel times on multiple routes. This option is feasible in most regions, as the percentage of vehicles with Bluetooth-enabled devices is high and growing. In metropolitan areas that use automated toll collection, another option is to deploy RFID toll tag readers at route origins and destinations. Data from either option can be collected automatically, then filtered and processed by hand for comparison with the estimates produced by the infrastructure-based technologies.
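Once reference travel times from probe runs, Bluetooth readers, or toll tag readers are filtered, the comparison with infrastructure-based estimates can be summarized per matched departure interval. The choice of mean bias and mean absolute percentage error (MAPE) as metrics, the interval labels, and the function name are assumptions of this sketch.

```python
from typing import Dict


def compare_travel_times(estimated: Dict[str, float],
                         reference: Dict[str, float]) -> Dict[str, float]:
    """Compare infrastructure-based estimates with reference (AVI or probe) travel
    times keyed by a shared departure-interval label. Returns the number of matched
    intervals, the mean bias (estimate minus reference), and the MAPE in percent."""
    keys = sorted(estimated.keys() & reference.keys())
    if not keys:
        raise ValueError("no overlapping intervals to compare")
    bias = sum(estimated[k] - reference[k] for k in keys) / len(keys)
    mape = 100 * sum(abs(estimated[k] - reference[k]) / reference[k]
                     for k in keys) / len(keys)
    return {"n": len(keys), "bias_min": bias, "mape_pct": mape}
```

Computing these summaries separately for peak and off-peak intervals, and across days, mirrors the guidance above on testing accuracy under varying congestion and day-to-day variability.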


Further exploratory methods for travel time collection and validation have also been

tested. For example, some studies have tried to use GPS data collected from equipped transit

fleets to validate speeds and travel times computed by loop detectors (1). Others have attempted

to use GPS-equipped Freeway Service Patrol vehicles for the same purpose (8). Both of these

efforts noted that it is not possible to obtain speeds from the probe vehicles at the exact loop

detector locations, making a direct comparison difficult. Results were also complicated by the

fact that transit vehicles generally travel slower than other vehicles, and that Freeway Service

Patrol vehicles make frequent stops. As such, agencies can explore these alternative methods

where they have equipped probe vehicles in place, but should recognize the limitations of using

travel times from specialized vehicle types for validation purposes.


ASSEMBLING TRAVEL TIME DISTRIBUTIONS FOR AVERAGE TRAVEL TIMES


Supply-side travel time reliability measures predicated on average travel times are

computed from historically archived average travel times. To connect this step with upstream

system tasks, generating any reliability measure requires first assembling the average travel

times stored in a database into average travel time distribution profiles. To support reliability

analysis, probability density functions for average segment or route travel times must reflect the

time-dependent nature of travel time variability (for example, average travel times are usually

more variable during peak hours than during the middle of the night). As such, the system must

be capable of assembling distributions for average travel times for each time of day, for specified

days of the week. This capability is fully supported by the database structure outlined in Chapter

10 which, at the finest granularity, stores average travel times for each 5-minute departure time

and day of the week. The details of the message exchanges and database queries that will support

the dissemination of reliability information to other systems will be more fully discussed in the

Reporting Reliability subsection. For now, however, the example below illustrates a possible

information request and how the system assembles the distribution to respond.

Question:

What was the 95th percentile average travel time along a route on weekdays for trips that departed at 5:00 PM in 2009?

Steps:

1. Retrieve the average travel times for the route of interest for a 5:00 PM "departure" (trip commencement) on every non-holiday Monday, Tuesday, Wednesday, Thursday, and Friday in 2009, computing them from the 5-minute average travel times of the segments that make up the route using Equation 11-9 rather than Equation 11-8.

2. Assemble the travel times into a distribution and calculate the 95th percentile travel time from the data set.

3. Send the 95th percentile travel time to the requesting system.
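The selection and percentile steps of this example can be sketched in memory. The mapping from (date, departure time) to an already computed route travel time stands in for the database query; it, the holiday set, the nearest-rank percentile definition, and the function names are assumptions of this sketch.

```python
import math
from datetime import date, time
from typing import Dict, List, Set, Tuple


def percentile_nearest_rank(values: List[float], p: float) -> float:
    """p-th percentile (0 < p <= 100) by the nearest-rank rule."""
    ordered = sorted(values)
    return ordered[max(1, math.ceil(p * len(ordered) / 100)) - 1]


def weekday_departure_percentile(route_tt: Dict[Tuple[date, time], float], year: int,
                                 departure: time, holidays: Set[date],
                                 p: float = 95) -> float:
    """route_tt maps (date, departure time) to the route travel time, assumed to be
    computed already with Equation 11-9. Selects non-holiday weekdays in `year` at
    `departure` and returns the p-th percentile of those travel times."""
    sample = [tt for (d, dep), tt in route_tt.items()
              if d.year == year and d.weekday() < 5
              and d not in holidays and dep == departure]
    if not sample:
        raise ValueError("no matching departures")
    return percentile_nearest_rank(sample, p)
```

Weekends, listed holidays, other departure times, and other years are excluded before the percentile is taken, matching the first step of the request.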


Conceptualizing Average Travel Time Distributions


Consistent with the material presented in Chapter 7, the average travel time for a given

segment or route is assumed to be an observed value of a continuous random variable (T) that

follows an unknown probability distribution. The probability distribution is specified by the

cumulative distribution function (CDF) F(x) or the probability density function (PDF) f(x),

which are defined respectively by:


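Equation 11-11

$$F(x) = P(T \le x)$$

and

$$f(x) = \frac{dF(x)}{dx}$$

As such, for a given route, the probabilities that a travel time is less than 40 minutes, and that it is between 30 and 50 minutes, can be written respectively as:

Equation 11-12

$$P(T \le 40) = F(40) = \int_{0}^{40} f(x)\,dx$$

and

$$P(30 \le T \le 50) = F(50) - F(30) = \int_{30}^{50} f(x)\,dx$$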

Since the true distributions F and f are not known, they need to be estimated from t_1, …, t_n, the historically archived average travel times assembled over the selected time range for the selected route. Because the average travel time is a continuous random variable, the theoretical probability that exactly the same average travel time will be reported twice is zero. In practice, as long as the monitoring system calculates average travel times with high enough precision (for example, one second), it is unlikely that exactly the same average travel time will be reported twice, even on reliable facilities. Three "empirical PDFs" illustrating the discrete nature of route-specific average travel times are shown in Exhibit 11-12.

Exhibit 11-12

Discrete Average Travel Time Distribution

Exhibit 11-12 shows PeMS average travel times measured during the summer months of

2009 on I-5 North, heading from downtown San Diego to the beach-side suburbs. Figure (a)

shows the distribution of average travel times for 5:30 PM weekday departure times during this

time period, figure (b) shows the distribution of average travel times for 8:00 AM weekday

departure times, and figure (c) illustrates the distribution of average travel times for weekend

5:00 PM departure times. The x-axis shows the average route travel time, with spikes drawn at

each discrete average travel time that was observed during the time period. The y-axis shows the

frequency that each observed average travel time occurred. The fact that all of the spikes are the

same height illustrates that, due to the discussed discreteness of travel times, each observed

average travel time occurred exactly once. We can get a sense of the relative reliability on this

route between the three displayed time periods. The PM period clearly experiences frequent

variability in the average travel time, as there is a large range in possible average travel times.

The weekend distribution also has some high average travel times, but the majority of observed

average travel times are clustered between 22 and 25 minutes. In the AM period, average travel

times are highly clustered around the same value, and thus can be considered more reliable.

Exhibit 11-12 shows the empirical PDF in its rawest form, but a more popular way of visualizing the empirical distribution of average travel times is a histogram, shown in Exhibit 11-13 for the same data as in Exhibit 11-12. In a histogram, average travel time measurements are grouped into bins to give a better idea of the relative likelihood of different average travel times. Here, we can start to see which average travel times are the most likely. In the PM period, for example, average travel times around 28 minutes and 33 minutes occur most frequently, but the significantly higher 37-minute average travel times occur about 10% of the time at 5:00 PM.

Exhibit 11-13

Average Travel Time Probability Density Functions
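Grouping average travel times into bins, as in Exhibit 11-13, can be sketched as below. The 1-minute default bin width, keying bins by their lower edge, and the example values are hypothetical choices.

```python
import math
from collections import Counter
from typing import Dict, List


def histogram(travel_times: List[float], bin_width: float = 1.0,
              density: bool = False) -> Dict[float, float]:
    """Relative frequency per bin, keyed by the bin's lower edge. With density=True,
    frequencies are divided by the bin width so the bars integrate to 1."""
    counts = Counter(math.floor(t / bin_width) * bin_width for t in travel_times)
    n = len(travel_times)
    scale = bin_width if density else 1.0
    return {edge: c / (n * scale) for edge, c in sorted(counts.items())}
```

Wider bins smooth out the single-occurrence spikes of Exhibit 11-12 but can hide distinct modes such as the 28- and 33-minute peaks, so the bin width is a judgment call.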


The plots also show the density curves (in red) formed by the kernel estimation from the

data, whose formula is given by:


Generation of Segment Travel Time Histograms


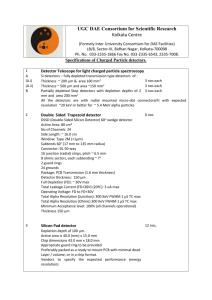

Chapter 7 and Section 11.3.3.1 described methods for determining travel times based on

Bluetooth data. This was done by first identifying vehicle passage times at each of the Bluetooth

readers, then pairing those passage times from the same vehicle at origin and destination

locations. These techniques were developed with the goal of maximizing the validity of the

travel times. However, because of Bluetooth data's susceptibility to erroneous travel time

measurements, even the most careful pairing methodology will still result in trip times (which

could include stops and/or detours) that need to be filtered in order to obtain accurate ground

truth travel times (the actual driving time).

This section of the methodology describes a four-step technique for filtering travel times.

Appendix N describes the application of these techniques to the Lake Tahoe case study and

includes travel time histograms before and after filtering. This section focuses on the low-level

issues associated with obtaining travel time distributions from Bluetooth data.

Equation 11-13

$$\hat{f}(x) = \frac{1}{nh}\sum_{i=1}^{n} K\left(\frac{x - t_i}{h}\right)$$

where K is some kernel function and h is a smoothing parameter called the bandwidth.

Kernel density estimation is a non-parametric way of estimating the unknown PDF f(x) from the

empirical data.
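Equation 11-13 can be sketched directly. The Gaussian kernel and Silverman's rule-of-thumb bandwidth (1.06 s n^(-1/5)) are common defaults assumed here; the text leaves both K and h open, and the function names are hypothetical.

```python
import math
import statistics
from typing import Callable, List


def gaussian_kernel(u: float) -> float:
    """Standard normal density, one common choice for K."""
    return math.exp(-0.5 * u * u) / math.sqrt(2 * math.pi)


def silverman_bandwidth(samples: List[float]) -> float:
    """Rule-of-thumb bandwidth h = 1.06 * s * n^(-1/5) (normal reference rule)."""
    return 1.06 * statistics.stdev(samples) * len(samples) ** (-1 / 5)


def kde(samples: List[float], h: float,
        kernel: Callable[[float], float] = gaussian_kernel) -> Callable[[float], float]:
    """Return f_hat(x) = (1 / (n h)) * sum_i K((x - t_i) / h)."""
    n = len(samples)

    def f_hat(x: float) -> float:
        return sum(kernel((x - t) / h) for t in samples) / (n * h)

    return f_hat
```

A small bandwidth reproduces individual spikes, while a large one blurs distinct modes; the rule of thumb is only a starting point for skewed or multimodal travel time data.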

CDFs express the unknown theoretical probability that the average travel time will be

shorter than or equal to a given average travel time. The most straightforward way to estimate

the unknown CDF is to use the empirical distribution of the data to form the "empirical

cumulative distribution function" (ECDF). The ECDF is a function that puts a weight of 1/n at

each measured average travel time ti. It is calculated as follows:

Equation 11-14

$$\hat{F}_n(x) = \frac{1}{n}\,(\text{number of } t_i\text{'s that are less than or equal to } x)$$

An example ECDF for the average travel time for a 5:00 PM departure time in San Diego is shown in Exhibit 11-14. The x-axis shows the range of possible average travel times, and the y-axis (which ranges from 0 to 1) shows the empirical probability that an average travel time will be less than or equal to a given value. The ECDF is a "step function" with a discrete jump of size 1/n at each observed average travel time t_i.

Exhibit 11-14

Empirical Cumulative Distribution Function (ECDF) for the Average Travel Time

Sample percentiles are easy to determine from the ECDF figures. For example, to find the

sample median average travel time, we can find the point on the curve that corresponds with the

0.5 value on the y-axis (in this case, a travel time of 30.4 minutes). Similarly, to find the sample

95th percentile average travel time, we can find the point on the curve that corresponds to the

0.95 value on the y-axis (in this case, a travel time of 42.2 minutes).
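Reading a percentile off the ECDF, as described above, amounts to finding the smallest observed travel time at which the step function first reaches the target probability p. The sketch below does this directly on the sorted data; the function name and the small floating-point tolerance are illustrative assumptions.

```python
import math
from typing import Sequence


def ecdf_percentile(travel_times: Sequence[float], p: float) -> float:
    """Read the p-th sample percentile off the ECDF: smallest t_i with F_n(t_i) >= p."""
    if not 0 < p <= 1:
        raise ValueError("p must lie in (0, 1]")
    ordered = sorted(travel_times)
    if not ordered:
        raise ValueError("need at least one observed travel time")
    n = len(ordered)
    # The ECDF is at least j/n at the j-th smallest value, so the answer is the value
    # of rank j = ceil(p * n); the tiny tolerance absorbs floating-point error when
    # p * n should be an exact integer.
    j = max(1, math.ceil(p * n - 1e-9))
    return ordered[j - 1]
```

This returns an observed value without interpolating between neighbors, so it can differ slightly from interpolating percentile formulas such as the NIST procedure given later in this chapter.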

The ECDF is an approximation of the true, unknown CDF. Similarly, the sample

percentiles calculated from the ECDF are approximations of the true, unknown population

percentiles.


To begin, the distribution of raw travel times obtained from two different passage time

pairing methods can be seen in Exhibit 11-20. The data presented here as "Method 2" was

developed using the second passage time pairing method described in Section 11.3.3.1. Data

labeled as belonging to "Method 3" was built according to the third passage time pairing method

in that same section. No "Method 1" analysis is included here due to that method's lack of

sophistication. In Exhibit 11-20, the unfiltered travel time distributions appear similar apart from

the quantity of data present. Both distributions have extremely long tails, with most trips lasting

an hour or less and many taking months. It is clear from these figures that even the carefully

constructed "Method 3" data is unusable before filtering.

Exhibit 11-20

Unfiltered Travel Times Between One Pair of Bluetooth Readers from Lake Tahoe Case

Study

Several plans have been developed to filter Bluetooth data. Here, we adopt a four-step

method proposed by Haghani et al. [2]. In Haghani's filtering plan, points are discarded based

on their statistical characteristics, such as coefficient of variation and distance from the mean.

The four data filtering steps are:

1. Filter outliers across days. This step is intended to remove measurements that do not represent an actual trip but rather a data artifact (e.g., the case above of a vehicle being missed one day and detected the next). Here, we group the travel times by day and plot PDFs of the speeds observed in each day (rounded to the nearest integer). To filter the data, thresholds are defined based on the moving average of the distribution of the speeds (with a recommended radius of 4 miles per hour). The low and high thresholds are defined as the minima of the moving average on either side of the modal speed (i.e., the first speed on either side of the mode beyond which the moving average increases with distance from the mode). All speeds below the low threshold or above the high threshold are discarded.

2. Filter outliers across time intervals. For the remaining Steps 2-4, time intervals shorter than one full day are considered (we use both 5-minute and 30-minute intervals). In this step, speed observations outside the range mean ± 1.5σ within an interval are thrown out, where the mean and the standard deviation σ are computed from the measurements within that interval.

3. Remove intervals with few observations. Haghani determines the minimum number of observations in a time interval required to effectively estimate ground truth speeds, based on the minimum detectable traffic volume and the length of the interval. Accordingly, intervals with fewer than 3 measurements per 5-minute interval (or 18 measurements per 30-minute interval) were discarded.

4. Remove highly variable intervals. In Step 4, the variability among speed observations is kept to a reasonable level by throwing out all measurements from time intervals whose coefficient of variation (COV) is greater than 1.
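The four filtering steps can be sketched in Python as follows. This is an illustrative reading of the text, not Haghani's implementation. Several details are assumptions: speeds (mph) are taken to be already derived from the travel times and a known segment length; the "modal speed" is taken as the peak of the smoothed (moving-average) histogram; the sample standard deviation is used in Steps 2 and 4; and all function names are hypothetical.

```python
import statistics
from collections import Counter, defaultdict
from typing import Dict, List, Sequence, Tuple


def day_thresholds(speeds: Sequence[float], radius: int = 4) -> Tuple[int, int]:
    """Step 1: low/high thresholds (mph) from one day's speed distribution."""
    if not speeds:
        raise ValueError("need at least one speed observation")
    counts = Counter(round(s) for s in speeds)  # histogram of integer-rounded speeds
    lo_obs, hi_obs = min(counts), max(counts)

    def moving_avg(v: int) -> float:
        # Mean histogram count over the window [v - radius, v + radius]
        return sum(counts[u] for u in range(v - radius, v + radius + 1)) / (2 * radius + 1)

    # Assumption: the "modal speed" is the peak of the smoothed histogram
    mode = max(range(lo_obs, hi_obs + 1), key=moving_avg)
    low = mode
    # Walk away from the mode until the moving average would start to rise again
    while low > lo_obs and moving_avg(low - 1) <= moving_avg(low):
        low -= 1
    high = mode
    while high < hi_obs and moving_avg(high + 1) <= moving_avg(high):
        high += 1
    return low, high


def filter_day(speeds: Sequence[float], radius: int = 4) -> List[float]:
    """Step 1: keep speeds whose rounded value lies within the day's thresholds."""
    low, high = day_thresholds(speeds, radius)
    return [s for s in speeds if low <= round(s) <= high]


def filter_interval(speeds: Sequence[float], min_obs: int,
                    k: float = 1.5, max_cov: float = 1.0) -> List[float]:
    """Steps 2-4 for the speeds in one time interval; [] means the interval is dropped."""
    if len(speeds) < 2:
        return []  # a standard deviation needs at least two observations
    mean, sd = statistics.mean(speeds), statistics.stdev(speeds)
    # Step 2: discard observations outside mean +/- k * sd
    kept = [s for s in speeds if abs(s - mean) <= k * sd]
    # Step 3: drop the interval if too few observations remain
    if len(kept) < max(min_obs, 2):
        return []
    # Step 4: drop the interval if its coefficient of variation exceeds max_cov
    cov = statistics.stdev(kept) / statistics.mean(kept)
    return [] if cov > max_cov else kept


def filter_intervals(observations: Sequence[Tuple[float, float]],
                     interval_s: int = 300, min_obs: int = 3) -> Dict[int, List[float]]:
    """Bucket (timestamp_s, speed_mph) pairs into fixed intervals, then apply Steps 2-4."""
    buckets: Dict[int, List[float]] = defaultdict(list)
    for ts, speed in observations:
        buckets[int(ts // interval_s)].append(speed)
    result: Dict[int, List[float]] = {}
    for idx, speeds in sorted(buckets.items()):
        kept = filter_interval(speeds, min_obs)
        if kept:
            result[idx * interval_s] = kept  # keyed by interval start time (s)
    return result
```

The defaults correspond to 5-minute intervals with at least 3 measurements; for 30-minute intervals one would call `filter_intervals(obs, interval_s=1800, min_obs=18)`, matching the thresholds in Step 3.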

After the data has been filtered, the travel time distributions over the week appear much

more meaningful, as can be seen by comparing Exhibit 11-21 with Exhibit 11-20. The filtered

travel times have lost their unreasonably long values, and in both cases a well-formed

distribution is visible. Note that the earlier comparison of the data sets remains true: both have

similarly shaped distributions, but the data prepared using passage time pairing method 2

contains a greater quantity of data. This is because that data set was larger initially, and also a


larger percentage of points from it survived filtering. Further detail about the application of the

filtering steps described above can be found in Appendix N.


SYSTEM COMPUTATIONS

Exhibit 11-21

Filtered Travel Times (5-minute Intervals)


Thus far, this chapter has focused on useful ways of describing average travel time

distributions and variability, and the time-dependent nature of both. The true quantities (PDF,

CDF, and true population percentiles) have been carefully distinguished from the sample

quantities (empirical PDF, ECDF, histogram, and sample percentiles). This distinction is

important for conceptualization and modeling purposes. For practical purposes, however, the

monitoring system will work mostly with the empirical data, using a number of standard formulas

that can directly compute percentile reliability measures from a set of data points. These

formulas are built into data analysis tools such as Microsoft Excel and MATLAB, making

reliability computations relatively straightforward. For example, the steps for calculating

percentiles recommended by the National Institute of Standards and Technology (NIST) are

as follows (9):

To calculate the sample pth percentile value, $X_p$, from a set of N data points sorted in ascending order, $\{X_1, X_2, X_3, \ldots, X_N\}$:

Write $p(N + 1) = k + d$, where p is between 0 and 1, k is an integer, and d is a fraction with $0 \le d < 1$.

If $0 < k < N$, $X_p = X_k + d\,(X_{k+1} - X_k)$;

If $k = 0$, $X_p = X_1$;

If $k \ge N$, $X_p = X_N$.

There are a number of alternate formulas available, but for reliability monitoring

purposes, they will yield nearly identical results.
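The NIST steps above translate almost line for line into code. The sketch below is a minimal illustration (the function name is hypothetical); the only subtlety is converting the 1-based ranks X_k and X_{k+1} into 0-based list indices.

```python
import math
from typing import Sequence


def nist_percentile(data: Sequence[float], p: float) -> float:
    """Sample p-th percentile X_p via p*(N + 1) = k + d, following the steps above."""
    if not 0 <= p <= 1:
        raise ValueError("p must lie between 0 and 1")
    x = sorted(data)  # X_1 <= X_2 <= ... <= X_N, stored 0-based
    n = len(x)
    if n == 0:
        raise ValueError("need at least one data point")
    rank = p * (n + 1)
    k = math.floor(rank)  # integer part
    d = rank - k          # fractional part, 0 <= d < 1
    if k == 0:
        return x[0]       # X_1
    if k >= n:
        return x[-1]      # X_N
    # 0 < k < N: interpolate between X_k and X_{k+1} (x[k - 1] and x[k] when 0-based)
    return x[k - 1] + d * (x[k] - x[k - 1])
```

As a worked example, with N = 20 observations the 95th percentile has rank 0.95 × 21 = 19.95, so k = 19, d = 0.95, and X_p = X_19 + 0.95(X_20 − X_19). To our understanding, Excel's PERCENTILE.EXC uses the same p(N + 1) rank but returns an error, rather than clamping to X_1 or X_N, when p falls outside [1/(N + 1), N/(N + 1)].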


Error Estimation


When a reliability statistic such as the 95th percentile is calculated from average travel

time data, some errors are introduced. One is the "interpolation error," which occurs because

the formula above interpolates between adjacent data points to calculate the percentile.