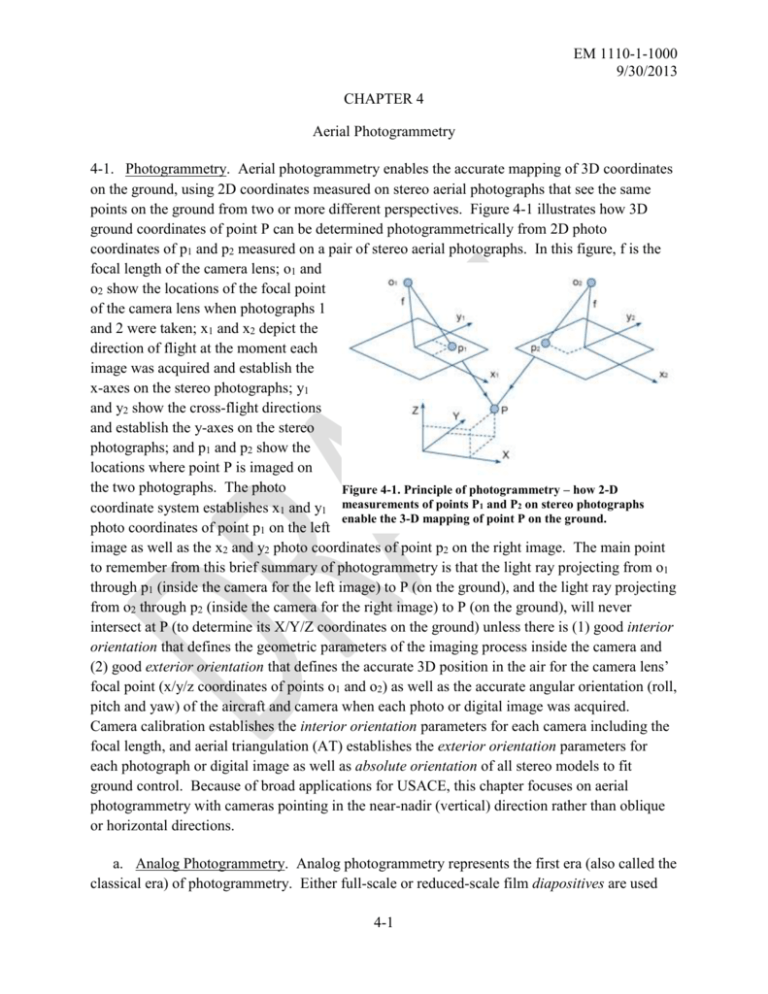

Chapter 4: Aerial Photogrammetry
