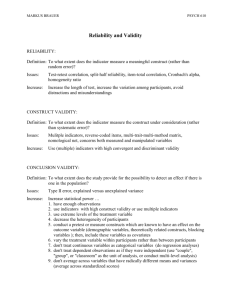

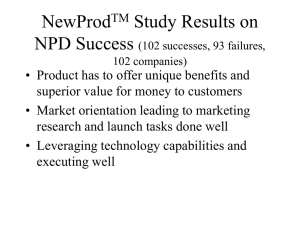

Research & Skills for BA

#1 – Student Projects

There are two research paradigms for student field work in Business or Management: the explanatory paradigm and the design science paradigm. The explanatory paradigm is mostly used in the social sciences, whereas the design science paradigm is used in medical and engineering schools. A project following the explanatory approach (gaining knowledge) aims to produce descriptive and explanatory knowledge, following the empirical cycle. The design science paradigm (finding a solution), on the other hand, aims to produce solutions to field problems and can follow the problem-solving cycle. In a master’s programme, both approaches can be combined. The empirical and problem-solving cycles have some things in common, but may also differ considerably in some respects.

The empirical cycle is observation -> induction (developing theory) -> deduction (generating hypotheses) -> testing -> evaluation. Observation concerns phenomena in the real world and what is written about them; it may lead to the conclusion that several companies deal with an issue that has not yet been worked out in the literature. During the induction step, possible explanations for the issue are developed, which makes it a theory-developing step. In the deduction step, the most promising ideas from the induction step are transformed into hypotheses, which are tested in the testing step through statistics or qualitative approaches. Finally, the evaluation step is used to examine and interpret the outcomes of the testing and may lead to new research questions or a restart of the cycle. The empirical cycle can also be used for non-academic purposes, for example to learn about the needs of customers.

The problem-solving cycle is driven by a business problem of a company, chosen by stakeholders from the problem mess. To formulate the problem, the mess has to be identified and structured, resulting in a problem definition.
The next step is analysis and diagnosis: the analysis of the context and the causes of the problem, after which a solution is designed. In the intervention step the solution is implemented, after which it is evaluated and assessed, which may lead to new business problems.

There are two knowledge-generating processes that can be used for descriptive or explanatory theory (theory development and theory testing), complemented by a third process that is based on the problem-solving cycle:

- Theory development: This process is based on the first part of the empirical cycle and on the work of Eisenhardt. It starts with a business phenomenon, followed by the observation of that phenomenon, the development of an explanation while comparing findings with theories, and the derivation of propositions that change or add to existing theories. This is especially needed when the phenomenon has not been addressed in the literature before or when the study is very exploratory in nature.
- Theory testing: This process is based on the second part of the empirical cycle, following the deduction, testing, and evaluation steps, eventually resulting in a newly tested part of a theory. It starts with a business phenomenon, after which important variables are identified and a model is built. Large-scale data collection then produces results through statistical analysis, followed by implications and directions for future research. Here, literature is less scarce.
- Academic problem solving: This is based on the problem-solving cycle, though addressing a generic type of business problem. The main focus is on selecting the company’s business problem, analysis and diagnosis, data collection and analysis while cross-checking with the literature, finding a solution, and possibly a pilot implementation.

It is dangerous to mix the research processes and to assume that a mixture of two logics is consistent. Mixing theory development with theory testing is wrong for two reasons. First, it is not valid to develop and test theory on the basis of the same data.
Second, a test on the basis of a few cases is not the large-scale test that is meant in the theory-testing process, so it is very difficult to arrive at generalizations in this way. All in all, as a master’s student, choose one of the three processes, select it based on the research question and business phenomenon you want to study, and do not mix different types of knowledge-producing processes.

#2 – Quality Criteria for Research

If a product does not meet its quality criteria, it loses much of its value. Quality criteria can be used in two ways: to manage the quality of one’s own research, and to evaluate the research done by others. Furthermore, quality criteria can be used by the principal or the steering committee to assess the problem-solving project that is done for them.

Problem-solving projects can be evaluated on several criteria, with a distinction between research-oriented criteria and change-oriented criteria. Research-oriented criteria concern the research aspects of problem-solving projects: the statements on the business system researched and its context. They are particularly relevant for diagnosis and evaluation, since these comprise most research activities. Change-oriented criteria are more relevant during problem definition, redesign, and implementation.

There is debate on whether research can ever yield true conclusions. Many authors acknowledge that the central aim of research is to strive for inter-subjective agreement, referring to consensus between the actors. The most important research-oriented quality criteria are controllability, reliability, and validity, which provide the basis for inter-subjective agreement:

- Controllability: this functions as a prerequisite for testing reliability and validity. Researchers have to reveal how they executed a study. This enables others to replicate it and check whether they get the same outcomes, which is also the threshold for this criterion.
In addition, controllability requires that results are presented as precisely as possible.
- Reliability: The results of a study are reliable when they are independent of the particular characteristics of that study and can therefore be replicated in other studies. There are four sources of possible bias: the researcher, the instrument, the respondents, and the situation. Results should thus be independent of these sources. A replication of the study by another researcher, with different instruments, different respondents, and in another situation, should yield similar results. As a result, a common strategy for determining reliability is to repeat the study. Repetition also gives the opportunity to increase reliability, as measurements can be combined.

Researchers and reliability: Results are more reliable when they are independent of the person who has conducted the study. Goldman distinguished between hot and cold biases. Hot biases refer to the influence of the interests, motivation, and emotions of researchers on their results. Cold biases refer to subjective influences of the researchers that have a cognitive origin (e.g., self-fulfilling prophecy). Some instruments, such as interviews, leave more room for biases than others. Having somebody else conduct the study can serve as a check. When objects or events are coded, it is common to have two people do the coding; reliability can then be assessed by determining the correlation between their codes, also called the interrater reliability. Another option is standardization: the development and use of explicit procedures for data collection, analysis, and interpretation (e.g., structured interviews or a case-study protocol).

Instruments and reliability: Multiple techniques may be available for studying the same phenomenon, though outcomes may differ. If one gets different results, these may be complementary or contradictory.
Overall, using multiple research instruments increases reliability through triangulation. Triangulation is the combination of multiple sources of evidence, such as interviews, documents, archives, observations, and surveys; the instruments can remedy each other’s shortcomings and biases by complementing or correcting each other. In interviews or surveys, reliability can be improved by asking a similar question with different wordings. A statistical measure of the correlation between such items (Cronbach’s alpha) shows whether the scales used were reliable.

Respondents and reliability: People within a company can have widely diverging opinions. When the opinion of interviewees serves as a source of information, you want the information to correspond to reality, or at least to obtain an inter-subjective view by combining perspectives. Samples and populations are important here. Three principles should be followed to counter respondent unreliability: (1) all roles, departments, and groups need to be represented among the respondents, (2) in large groups, respondents need to be selected at random, and (3) the general strategy to increase reliability is to increase the number of respondents.

Circumstances and reliability: The timing of the study may influence results (e.g., respondents may be cranky in the morning). Hence, a study should be carried out at different moments in time.

Validity is the third major criterion. A research result is valid when it is justified by the way it is generated. The way it is generated should provide good reasons to believe that the research result is true or adequate. Thus, validity refers to the relationship between a research result or conclusion and the way it has been generated. Validity presupposes reliability, as an unreliable measurement limits our reasons to believe that the results are true. There are three separate types of validity.

Construct validity: the extent to which a measuring instrument measures what it is intended to measure.
The concept should be covered completely, and the measurement should have no components that do not fit the meaning of the concept. Construct validity can be assessed in multiple ways: (1) evaluating the measuring instruments and data-collection techniques yourself, (2) asking experts to evaluate the instruments, and (3) determining the correlation of the operationalization with measurements of other concepts with which it should correlate. Sometimes triangulation is valuable, as it helps to cover all aspects of the concept. Furthermore, construct validity and reliability sometimes pose contradictory demands, as some instruments are more reliable but have a lower construct validity.

Internal validity: this concerns conclusions about the relationship between phenomena. Results are internally valid when conclusions about relationships are justified and complete. To achieve this, researchers have to make sure that there are no plausible competing explanations. Thus, a conclusion is internally valid when such alternative explanations are ruled out. In the problem-solving cycle, internal validity is high when most of the actual causes of the business problem are found. Studying the problem area from multiple perspectives (theoretical triangulation) can facilitate the discovery of all causes. Finally, internal validity can be improved by systematic analysis.

External validity: this refers to the generalization or transfer of research results and conclusions to other people, organizations, countries, and situations, and is especially important in theory-oriented research. It is less important in problem-solving projects, since these projects focus on one specific problem. However, when the study is a pilot study, it is necessary to assess whether the result can also be expected in other departments or organizations. External validity can be increased by increasing the number of objects studied, selected at random or on theoretical grounds.
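
The two reliability statistics mentioned earlier in this section (interrater reliability as the correlation between two coders, and Cronbach’s alpha for a multi-item scale) can be illustrated with a minimal Python sketch. The function names and the example scores are hypothetical and only serve as illustration; the alpha formula used is the standard one, k/(k-1) · (1 − sum of item variances / variance of the total scores).

```python
from statistics import mean, variance


def pearson(x, y):
    """Pearson correlation between two equally long lists of scores."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def cronbach_alpha(items):
    """Cronbach's alpha for a scale.

    items: list of item columns; each column holds one score per respondent.
    """
    k = len(items)
    item_variance_sum = sum(variance(col) for col in items)
    # Total scale score per respondent (sum over all items).
    totals = [sum(scores) for scores in zip(*items)]
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


# Hypothetical data: two coders rating the same six events.
coder_a = [1, 2, 3, 4, 5, 3]
coder_b = [1, 2, 3, 5, 4, 3]
interrater = pearson(coder_a, coder_b)

# Hypothetical data: three similarly worded items answered by five respondents.
scale_items = [
    [3, 4, 5, 2, 4],
    [3, 5, 5, 2, 3],
    [4, 4, 5, 1, 4],
]
alpha = cronbach_alpha(scale_items)

print(f"interrater correlation: {interrater:.2f}")
print(f"Cronbach's alpha: {alpha:.2f}")
```

A high correlation between the coders, or an alpha above a chosen threshold, is only an indication of reliability; as the section notes, it does not by itself establish validity.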
The final criterion concerns the recognition of results: the degree to which the principal, the problem owner, and other organization members recognize the research results in problem-solving projects. If they cannot make sense of the results, it is hard to reach inter-subjective agreement. The recognition of results can be assessed by presenting (intermediate) results to those involved, also called a member check. In addition, using narratives may help in understanding the insider’s perspective.

All in all, it is important to note that a positive assessment of reliability and validity is not a guarantee of truth or adequacy, as some important things may still be overlooked. In addition, not all scientists consider the criteria mentioned in this chapter to be very important. Finally, alternative criteria that are mentioned are fairness, referring to the degree to which the perspectives and values of different parties are acknowledged, and educational authenticity, being the degree to which research contributes to the mutual understanding people have of each other’s world.

#3 – A Multi-Dimensional Framework of Organizational Innovation: A Systematic Review of the Literature

According to management scholars, innovation capability is the most important determinant of firm performance. Though tens of thousands of articles have been written, reviews and meta-analyses are rare and narrowly focused, either around the level of analysis or around the type of innovation. The resulting fragmentation of the field prevents seeing the relations between facets. The goal of this article is to bring together all parts by consolidating research, establishing connections, and identifying gaps between different research streams. There are many different definitions of innovation, which the authors combined into a broad new one. Because of the wide range of meanings, the authors intentionally cast the net wide in order to understand all definitional nuances.
The initial step was a review and categorization of the findings, which were synthesized into a comprehensive multi-dimensional framework of organizational innovation.

On the methodology, an analytical review scheme was necessary for systematically evaluating the contribution of a given body of literature. A systematic review uses an explicit algorithm, as opposed to a heuristic, to perform a search and critical appraisal of the literature. It improves the quality of the review process and outcome by employing a transparent and reproducible procedure (quality criteria!). This was important to overcome the challenges of synthesizing data from different disciplines. The review process comprised three parts:

- Data collection: As opposed to employing subjective collection methodologies, this paper, using the systematic review approach, removed the subjectivity of data collection by using a predefined selection algorithm.
- Data analysis: The goal was to provide a conceptual, rather than an empirical, consolidation. As a result, the authors were methodologically limited to descriptive rather than statistical methods (depth for breadth). As the data was qualitative, pattern-matching and explanation building were selected for the review.
- Data synthesis: This is the primary value-added product of a review, as it produces new knowledge based on thorough data collection and analysis.

The ten dimensions that were found were mapped onto a framework consisting of two sequential components: innovation as a process (how?) and innovation as an outcome (what?). Additionally, the authors compiled the determinants of organizational innovation and their associated measures, and organized them around three theoretical lenses found in organizational research.
All in all, the authors use a systematic review whose main aim is a conceptual consolidation across a fragmented field, using systematic data collection procedures, descriptive and qualitative data analysis techniques, and theoretically grounded synthesis. The authors followed a three-stage procedure:

- Planning: defining objectives and identifying data sources.
- Execution: (1) identifying selection criteria (keywords and search terms), (2) grouping publications, (3) compiling a consideration set, divided into reviews and meta-analyses, highly cited papers, and recent papers, which was done by two panelists, (4) classification and typology of the results, and (5) synthesis.
- Reporting.

They chose to limit their sources to peer-reviewed journals (SSCI), as these can be considered validated knowledge. The analysis proceeded in three steps. First, they reviewed the spectrum of theoretical lenses used in group 2 (highly cited papers), organized them by level of analysis, and summarized the findings. The authors used a framework whereby a set of determinants leads to their phenomenon of interest.

The authors call for a practice-based view (PBV), which can combine the individual, firm, contextual, and process variables prevalent in the literature. The PBV considers the activities that organizational actors conduct (micro level), their consequences for organizational outcomes (macro level), and the feedback loop from contextual and organizational variables back to the actors. Three elements of innovation can be isolated: practice (e.g. shared routines), praxis (theories in use), and practitioners. Much of the research on innovation fits in the category of practice.
It is at this micro level that managerial reality unfolds every day. A theory of innovation therefore needs to connect the action (praxis) with the managerial and academic theories (practice) by understanding the role of the agents (practitioners); this is a direction for future research.

Limitations: (1) the focus was to integrate prior research, so the authors did not offer detailed propositions linking the elements, (2) only one database of record was used, (3) the filtering process may have omitted relevant research, and (4) a complicated framework like this one may highlight previously neglected connections while failing to capture others.

#4 – Success factors in new ventures: a meta-analysis

New technology ventures (NTVs) can have positive effects on employment and could rejuvenate industries with disruptive technologies. However, they have a limited survival rate. Thus, it is important to examine how new technology ventures can survive better. The current academic literature does not offer much insight: numerous studies focus on success factors for new technology ventures, but the empirical results are often controversial and fragmented. The inconsistent and often contradictory results can stem from methodological problems, different study designs, different measurements, omitted variables in the regression models, and noncomparable samples. Hence, this study provides a solution through a meta-analysis.

Meta-analysis is a statistical research integration technique with a quantitative character. It requires statistical techniques specifically designed to integrate the results of a set of primary empirical studies. This allows a meta-analysis to pool all existing literature on a topic and not only the most influential studies. At the same time, meta-analysis compensates for quality differences by correcting for different artifacts and sample sizes. There are two main types of meta-analytic studies in the literature.
The first focuses on relationships between two variables or a change in one variable across different groups of respondents. In general, this type of analysis is strongly guided by one or two theories. The second type examines a large number of metafactors related to one particular focal construct and is largely atheoretical, because the research it combines rests on heterogeneous theoretical grounds. Because this paper focuses on numerous theoretical streams where only the setting (new firms) is the common denominator, the decision was made to focus on the second type of meta-analysis.

The first step is to select studies as input for the analysis. The literature was combed for research that discussed the success factors of NTVs, using the ABI-INFORM system and the internet, based on a group of keywords. After articles were gathered, cross-referenced studies were added from them. Next, an effort was made to ensure that the articles on the list represented the correct level of analysis, significantly reflected NTVs, and reported a correlation matrix with at least one antecedent of performance and one performance measure. When coding the studies, care was taken to refer to the scales reported in the primary studies, so that dissimilar elements would not be combined inappropriately and conceptually similar variables would not be coded separately merely because authors use slightly different labels to refer to similar constructs.

The most important consideration for the meta-analysis was the ability to make comparisons across research studies. To do this, the research could draw on either the Pearson correlations or the regression coefficients between a metafactor and the dependent variable. Correlations are the preferable input, because the correlation between two variables is independent of the other variables in the model.
Furthermore, this method has the advantage that random effects models can be used instead of fixed effects models. Fixed effects models assume that exactly the same true correlation value between metafactor and dependent variable underlies all studies in the meta-analysis, whereas random effects models allow for the possibility that population parameters vary from study to study. Given the range of definitions for NTVs, the choice for random effects was appropriate.

The second step is to correct metafactors for dichotomization, sample size differences, and measurement errors. First, to correct dichotomized metafactors, a conservative correction was made by dividing the observed correlation coefficient of the sample by 0.8, because dichotomization reduces the real correlation coefficient to 0.8 of its value or less. Furthermore, to correct for sampling error, the sample correlation was weighted by sample size. Finally, to remedy measurement errors, Cronbach’s alphas were used: the correlation coefficient was divided by the product of the square root of the reliability of the metafactor and the square root of the reliability of performance.

The third step in the meta-analysis protocol was to determine whether a metafactor was a success factor. To accomplish this, three conditions were assessed. First, the studies should have, in essence, the same correlation, which can be checked through a chi-square test on homogeneity. However, this test seems to be biased because of uncorrected artifacts. For homogeneous metafactors, two significance tests were applied. First, it was determined whether the whole confidence interval was above zero. Second, if it was, the p-value was calculated for the real correlation to estimate the degree of significance. Only when all three conditions held was a given metafactor considered to be a success metafactor for NTVs.
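
The three corrections of the second step can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors’ actual procedure: the function names and the example numbers are hypothetical, and the sketch simply applies the arithmetic described above (division by 0.8, division by the product of the square roots of the two reliabilities, and a sample-size-weighted mean).

```python
from math import sqrt


def correct_dichotomization(r, factor=0.8):
    """Conservative correction for a dichotomized metafactor: r / 0.8."""
    return r / factor


def correct_measurement_error(r, reliability_x, reliability_y):
    """Divide r by sqrt(reliability of metafactor) * sqrt(reliability of performance).

    The reliabilities are assumed to be Cronbach's alphas from the primary study.
    """
    return r / (sqrt(reliability_x) * sqrt(reliability_y))


def weighted_mean_correlation(studies):
    """Sample-size-weighted mean correlation.

    studies: list of (sample_size, correlation) tuples.
    """
    total_n = sum(n for n, _ in studies)
    return sum(n * r for n, r in studies) / total_n


# Hypothetical primary studies: (sample size, observed correlation).
studies = [(100, 0.30), (300, 0.10)]
mean_r = weighted_mean_correlation(studies)  # (100*0.30 + 300*0.10) / 400

# Hypothetical single correlation with alphas of 0.81 and 0.64.
corrected = correct_measurement_error(0.36, 0.81, 0.64)

print(f"weighted mean correlation: {mean_r:.3f}")
print(f"corrected for measurement error: {corrected:.3f}")
```

Note that both corrections enlarge the observed correlation, so with low reliabilities a corrected value can exceed 1; the sketch deliberately does not clamp the result.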
For the heterogeneous metafactors, a moderator analysis was conducted in which the data were divided into subgroups according to methodological characteristics. A separate meta-analysis was conducted for each subgroup. Finally, the ‘file drawer’ was reviewed in an attempt to assess publication bias, which results from a general tendency to publish only significant results.

The analysis revealed 24 metafactors, which were put into categories grounded in the literature to make the results more transparent and interpretable. Two researchers independently assigned each variable to a category. The variance explained by artifacts must be more than 75 percent to yield a homogeneous factor. In that case, the real variance is less than 25 percent of the total variance of the correlations from the primary studies. The remaining variance is likely due to other unknown and uncorrected artifacts and can therefore be neglected. The study’s high-quality scale is either a ratio-interval measure or a Likert-type scale with a Cronbach’s alpha of at least 0.7 that consists of at least three items. The last condition ensures that Likert-type scales will be reliable and that they will still hold a certain reserve for future studies.

An essential implication for future regression studies is that contradictory or insignificant results may be due to the study’s heterogeneous factors. In that case, a detailed study of significant differences in the correlation coefficients between factor and dependent variable for various subsamples may explain the deviating results. The study’s findings suggest that acquiring more experience in marketing and industry may lead to higher NTV performance. Remarkably, R&D investments were not a success factor.

One limitation is that the Pearson correlations used for the study are primarily intended to measure the strength of a linear relationship between two variables.
Furthermore, the primary studies based their samples on surviving NTVs because of the difficulties in assessing failed NTVs. Any study in this area is therefore necessarily biased, with two implications: metafactors that influence the success and the mortality of an NTV could conceivably be substantially different, and strategies that seem to deliver the best performance can be misleading. Finally, the 31 studies used here may have been sufficient for a preliminary meta-analysis, but may not be enough for a full one.

#5 – Organizational theories: Some Criteria for Evaluation

A theory is a statement of relations among concepts within a set of boundary assumptions and constraints. The function of a theory is to prevent the observer from being dazzled by the complexity of natural or concrete events. The purpose is twofold: to organize (parsimoniously) and to communicate (clearly).

What theory is not

Description, meaning the features or qualities of individual things, acts, or events, must be distinguished from theory. The vocabulary of science has two basic functions: to describe the objects and events being investigated, and to establish theories by which events and objects can be explained and predicted. Specifically, three modes of description must be distinguished from theory: categorization of raw data, typologies, and metaphors. A typology can be seen as a mental construct formed by the synthesis of many diffuse individual phenomena, which are arranged into a unified analytical construct. Metaphors are statements that maintain that two phenomena are isomorphic. The metaphor is used to ask how the phenomenon is related to another one.

Researchers can define a theory as a statement of relationships between units observed or approximated in the empirical world. Approximated units are constructs, which by their very nature cannot be observed directly. Observed units are variables, which are operationalized empirically by measurement.
The primary objective of a theory is to answer the questions of how, when, and why, unlike the goal of description, which is to answer the question of what. Thus, a theory may be viewed as a system of constructs and variables in which the constructs are related to each other by propositions and the variables are related to each other by hypotheses.

The system is bounded by the theorist’s assumptions, which set the limitations in applying the theory. These assumptions include the implicit values of the theorist and the often explicit restrictions regarding space and time. Theories cannot be compared on the basis of their underlying values, as these tend to be idiosyncratic products of the theorist’s creative imagination and experience. Values can never be eliminated; they can be revealed through psychoanalytic, historical, and ideological studies of the theorist. Spatial and temporal assumptions are often relatively apparent. Spatial boundaries are conditions restricting the use of the theory to specific units of analysis. Temporal contingencies specify the historical applicability of a theoretical system. Taken together, spatial boundaries and temporal contingencies limit the generalizability of the theory. However, some theories are more bounded by this than others. There is a continuum ranging from empirical generalization (rich in detail, bounded by space and time) to grand theoretical statements (abstract, but unbounded).

Propositions state the relationships among constructs, whereas hypotheses, which are derived from the propositions, specify the relations among variables. Hence, researchers need to use this terminology precisely.

No evaluation of a theory is possible unless there are broad criteria by which it is evaluated. The two primary criteria upon which any theory may be evaluated are falsifiability and utility. Falsifiability determines whether a theory is constructed such that empirical refutation is possible.
Utility refers to the usefulness of theoretical constructs and is the bridge that connects theory and research. At the core of this are explanation and prediction. An explanation establishes the substantive meaning of constructs, variables, and their linkages, while a prediction tests that substantive meaning by comparing it to empirical evidence.

| | Falsifiability | Utility |
|---|---|---|
| Variables | Measurement issues | Variable scope |
| Constructs | Construct validity | Construct scope |
| Relationships | Logical adequacy, empirical adequacy | Explanatory potential, predictive adequacy |

Measurement issues: In order for a variable to be operationally specific, that variable must be defined in terms of its measurement. Furthermore, for a theory to be falsifiable, these operationalized variables must be coherent. That is, they must pass the tests of a good measurement model: validity, noncontinuousness, and reliability. Unless the theorist’s hypotheses incorporate variables which can be meaningfully and correctly measured, any variance that may exist in the object of analysis is essentially unobservable. If the antecedent is a sufficient condition for the consequent, a continuous consequent would make testing impossible. Thus, the theorist is required to specify the time and space parameters in the hypothesis so that the constructs may be meaningfully measured.

Construct validity: It may be useful to define constructs in terms of other established and well-understood constructs. When combined, the indicators of construct and variable falsifiability are no less than the criteria for construct validity itself. To achieve construct validity, at least the responses from alternative measurements of the same construct must share variance (convergent validity), while the identified objects of analysis must not share attributes and must be empirically distinguishable from one another (discriminant validity).
In determining convergent validity, the theorist must confirm that evidence from different sources gathered in different ways all indicates the same or a similar meaning of the construct. In determining discriminant validity, the theorist must confirm that one can empirically differentiate the construct from other constructs that may be similar, and that one can point out what is unrelated to the construct. In cases of high collinearity, it is impossible to talk of independent effects. The key to meeting the falsifiability criterion for constructs thus lies in showing that variables which should be derived from the construct are indeed correlated with the construct, regardless of the procedure used to test correlation, and that variables which should be unrelated to the construct are indeed uncorrelated.

Logical and empirical adequacy: When evaluating the falsifiability of the relational properties of theoretical systems, theorists must examine both the logical adequacy of the propositions and hypotheses and their empirical adequacy (the capacity of the relationships implied in propositions and hypotheses to be operationalized). Logical adequacy may be defined as the implicit or explicit logic embedded in the hypotheses and propositions which ensures that they are capable of being disconfirmed. In this context, propositions and hypotheses must be nontautological, and the nature of the relationship between antecedent and consequent must be specified. For a proposition or hypothesis to be falsifiable, the antecedent and the consequent may not be epiphenomenal. That is, the sheer existence of the antecedent may not automatically imply the existence of the consequent. A tautological proposition, by contrast, is self-verifying and not subject to disconfirmation.
The theorist must incorporate in propositions and hypotheses an explicit statement of whether the antecedent is a necessary and/or sufficient condition for the consequent. By failing to specify the nature of these logical links explicitly, organizational theorists make it impossible for their theories ever to be disproved. Finally, empirical adequacy requires that the propositions and hypotheses can be operationalized in such a manner as to render the theory subject to disconfirmation. There must either be more than one object of analysis, or that object must exist at more than one point in time. In addition, empirical adequacy at the relational level cannot be achieved if the variables do not meet the standards of a good measurement model.

Variable scope: For adequate scope, the variables included in the theoretical system must sufficiently, although parsimoniously, tap the domain of the construct in question, while the construct must, in turn, sufficiently, although parsimoniously, tap the domain of the phenomenon in question. The goal must be to achieve a balance between scope and parsimony.

Explanatory potential and predictive adequacy: Theorists must examine both the substantive and the probabilistic elements of propositions and hypotheses. The explanatory potential of theories can be compared on the basis of (a) the specificity of their assumptions regarding objects of analysis, (b) the specificity of their assumptions regarding determinative relations between antecedent and consequent, and (c) the scope and parsimony of their propositions.

How will theorists decide which theories to include? To answer this question, theorists need a clear understanding of how a given theory fits in with other preexisting and apparently related theories. Two dimensions capture this fit: the connective and the transformational.
Connectivity refers to the ability of a new theory to bridge the gap between two or more different theories, thus explaining something between the domains of previous theories. The theory is said to be transformational if it causes preexisting theories to be reevaluated in a new light.

#6 – Does Strategic Planning Enhance or Impede Innovation and Firm Performance?

Many scholars have debated whether strategic planning enhances or impedes the generation of new product development (NPD) projects. The traditional view claims that strategic planning promotes a careful review of the different options in various business environments and therefore increases the number of NPD projects and enhances firm performance. In contrast, some scholars indicate that improvisation, or an experimental approach, may better increase the number of NPD projects. The purpose of this study is to provide empirical evidence on the debated role of strategic planning by answering two questions: does strategic planning increase or decrease the number of NPD projects? And, if it decreases them, how can a firm manage controllable organizational factors to mitigate the adverse effect of strategic planning?

The article continues by providing a background on strategic planning and the possible influence it has on NPD projects as identified in the literature. Despite the abundance of literature on the importance of either strategic planning or generating new NPD projects, the debate continues about whether and how strategic planning influences the generation of NPD project ideas, largely because of the limited availability of empirical research. To address this unresolved issue, the authors propose a contingency framework that not only explains the direct relationship, but also shows how the identified organizational factors moderate it. The authors select the variables that best represent resource-advantage theory to test whether they facilitate or impede the relationship.
In order to provide a more comprehensive view of the firm, the model also examines the direct relationship between strategic planning and firm performance as well as the moderating effects of resource-advantage variables on this relationship. It also includes product innovativeness and three environmental variables as control variables that are generally believed to influence the outcomes of NPD activities. The authors use a competing hypothesis approach that compares two plausible alternative hypotheses to examine the debatable link. This approach is designed to elicit more objective and reasonable explanations for competing perspectives, and it generalizes the findings by evaluating the pros and cons of the different views. This approach leads to the following hypotheses:

H1: Strategic planning decreases the number of NPD projects.
H2: The effect of strategic planning on the number of NPD projects is stronger in larger firms than in smaller firms (firm size: number of employees).
H3: The effect of strategic planning on the number of NPD projects is stronger in firms with high R&D intensity than in firms with low R&D intensity (R&D intensity: R&D expenditures as a percentage of revenues).
H4: The effect of strategic planning on the number of NPD projects is stronger in firms with high organizational redundancy than in firms with low organizational redundancy (redundancy: overlap of skills and resources, information, activities, and management responsibility).
H5: Strategic planning enhances firm performance.

Four control variables are added: product innovativeness, market turbulence, market growth, and technological turbulence. All study measures were adopted from well-validated measures used in other research. To assess the appropriateness of the measures for this research, the authors conducted in-depth interviews with 22 senior executives from seven organizations in the pre-test stage, after which small adjustments were made.
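To make the idea of a moderating effect (H2 to H4) concrete, the sketch below compares the slope of NPD projects on strategic planning in two subgroups of firms. This subgroup comparison is a deliberate simplification chosen for illustration; these notes do not state which estimation method the authors used. The records, the `SIZE_CUTOFF` threshold, and all names are hypothetical.

```python
def ols_slope(x, y):
    """Slope b of the least-squares line y = a + b * x."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    return sxy / sxx


# Hypothetical SBU records (invented for illustration only):
# (strategic planning score, number of NPD projects, number of employees)
sbus = [
    (1, 9, 120), (2, 8, 150), (3, 8, 90), (4, 7, 200),          # smaller firms
    (1, 12, 5000), (2, 10, 7000), (3, 7, 6500), (4, 5, 8000),   # larger firms
]
SIZE_CUTOFF = 1000  # assumed threshold separating small from large firms


def slope_for(group):
    """Effect of planning on NPD projects within one subgroup."""
    planning = [p for p, _, _ in group]
    projects = [n for _, n, _ in group]
    return ols_slope(planning, projects)


small = [s for s in sbus if s[2] < SIZE_CUTOFF]
large = [s for s in sbus if s[2] >= SIZE_CUTOFF]

slope_small = slope_for(small)
slope_large = slope_for(large)

# Moderation in the sense of H2: the effect differs in strength by firm size.
print(f"slope (small firms) = {slope_small:.2f}")
print(f"slope (large firms) = {slope_large:.2f}")
```

In this toy data the slope is steeper for the larger firms, which is the pattern H2 would predict; a full analysis would more typically estimate an interaction term in a single regression that also includes the control variables.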
The unit of analysis is the strategic business unit (SBU), and all survey respondents were asked to refer to the NPD projects of all NPD teams governed by their own SBU when they filled out the survey. The dependent variable, the number of NPD projects, is measured by the number of NPD projects initiated during the previous 12 months at the SBU level. This measure is adopted to avoid the common method bias that can result from using perceptual measures for variables. In addition, to assess whether NPD projects really matter for NPD outcomes, the authors also collected firm performance data: return on investment (ROI) and subjective overall performance, adopted from Song & Parry, which consists of three performance measures relative to firm objectives. To measure the independent variable, strategic planning, the authors use a well-validated five-item Likert-type scale that captures the extent to which planning reflects a formal decision-making process, the extent to which the strategic plan is strictly implemented, and whether it includes an explicit process for determining specific, long-range objectives and a process for generating alternative strategies. Among the control variables, market turbulence, market growth, and technological turbulence are measured using multi-item scales, also derived from different authors.
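A multi-item Likert scale such as the five-item strategic planning scale is commonly summarized by averaging the items per respondent, and its internal consistency is often checked with Cronbach's alpha. The sketch below shows both steps. Note that Cronbach's alpha is brought in here as a standard reliability statistic; these notes do not say which reliability check the authors reported, and the responses are invented toy values.

```python
from statistics import mean, variance


def composite_scores(responses):
    """Average the item scores of each respondent into one scale score."""
    return [mean(r) for r in responses]


def cronbach_alpha(responses):
    """Cronbach's alpha: k/(k-1) * (1 - sum(item variances) / variance(totals))."""
    k = len(responses[0])
    item_vars = [variance([r[i] for r in responses]) for i in range(k)]
    total_var = variance([sum(r) for r in responses])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical answers of five respondents to a five-item scale (1 to 5).
responses = [
    [5, 4, 5, 4, 5],
    [2, 2, 3, 2, 1],
    [4, 4, 4, 5, 4],
    [1, 2, 1, 1, 2],
    [3, 3, 4, 3, 3],
]

scores = composite_scores(responses)
alpha = cronbach_alpha(responses)

print("composite scores:", scores)
print(f"Cronbach's alpha = {alpha:.2f}")
```

Values of alpha close to 1 indicate that the items move together, which is what one would expect if they all tap the same construct.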