Old E4302

advertisement

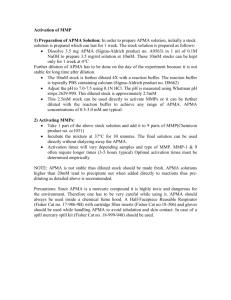

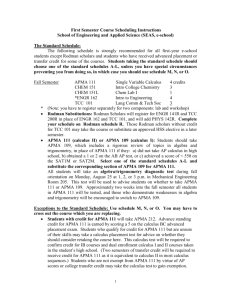

Columbia University | The Fu Foundation School of Engineering and Applied Science AMCS E4302: Parallel Scientific Computing Fall 2009 Lectures: Wednesdays, 6:50 - 9:20 pm Location: 1024 Mudd (note the change to accommodate CVN students; we will NOT be in 415 Schapiro CEPSR) Instructor: Edmond Chow E-mail: <ec2659@columbia.edu> (the best way to reach me) Office Hours: By appointment. You can also catch me after each class. Teaching Assistant: Tianzhi Yang <ty2109@columbia.edu> Office Hours: Thursdays, 2-3 pm, in 287 Engineering Terrace. Also available by appointment. CVN Students: E-mail the instructor or TA to set up any times you want to meet in person or discuss things via phone (skype and vnc may also be possible). You will also receive accounts on the catapult SiCortex machine. E-mail the instructor if you have any questions before you register (otherwise we don't know who you are until you have paid your registration). Course Discussion Group: We have a Google group that you will be invited to join. Please participate by posting questions and answers as well as anything of interest to the class. The TA and instructor will also participate. This is your group! Course Description An introduction to the concepts, the hardware and software environments, and selected algorithms and applications of parallel scientific computing, with an emphasis on tightly coupled computations that are capable of scaling to thousands of processors. Includes high-level descriptions of motivating applications and low-level details of implementation, in order to expose the algorithmic kernels and the shifting balances of computation and communication between them. This is a hands-on course which will develop your skills in using scientific parallel computing in industry or in your research. The content of this course is a mixture of 1) the numerical methods of choice used today in industry and academia for solving large-scale linear systems and partial differential equations, and 2) parallel and high-performance programming for solving problems of science and engineering. These subjects will teach you how to apply parallel computing to your own problems and you will complete a substantial project of your choice involving writing a parallel code (difficulty to be suited to your background and interest) and analysis of its performance. Most of our 2.5-hour lectures will present parallel algorithms and numerical methods in the first half, and topics of parallel computing practice (with occasional live demos) in the second half. Prerequisites Introductory course in numerical methods (e.g., APMA E4300), linear algebra (e.g., APMA E3101), elementary partial differential equations (e.g., APMA 3102), programming ability in C/C++ or some flavor of Fortran, and experience with Unix/Linux. (Assignments and projects will require programming on a Linux cluster.) Concurrent registration with APMA E4301 (Numerical Methods for PDEs) is recommended. Computing Resources We will be using a 72-core SiCortex computer for our assignments and projects. Another 1458core SiCortex computer may also be available for larger projects. Details on using these machines will be given in class. If you have your own parallel computing resources, even if you are set up to program your own GPU, you are free to use these for your project. Topics Overview of large-scale parallel scientific computing applications Parallel computer architecture (including networks and commodity components such as multicore processors and GPUs) Parallel performance evaluation Review of boundary value problems, data structures for sparse matrices, and solving linear systems; parallel sparse matrix-vector product as a prototypical problem Distributed memory parallelism and the Message Passing Interface (MPI) Shared memory parallelism; OpenMP and POSIX Threads programming Parallelism at the chip level; SSE/SIMD and other techniques Parallel languages Mathematical libraries, e.g., BLAS, ScaLAPACK, PetSc Graph partitioning (e.g., METIS) and load balancing Iterative methods for linear systems (Krylov subspace methods including the conjugate gradient method and GMRES) Parallel preconditioning techniques Multigrid methods (geometric multigrid and algebraic multigrid) Domain decomposition methods Parallel molecular dynamics Grading 10% Assignment 1 (warm-up project) 20% Assignment 2 (examples to give you an idea of the programming expectations for assignment 2: implement various algorithms for 1) parallel dense matrix-matrix multiply, 2) parallel sparse-matrix vector product, etc.) 50% Project 20% Take-home Final Calendar/Due Dates All due dates are on Wednesdays. E-mail your assignment to the instructor by midnight on the due date. Lecture 1: Sept. 9 - First class Lecture 2: Sept. 16 Lecture 3: Sept. 23 Lecture 4: Sept. 30 Lecture 5: Oct. 7 - Assignment 1 due (modified due date) Lecture 6: Oct. 14 - Proposals due Lecture 7: Oct. 21 Lecture 8: Oct. 28 - Assignment 2 due (modified due date) Lecture 9: Nov. 4 Lecture 10: Nov. 11 - Progress report due Lecture 11: Nov. 18 - Guest lecturer: Dr. Patrick Miller Lecture 12: Nov. 25 - night before Thanksgiving - we can discuss whether we want to move this class Lecture 13: Dec. 2 Lecture 14: Dec. 9 - Class presentations Dec. 16 - Take-home exam Dec. 23 - Project due References There is no required text for this course. References will be given and added here as needed. Readings from papers will also be suggested. Your project should involve doing some literature research to put your work and ideas in context. There exist several books on parallel computation that you may want to consult on parallel computer architecture, parallel performance evaluation, and parallel programming and algorithms. A choice that has a particularly good scientific computing perspective is Introduction to Parallel Computing, by Ananth Grama, Anshul Gupta, George Karypis, and Vipin Kumar. This book is on overnight reserve at the Engineering Library. SiCortex Programming Guide For MPI, the canonical reference is on the web here. What Every Programmer Should Know About Memory The Landscape of Parallel Computing Research: A View from Berkeley Victor Eijkhout's High Performance Scientific Computing book. The book is a work-inprogress so he would appreciate any of your comments (the link gives the latest version). You will see that he and I (and many others!) have a similar idea of what are the important ideas in parallel scientific computing. For example, chapters 1 and 2 covers sequential and parallel architecture; chapter 5 covers linear algebra including sparse matrices and dense and sparse matrix-vector products. The appendices include brief introductions to programming and Unix. Templates for the Solution of Linear Systems: Building Blocks for Iterative Methods available as a free PDF book. It's probably a bit terse for introductory purposes, but it contains basic ideas (e.g., Jacobi, Gauss-Seidel, SOR) and important references. Tutorial on OpenMP