Coping with NP Hardness


Introduction

Duties

The grade is based on a final exam with open material.

Homework is not mandatory, but it is strongly recommended.

Example – The Traveling Salesman Problem (TSP)

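We have 𝑛 cities {1, … , 𝑛}.

∀𝑖, 𝑗 ∈ {1, … , 𝑛} there is a distance 𝑑𝑖,𝑗.

A tour 𝜃 = ⟨𝜋1, … , 𝜋𝑛⟩ visits the cities in the order of a permutation 𝜋 and then returns to 𝜋1. Its length is

$w(\theta) = \sum_{i=1}^{n-1} d_{\pi_i,\pi_{i+1}} + d_{\pi_n,\pi_1}$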

TSP

Input: 𝑑𝑖,𝑗 , 𝐵

Question: Is there a tour 𝜃 of length 𝑤(𝜃) ≤ 𝐵?

𝑇𝑆𝑃𝑏𝑜𝑢𝑛𝑑 :

Input: 𝑑𝑖,𝑗

Question: Find 𝐵∗ = 𝑤(𝜃∗), the length of a shortest tour.

𝑇𝑆𝑃𝑜𝑝𝑡 :

Input: 𝑑𝑖,𝑗

Question: Find an optimal (shortest) tour 𝜃∗.

𝑇𝑆𝑃𝑎𝑙𝑙 :

Input: 𝑑𝑖,𝑗 ,B

Question: Find all the tours 𝜃 s.t. 𝑤(𝜃) ≤ 𝐵
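To make the four variants concrete, here is a brute-force sketch in Python. It assumes the cities are numbered 0, … , 𝑛 − 1 (rather than 1, … , 𝑛) and that `d` is a list of lists; the function names are illustrative only. It enumerates all (𝑛 − 1)! tours with city 0 fixed, so it is usable only for tiny instances.

```python
from itertools import permutations

def tour_length(d, tour):
    """w(theta): consecutive distances plus the closing edge back to the start."""
    n = len(tour)
    return sum(d[tour[i]][tour[(i + 1) % n]] for i in range(n))

def all_tours(n):
    """Every tour with city 0 fixed as the start (rotations give the same cycle)."""
    for rest in permutations(range(1, n)):
        yield (0,) + rest

def tsp_decision(d, B):   # TSP: is there a tour of length <= B?
    return any(tour_length(d, t) <= B for t in all_tours(len(d)))

def tsp_bound(d):         # TSP_bound: the optimal length B*
    return min(tour_length(d, t) for t in all_tours(len(d)))

def tsp_opt(d):           # TSP_opt: an optimal tour theta*
    return min(all_tours(len(d)), key=lambda t: tour_length(d, t))

def tsp_all(d, B):        # TSP_all: every tour of length <= B
    return [t for t in all_tours(len(d)) if tour_length(d, t) <= B]
```

For the four corners of a unit square (adjacent corners at distance 1, opposite corners at distance 2), `tsp_bound` returns 4 and `tsp_all(d, 4)` returns the two orientations of the perimeter.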

𝑇𝐴(𝑥) = the running time of algorithm 𝐴 on input 𝑥

𝑇𝐴 (𝑛) = max{𝑇𝐴 (𝑥)|𝑙𝑒𝑛𝑔𝑡ℎ(𝑥) = 𝑛}

P consists of all the problems that have a polynomial-time algorithm.

NP consists of all the problems that have a polynomial-time verifier (equivalently, a nondeterministic polynomial-time algorithm).

Formally: NP = {problems 𝜋 | there is a polynomial-time nondeterministic algorithm for deciding 𝜋}

EXP = the class of problems that have an exponential-time algorithm.

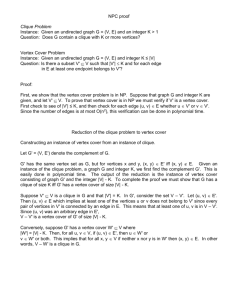

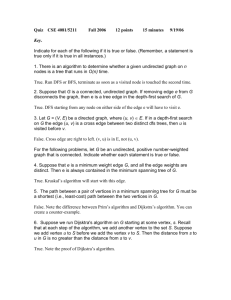

NP-Completeness

A problem 𝜋 is NP-Complete if:

(1) 𝜋 ∈ 𝑁𝑃

(2) ∀𝜋 ′ ∈ 𝑁𝑃. 𝜋′ ∝ 𝜋 (there is a poly-time transformation from 𝜋′ to 𝜋)

TODO: Draw reductions from NP to NP-Complete problems

[Figure: P drawn inside NP; problems 𝜋′, 𝜋′′ ∈ NP reduce to the NP-Complete problem 𝜋.]

Scheme for proving a problem is NP-Complete

To prove that a problem 𝜋 is NP-Complete:

(1) Show that 𝜋 ∈ 𝑁𝑃

(2) Show that 𝜋′ ∝ 𝜋 for some 𝜋′ that is already known to be NP-Complete.

Example – Partition problem

Input: Integers – 𝐶 = {𝑐1 , … , 𝑐𝑛 }

Question: Is there a subset 𝑆 ⊆ {1, … , 𝑛} s.t. $\sum_{i \in S} c_i = \frac{B}{2}$, where $B = \sum_{i=1}^{n} c_i$?

Turing Reduction

𝜋1 ∝𝑇 𝜋2 (𝜋1 is Turing reducible to 𝜋2) if, given a procedure P that solves 𝜋2 instances in constant time, it is possible to devise a polynomial-time algorithm for 𝜋1.

An obvious observation is that:

𝑇𝑆𝑃 ∝𝑇 𝑇𝑆𝑃𝑏𝑜𝑢𝑛𝑑 ∝𝑇 𝑇𝑆𝑃𝑜𝑝𝑡

But what about:

𝑇𝑆𝑃𝑏𝑜𝑢𝑛𝑑 ∝𝑇 𝑇𝑆𝑃 ?

In detail:

Given: A procedure P that gets 𝑑𝑖,𝑗 , 𝐵 and returns Y/N

Devise: An algorithm for 𝑇𝑆𝑃𝑏𝑜𝑢𝑛𝑑

Input: 𝑑𝑖,𝑗

Find: 𝐵∗

We can do it by a binary search for 𝐵∗ in the range [0, 𝑛 ∙ max 𝑑𝑖,𝑗 ].

Note that the sum of the weights is not always polynomial in the input length:

𝑑𝑖,𝑗 = 2^100, 𝑛 = 100

Each number has only 100 bits, but their sum is a number exponential in the size of the input. The binary search is still polynomial: it needs only about log2(𝑛 ∙ max 𝑑𝑖,𝑗) ≈ 107 calls to P, which is polynomial in the number of bits of the input.
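The binary search can be sketched as follows. `brute_force_decide` is a hypothetical, exponential-time stand-in for the procedure P; the point is that the search itself makes only about log2(𝑛 ∙ max 𝑑𝑖,𝑗) oracle calls, even when the distances are as large as 2^100. Cities are numbered 0, … , 𝑛 − 1 and distances are assumed to be non-negative integers.

```python
from itertools import permutations

def brute_force_decide(d, B):
    """Stand-in for the oracle P (exponential time): is there a tour of length <= B?"""
    n = len(d)
    for rest in permutations(range(1, n)):
        t = (0,) + rest
        if sum(d[t[i]][t[(i + 1) % n]] for i in range(n)) <= B:
            return True
    return False

def tsp_bound_via_decision(d, decide):
    """Binary search for B* using only a yes/no oracle.
    Returns (B*, number of oracle calls)."""
    n = len(d)
    lo, hi = 0, n * max(max(row) for row in d)
    calls = 0
    while lo < hi:                # invariant: B* lies in [lo, hi]
        mid = (lo + hi) // 2
        calls += 1
        if decide(d, mid):        # some tour has length <= mid, so B* <= mid
            hi = mid
        else:                     # every tour is longer than mid
            lo = mid + 1
    return lo, calls
```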

What about:

𝑇𝑆𝑃𝑜𝑝𝑡 ∝ 𝑇 𝑇𝑆𝑃𝑏𝑜𝑢𝑛𝑑 ?

K-largest subsets (KLS)

Input: 𝐶 = {𝑐1 , … , 𝑐𝑛 }, 𝐾, 𝐵 (𝑐1 , … , 𝑐𝑛 ∈ ℤ)

𝐶 has $2^n$ subsets $S_1, S_2, \dots, S_{2^n} \subseteq C$. Let $t_i = \sum_{c_j \in S_i} c_j$, and order the subsets so that $t_1 \ge t_2 \ge \dots \ge t_{2^n}$.

Question: Are there at least 𝐾 subsets 𝑆𝑖 of 𝐶 with sum 𝑡𝑖 ≥ 𝐵?

Claim: Partition ∝ 𝑇 KLS

Proof: Assume we are given a procedure 𝑃(𝐶, 𝐾, 𝐵) → 𝑌/𝑁 for 𝐾𝐿𝑆.

First we need a procedure 𝑄(𝐶, 𝐵) returning the index 𝑗 s.t. 𝑡𝑗 ≥ 𝐵 > 𝑡𝑗+1, i.e. the number of subsets whose sum is at least 𝐵.

Answer: Perform a binary search over 𝐾 ∈ [0, 2^𝑛] for the largest 𝐾 with 𝑃(𝐶, 𝐾, 𝐵) = 𝑌. That 𝐾 is exactly the threshold index 𝑗, and the search needs only 𝑂(𝑛) calls to 𝑃.

Now we use the procedure 𝑄 to produce an algorithm for Partition.

𝐶 = {𝑐1 , … , 𝑐𝑛 }

1. $B \leftarrow \frac{1}{2}\sum_{i=1}^{n} c_i$ (if $\sum_i c_i$ is odd, return No)

2. 𝑗1 ← 𝑄(𝐶, 𝐵)

3. 𝑗2 ← 𝑄(𝐶, 𝐵 + 1)

4. If 𝑗1 = 𝑗2 then return No. Else return Yes.
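A sketch of this reduction in Python, assuming integer inputs. `kls_oracle` is a hypothetical brute-force stand-in for 𝑃; `Q` and `partition` follow the steps above, including the check for an odd total.

```python
from itertools import combinations

def kls_oracle(C, K, B):
    """Stand-in for P(C, K, B): are there at least K subsets with sum >= B?"""
    count = sum(1 for r in range(len(C) + 1)
                for S in combinations(range(len(C)), r)
                if sum(C[i] for i in S) >= B)
    return count >= K

def Q(C, B, P=kls_oracle):
    """Largest j with P(C, j, B) = Y, i.e. the number of subsets with sum >= B.
    Binary search over [0, 2^n]: O(n) oracle calls."""
    lo, hi = 0, 2 ** len(C)       # P(C, 0, B) is always Y
    while lo < hi:
        mid = (lo + hi + 1) // 2
        if P(C, mid, B):
            lo = mid
        else:
            hi = mid - 1
    return lo

def partition(C, P=kls_oracle):
    total = sum(C)
    if total % 2:                 # an odd total can never be split evenly
        return False
    B = total // 2
    j1 = Q(C, B, P)               # subsets with sum >= B
    j2 = Q(C, B + 1, P)           # subsets with sum >= B + 1
    return j1 != j2               # they differ iff some subset sums to exactly B
```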

Home Exercise: Prove the reduction is correct.

A harder home exercise: Give a polynomial transformation showing 𝑃𝑎𝑟𝑡𝑖𝑡𝑖𝑜𝑛 ∝ 𝐾𝐿𝑆.

A problem is self-reducible if there is a Turing reduction from its optimization version to its decision version.

An example: 𝑇𝑆𝑃𝑜𝑝𝑡 ∝𝑇 𝑇𝑆𝑃 → 𝑇𝑆𝑃 is self-reducible.

Another example: Clique is self-reducible.

Let us first define Clique:

𝐶𝑙𝑖𝑞𝑢𝑒:

Input: Graph 𝐺, integer 𝑘

Question: Does 𝐺 contain a clique of size ≥ 𝑘?

A clique is a complete subgraph.

Proof: We need to show 𝐶𝐿𝑄𝑜𝑝𝑡 ∝𝑇 𝐶𝐿𝑄𝑑𝑒𝑐.

In other words, given a procedure 𝑃(𝐺, 𝑘) that answers whether 𝐺 has a clique of size 𝑘, we need to devise an algorithm that finds a largest clique in 𝐺.

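Algorithm:

1) Find 𝑘∗, the size of the largest clique in 𝐺, by applying 𝑃(𝐺, 𝑘) for 𝑘 = 1, 2, … until the answer is No.

2) For every 𝑣 ∈ 𝐺 (that is still in 𝐺) do:

a. Set 𝐺′ ← 𝐺 \ {𝑣} (erase 𝑣 and all its edges)

b. 𝑏 ← 𝑃(𝐺′, 𝑘∗)

c. If 𝑏 = 𝑌 then 𝐺 ← 𝐺′ (some 𝑘∗-clique avoids 𝑣); else every 𝑘∗-clique contains 𝑣, so set 𝐺 ← 𝐺({𝑣} ∪ 𝑁(𝑣)), the subgraph induced by 𝑣 and its neighbors.

3) Return the vertices remaining in 𝐺; they form a clique of size 𝑘∗.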
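The self-reduction for Clique can be sketched in Python as below. The graph is assumed to be a dict mapping each vertex to its set of neighbors, and `has_clique` is a hypothetical brute-force stand-in for the decision procedure 𝑃; only the calls to `P` matter for the reduction.

```python
from itertools import combinations

def has_clique(adj, k):
    """Stand-in for the decision oracle P(G, k), by brute force."""
    return any(all(v in adj[u] for u, v in combinations(S, 2))
               for S in combinations(list(adj), k))

def induced(adj, keep):
    """Subgraph induced on the vertex set `keep`."""
    return {u: adj[u] & keep for u in keep}

def max_clique_via_oracle(adj, P=has_clique):
    # Step 1: k* = largest k for which P answers yes
    k_star = 0
    while k_star < len(adj) and P(adj, k_star + 1):
        k_star += 1
    G = dict(adj)
    # Step 2: scan the original vertices once
    for v in list(adj):
        if v not in G:
            continue                      # discarded by an earlier restriction
        G_minus_v = induced(G, set(G) - {v})
        if P(G_minus_v, k_star):
            G = G_minus_v                 # some k*-clique avoids v: drop v
        else:
            G = induced(G, {v} | G[v])    # every k*-clique uses v: keep N[v]
    return set(G)                         # the remaining vertices form a k*-clique
```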

Home exercise 3: Show that dominating set is self reducible.

𝑊 ⊆ 𝑉 in a graph 𝐺 is a dominating set if every 𝑥 ∈ 𝑉\𝑊 has a neighbor in 𝑊.

𝐷𝑂𝑀𝑑𝑒𝑐 :

Input: 𝐺, 𝑘

Question: Does 𝐺 have a dominating set of size ≤ 𝑘?

𝐷𝑂𝑀𝑜𝑝𝑡 :

Input: 𝐺

Question: Find the smallest dominating set.

Pseudo-Polynomial Algorithms

Knapsack Problem

Input: Item types 1, … , 𝑛. Each item type 𝑖 has a size 𝑆𝑖 and a value (profit) 𝐶𝑖 .

We are also given a knapsack of size 𝐾 and a profit target 𝐵.

Question: Are there non-negative integers 𝑥1 , … , 𝑥𝑛 s.t. $\sum_{i=1}^{n} x_i S_i \le K$ and the profit is at least 𝐵, i.e. $\sum_{i=1}^{n} x_i C_i \ge B$?

Uniform Knapsack

A variant in which 𝐾 = 𝐵 and 𝑆𝑖 = 𝐶𝑖 for every 𝑖.

Question: ∃𝑥1 , … , 𝑥𝑛 s.t. $\sum_{i=1}^{n} x_i C_i = K$?

0-1 Knapsack

Same except 𝑥𝑖 ∈ {0,1}

Algorithm for Uniform Knapsack

Stage A

Build a DAG (Directed Acyclic Graph) on 𝑉 = {0, … , 𝑘}

𝐸 = {⟨𝐴, 𝐵⟩ | 0 ≤ 𝐴 < 𝐵 ≤ 𝑘, ∃𝑖. 𝐴 + 𝐶𝑖 = 𝐵}

Example: [Figure: an example DAG for 𝑘 = 8.]

Stage B

Check reachability from 0 to 𝑘 in the DAG.
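Stages A and B together can be sketched as a breadth-first search over the vertices 0, … , 𝑘, generating the edges on the fly instead of storing the DAG (a choice of this sketch, not something the notes prescribe). Item values are assumed to be positive integers; the running time is 𝑂(𝑛𝑘), which is pseudo-polynomial.

```python
from collections import deque

def uniform_knapsack(C, k):
    """Is k reachable from 0 in the DAG with edges a -> a + c (c in C, a + c <= k)?"""
    reachable = [False] * (k + 1)
    reachable[0] = True
    queue = deque([0])
    while queue:
        a = queue.popleft()
        for c in C:
            b = a + c
            if c > 0 and b <= k and not reachable[b]:
                reachable[b] = True
                queue.append(b)
    return reachable[k]
```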

Home exercise 4: Prove that 𝑘 is reachable from 0 ⇔ the answer to Uniform Knapsack on (𝐶̅, 𝑘) is Yes.

max(𝐼) = largest number in the input 𝐼.

𝐴 is a pseudo-polynomial time algorithm for a problem 𝜋 if its running time is bounded by a polynomial in both the length of the input and max(𝐼).

---------- End of lesson 1

Pseudo Polynomial Algorithms

An instance 𝐼 is composed of numbers; length(𝐼) is the length of its encoding, and max(𝐼) = the largest number in 𝐼.

𝐴 is pseudo-polynomial if ∃ a polynomial 𝑝 s.t. ∀𝐼, 𝑇𝐴(𝐼) ≤ 𝑝(length(𝐼), max(𝐼)).

Strong NP-Hardness

For a problem 𝜋, denote by 𝜋𝑞 (𝑞 is some polynomial) the problem restricted to instances 𝐼 in which max(𝐼) ≤ 𝑞(length(𝐼)).

𝜋 is strongly NP-Hard if 𝜋𝑞 is NP-Hard for some polynomial 𝑞.

Fact: If 𝜋 is strongly NP-Hard, it does not have a pseudo-polynomial time algorithm (unless P = NP).

Exercise 1: Prove.

0-1 knapsack

Input: 𝐶 = {𝑐1 , … , 𝑐𝑛 }, 𝑘

Question: Is there a subset 𝑆 ⊆ {1, … , 𝑛} s.t. ∑𝑖∈𝑆 𝑐𝑖 = 𝑘?

Dynamic Programming

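$T(i,b) = \begin{cases} 1 & \exists S \subseteq \{1,\dots,i\} \text{ s.t. } \sum_{j \in S} c_j = b \\ 0 & \text{otherwise} \end{cases}$ for $1 \le i \le n$, $0 \le b \le k$.

The final answer is 𝑇(𝑛, 𝑘).

Process: We start by filling row 𝑖 = 1:

$T(1,b) = \begin{cases} 1 & b = 0 \lor b = c_1 \\ 0 & \text{otherwise} \end{cases}$

Suppose we filled the rows up to 𝑖; now we fill row 𝑖 + 1 (item 𝑖 + 1 is either skipped or taken):

$T(i+1,b) = \begin{cases} 1 & T(i,b) = 1 \lor \left(b \ge c_{i+1} \land T(i, b - c_{i+1}) = 1\right) \\ 0 & \text{otherwise} \end{cases}$

Time: 𝑂(𝑛𝑘). So it is pseudo-polynomial.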
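The table 𝑇(𝑖, 𝑏) translates directly into the following sketch, assuming non-negative integers 𝑐𝑖 and 0-based rows (row 0 here is row 𝑖 = 1 in the text).

```python
def subset_sum(C, k):
    """0-1 knapsack / subset sum by the table T(i, b), filled row by row.
    Time and space O(n * k)."""
    n = len(C)
    if n == 0:
        return k == 0
    T = [[False] * (k + 1) for _ in range(n)]
    # Row i = 1: only b = 0 and b = c_1 are reachable
    T[0][0] = True
    if C[0] <= k:
        T[0][C[0]] = True
    # Row i + 1 from row i: skip item i + 1, or take it
    for i in range(1, n):
        for b in range(k + 1):
            T[i][b] = T[i - 1][b] or (b >= C[i] and T[i - 1][b - C[i]])
    return T[n - 1][k]
```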

𝑻𝑺𝑷𝒐𝒑𝒕

Input: 𝑑𝑖,𝑗

The number of possibilities is $(n-1)! \cdot n = n! \sim \left(\frac{n}{e}\right)^n \sim 2^{O(n \log n)}$.

We want to do it in $O(p(n) \cdot 2^n)$.

𝑀 = {2, … , 𝑛}

𝑇(𝑗, 𝑆) = the length of the shortest path that starts at city 1, visits every city of 𝑆 exactly once, and ends at 𝑗 ∈ 𝑆.

Process of filling 𝑇:

For |𝑆| = 1: 𝑆 = {𝑗}, and 𝑇(𝑗, {𝑗}) = 𝑑1,𝑗 .

For |𝑆| = 𝑙 + 1:

Let 𝑆′ = 𝑆 \ {𝑗} be the set of cities visited between city 1 and 𝑗, so |𝑆′| = 𝑙. The city visited just before 𝑗 is some 𝑘 ∈ 𝑆′, hence

$T(j,S) = \min_{k \in S'} \left\{ T(k, S') + d_{k,j} \right\}$

Final answer: $\min_{j \in M} \left\{ T(j, M) + d_{j,1} \right\}$

We have $2^{n-1}$ different subsets times 𝑛 end cities, and 𝑂(𝑛) operations for each cell.

So in total the running time is $O(2^{n-1} \cdot n^2)$.
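A sketch of this dynamic program (known as the Held–Karp algorithm) in Python. It assumes cities 0, … , 𝑛 − 1 with city 0 playing the role of city 1, and encodes each set 𝑆 as a bitmask; both are choices of this sketch. Distances may be asymmetric.

```python
from itertools import combinations

def held_karp(d):
    """Length of an optimal tour. T[(j, S)] = shortest path from city 0 through
    all of S (a bitmask over cities 1..n-1), ending at j in S."""
    n = len(d)
    if n == 1:
        return 0
    T = {}
    for j in range(1, n):
        T[(j, 1 << j)] = d[0][j]                 # |S| = 1: T(j, {j}) = d_{1,j}
    for size in range(2, n):
        for subset in combinations(range(1, n), size):
            S = 0
            for c in subset:
                S |= 1 << c
            for j in subset:
                S_prev = S & ~(1 << j)           # S' = S \ {j}
                T[(j, S)] = min(T[(k, S_prev)] + d[k][j]
                                for k in subset if k != j)
    M = (1 << n) - 2                             # every city except 0
    return min(T[(j, M)] + d[j][0] for j in range(1, n))
```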

Exercise 2: Find the shortest TSP path (rather than cycle).

TSP Path:

You start at some city 𝜋1 and travel to a city 𝜋𝑛; you may start and end at any city.

Exercise 3: TSP with dependent distances:

Input: 𝑑𝑖,𝑗,𝑘 – the cost/distance of going from 𝑖 to 𝑗 if the city you visited before 𝑖 was 𝑘.

Recursion

3SAT

Input: $\varphi = \bigwedge_{i=1}^{m} c_i$, where $c_i = (u_1^i \lor u_2^i \lor u_3^i)$ and each $u_j^i \in \{x_l, \bar{x}_l\}$

Question: Does 𝜑 have a satisfying truth assignment $f: \{x_1, \dots, x_n\} \to \{T, F\}$ (with $f(\bar{x}_i) = \overline{f(x_i)}$)?

X3SAT

𝑓 is required to satisfy exactly one literal in each clause.

Naïve algorithm: $O(2^n p(m))$

Setting 𝑓(𝑥𝑖) = 𝑇: suppose 𝑥𝑖 appears in some clause 𝐶 = (𝑢𝑖 , 𝑢𝑗 , 𝑢𝑘 ) with 𝑢𝑖 = 𝑥𝑖. Then 𝑓(𝑢𝑗) = 𝑓(𝑢𝑘) = 𝐹 is forced.

If instead 𝑢𝑖 = 𝑥̅𝑖, then 𝑢𝑖 is false, so exactly one of 𝑢𝑗, 𝑢𝑘 must be true: 𝑓(𝑢𝑗) ≠ 𝑓(𝑢𝑘).

Symmetrically for 𝑓(𝑥𝑖) = 𝐹: with 𝐶 = (𝑥𝑖 , 𝑢𝑗 , 𝑢𝑘 ) we get 𝑓(𝑢𝑗) ≠ 𝑓(𝑢𝑘), so we can get rid of the variable of 𝑢𝑘 and replace it by 𝑥𝑗 or 𝑥̅𝑗 as needed.

Canonical form: 𝜑 is in canonical form if each clause contains 3 different variables.

Fact: If 𝜑 is not in canonical form, then in polynomial time it can be transformed into a shorter equivalent formula in canonical form (or found to be unsatisfiable).

Idea: Suppose that 𝜑 is not canonical. Let 𝑐 = (𝑢1 , 𝑢2 , 𝑢3 ) be a clause violating the condition. (The clauses may come from already simplified formulas, not necessarily from the input.)

Suppose 𝑐 = (𝑢1 , 𝑢2 , 𝐹) with 𝑢2 = 𝑥2. Exactly one of 𝑢1, 𝑢2 is true, so 𝑢1 ≡ 𝑢̅2: we can discard the variable of 𝑢1, replacing it by 𝑥2 / 𝑥̅2 as needed.

Clauses with a repeated variable force values or a contradiction:

(𝑥1 , 𝑥1 , 𝑥1 ) → not satisfiable

(𝑥1 , 𝑥1 , 𝑥̅1 ) → 𝑓(𝑥1 ) = 𝐹

⋮

(𝑥1 , 𝑥1 , 𝑥2 ) → 𝑓(𝑥1 ) = 𝐹, 𝑓(𝑥2 ) = 𝑇

Algorithm for X3SAT:

(1) Pick 𝑥𝑖 ∈ 𝜑

(2) 𝑏 ← 𝑇𝐸𝑆𝑇(𝜑, 𝑥𝑖 , 𝑇)

(3) If 𝑏 = 𝑇 then return 𝑇.

(4) Else 𝑏 ← 𝑇𝐸𝑆𝑇(𝜑, 𝑥𝑖 , 𝐹) and return 𝑏.

Procedure 𝑇𝐸𝑆𝑇(𝜑, 𝑥𝑖 , 𝑣)

(1) Set 𝑓(𝑥𝑖 ) ← 𝑣

(2) Canonize 𝜑 → 𝜑′ .

a. If a contradiction was found while canonizing, return 𝐹.

b. If 𝜑′ = ∅ then return 𝑇.

(3) Return 𝑋3𝑆𝐴𝑇(𝜑′).
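The branching algorithm can be sketched as below. This is a simplified stand-in for canonization: it only propagates forced values (two true literals in a clause is a contradiction, one true literal forces the others to be false, and a clause with no true literal and a single open literal forces that literal to be true) and does not replace one variable by another, so it does not by itself achieve the 𝑔(𝑛) bound analyzed next. Literals are encoded DIMACS-style as non-zero integers (𝑣 for 𝑥𝑣, −𝑣 for 𝑥̅𝑣), an assumption of this sketch.

```python
def x3sat(clauses, assignment=None):
    """Exact-3SAT: return an assignment dict satisfying exactly one literal per
    clause, or None if there is none."""
    f = dict(assignment or {})

    def value(lit):
        """True/False if the literal's variable is assigned, else None."""
        if abs(lit) not in f:
            return None
        return f[abs(lit)] == (lit > 0)

    changed = True
    while changed:                      # propagate forced values to a fixpoint
        changed = False
        for clause in clauses:
            vals = [value(l) for l in clause]
            trues, opens = vals.count(True), vals.count(None)
            if trues > 1:
                return None             # two true literals: contradiction
            if trues == 1:
                for l, v in zip(clause, vals):
                    if v is None:       # every other literal must be false
                        f[abs(l)] = l < 0
                        changed = True
            elif opens == 0:
                return None             # no true literal at all
            elif opens == 1:
                l = clause[vals.index(None)]
                f[abs(l)] = l > 0       # the last open literal must be true
                changed = True
    free = {abs(l) for clause in clauses for l in clause} - set(f)
    if not free:
        return f
    x = min(free)
    for b in (True, False):             # TEST(phi, x, T), then TEST(phi, x, F)
        result = x3sat(clauses, {**f, x: b})
        if result is not None:
            return result
    return None
```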

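𝑔(𝑛) = max 𝑇𝐴(𝜑) over formulas 𝜑 with 𝑛 variables.

Case 1: Suppose that there is a clause in which 𝑥𝑖 appears positively, 𝑐 = (𝑥𝑖 , 𝑢2 , 𝑢3 ).

When we assign 𝑓(𝑥𝑖) ← 𝑇, we get 𝑓(𝑢2) = 𝑓(𝑢3) = 𝐹, so 𝜑′ has at least 3 variables less.

When we assign 𝑓(𝑥𝑖) ← 𝐹, the clause becomes (𝐹, 𝑢2 , 𝑢3 ), which forces 𝑢2 ≡ 𝑢̅3, so 𝜑′ has at least 2 variables less.

Case 2: 𝑐 = (𝑥̅𝑖 , 𝑢2 , 𝑢3 ) is symmetric, with the roles of 𝑇 and 𝐹 swapped.

A crude bound: $g(n) \le p(n) + 2\,g(n-2) \Rightarrow g(n) \le 2^{n/2} \cdot \mathrm{poly}(n)$.

A finer bound: $g(n) \le p(n) + g(n-2) + g(n-3)$. Guessing $g(n) \le 2^{\alpha n}$ inductively gives $g(n) \le p(n) + 2^{\alpha(n-2)} + 2^{\alpha(n-3)}$.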
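The base of the exponent in the finer bound can be computed numerically. Ignoring the polynomial term, the inductive step needs $2^{-2\alpha} + 2^{-3\alpha} \le 1$; writing $y = 2^\alpha$ this is $y^3 \ge y + 1$, so the smallest valid 𝛼 comes from the real root of $y^3 = y + 1$. A small bisection sketch (the function name is illustrative):

```python
import math

def branching_base(lo=1.0, hi=2.0, iters=60):
    """Real root of y^3 = y + 1 on [1, 2] by bisection."""
    for _ in range(iters):
        mid = (lo + hi) / 2
        if mid ** 3 < mid + 1:    # y^3 < y + 1: the guess 2^(alpha n) is too small
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

y = branching_base()
alpha = math.log2(y)
```

This gives 𝑦 ≈ 1.3247, i.e. 𝛼 ≈ 0.406, so the finer recurrence yields roughly 1.325^𝑛 (times a polynomial), compared with 2^{𝑛/2} ≈ 1.414^𝑛 from the crude bound.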

2SAT

Clauses 𝑐𝑖 = (𝑢1𝑖 , 𝑢2𝑖 )

-------end of lesson 2

Need to prove: $p(n) + 2^{\alpha(n-2)} + 2^{\alpha(n-3)} \le 2^{\alpha n}$ (up to the polynomial term).

Maximum Independent Set on Planar Graphs

MIS-Planar Algorithm

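1. Find a separator 𝑆: removing 𝑆 splits 𝐺 into two parts 𝐴1, 𝐴2 with no edges between them, |𝐴𝑖| ≤ 2𝑛/3 and |𝑆| = 𝑂(√𝑛) (such a separator exists in every planar graph and can be found in linear time)

2. For every independent set 𝑀0 ⊆ 𝑆:

a. Erase from 𝐴1 and 𝐴2 all vertices adjacent to 𝑀0, along with their edges

b. Apply 𝑀1 ← MIS-Planar(𝐴1)

c. Apply 𝑀2 ← MIS-Planar(𝐴2)

d. 𝑀 ← 𝑀0 ∪ 𝑀1 ∪ 𝑀2

3. Return the largest 𝑀 seen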

Correctness

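Denote by 𝑀∗ a maximum independent set of 𝐺, and split it as 𝑀∗ = 𝑀0∗ ∪ 𝑀1∗ ∪ 𝑀2∗ with 𝑀0∗ = 𝑀∗ ∩ 𝑆 and 𝑀𝑖∗ = 𝑀∗ ∩ 𝐴𝑖.

Consider the iteration in which 𝑀0 = 𝑀0∗. No vertex of 𝑀𝑖∗ is adjacent to 𝑀0∗, so 𝑀𝑖∗ survives the erasure, and the recursive call returns |𝑀𝑖| ≥ |𝑀𝑖∗|. Hence the returned set has size at least |𝑀∗|.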

𝑇(𝑛) = the maximum (worst-case) running time on an 𝑛-vertex graph.

$T(n) \le c_1 n + 2^{O(\sqrt{n})} \left( c_2 n + 2\,T\!\left(\tfrac{2}{3}n\right) \right)$

Guess that $T(n) \le 2^{C\sqrt{n}}$ for a suitable constant 𝐶.

Exercise – Verify!

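A graph is planar if and only if it does not contain 𝐾5 or 𝐾3,3 as a minor.

Therefore a planar graph has no clique of size 5, so the clique problem on planar graphs is easy and is not analogous to IS: the complement of a planar graph is not always planar, so the usual reduction between IS and Clique does not stay within planar graphs.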

0-1 Knapsack

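Input: Integers {𝑎1 , … , 𝑎𝑛 }, target 𝐵

Question: Is there a subset 𝑆 ⊆ {𝑎1 , … , 𝑎𝑛 } such that $\sum_{a_i \in S} a_i = B$?

The pseudo-polynomial algorithm takes 𝑂(𝑛𝐵) time, which can be ~2^𝑛 when 𝐵 is exponential in 𝑛.

Now we will see a $2^{n/2}$ algorithm.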

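Assume 𝑛 is even.

$A_\alpha = \{a_1, \dots, a_{n/2}\}$, $A_\beta = \{a_{n/2+1}, \dots, a_n\}$

$K = 2^{n/2}$

Look at the sorted subset sums of 𝐴𝛼 and 𝐴𝛽: $\alpha_0 \le \alpha_1 \le \dots \le \alpha_{K-1}$ and $\beta_0 \le \beta_1 \le \dots \le \beta_{K-1}$.

A solution has the form $S = S_1 \cup S_2$ with $S_1 \subseteq \{1, \dots, \frac{n}{2}\}$ and $S_2 \subseteq \{\frac{n}{2}+1, \dots, n\}$.

Set 𝑖 = 0, 𝑗 = 𝐾 − 1.

We check whether 𝛼𝑖 + 𝛽𝑗 = 𝐵:

If it is smaller than 𝐵: 𝑖 ← 𝑖 + 1

If it is larger than 𝐵: 𝑗 ← 𝑗 − 1

If it is equal, we are done!

We loop until either 𝑖 or 𝑗 runs past the end of its table.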

Claim: The algorithm is correct.

Proof: We need to show that if the algorithm returns No, then there is no solution.

By contradiction: suppose there is some 𝑆∗ that solves the problem, 𝑆∗ = 𝑆1∗ ∪ 𝑆2∗ with 𝑆1∗ ⊆ 𝐴𝛼 and 𝑆2∗ ⊆ 𝐴𝛽, and let 𝑖∗, 𝑗∗ be the positions of their sums in the tables, so 𝛼𝑖∗ + 𝛽𝑗∗ = 𝐵.

Since the algorithm gave a negative answer, one of the indices ran through its entire table, so at least one of them passed its target position.

Suppose 𝑖 reached 𝑖∗ before 𝑗 reached 𝑗∗ (if at all). As long as 𝑖 = 𝑖∗ and 𝑗 > 𝑗∗,

$\alpha_{i^*} + \beta_j \ge \alpha_{i^*} + \beta_{j^*} = B$,

so the sum is never smaller than 𝐵 and 𝑖 does not move; each step either finds a sum equal to 𝐵 or decreases 𝑗. So eventually 𝑗 reaches 𝑗∗ and the algorithm finds 𝛼𝑖∗ + 𝛽𝑗∗ = 𝐵 – a contradiction. The case where 𝑗 reaches 𝑗∗ first is symmetric.

The time complexity at the moment is $O(n \cdot 2^{n/2})$. The additional 𝑛 factor is due to the initial sorting.

However, we can produce the subset sums already sorted, in linear time: when adding a new item, add it to all the existing subset sums and merge the resulting sorted list with the old one, until we have all the subsets.

We still have that $T \cdot S = O(2^n)$ (time times space).
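The two-table algorithm, including the merge trick for building the sorted subset sums, can be sketched as follows (Python; the function names are illustrative). The split also works for odd 𝑛.

```python
def sorted_subset_sums(items):
    """All 2^len(items) subset sums in increasing order, without a final sort:
    each new item shifts the current sorted list, and the two lists are merged."""
    sums = [0]
    for a in items:
        shifted = [s + a for s in sums]
        merged, i, j = [], 0, 0
        while i < len(sums) and j < len(shifted):
            if sums[i] <= shifted[j]:
                merged.append(sums[i])
                i += 1
            else:
                merged.append(shifted[j])
                j += 1
        merged.extend(sums[i:])
        merged.extend(shifted[j:])
        sums = merged
    return sums

def subset_sum_mitm(items, B):
    half = len(items) // 2
    alpha = sorted_subset_sums(items[:half])     # ascending
    beta = sorted_subset_sums(items[half:])      # ascending
    i, j = 0, len(beta) - 1
    while i < len(alpha) and j >= 0:
        s = alpha[i] + beta[j]
        if s == B:
            return True
        if s < B:
            i += 1          # need a larger sum: advance in alpha
        else:
            j -= 1          # need a smaller sum: step back in beta
    return False
```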

What happens if we divide everything into 𝑘 tables?

$T = O\left(2^{\frac{k-1}{k} n}\right)$, $S = O\left(2^{n/k}\right)$

$A_{\alpha_1} = \{1, \dots, \frac{n}{k}\}$

2nd description:

We still break 𝐴 into two sets, 𝐴𝛼 and 𝐴𝛽:

$A_\alpha = \{1, \dots, \frac{n}{k}\}$

𝐴𝛽 = the rest

We build only the table 𝛼 (sorted).

For every subset 𝑄 ⊆ 𝐴𝛽 do:

$q = \sum_{a_i \in Q} a_i$

𝑟 = 𝐵 − 𝑞

Check if 𝑟 appears in the table 𝛼 (by binary search).

There are $2^{|A_\beta|} = 2^{\frac{k-1}{k} n}$ iterations.

Each iteration takes $O(\frac{n}{k})$ time.

$T = O\left(n \cdot 2^{\frac{k-1}{k} n}\right)$, $S = O\left(2^{n/k}\right)$
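The second description (a single sorted table plus binary search) can be sketched with Python's `bisect` module; the parameter `k` controls the split, and the names are illustrative.

```python
from bisect import bisect_left
from itertools import combinations

def subset_sum_one_table(items, B, k=2):
    """Store only the sorted subset sums of the first n/k items (space 2^(n/k));
    enumerate the subsets Q of the rest and binary-search for r = B - q."""
    m = len(items) // k
    A_alpha, A_beta = items[:m], items[m:]
    alpha = sorted(sum(c) for r in range(m + 1) for c in combinations(A_alpha, r))
    for size in range(len(A_beta) + 1):
        for Q in combinations(A_beta, size):
            r = B - sum(Q)
            pos = bisect_left(alpha, r)
            if pos < len(alpha) and alpha[pos] == r:
                return True
    return False
```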

We would like to show that we can do it in:

$T = O\left(2^{n/2}\right)$, $S = O\left(2^{n/4}\right)$

$K = 2^{n/4}$

Let us divide everything into four parts (each of size 𝑛/4), with subset-sum tables 𝛼, 𝛽, 𝛾, 𝛿. Now let us treat each two parts as a single part of size 𝑛/2. Denote the first "unified" table as 𝛼 + 𝛽.

Implementation of 𝜶 + 𝜷

By a priority queue 𝑃𝑄𝛼+𝛽 storing $O(2^{n/4}) = O(K)$ elements.

Operations: Insert, Extract_min

We need all possible combinations of entries of 𝛼 and 𝛽, so we can imagine a 𝐾 × 𝐾 matrix whose entry (𝑖, 𝑗) is 𝛼𝑖 + 𝛽𝑗, with both tables sorted.

At first we put just the first column (the entries (𝑖, 0) for all 𝑖) in the queue. The value at (0, 0) is the minimal one, so the minimum is already in the queue.

Whenever we extract the value at (𝑖, 𝑗) from the queue, we insert the value to its right, (𝑖, 𝑗 + 1). This yields the sums of 𝛼 + 𝛽 in increasing order while storing only 𝑂(𝐾) elements.

Similarly, we can unite the last two 𝐾-sized lists of subsets (𝛾 + 𝛿), producing their sums in decreasing order; the two streams are then scanned with two pointers, exactly as in the basic algorithm.
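Putting the four-table idea together gives the following sketch of the Schroeppel–Shamir technique. `sums_ascending` is the priority-queue implementation of 𝛼 + 𝛽 described above; the descending stream for the last two tables is obtained here by negating the values, which is a convenience of this sketch. The four small tables are built by plain sorting, and the names are illustrative.

```python
import heapq

def subset_sums_sorted(items):
    """All subset sums of a short list, sorted ascending."""
    sums = [0]
    for a in items:
        sums = sums + [s + a for s in sums]
    return sorted(sums)

def sums_ascending(A, B):
    """Yield A[i] + B[j] over all pairs in ascending order (A, B sorted ascending).
    The heap never holds more than len(A) entries."""
    heap = [(a + B[0], i, 0) for i, a in enumerate(A)]    # the first column
    heapq.heapify(heap)
    while heap:
        s, i, j = heapq.heappop(heap)
        yield s
        if j + 1 < len(B):
            heapq.heappush(heap, (A[i] + B[j + 1], i, j + 1))  # entry to the right

def schroeppel_shamir(items, target):
    q = len(items) // 4
    parts = [items[:q], items[q:2 * q], items[2 * q:3 * q], items[3 * q:]]
    alpha, beta, gamma, delta = (subset_sums_sorted(p) for p in parts)
    low = sums_ascending(alpha, beta)                      # alpha+beta, ascending
    neg_g = sorted(-x for x in gamma)
    neg_d = sorted(-x for x in delta)
    high = (-s for s in sums_ascending(neg_g, neg_d))      # gamma+delta, descending
    x, y = next(low, None), next(high, None)
    while x is not None and y is not None:
        if x + y == target:
            return True
        if x + y < target:
            x = next(low, None)
        else:
            y = next(high, None)
    return False
```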

Exercise: Apply the technique to the following problem:

Exact Hitting set

Input: Universe

𝑈 = {𝑢1 , … , 𝑢𝑛 }

Subsets 𝑆1 , … , 𝑆𝑚 ⊆ 𝑈

Question: Find 𝑅 ⊆ 𝑈 touching each 𝑆𝑖 exactly once

(i.e. |𝑅 ∩ 𝑆𝑖 | = 1∀𝑖)

You may assume that 𝑚 is at most 𝑛.

------ end of lesson 3

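Theorem: If there exists a polynomial-time algorithm for finding a clique of size 𝑘 = log 𝑛 in a given graph of size 𝑛, then there exists an algorithm for finding a clique of arbitrary size 𝑘 in 𝐺 in time $2^{O(\sqrt{k}\log n)} \le 2^{O(\sqrt{n}\log n)}$.

Proof: Suppose we are given a polynomial-time algorithm 𝐴 for finding a clique of size 𝑘, ∀𝑘 ≤ log 𝑛.

Algorithm 𝐵[𝐺, 𝑘] (𝐺 is arbitrary):

1. Construct $\tilde{G} = (\tilde{V}, \tilde{E})$:

$\tilde{V} = \{ W \subseteq V : |W| = \sqrt{k} \}$, $\left[\, |\tilde{V}| = \tilde{n} = \binom{n}{\sqrt{k}} \le 2^{\sqrt{k}\log n} \,\right]$

$\tilde{E} = \{ (W, W') : W \cap W' = \emptyset,\ W \cup W' \text{ is a clique in } G \}$

2. Apply $A[\tilde{G}, \sqrt{k}]$ and return its answer.

Observation 1: $\sqrt{k} \le \log \tilde{n} \approx \sqrt{k}\log n$, so 𝐴 applies and works in time $O(\tilde{n}^c) = 2^{O(\sqrt{k}\log n)}$.

Observation 2: $\tilde{G}$ has a $\sqrt{k}$-clique ⇔ 𝐺 has a 𝑘-clique (split a 𝑘-clique into $\sqrt{k}$ disjoint groups of size $\sqrt{k}$; conversely, take the union of the groups).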

Approximation Algorithms

Optimization problem 𝜋.

Input 𝐼

𝑓∗(𝐼) = value of an optimal solution of 𝐼

A polynomial-time algorithm 𝐴 for 𝜋 returns, on input 𝐼, a solution of value 𝑓𝐴(𝐼).

We are interested in the error measure $\frac{|f_A(I) - f^*(I)|}{f^*(I)}$.

Definition 1: We say that 𝐴 has approximation ratio 𝜌 if for every input 𝐼: $\frac{|f_A(I) - f^*(I)|}{f^*(I)} \le \rho$.

Definition 2: For minimization problems: $\frac{f_A(I)}{f^*(I)} \le \rho$. For maximization problems: $\frac{f^*(I)}{f_A(I)} \le \rho$.

Under Definition 2 we always have 𝜌 ≥ 1, and a good approximation is one with 𝜌 close to 1.

TSP

𝑇𝑆𝑃𝑂𝑃𝑇 – 𝑛 cities 1 … 𝑛, with a distance 𝑑𝑖𝑗 between every two cities.

The goal is to find a cyclic tour that visits all the cities and is shortest (has minimal total distance).

Common Assumptions

(1) Symmetry: 𝑑𝑖𝑗 = 𝑑𝑗𝑖

(2) Triangle Inequality: 𝑑𝑖𝑗 ≤ 𝑑𝑖𝑘 + 𝑑𝑘𝑗

Cannot Approximate without Triangle Inequality

Theorem: If there is a polynomial-time approximation algorithm for 𝑇𝑆𝑃 (without the triangle inequality) with ratio 𝜌 (where 𝜌 may be as large as exp(𝑛)), then 𝑃 = 𝑁𝑃.

Proof: Suppose we have such an approximation algorithm 𝐴 with ratio 𝜌.

We will use it to define a polynomial algorithm 𝐵 for the Hamiltonian cycle problem.

Reminder: The Hamiltonian cycle problem has input 𝐺, and the question is: Is there a cycle that goes through every vertex exactly once?

Algorithm 𝐵 gets an input 𝐺:

Create a 𝑇𝑆𝑃 instance 𝐼 on 𝑛 cities (assuming that 𝐺 has 𝑛 vertices):

$d_{ij} = \begin{cases} 1 & (i,j) \in G \\ \rho n + 2 & \text{otherwise} \end{cases}$

Now run 𝐴 on this instance.

If 𝑓𝐴(𝐼) = 𝑛 return yes. Otherwise return no.

Claim: 𝐺 has a Hamiltonian cycle ⇔ 𝐵 returns yes.

Proof:

𝐺 has no Hamiltonian cycle ⇒ every tour uses at least one non-edge, so 𝑓𝐴(𝐼) > 𝑛 and 𝐵 surely returns no.

𝐺 has a Hamiltonian cycle ⇒ 𝑓∗(𝐼) = 𝑛. Suppose 𝐵 returns "no", i.e. 𝑓𝐴(𝐼) > 𝑛. Then the tour uses at least one non-edge, which means that

$f_A(I) \ge (n-1) + (\rho n + 2) = (\rho + 1)n + 1$

$\frac{f_A(I) - f^*(I)}{f^*(I)} \ge \frac{\rho n + 1}{n} > \rho$ ⇒ 𝐴 does not have approximation ratio 𝜌, a contradiction.

Nearest Neighbor Heuristics

At each step, visit the closest city that has not been visited yet. A greedy procedure.

This is the weakest of the procedures we will describe:

𝜌 ≅ log 𝑛

This 𝜌 probably holds for directed graphs as well.
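The nearest-neighbor heuristic takes only a few lines of Python; cities are assumed to be 0, … , 𝑛 − 1, and ties are broken by the lowest index (both assumptions of this sketch).

```python
def nearest_neighbor_tour(d, start=0):
    """Greedy tour: from the current city, go to the closest unvisited city."""
    n = len(d)
    tour, visited = [start], {start}
    while len(tour) < n:
        last = tour[-1]
        nxt = min((c for c in range(n) if c not in visited),
                  key=lambda c: d[last][c])
        tour.append(nxt)
        visited.add(nxt)
    return tour
```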

Global Nearest Neighbor Heuristics

We maintain a cycle at every moment. At each step we add the city whose insertion yields the shortest cycle (among all the cycles obtained by adding one more city).

For this algorithm 𝜌 = 1 according to Definition 1 and 𝜌 = 2 according to Definition 2.

The Tree Algorithm

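1. Construct a minimum spanning tree 𝑇 for 𝑑𝑖𝑗

2. Construct an Euler tour 𝜃 on 𝑇 with every edge doubled (the tour traverses each tree edge twice).

3. Shortcut the tour 𝜃 to a tour 𝜃′ which visits every city exactly once.

We know 𝑤(𝜃′) ≤ 𝑤(𝜃) (due to the triangle inequality), and 𝑤(𝜃) = 2𝑤(𝑇), since every edge of 𝑇 is traversed twice.

Let 𝜃∗ be a best tour. Removing one edge of 𝜃∗ gives a spanning tree 𝑇𝜃∗, so 𝑤(𝑇) ≤ 𝑤(𝑇𝜃∗) ≤ 𝑤(𝜃∗).

So we know 𝑤(𝜃′) ≤ 2𝑤(𝜃∗).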

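Definition 1: $\rho \le \frac{|w(\theta^*) - w(\theta')|}{w(\theta^*)} \le \frac{2w(\theta^*) - w(\theta^*)}{w(\theta^*)} = 1$

Definition 2: $\rho \le \frac{w(\theta')}{w(\theta^*)} \le \frac{2w(\theta^*)}{w(\theta^*)} = 2$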
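The tree algorithm can be sketched as below. It uses Prim's algorithm for the MST and relies on the fact that shortcutting the Euler tour of the doubled tree gives exactly the preorder of a depth-first traversal of 𝑇. Cities 0, … , 𝑛 − 1 and a symmetric metric `d` are assumed; `tour_cost` is an illustrative helper.

```python
def double_tree_tour(d):
    """Tree algorithm for metric TSP (ratio 2), cities 0..n-1."""
    n = len(d)
    # 1. Minimum spanning tree by Prim's algorithm, stored as child lists
    in_tree = [False] * n
    best = [float("inf")] * n
    parent = [-1] * n
    best[0] = 0
    children = [[] for _ in range(n)]
    for _ in range(n):
        u = min((v for v in range(n) if not in_tree[v]), key=lambda v: best[v])
        in_tree[u] = True
        if parent[u] >= 0:
            children[parent[u]].append(u)
        for v in range(n):
            if not in_tree[v] and d[u][v] < best[v]:
                best[v], parent[v] = d[u][v], u
    # 2-3. Euler tour of the doubled tree, shortcut = DFS preorder of T
    tour, stack = [], [0]
    while stack:
        u = stack.pop()
        tour.append(u)
        stack.extend(reversed(children[u]))
    return tour

def tour_cost(d, tour):
    """Length of the closed tour, including the edge back to the start."""
    return sum(d[tour[i]][tour[(i + 1) % len(tour)]] for i in range(len(tour)))
```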

Tight Example

TODO: Draw tight example

The tight example is a cycle in which every vertex has a single extra edge to its own vertex outside the cycle. The weight of each edge within the cycle is 𝛼 and the weight of every outer edge is 𝛽, with 𝛽 ≪ 𝛼.

$w(\theta') = (n-1)\alpha + 2\beta + (n-1)(\alpha + 2\beta) \approx 2n(\alpha + \beta)$

$w(\theta^*) = n\alpha + n\beta + 2\beta \cdot \frac{n}{2} \approx n(\alpha + 2\beta)$

So the ratio is ≈ 2.

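Christofides

Euler graph: a connected graph in which all degrees are even. A connected graph has an Euler tour if and only if it is an Euler graph.

In any graph, the number of vertices of odd degree is even.

1. Build an MST 𝑇.

2. Select a minimum-weight perfect matching 𝑀 on the set 𝑍 of odd-degree vertices of 𝑇.

3. Compute an Euler tour 𝜃 on 𝑇 ∪ 𝑀 (all degrees are now even).

4. Shortcut 𝜃 into 𝜃′.

We want to show $w(\theta') \le w(T) + w(M) \le 1.5\,w(\theta^*)$.

Since 𝑤(𝑇) ≤ 𝑤(𝜃∗), it remains to prove: $w(M) \le \frac{1}{2} w(\theta^*)$.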

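Observe the optimal tour 𝜃∗.

Let 𝜃̂ be the tour on the vertices of 𝑍 obtained by shortcutting 𝜃∗ (visit 𝑍 in the order of 𝜃∗); by the triangle inequality, 𝑤(𝜃̂) ≤ 𝑤(𝜃∗).

|𝑍| is even, so the alternating edges of 𝜃̂ form two perfect matchings 𝑀1 and 𝑀2 on 𝑍:

𝜃̂ = 𝑀1 ∪ 𝑀2

𝑀 was chosen as the minimum-weight matching on 𝑍, so 𝑤(𝑀) ≤ 𝑤(𝑀1), 𝑤(𝑀2). Therefore

$2w(M) \le w(M_1) + w(M_2) = w(\hat{\theta}) \le w(\theta^*)$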
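A compact sketch of Christofides' algorithm in Python. For brevity the minimum-weight perfect matching is computed by dynamic programming over subsets of 𝑍, which is exponential in |𝑍|; a polynomial implementation would use a matching algorithm such as Edmonds' blossom algorithm instead. The Euler tour uses Hierholzer's algorithm. Cities 0, … , 𝑛 − 1 and a symmetric metric are assumed.

```python
from functools import lru_cache

def christofides(d):
    n = len(d)
    # 1. Minimum spanning tree T (Prim's algorithm)
    in_tree, best, parent = [False] * n, [float("inf")] * n, [-1] * n
    best[0] = 0
    edges = []                                   # multigraph edge list
    for _ in range(n):
        u = min((v for v in range(n) if not in_tree[v]), key=lambda v: best[v])
        in_tree[u] = True
        if parent[u] >= 0:
            edges.append((parent[u], u))
        for v in range(n):
            if not in_tree[v] and d[u][v] < best[v]:
                best[v], parent[v] = d[u][v], u
    # 2. Minimum-weight perfect matching M on the odd-degree vertices Z
    degree = [0] * n
    for a, b in edges:
        degree[a] += 1
        degree[b] += 1
    Z = [v for v in range(n) if degree[v] % 2 == 1]

    @lru_cache(maxsize=None)
    def match(mask):
        """(cost, pairs) of a cheapest perfect matching of the Z-vertices in mask."""
        if mask == 0:
            return 0, ()
        i = (mask & -mask).bit_length() - 1     # lowest remaining vertex
        best_cost, best_pairs = float("inf"), ()
        for j in range(i + 1, len(Z)):
            if mask >> j & 1:
                cost, pairs = match(mask & ~(1 << i) & ~(1 << j))
                cost += d[Z[i]][Z[j]]
                if cost < best_cost:
                    best_cost, best_pairs = cost, pairs + ((Z[i], Z[j]),)
        return best_cost, best_pairs

    edges += match((1 << len(Z)) - 1)[1]
    # 3. Euler tour of T ∪ M (Hierholzer's algorithm; all degrees are even)
    adj = [[] for _ in range(n)]
    for idx, (a, b) in enumerate(edges):
        adj[a].append((b, idx))
        adj[b].append((a, idx))
    used = [False] * len(edges)
    stack, circuit = [0], []
    while stack:
        u = stack[-1]
        while adj[u] and used[adj[u][-1][1]]:
            adj[u].pop()                         # skip edges already walked
        if adj[u]:
            v, idx = adj[u].pop()
            used[idx] = True
            stack.append(v)
        else:
            circuit.append(stack.pop())
    # 4. Shortcut: keep only the first visit of every city
    seen, tour = set(), []
    for v in circuit:
        if v not in seen:
            seen.add(v)
            tour.append(v)
    return tour
```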

Tightness

TODO: Draw the tightness example

----- end of lesson 4

Exercise 1: [Tree algorithm for TSP]

a) Describe a variant of the algorithm for the TSP path problem

b) Show ratio 2

c) Give a tight “bad” example

𝑘-TSP

𝑘 salesmen share an office located at some city, and they can share the load. So we must construct 𝑘 different tours (each starting and ending at the home city) that together traverse all the cities.

The goal is to minimize the longest tour.

Tours: 𝜃1 , … , 𝜃𝑘, and let $|\hat{\theta}| = \max_i |\theta_i|$.

Minimize |𝜃̂|.

Heuristics

1. Compute the shortest "ordinary" tour 𝜃 = ⟨𝑖1 , 𝑖2 , … , 𝑖𝑛 , 𝑖1 ⟩, where 𝑖1 is the home city.

2. Identify breakpoints {𝑖𝑝1 , … , 𝑖𝑝𝑘−1 }.

3. Each agent goes from the home city to the city after a breakpoint, follows 𝜃 up to the next breakpoint, and returns home.

TODO: Draw the k-tour drawings

Denote 𝐿 = |𝜃|

And denote: $d_{\max} = \max_{2 \le i \le n} \{ d_{1i} \}$

Choosing the breakpoints:

$p_j = \max\left\{ 1 \le l \le n : |\theta[1 \to i_l]| \le \frac{j}{k}(L - 2d_{\max}) + d_{\max} \right\}$

Lemma 1: Each 𝜃𝑗 satisfies $|\theta_j| \le \frac{1}{k}(L - 2d_{\max}) + 2d_{\max}$.

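Proof:

For 𝑗 = 1:

Let $\alpha = |\theta[1 \to i_{p_1}]|$ and denote by 𝑒1 the edge from $i_{p_1}$ back to 𝑖1.

By the choice of 𝑝1, $\alpha \le \frac{1}{k}(L - 2d_{\max}) + d_{\max}$, and $|e_1| \le d_{\max}$, so

$|\theta_1| = \alpha + |e_1| \le \frac{1}{k}(L - 2d_{\max}) + 2d_{\max}$

For 1 < 𝑗 < 𝑘:

Denote $\alpha = |\theta[1 \to i_{p_{j-1}}]|$, 𝛽 = the length of the edge from $i_{p_{j-1}}$ to the next city $i_{p_{j-1}+1}$, and $\gamma = |\theta[i_{p_{j-1}+1} \to i_{p_j}]|$.

By the choice of $p_{j-1}$ and $p_j$:

(*) $\alpha + \beta > \frac{j-1}{k}(L - 2d_{\max}) + d_{\max}$

(**) $\alpha + \beta + \gamma \le \frac{j}{k}(L - 2d_{\max}) + d_{\max}$

(**) − (*): $\gamma \le \frac{1}{k}(L - 2d_{\max})$

With 𝑒1 the edge from 𝑖1 to $i_{p_{j-1}+1}$ and 𝑒2 the edge from $i_{p_j}$ back to 𝑖1 (both of length ≤ 𝑑𝑚𝑎𝑥):

$|\theta_j| = \gamma + |e_1| + |e_2| \le \frac{1}{k}(L - 2d_{\max}) + 2d_{\max}$

The only case left is 𝑗 = 𝑘. Left as an exercise.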

Result 2: $|\hat{\theta}| \le \frac{L}{k} + 2\left(1 - \frac{1}{k}\right) d_{\max}$
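The breakpoint rule can be sketched in Python. The tour is given as a list starting at the home city (not repeated at the end); positions are 0-based, so `prefix[l]` is the length of 𝜃 from 𝑖1 to the city at position 𝑙. The name `split_tour` is illustrative, and a symmetric metric is assumed.

```python
def split_tour(d, tour, k):
    """Split one tour into k tours from the home city tour[0] using the
    breakpoint rule p_j. Returns k closed tours (lists starting/ending at home)."""
    n = len(tour)
    home = tour[0]
    L = sum(d[tour[l]][tour[(l + 1) % n]] for l in range(n))
    d_max = max((d[home][c] for c in tour[1:]), default=0)
    prefix = [0.0]                      # prefix[l] = length of theta up to position l
    for l in range(1, n):
        prefix.append(prefix[-1] + d[tour[l - 1]][tour[l]])
    breaks = [0]                        # position of the home city
    for j in range(1, k):
        bound = j / k * (L - 2 * d_max) + d_max
        p = max(l for l in range(n) if prefix[l] <= bound)
        breaks.append(max(p, breaks[-1]))
    breaks.append(n - 1)                # the last agent runs to the end of theta
    return [[home] + tour[breaks[j - 1] + 1: breaks[j] + 1] + [home]
            for j in range(1, k + 1)]
```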

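𝜃̂∗ – the best 𝑘-tour

𝜃∗ – the best tour

Lemma 3: |𝜃∗| ≤ 𝑘|𝜃̂∗|

The 𝑘 tours of 𝜃̂∗ all pass through the home city, so together they form a closed walk through all the cities, which can be shortcut into a tour (triangle inequality): $|\theta^*| \le |\theta_1^* \cup \theta_2^* \cup \dots \cup \theta_k^*| \le k|\hat{\theta}^*|$.

Lemma 4: $|\hat{\theta}^*| \ge 2d_{\max}$ (the agent that visits the farthest city must travel there and back).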

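Lemma 5: If $|\theta| \le r \cdot |\theta^*|$, then $|\hat{\theta}| \le \left(r + 1 - \frac{1}{k}\right) |\hat{\theta}^*|$.

Proof:

$|\hat{\theta}| \le \frac{L}{k} + 2\left(1 - \frac{1}{k}\right) d_{\max} \le r \cdot \frac{|\theta^*|}{k} + 2\left(1 - \frac{1}{k}\right) d_{\max} \le r \cdot \frac{k|\hat{\theta}^*|}{k} + 2\left(1 - \frac{1}{k}\right) \frac{|\hat{\theta}^*|}{2} = r|\hat{\theta}^*| + \left(1 - \frac{1}{k}\right)|\hat{\theta}^*|$

(using Result 2, then Lemma 3 and Lemma 4).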

Bottleneck graph problems

𝑛 vertices, distances 𝑑𝑖𝑗 for 1 ≤ 𝑖, 𝑗 ≤ 𝑛.

The triangle inequality holds.

𝒌-centers problem

𝑘 centers: ℳ ⊆ 𝑉, |ℳ| = 𝑘

𝑀: {1, … , 𝑛} → ℳ assigns every vertex to its nearest center:

∀𝑖, 𝑀(𝑖) satisfies 𝑑𝑖,𝑀(𝑖) ≤ 𝑑𝑖,𝑚 ∀𝑚 ∈ ℳ

$d(\mathcal{M}) = \max_i \{ d_{i,M(i)} \}$

Decision Version:

Input: {𝑑𝑖𝑗 }, 𝑘, 𝑑

Question: Is there ℳ of size 𝑘 such that 𝑑(ℳ) ≤ 𝑑?

𝑘 − 𝐶𝑒𝑛𝑡𝑒𝑟𝑠𝑂𝑃𝑇

Input: {𝑑𝑖𝑗 }, 𝑘

Question: Find ℳ with minimal 𝑑(ℳ)

Feasible solution:

An edge subgraph 𝐻 = (𝑉, 𝐸′) with cost $\max(H) = \max_{(i,j) \in E'} \{ d_{ij} \}$

𝒢 = the collection of feasible subgraphs

Optimal solution: 𝐻 ∈ 𝒢 such that max(𝐻) ≤ max(𝐻′) ∀𝐻′ ∈ 𝒢

For the 𝑘-Centers problem:

𝒢𝑘−𝐶𝑇𝑅 = {𝐻 | 𝐻 consists of 𝑘 stars spanning 𝑉, with the 𝑘 centers as roots}

ℳ = {𝑚1 , … , 𝑚𝑘 }

Algorithm

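$E = \{e_1, e_2, \dots\}$ such that $d_{e_1} \le d_{e_2} \le \dots \le d_{e_{n(n-1)/2}}$

The 𝑖'th bottleneck graph:

𝑏𝑛(𝑖) = (𝑉, {𝑒1 , … , 𝑒𝑖 })

Algorithm A:

For 𝑖 = 1, 2, … do

𝐺𝑖 ← 𝑏𝑛(𝑖)

If 𝐺𝑖 contains an edge subgraph 𝐻 such that 𝐻 ∈ 𝒢 then return 𝐻

Correctness:

If 𝐻 is found in 𝑏𝑛(𝑖) (and none was found in 𝑏𝑛(𝑖 − 1)), then 𝐻 uses 𝑒𝑖, so max(𝐻) = 𝑑𝑒𝑖, and no feasible subgraph is cheaper.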

Power Graphs

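For some 𝑡 ≥ 2 and 𝐺 = (𝑉, 𝐸):

𝐺^𝑡 = (𝑉, 𝐸′) such that 𝐸′ = {(𝑖, 𝑗) | there exists a path of at most 𝑡 edges connecting 𝑖 and 𝑗 in 𝐺}

Claim: max(𝐺^𝑡) ≤ 𝑡 ∙ max(𝐺)

Proof: If (𝑖, 𝑗) ∈ 𝐸′(𝐺^𝑡), there is a path of at most 𝑡 edges 𝑒 from 𝑖 to 𝑗 in 𝐺, each with 𝑑𝑒 ≤ max(𝐺). By the triangle inequality, 𝑑𝑖𝑗 ≤ 𝑡 ∙ max(𝐺).

Deciding the "if" of Algorithm A may itself be hard, so we replace it by a procedure 𝑇𝑒𝑠𝑡(𝐺𝑖 , 𝑡) returning either:

1) Failure (allowed only if 𝐺𝑖 contains no edge subgraph 𝐻 ∈ 𝒢), or

2) An edge subgraph 𝐻 of 𝐺𝑖^𝑡 such that 𝐻 ∈ 𝒢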

Algorithm B:

For 𝑖 = 1,2, … do

𝐺𝑖 ← 𝑏𝑛(𝑖)

𝑥 ← 𝑇𝑒𝑠𝑡(𝐺𝑖 , 𝑡)

If 𝑥 ≠ "Failure" then return 𝑥 and halt

Claim 2: If 𝑇𝑒𝑠𝑡 complies with its specification, then algorithm B has ratio 𝑡.

Proof: Suppose the algorithm stopped on iteration 𝑖. Test returned "Failure" for 1, … , 𝑖 − 1.

This means there is no feasible solution using only 𝑒1 , … , 𝑒𝑖−1, so an optimal solution uses some edge 𝑒𝑙 with 𝑙 ≥ 𝑖 ⇒ 𝑑(ℳ∗) ≥ 𝑑𝑒𝑖

𝐻 ⊆ 𝐺𝑖^𝑡 = 𝑏𝑛(𝑖)^𝑡 ⇒ 𝑑(ℳ𝑎𝑙𝑔) ≤ max(𝐻) ≤ 𝑡 ∙ 𝑑𝑒𝑖 ≤ 𝑡 ∙ 𝑑(ℳ∗) ∎

Implementing test for 𝑘-center

𝑡=2

𝑇𝑒𝑠𝑡(𝐺, 2):

1. Construct 𝐺^2

2. Find a maximal independent set ℳ in 𝐺^2

3. If |ℳ| > 𝑘 then return failure. Else return ℳ

Claim: Test satisfies requirements 1 and 2.

Requirement 2 is easy: ℳ is maximal, so every other vertex is adjacent in 𝐺^2 to some center, i.e. the stars around ℳ use only edges of 𝐺^2.

Requirement 1: Suppose that Test returns failure while there is an edge subgraph 𝐻 in 𝐺 consisting of 𝑘 stars spanning 𝑉.

Since 𝐻 is a collection of 𝑘 stars, squaring it turns each star into a clique, and 𝐻^2 ⊆ 𝐺^2. So 𝑉 is covered by 𝑘 cliques of 𝐺^2. An independent set of 𝐺^2 contains at most one vertex from each clique, so |ℳ| ≤ 𝑘, contradicting the failure.
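Algorithm B with this 𝑇𝑒𝑠𝑡 can be sketched as follows (Python; names illustrative, vertices 0, … , 𝑛 − 1, a symmetric metric `d`, and 𝑘 ≥ 1 assumed). The maximal independent set of 𝐺² is found greedily by blocking every vertex within two hops of a chosen center, so 𝐺² is never built explicitly, and the bottleneck graph is recomputed from scratch at each step for simplicity.

```python
def maximal_is_in_square(adj):
    """Greedy maximal independent set of G^2, with G given by adjacency sets."""
    centers, blocked = [], set()
    for v in range(len(adj)):
        if v in blocked:
            continue
        centers.append(v)
        blocked.add(v)
        for u in adj[v]:            # block every vertex within two hops of v
            blocked.add(u)
            blocked |= adj[u]
    return centers

def k_center(d, k):
    """Add edges in increasing order of length; stop at the first bottleneck
    graph G_i whose square has a maximal independent set of size <= k."""
    n = len(d)
    adj = [set() for _ in range(n)]
    centers = maximal_is_in_square(adj)          # no edges yet
    if len(centers) <= k:
        return centers
    for _, i, j in sorted((d[i][j], i, j) for i in range(n) for j in range(i + 1, n)):
        adj[i].add(j)                            # G_i = bn(i)
        adj[j].add(i)
        centers = maximal_is_in_square(adj)      # Test(G_i, 2)
        if len(centers) <= k:
            return centers
    return centers
```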

Exercise 3: Give a tight “bad example”.

Exercise 4: Prove that the greedy centers algorithm also yields an approximation ratio of 2.

(and give a tight example)

Lower bound:

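If there is a polynomial-time approximation algorithm for 𝑘-centers (with the triangle inequality) with ratio strictly less than 2, then 𝑃 = 𝑁𝑃.

Proof sketch: Given an algorithm with ratio < 2, define a polynomial-time algorithm for dominating set.

Given 𝐺 and 𝑘 (where the question is: Is there a dominating set of size at most 𝑘?):

1. Create an instance of 𝑘-centers by setting 𝑑𝑖𝑗 = 1 if the edge (𝑖, 𝑗) is in the graph and 𝑑𝑖𝑗 = 2 otherwise (this satisfies the triangle inequality).

2. Run the algorithm and answer yes iff the returned solution has cost 1. 𝐺 has a dominating set of size ≤ 𝑘 ⇔ the optimum is 1; since every cost is 1 or 2, an algorithm with ratio below 2 must then return cost 1, and otherwise every solution costs 2.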

Another bottleneck problem:

Bottleneck TSP

Input: {𝑑𝑖𝑗 }

Question: Find a tour 𝜃 = ⟨𝑖1 , 𝑖2 , … , 𝑖𝑛 , 𝑖1 ⟩ such that $\max_l \{ d_{i_l, i_{l+1}} \}$ is minimized.

𝒢 = {𝑡𝑜𝑢𝑟𝑠 𝜃 𝑜𝑛 𝑡ℎ𝑒 𝑐𝑖𝑡𝑖𝑒𝑠}

𝑐𝑜𝑠𝑡 = max(𝜃)

Fact 1: Testing whether a graph 𝐺 is 2-connected takes polynomial time.

Fact 2: If 𝐺 is not 2-connected, then it does not have a Hamiltonian cycle.

Fact 3 (Fleischner): If 𝐺 is 2-connected, then 𝐺^2 has a Hamiltonian cycle (which can be constructed in polynomial time).

--- end of lesson 5

Maximum independent set (MIS)

Input: graph 𝐺 of size 𝑛

Question: Find largest independent set 𝑀∗

Known: No approximation algorithm with ratio $n^{1-\epsilon}$ (unless P = NP).

Best known: ratio $n \cdot \frac{(\log\log n)^2}{\log^3 n}$

Algorithm A (Greedy MIS):

𝑆←∅

While 𝐺 ≠ ∅ do

- Pick lowest degree 𝑣

- 𝑆 ← 𝑆 ∪ {𝑣}

- Erase {𝑣 } ∪ Γ(𝑣) from 𝐺

This is not a good algorithm…
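Algorithm A can be sketched in Python as below. The graph is a dict from each vertex to its set of neighbors (the input is not modified), and ties among minimum-degree vertices go to the first one found, a choice of this sketch.

```python
def greedy_mis(adj):
    """Repeatedly take a minimum-degree vertex v and erase {v} ∪ Γ(v)."""
    G = {v: set(nb) for v, nb in adj.items()}
    S = []
    while G:
        v = min(G, key=lambda u: len(G[u]))
        S.append(v)
        removed = {v} | G[v]
        for u in removed:
            del G[u]
        for u in G:
            G[u] -= removed
    return S
```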

Bad example:

TODO: Draw bad example

Lemma 1: If the maximum degree of 𝐺 is Δ, then 𝐴 will return $|S| \ge \frac{n}{\Delta+1}$.

Proof: Each iteration erases at most Δ + 1 vertices from 𝐺 ⇒ # of iterations $\ge \frac{n}{\Delta+1}$.

And # of iterations = |𝑆|.

Lemma 2: If 𝜒(𝐺) = 𝑘 (𝐺 is 𝑘-colorable), then A will find 𝑆 of size $|S| \ge \frac{1}{2}\log_k n$.

(𝐺 has $|M^*| \ge \frac{n}{k}$.)

Proof: 𝐺 has 𝑛 vertices and 𝜒(𝐺) = 𝑘 ⇒ ∃ a color class of size $\ge \frac{n}{k}$ ⇒ the lowest degree in 𝐺 is at most $n - \frac{n}{k}$ ⇒ this iteration will erase at most $n - \frac{n}{k} + 1$ vertices, and the remaining graph is still 𝑘-colorable.

$f(n) \ge 1 + f\left(\frac{n}{k} - 1\right) \Rightarrow f(n) \ge \frac{1}{2}\log_k n$

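Algorithm B (ratio $\frac{n}{\log n}$):

Partition 𝑉 into $V_1, \dots, V_{n/\log n}$, each of size log 𝑛.

For each 𝐺(𝑉𝑖) find an MIS 𝑆𝑖 (by exhaustive search: only $2^{\log n} = n$ subsets)

Return the largest 𝑆𝑖

TODO: Draw partitioning of the best independent set

Some part 𝑉𝑖 contains at least $|M^*| \cdot \frac{\log n}{n}$ vertices of 𝑀∗, so

$|S_B| \ge \frac{|M^*|}{n/\log n}$, i.e. $\frac{|M^*|}{|S_B|} \le \frac{n}{\log n}$

A bad example is the empty graph (no edges): instead of taking all the vertices, we take just one of the parts.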

Getting ratio $\frac{n(\log\log n)^2}{\log^2 n}$

Write $|M^*| = \frac{n}{K}$.

TODO: Draw ranges of 𝐾

Case 1 (algorithm A1):

If 𝐾 ≥ log² 𝑛, then $|M^*| \le \frac{n}{\log^2 n}$.

So we can just return a single vertex.

Case 2 (algorithm A2):

$\frac{\log n}{\log\log n} < K < \log^2 n$

1. $t \leftarrow \frac{\log n}{\log\log n}$

2. Partition 𝑉 into sets $V_1, \dots, V_{n/(Kt)}$ of size 𝐾𝑡

3. In each 𝐺𝑖 = 𝐺(𝑉𝑖), search for an independent set of size 𝑡

4. Return one of the sets found

This is in 𝑃 because the number of candidate sets in each part is

$\binom{Kt}{t} \le (Kt)^t \le (\log^3 n)^{\frac{\log n}{\log\log n}} = \left(2^{3\log\log n}\right)^{\frac{\log n}{\log\log n}} = 2^{3\log n} = n^3$

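Claim: ∃𝑖 such that 𝐺𝑖 contains an IS of size 𝑡

Proof: $|M^*| = \frac{n}{K}$ and there are $\frac{n}{Kt}$ parts, so ∃𝑖 such that

$|M^* \cap V_i| \ge \frac{n/K}{n/(Kt)} = t$

$\rho \le \frac{|M^*|}{|S_{A_2}|} \le \frac{n/K}{t} = \frac{n}{Kt} \le \frac{n}{\left(\frac{\log n}{\log\log n}\right)^2}$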

Case 4: $|M^*| = \frac{n}{K}$, 𝐾 constant.

Handling graphs without large cliques.

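Graphs without triangles:

Algorithm 𝐹[3, 𝐺]:

1. If Δ(𝐺) < √𝑛 (Δ is the maximal degree), then apply the greedy algorithm 𝐴

2. Otherwise:

- Pick a vertex 𝑣 of degree Δ

- Return 𝑆 = Γ(𝑣) (it is independent, since 𝐺 has no triangles)

In the second case |Γ(𝑣)| ≥ √𝑛, and in the first case greedy returns $|S| \ge \frac{n}{\Delta+1} \gtrsim \sqrt{n}$. So 𝐹 always returns |𝑆| ≳ √𝑛.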

Exercise: Show that the following is a bad example:

TODO: Draw bad example

Graphs with no cliques of size ≥ 𝑘:

Algorithm 𝐹[𝑘, 𝐺]:

𝑘 = 2: return 𝑉

𝑘 = 3: return 𝐹[3, 𝐺]

𝑘 > 3:

If $\Delta(G) < n^{1-\frac{1}{k-1}}$ then apply the greedy algorithm 𝐴

Else

- Pick a vertex 𝑣 of maximum degree $d(v) = \Delta \ge n^{1-\frac{1}{k-1}}$

- 𝑆 ← 𝐹(𝑘 − 1, Γ(𝑣)) (Γ(𝑣) has no (𝑘 − 1)-clique)

- Return 𝑆

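What is the ratio of the algorithm?

If $\Delta < n^{1-\frac{1}{k-1}}$, greedy finds $|S| \ge \frac{n}{\Delta+1} \gtrsim \frac{n}{n^{1-\frac{1}{k-1}}} = n^{\frac{1}{k-1}}$.

If $\Delta \ge n^{1-\frac{1}{k-1}}$, then $n' = |\Gamma(v)| \ge n^{1-\frac{1}{k-1}}$.

By the induction hypothesis, 𝐹(𝑘 − 1, Γ(𝑣)) returns $|S| \ge (n')^{\frac{1}{k-2}} \ge \left(n^{1-\frac{1}{k-1}}\right)^{\frac{1}{k-2}} = n^{\frac{1}{k-1}}$.

Claim: 𝐹 returns $|S| \ge c \cdot n^{\frac{1}{k-1}}$.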

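We know $|M^*| = \frac{n}{K}$, 𝐾 constant.

Write $|M^*| = \left(\frac{1}{k} + \epsilon\right) n$ with $k = 2K$, $\epsilon = \frac{1}{k}$ (so that $\frac{1}{k} + \epsilon = \frac{1}{K}$).

Lemma: If $|M^*| = \left(\frac{1}{k} + \epsilon\right) n$, then we can find $|S| \ge (\epsilon n)^{\frac{1}{k-1}}$.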

We will now describe an algorithm called 𝐹𝑟𝑒𝑒𝑘(𝐺):

While 𝐺 has a 𝑘-clique:

- Find a clique of size 𝑘 (polynomial for constant 𝑘)

- Erase it from the graph

Claim: If there is an independent set of size $\left(\frac{1}{k} + \epsilon\right) n$, then 𝐹𝑟𝑒𝑒𝑘(𝐺) will leave us with a graph 𝐺′ without 𝑘-cliques, with |𝑉(𝐺′)| ≥ 𝜖𝑛.

Proof: Suppose there were 𝑥 iterations.

They erased 𝑥𝑘 vertices, and 𝑥𝑘 ≤ 𝑛, so $x \le \frac{n}{k}$.

Each iteration erased at most one 𝑀∗ vertex (a clique and an independent set share at most one vertex).

This means that at the end, 𝐺′ still contains at least $\left(\frac{1}{k} + \epsilon\right) n - x$ vertices of 𝑀∗, and

$\left(\frac{1}{k} + \epsilon\right) n - x \ge \left(\frac{1}{k} + \epsilon\right) n - \frac{n}{k} = \epsilon n$

So |𝑉(𝐺′)| ≥ 𝜖𝑛.

A comment that doesn't help us: 𝐺′ also still has an independent set of size ≥ 𝜖𝑛.

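Algorithm 𝐴4

- Apply 𝐹𝑟𝑒𝑒𝑘(𝐺), obtaining 𝐺′

- Apply 𝐹(𝑘, 𝐺′)

Case 4:

$|M^*| = \frac{n}{K} = \left(\frac{1}{k} + \epsilon\right) n$, with $k = 2K$, $\epsilon = \frac{1}{k}$

⇒ After 𝐹𝑟𝑒𝑒𝑘 we have $|V(G')| \ge \frac{n}{k}$ ⇒ 𝐹 finds $|S| \ge \left(\frac{n}{k}\right)^{\frac{1}{k-1}}$ (up to the constant 𝑐)

$\rho_{A_4} \le \frac{n}{\left(\frac{n}{k}\right)^{\frac{1}{k-1}}} = k^{\frac{1}{k-1}} \cdot n^{1-\frac{1}{k-1}}$

and $k^{\frac{1}{k-1}} \le 2$, tending to 1 as $k \to \infty$.

So the factor in front is not just a constant, it is a small constant.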

Cases 3+4:

Ramsey's Theorem:

In a graph 𝐺 of 𝑛 vertices, ∀𝑠, 𝑡 such that $n \ge \binom{s+t-2}{s-1}$, 𝐺 must contain either an 𝑠-clique or a 𝑡-independent set.

(One of them can be found in polynomial time.)

Algorithm 𝑅(𝑠, 𝑡, 𝑉):

1. If 𝑠 = 0 then return ∅ as a clique.

If 𝑡 = 0 then return ∅ as an IS.

2. Pick a vertex 𝑣

𝛾 ← |Γ(𝑣)|

3. If $\gamma \ge \binom{s+t-3}{s-2}$ then do

a. Apply 𝑅(𝑠 − 1, 𝑡, Γ(𝑣))

b. If it found an (𝑠 − 1)-clique 𝑆, then return 𝑆 ∪ {𝑣}

c. If it found a 𝑡-IS 𝑇, then return it

4. Else

a. 𝑁 ← 𝑉 \ ({𝑣} ∪ Γ(𝑣)) $\left[\, |N| \ge \binom{s+t-3}{s-1} \,\right]$

b. Apply 𝑅(𝑠, 𝑡 − 1, 𝑁)

c. If it found an 𝑠-clique, return it

d. If it found a (𝑡 − 1)-independent set 𝑇, return 𝑇 ∪ {𝑣}

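To prove that the algorithm works, we must show inductively that each branch is correct; the bound in 4a follows from $\binom{s+t-2}{s-1} = \binom{s+t-3}{s-2} + \binom{s+t-3}{s-1}$.

Exercise: Prove the correctness of 𝑅 and of the theorem.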
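Algorithm 𝑅 can be sketched recursively in Python. The graph is a dict from each vertex to its set of neighbors and `V` is the current vertex set; binomial coefficients with out-of-range arguments are taken as 0, which covers the base cases 𝑠 = 1 and 𝑡 = 1. The sketch assumes |𝑉| ≥ $\binom{s+t-2}{s-1}$, as in the theorem; the helper names are illustrative.

```python
from math import comb

def binom(a, b):
    """Binomial coefficient, taken as 0 when b < 0 or b > a."""
    return comb(a, b) if 0 <= b <= a else 0

def ramsey(s, t, V, adj):
    """R(s, t, V): return ("clique", set of size s) or ("is", set of size t)."""
    if s == 0:
        return ("clique", set())
    if t == 0:
        return ("is", set())
    v = next(iter(V))
    nbrs = adj[v] & V
    if len(nbrs) >= binom(s + t - 3, s - 2):     # step 3: recurse on Γ(v)
        kind, found = ramsey(s - 1, t, nbrs, adj)
        return (kind, found | {v}) if kind == "clique" else (kind, found)
    N = V - {v} - nbrs                           # step 4: non-neighbours of v
    kind, found = ramsey(s, t - 1, N, adj)
    return (kind, found | {v}) if kind == "is" else (kind, found)
```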

Corollary: ∀𝑐 ≥ 1 constant, every 𝐺 of size 𝑛 (for 𝑛 large enough) contains either a clique of size $\ge \frac{\log n}{2c\log\log n}$ or an IS of size $\ge t = \log^c n$.

Algorithm 𝐴3+4 $\left[\,|M^*| = \frac{n}{K} \text{ for } K < \hat{K} = \frac{\log n}{c\log\log n}\,\right]$

0. $k \leftarrow 2\hat{K}$

1. Apply 𝑅(𝑠, 𝑡, 𝑉) with 𝑠 = 𝑘, $t = \log^c n$ (take 𝑐 = 2)

2. If it found an IS of size 𝑡, return it

3. If it found a clique of size 𝑠, erase it from 𝐺 and go to (1)

--- end of lesson 6

Coloring

Theorem: If there exists an approximation algorithm for 𝑀𝐼𝑆 with ratio 𝜌(𝑛) (non-decreasing), then there exists an approximation algorithm for coloring with ratio 𝜌(𝑛) ∙ log 𝑛.

We can even say something stronger: if $\rho(n) \approx \frac{n}{\log^\alpha n}$, then the coloring has ratio roughly 3𝜌(𝑛).

Proof: Assume we have an algorithm 𝐴 for 𝑀𝐼𝑆 with ratio $\sim \frac{n}{\log^\alpha n}$.

Algorithm 𝐵 for coloring:

1. 𝑖 ← 1

2. While $|V| \ge \frac{n}{\log^\alpha n}$ do

a. Invoke algorithm 𝐴, get an independent set 𝐼

b. Color the vertices of 𝐼 by 𝑖

c. Discard the vertices of 𝐼 and their edges from 𝐺

d. 𝑖 ← 𝑖 + 1

3. Color each remaining vertex with a new color
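Algorithm 𝐵 can be sketched with the MIS approximation passed in as a parameter. Here `greedy_mis` (the minimum-degree greedy) is only a stand-in for 𝐴, and `threshold` plays the role of $\frac{n}{\log^\alpha n}$; both are assumptions of this sketch. The stand-in must return a non-empty set for a non-empty graph, otherwise the loop would not terminate.

```python
def greedy_mis(G):
    """Stand-in for algorithm A: minimum-degree greedy independent set."""
    H = {v: set(nb) for v, nb in G.items()}
    S = []
    while H:
        v = min(H, key=lambda u: len(H[u]))
        S.append(v)
        removed = {v} | H[v]
        for u in removed:
            del H[u]
        for u in H:
            H[u] -= removed
    return S

def color_via_mis(adj, find_is, threshold):
    """While at least `threshold` vertices remain, color an independent set
    returned by find_is with a new color and discard it; then give each
    remaining vertex its own new color."""
    G = {v: set(nb) for v, nb in adj.items()}
    color, i = {}, 1
    while G and len(G) >= threshold:
        I = set(find_is(G))
        for v in I:
            color[v] = i
            del G[v]
        for v in G:
            G[v] -= I
        i += 1
    for v in G:                  # step 3
        color[v] = i
        i += 1
    return color
```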

Lemma 1: Algorithm 𝐵 uses $\le \frac{3n}{\log^\alpha n} \chi$ colors (where 𝜒 = the chromatic number of the graph).

Proof: Suppose that there were 𝑚 iterations, so we used 𝑚 colors in the WHILE loop, and the number of colors used in line 3 was 𝑚′.

We know that: $m' < \frac{n}{\log^\alpha n} \le \frac{n}{\log^\alpha n}\chi$

It remains to show $m \le \frac{2n}{\log^\alpha n}\chi$.

∀𝑖:

𝑉𝑖 = the vertex set at the beginning of stage 𝑖

𝐺𝑖 – the graph at that stage

𝑛𝑖 = |𝑉𝑖|

𝐼𝑖 – the independent set colored at that stage

𝜒𝑖 – the chromatic number at that stage

𝐼𝑖∗ – a maximum independent set of 𝐺𝑖

Claim 2: $|I_i^*| \ge \frac{n_i}{\chi}$

Proof: The chromatic number of a subgraph is smaller than or equal to the chromatic number of the original graph, so 𝜒𝑖 ≤ 𝜒. In an optimal coloring of 𝐺𝑖, some color class 𝑆 ⊆ 𝑉𝑖 is independent and has $|S| \ge \frac{n_i}{\chi_i}$, so

$|I_i^*| \ge |S| \ge \frac{n_i}{\chi_i} \ge \frac{n_i}{\chi}$

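Corollary: $|I_i| \ge \frac{\log^\alpha n}{2\chi}$

Proof: From the approximation ratio of 𝐴:

(*) $\frac{|I_i^*|}{|I_i|} \le \rho(n_i)$

For sufficiently large 𝑛: $\log^\alpha n_i \ge \frac{1}{2}\log^\alpha n$, because $n_i \ge \frac{n}{\log^\alpha n}$. Why?

$\log n_i \ge \log n - \alpha\log\log n$, and $\log n - \alpha\log\log n \ge 2^{-1/\alpha}\log n$ for sufficiently large 𝑛, so

$\log^\alpha n_i \ge (\log n - \alpha\log\log n)^\alpha \ge \frac{1}{2}\log^\alpha n$

Hence $\rho(n_i) = \frac{n_i}{\log^\alpha n_i} \le \frac{2n_i}{\log^\alpha n}$, and

$|I_i| \overset{(*)}{\ge} \frac{|I_i^*|}{\rho(n_i)} \overset{\text{Claim 2}}{\ge} \frac{n_i/\chi}{2n_i/\log^\alpha n} = \frac{\log^\alpha n}{2\chi}$

Now $n_1 = n$ and $n_{i+1} = n_i - |I_i| \le n_i - \frac{\log^\alpha n}{2\chi}$. Each of the 𝑚 iterations removes at least $\frac{\log^\alpha n}{2\chi}$ vertices, so

$m \cdot \frac{\log^\alpha n}{2\chi} \le n$, i.e. $m \le \frac{2n}{\log^\alpha n} \cdot \chi$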

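Algorithm DEG

Δ = the max degree of 𝐺

Color set {1, … , Δ + 1}: color the vertices one by one, giving each the smallest color not used by its already-colored neighbors; since each vertex has at most Δ neighbors, Δ + 1 colors suffice.

Consider a 3-colorable 𝐺.

TODO: Draw set of neighbors of 𝑣 – Γ(𝑣)

Observation 1: ∀𝑣, 𝐺(Γ(𝑣)) is bipartite (≡ 2-colorable): in a 3-coloring of 𝐺, the neighbors of 𝑣 avoid the color of 𝑣.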

Algorithm 𝐴:

a. 𝑖 ← 1

b. While Δ(𝐺) ≥ √𝑛 do

(1) Pick max degree 𝑣 ∈ 𝐺

(2) Color 𝑣 by 𝑖 + 2

(3) Color the vertices of Γ(𝑣) by colors 𝑖, 𝑖 + 1

(4) Discard {𝑣} ∪ Γ(𝑣) from 𝐺 (and their edges)

(5) 𝑖 ← 𝑖 + 2

c. Color the remaining 𝐺 using algorithm 𝐷𝐸𝐺.

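Note:

(1) Step 𝑐 uses at most √𝑛 colors (there Δ(𝐺) < √𝑛, so DEG needs at most Δ + 1 ≤ √𝑛 colors)

(2) Step 𝑏3 is polynomial (2-coloring a bipartite graph, e.g. by BFS)

(3) 2 new colors per iteration (the color 𝑖 + 2 of 𝑣 is reused in the next iteration, which is safe because all the neighbors of 𝑣 were discarded)

(4) # iterations ≤ √𝑛 (each iteration discards more than √𝑛 vertices)

$\chi_A \le \underbrace{2 \cdot \sqrt{n}}_{b} + \underbrace{\sqrt{n}}_{c} \le 3\sqrt{n}$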
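Algorithm 𝐴 for 3-colorable graphs, together with DEG, can be sketched in Python as below. The sketch uses ⌊√𝑛⌋ as the degree threshold, gives DEG fresh colors for the remainder, and assumes the input really is 3-colorable (otherwise the 2-coloring step may be improper); the helper names are illustrative.

```python
from collections import deque
from math import isqrt

def two_color(vertices, adj, color, c0, c1):
    """BFS 2-coloring of the subgraph induced on `vertices` (assumed bipartite)."""
    for s in vertices:
        if s in color:
            continue
        color[s] = c0
        queue = deque([s])
        while queue:
            u = queue.popleft()
            for w in adj[u] & vertices:
                if w not in color:
                    color[w] = c1 if color[u] == c0 else c0
                    queue.append(w)

def deg_coloring(G, color, first):
    """DEG: each vertex gets the smallest color >= first not used by a colored
    neighbour, so at most Δ + 1 colors are used."""
    for v in G:
        used = {color[u] for u in G[v] if u in color}
        c = first
        while c in used:
            c += 1
        color[v] = c

def color_3colorable(adj):
    """O(sqrt n) colors for a 3-colorable graph given as a dict of sets."""
    G = {v: set(nb) for v, nb in adj.items()}
    threshold = isqrt(len(G))
    color, i = {}, 1
    while G and max(len(nb) for nb in G.values()) >= threshold:
        v = max(G, key=lambda u: len(G[u]))      # (1) max-degree vertex
        color[v] = i + 2                         # (2)
        nbrs = set(G[v])
        two_color(nbrs, G, color, i, i + 1)      # (3) G(Γ(v)) is bipartite
        removed = nbrs | {v}                     # (4) discard {v} ∪ Γ(v)
        for u in removed:
            del G[u]
        for u in G:
            G[u] -= removed
        i += 2                                   # (5)
    deg_coloring(G, color, i + 2)                # step c, with fresh colors
    return color
```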

Slight improvement: $O\left(\sqrt{\frac{n}{\log n}}\right)$ colors. (There are ways to color a 3-colorable graph with 𝑛^0.39 colors.)

1. While $\Delta(G) \ge \sqrt{\frac{n}{\log n}}$ do

a. 𝐺′ ← 𝐺

𝑆 ← ∅

b. While 𝐺′ ≠ ∅ and |𝑆| < log 𝑛 do

i. Pick a max degree vertex 𝑣 of 𝐺′

ii. 𝑆 ← 𝑆 ∪ {𝑣}

iii. Discard {𝑣} ∪ Γ(𝑣) from 𝐺′

c. Examine every 𝑊 ⊆ 𝑆, and pick the largest 𝑊 ⊆ 𝑆 such that $\Gamma(W) \ (= \bigcup_{w \in W} \Gamma(w))$ is bipartite

d. Discard from 𝐺 the vertices 𝑊 ∪ Γ(𝑊)

e. Color 𝑊 by a new color and Γ(𝑊) by two new colors.

2. Apply DEG to the remaining vertices

We can always find a set 𝑊 ⊆ 𝑆 with $|W| \ge \frac{\log n}{3}$ such that Γ(𝑊) is bipartite: in a 3-coloring of 𝐺, some color class contains at least a third of 𝑆, and the neighbors of those vertices avoid that color.

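Claim:

(1) Step 2 uses $\le \sqrt{\frac{n}{\log n}}$ colors

(2) Step 1 has 𝑚 iterations, $m \le \frac{n}{\frac{1}{3}\sqrt{n\log n}} = 3\sqrt{\frac{n}{\log n}}$ (and each uses 3 colors)

(3) Each iteration colors at least $\frac{1}{3}\sqrt{n\log n}$ vertices:

$|W| \ge \frac{\log n}{3}$, $|\Gamma(W)| \ge |W| \cdot \sqrt{\frac{n}{\log n}} \ge \frac{1}{3}\sqrt{n\log n}$

(4) The algorithm is in 𝑃 (the only suspicious step is c, and it is OK because |𝑆| ≤ log 𝑛, so there are at most $2^{\log n} = n$ subsets 𝑊)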

Exercise: Consider the following problem:

Input: 𝐺(𝑉, 𝐸) |𝑉| = 𝑛

𝜒(𝐺) = 3

𝑊 ⊂ 𝑉, |𝑊| ≤ log 𝑛

Known: 𝑊 is independent and dominating

Question: Color 𝐺 with 𝑂(log log 𝑛) colors.

Greedy Algorithm

Set Cover/Hitting Set

Input: Universe 𝐼 = {1, … , 𝑚}

Subsets 𝑃1 , … , 𝑃𝑛, with 𝑃𝑖 ⊆ 𝐼 ∀𝑖

SC: A collection of subsets 𝑆 ⊆ 𝐽 = {1, … , 𝑛} such that $\bigcup_{\gamma \in S} P_\gamma = I$

HS: A subset 𝑅 ⊆ 𝐼 such that 𝑅 ∩ 𝑃𝑖 ≠ ∅ ∀𝑖

TODO: Draw matching between 𝐼 and 𝐽

So the question can be viewed as a dominating set problem in a bipartite graph (elements on the left, sets on the right, and an edge whenever the element is in the set): for SC we choose sets on the right that dominate all the elements on the left.

The weighted version has a cost 𝐶𝑗 on every set, and we are looking for the cheapest cover.

Greedy Algorithm for weighted set cover:

𝑆←∅

While 𝐼 ≠ ∅ do

Find 𝑘 such that 𝐶𝑘/|𝑃𝑘| is minimal

𝑆 ← 𝑆 ∪ {𝑘}

Discard the elements of 𝑃𝑘 from 𝐼 and from all the remaining sets.

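A tight bad example:

𝐼 = {1, … , 𝑚}, 𝐽 = {1, … , 𝑚 + 1}

$$P_j = \{j\},\; C_j = \frac{1}{j} \quad (1 \le j \le m), \qquad P_{m+1} = I,\; C_{m+1} = 1 + \epsilon$$

By the greedy selection, we pick 𝑃𝑚, 𝑃𝑚−1, … , 𝑃1 in the first 𝑚 steps: while the elements {1, … , 𝑗} remain, 𝑃𝑗 costs 1/𝑗 per covered element, slightly less than the (1 + 𝜖)/𝑗 of 𝑃𝑚+1.

$$C_{alg} = \frac{1}{m} + \frac{1}{m-1} + \dots + 1 = H(m) \sim \ln m$$

$$C^* = C_{m+1} = 1 + \epsilon$$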
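A sketch of the greedy rule for weighted set cover, run on the tight bad example above. It assumes the sets and their costs are given as dicts keyed by set name (all names here are hypothetical), and it uses `fractions.Fraction` so that the ratio comparisons are exact.

```python
from fractions import Fraction


def greedy_weighted_set_cover(universe, sets, cost):
    """Greedy: repeatedly take the set minimizing cost / (# still-uncovered elements)."""
    uncovered = set(universe)
    remaining = {k: set(s) for k, s in sets.items()}
    chosen = []
    while uncovered:
        candidates = [k for k in remaining if remaining[k]]
        if not candidates:
            raise ValueError("the sets do not cover the universe")
        # pick k minimizing C_k / |P_k| (P_k already shrunk to its uncovered part)
        k = min(candidates, key=lambda j: Fraction(cost[j]) / len(remaining[j]))
        chosen.append(k)
        newly = remaining.pop(k)
        uncovered -= newly
        for j in remaining:          # discard the covered elements from all remaining sets
            remaining[j] -= newly
    return chosen


def bad_example(m, eps=Fraction(1, 100)):
    """P_j = {j} with C_j = 1/j (1 <= j <= m), and P_{m+1} = I with C_{m+1} = 1 + eps."""
    sets = {j: {j} for j in range(1, m + 1)}
    cost = {j: Fraction(1, j) for j in range(1, m + 1)}
    sets[m + 1] = set(range(1, m + 1))
    cost[m + 1] = 1 + eps
    return sets, cost


m = 10
sets, cost = bad_example(m)
order = greedy_weighted_set_cover(range(1, m + 1), sets, cost)
print(order)                          # greedy picks P_m, P_{m-1}, ..., P_1
print(sum(cost[k] for k in order))    # H(m), while OPT is only 1 + eps
```

With 𝑚 = 10 the greedy order is 𝑃10, 𝑃9, … , 𝑃1 and its cost is 𝐻(10), while the single set 𝑃11 costs only 1 + 𝜖.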

Tight bad example for the unweighted case:

TODO: Draw a picture of the universe

--- end of lesson 7

Greedy Algorithms – Framework

𝑈 = {𝑢1 , … , 𝑢𝑛 } universe.

Solution 𝑆 ⊆ 𝑈.

Cost of 𝑢𝑖 = 𝑐𝑖

$$c(S) = \sum_{u_i \in S} c_i$$

Constraints → feasibility

Goal: Lowest cost feasible 𝑆.

Penalty: 𝑃: 2^𝑈 → ℤ+ ∪ {0}

𝑆 feasible ⇔ 𝑃(𝑆) = 0

Requirements:

(A1) Penalty monotonicity – 𝑆1 ⊆ 𝑆2 ⇒ 𝑃(𝑆1 ) ≥ 𝑃(𝑆2 )

To choose elements greedily, let Δ(𝑆, 𝑢) = 𝑃(𝑆) − 𝑃(𝑆 ∪ {𝑢}) (the penalty reduction gained by adding 𝑢).

(1) 𝑆 ← ∅

(2) While 𝑃(𝑆) > 0 do

a) Choose 𝑢𝑖 maximizing the ratio $\frac{\Delta(S, u_i)}{c_i}$

b) 𝑆 ← 𝑆 ∪ {𝑢𝑖}

(A3) Polynomiality: computing 𝑃(𝑆) is polynomial.
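A sketch of the generic framework in Python, assuming the penalty is passed in as a function on frozensets and that costs are positive integers (all names here are hypothetical). The usage lines plug in the set-cover penalty 𝑃(𝑅) = # uncovered elements from the Set-Cover example; `brute_force_opt` is exhaustive and only meant for comparing against Claim 2 on tiny inputs.

```python
from fractions import Fraction
from itertools import combinations


def penalty_greedy(universe, cost, penalty):
    """While P(S) > 0, add the u maximizing Δ(S, u) / c(u), Δ(S, u) = P(S) - P(S ∪ {u})."""
    S = frozenset()
    while penalty(S) > 0:
        candidates = [u for u in universe if u not in S]
        if not candidates:
            raise ValueError("no feasible solution")
        u = max(candidates, key=lambda x: Fraction(penalty(S) - penalty(S | {x})) / cost[x])
        if penalty(S | {u}) == penalty(S):
            raise ValueError("no element reduces the penalty; no feasible solution")
        S = S | {u}
    return S


def coverage_penalty(elements, sets):
    """Set-cover penalty: number of elements not covered by the chosen sets."""
    elements = set(elements)
    return lambda R: len(elements - set().union(*(sets[i] for i in R)))


def harmonic(k):
    return sum(Fraction(1, j) for j in range(1, k + 1))


def brute_force_opt(universe, cost, penalty):
    """Exhaustive search for c* (tiny instances only)."""
    best = None
    for r in range(len(universe) + 1):
        for T in combinations(universe, r):
            if penalty(frozenset(T)) == 0:
                c = sum(cost[u] for u in T)
                best = c if best is None else min(best, c)
    return best


sets = {"a": {1, 2, 3}, "b": {3, 4}, "c": {4, 5, 6}, "d": {1, 4}}
cost = {"a": 3, "b": 1, "c": 3, "d": 2}
P = coverage_penalty(range(1, 7), sets)
S = penalty_greedy(list(sets), cost, P)
c_g = sum(cost[u] for u in S)
c_star = brute_force_opt(list(sets), cost, P)
print(sorted(S), c_g, c_star, harmonic(P(frozenset())) * c_star)
```

On this instance greedy picks {𝑎, 𝑏, 𝑐} at cost 7 while the optimum {𝑎, 𝑐} costs 6, well within the 𝐻(𝑃(∅)) ∙ 𝑐∗ = 𝐻(6) ∙ 6 bound of Claim 2.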

Exercise: Suppose computing Δ is NP-hard, but in polynomial time you can compute $\tilde\Delta(S, u)$ such that

$$\epsilon\,\Delta(S, u) \le \tilde\Delta(S, u) \le \frac{1}{\epsilon}\,\Delta(S, u)$$

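Suppose we are now looking at 𝑆, and suppose 𝑆∗ is the optimal set.

𝑆̅ = 𝑆∗ \ 𝑆 ("missing")

Currently:

$$\Delta(S, \bar S) = P(S) - \underbrace{P(S \cup \bar S)}_{=0} = P(S)$$

(𝑆 ∪ 𝑆̅ ⊇ 𝑆∗ and 𝑆∗ is feasible, so by (A1) the penalty of 𝑆 ∪ 𝑆̅ is 0.)

$$\text{(A2)} \quad \sum_{u_i \in \bar S} \Delta(S, u_i) \ge P(S)$$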

𝑐 ∗ = 𝑐(𝑆 ∗ )

∀𝑆 such that 𝑃(𝑆) > 0, denote by 𝑢(𝑆) = element added by the greedy algorithm.

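Claim 1: For every 𝑆 with 𝑃(𝑆) > 0,

$$\frac{\Delta(S, u(S))}{c_{u(S)}} \ge \frac{P(S)}{c^*}$$

Proof: Let 𝑆̅ = 𝑆∗ \ 𝑆 = {𝑢𝑖1, … , 𝑢𝑖𝑙}. Then:

$$(*) \quad \sum_{j=1}^{l} \Delta(S, u_{i_j}) \ge P(S) \qquad \text{by (A2)}$$

$$(**) \quad c(S^*) = c^* \ge c(\bar S)$$

Since the greedy algorithm picks the element with the maximal ratio, we know that for every 𝑗:

$$\frac{\Delta(S, u(S))}{c_{u(S)}} \ge \frac{\Delta(S, u_{i_j})}{c(u_{i_j})}$$

Using the fact that if 𝑋 ≥ 𝑎𝑗/𝑏𝑗 for all 𝑗 (with 𝑏𝑗 > 0), then 𝑋 ≥ ∑𝑗 𝑎𝑗 / ∑𝑗 𝑏𝑗, we get:

$$\frac{\Delta(S, u(S))}{c_{u(S)}} \ge \frac{\sum_{j=1}^{l} \Delta(S, u_{i_j})}{\sum_{j=1}^{l} c(u_{i_j})} = \frac{\sum_{j=1}^{l} \Delta(S, u_{i_j})}{c(\bar S)} \overset{(*),(**)}{\ge} \frac{P(S)}{c^*}$$

Equivalently:

$$(***) \quad c_{u(S)} \le \frac{c^* \cdot \Delta(S, u(S))}{P(S)}$$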

Denote by 𝑐𝑔 = cost of the solution chosen by the greedy algorithm

Claim 2: $c_g \le H(P(\emptyset)) \cdot c^*$, where

$$H(m) = 1 + \frac{1}{2} + \frac{1}{3} + \dots + \frac{1}{m} \approx \ln m$$

Proof: Let 𝑢1, 𝑢2, … , 𝑢𝑚 be the elements added to the solution by the greedy algorithm, in the order in which it added them, with costs 𝑐1, … , 𝑐𝑚.

Let Δ1, … , Δ𝑚 be the penalty reductions, and 𝑃1, … , 𝑃𝑚 the penalties (𝑃𝑖 is the penalty before iteration 𝑖). Then:

𝑃(∅) = 𝑃1

𝑃𝑖+1 = 𝑃𝑖 − Δ𝑖

𝑃𝑚+1 = 0

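$$
\begin{aligned}
c_g = \sum_{i=1}^{m} c_i
&\overset{(***)}{\le} \sum_{i=1}^{m} \frac{c^* \cdot \Delta_i}{P_i}
= c^* \cdot \sum_{i=1}^{m} \frac{\Delta_i}{P_i}
\le c^* \cdot \sum_{i=1}^{m} \left( \frac{1}{P_i} + \frac{1}{P_i - 1} + \dots + \frac{1}{P_i - \Delta_i + 1} \right) \\
&= c^* \cdot \sum_{i=1}^{m} \left( \frac{1}{P_i} + \frac{1}{P_i - 1} + \dots + \frac{1}{P_{i+1} + 1} \right)
= c^* \cdot \sum_{j=P_{m+1}+1}^{P_1} \frac{1}{j}
= c^* \cdot H(P_1) = c^* \cdot H(P(\emptyset)) \qquad \blacksquare
\end{aligned}
$$

The second inequality holds because the penalties are integers, so each of the Δ𝑖 fractions in the parentheses is at least 1/𝑃𝑖; the sums then telescope since 𝑃𝑖 − Δ𝑖 = 𝑃𝑖+1 and 𝑃𝑚+1 = 0.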

Stronger (but more convenient) version of (A2)

(A2’) ∀𝑢, 𝑆1 , 𝑆2 . 𝑆1 ⊆ 𝑆2 ⇒ Δ(𝑆1 , 𝑢) ≥ Δ(𝑆2 , 𝑢)

Claim 3: (𝐴2′ ) ⇒ (𝐴2) Exercise – prove!

Example: Set-Cover.

𝑈 = {1, … , 𝑛}, corresponding to the sets 𝑆1, … , 𝑆𝑛 ⊆ 𝐼

Constraints:

𝐼 = {1, … , 𝑚}

Feasible solution: 𝑅 ⊂ 𝑈 such that ⋃𝑖∈𝑅 𝑆𝑖 = 𝐼

We would like to find a minimum-cost feasible 𝑅.

1. Define the penalty:

𝑃(𝑅) = # elements of 𝐼 not in ⋃𝑖∈𝑅 𝑆𝑖 = |𝐼 \ ⋃𝑖∈𝑅 𝑆𝑖|

𝑃(∅) = 𝑚, and 𝑃(𝑈) = 0 assuming ⋃𝑖∈𝑈 𝑆𝑖 = 𝐼

(A1) ✓

(A3) ✓

(A2′):

Suppose 𝑅1 = {𝑆𝑖1, … , 𝑆𝑖𝑙}, and adding 𝑆𝑗 to 𝑅1 reduces 𝑃 by 𝑟.

Then for 𝑅2 ⊇ 𝑅1,

𝑅2 = {𝑆𝑖1, … , 𝑆𝑖𝑙, 𝑆𝑖𝑙+1, … , 𝑆𝑖𝑘}

adding 𝑆𝑗 to 𝑅2 reduces 𝑃 by at most 𝑟 (every element that 𝑆𝑗 newly covers when added to 𝑅2 is also newly covered when it is added to 𝑅1).

Exercise: prove formally!
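A brute-force sanity check (not a proof) of (A1) and (A2′) for the set-cover penalty on small instances. The underlying reason (A2′) holds is that Δ(𝑅, 𝑆𝑗) is the number of elements of 𝑆𝑗 not covered by 𝑅, which can only shrink as 𝑅 grows. The function names and instances are hypothetical.

```python
from itertools import chain, combinations


def all_subsets(items):
    items = list(items)
    return [frozenset(c) for c in chain.from_iterable(
        combinations(items, r) for r in range(len(items) + 1))]


def check_A1_A2prime(elements, sets):
    """Check (A1) and (A2') for P(R) = number of elements outside the union of
    the sets in R. Returns True if no violation is found (sanity check only)."""
    elements = set(elements)

    def P(R):
        return len(elements - set().union(*(sets[i] for i in R)))

    names = list(sets)
    subsets = all_subsets(names)
    for R1 in subsets:
        for R2 in subsets:
            if not R1 <= R2:
                continue
            if P(R1) < P(R2):                 # (A1): R1 ⊆ R2 ⇒ P(R1) ≥ P(R2)
                return False
            for j in names:                   # (A2'): Δ(R1, S_j) ≥ Δ(R2, S_j)
                if P(R1) - P(R1 | {j}) < P(R2) - P(R2 | {j}):
                    return False
    return True


print(check_A1_A2prime(range(1, 7), {"a": {1, 2, 3}, "b": {3, 4}, "c": {4, 5, 6}, "d": {1, 4}}))
```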

Example:

𝑘-Set Cover

Choose a min-cost collection of sets (from 𝑆1, … , 𝑆𝑛) such that each element 𝑖 ∈ 𝐼 appears in at least 𝑘 of the chosen sets.

Exercise: Choose 𝑃. Show A1,A2’,A3

Min test-set:

𝐼 = {1, … , 𝑚}

𝑆1, … , 𝑆𝑛; choose a minimum 𝑅 ⊂ {1, … , 𝑛} such that for every two elements 𝑖, 𝑗 ∈ 𝐼 there is 𝑘 ∈ 𝑅 such that 𝑆𝑘 contains exactly one of 𝑖 and 𝑗.

Some centers problem:

Input: 𝐺(𝑉, 𝐸), distances 𝑑(𝑢, 𝑣) ∈ ℤ+

𝜑: 𝑉 → ℤ+ demands: vertex 𝑣 needs 𝜑(𝑣) units.

Cost of opening a facility in 𝑣𝑖 = 𝑐𝑖

Solution = 𝑊 ⊂ 𝑉, the vertices where facilities are opened.

$$c(W) = \sum_{v_i \in W} c_i$$

Goal: pick a min-cost 𝑊 such that for every client 𝑣, 𝜑(𝑣) ∙ 𝑑𝑖𝑠𝑡(𝑣, 𝑛𝑒𝑎𝑟(𝑊, 𝑣)) ≤ 𝜌, where 𝑛𝑒𝑎𝑟(𝑊, 𝑣) is the facility in 𝑊 nearest to 𝑣.

HittingSet problem:

𝑈 = {𝑢1 , … , 𝑢𝑚 },

𝒮 = {𝑆1 , … , 𝑆𝑛 }

Solution: 𝑆 ⊂ 𝑈

𝑆 hits 𝑆𝑖 if 𝑆 ∩ 𝑆𝑖 ≠ ∅

Feasible solution: hits every 𝑆𝑖

Dual-HittingSet problem:

Input: 𝑈, 𝒮, 𝑝

Goal: Select 𝑆 ⊂ 𝑈, |𝑆| = 𝑝

Maximize the number of sets hit.

The optimum: 𝑆∗ = {𝑢1∗, … , 𝑢𝑝∗}, |𝑆∗| = 𝑝, hitting 𝑋 sets of 𝒮. 𝒮∗ ⊆ 𝒮 is the family of sets hit by 𝑆∗, |𝒮∗| = 𝑋.

⇒ The greedy solution 𝑆𝑔 (|𝑆𝑔| = 𝑝) hits at least $\left(1 - \frac{1}{e}\right) \cdot X$ sets.

But we will only show that it hits at least $\frac{X}{2}$.

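Greedy chooses:

𝑢1: the 𝑝 elements of 𝑆∗ together hit all 𝑋 sets of 𝒮∗, so some 𝑢𝑖∗ hits ≥ 𝑋/𝑝 sets. Greedy picks the element with the most hits, so it gains 𝑔1 hits with

$$g_1 \ge \frac{X}{p}$$

𝒮2∗ = 𝒮∗ \ {sets hit by 𝑢1}, |𝒮2∗| ≥ 𝑋 − 𝑔1

𝑢2: some 𝑢𝑖∗ hits ≥ (𝑋 − 𝑔1)/𝑝 sets of 𝒮2∗, so 𝑢2 gains 𝑔2 new hits with

$$g_2 \ge \frac{X - g_1}{p}$$

…

$$u_i \text{ gains } g_i \ge \frac{X - g_1 - g_2 - \dots - g_{i-1}}{p}$$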

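Rearranging:

$$
\begin{aligned}
p\,g_1 &\ge X \\
p\,g_2 + g_1 &\ge X \\
&\;\;\vdots \\
p\,g_p + g_{p-1} + g_{p-2} + \dots + g_1 &\ge X
\end{aligned}
$$

The greedy solution hits 𝑔1 + 𝑔2 + ⋯ + 𝑔𝑝 sets. From these inequalities one can show that this is ≥ (1 − 1/𝑒)𝑋, but we will not show it!

Instead, sum all 𝑝 inequalities (𝑔𝑘 appears with coefficient 𝑝 in the 𝑘-th inequality and with coefficient 1 in each of the 𝑝 − 𝑘 later ones):

$$p\,g_p + (p+1)\,g_{p-1} + \dots + (2p-2)\,g_2 + (2p-1)\,g_1 \ge p \cdot X$$

Every coefficient is at most 2𝑝 − 1, so:

$$(2p-1)(g_p + g_{p-1} + \dots + g_2 + g_1) \ge p \cdot X$$

$$X_{greedy} = \sum_{i=1}^{p} g_i \ge \frac{p}{2p-1}\,X \ge \frac{X}{2}$$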

Comment 1: The bound is tight (there are examples).
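A sketch comparing the greedy for Dual-HittingSet with a brute-force optimum on a tiny instance (names and instance are hypothetical). Maximizing the number of sets hit by 𝑆 ∪ {𝑥} is the same as maximizing the gain 𝑔𝑖, since 𝑆 is fixed while the choice is made.

```python
import math
from itertools import combinations


def sets_hit(S, family):
    """Number of sets T in `family` with S ∩ T ≠ ∅."""
    return sum(1 for T in family if S & T)


def greedy_max_hit(universe, family, p):
    """p times, add the element that hits the most sets not hit yet."""
    S = set()
    for _ in range(p):
        u = max((x for x in universe if x not in S),
                key=lambda x: sets_hit(S | {x}, family))
        S.add(u)
    return S


def opt_max_hit(universe, family, p):
    """Brute-force optimum X (tiny instances only)."""
    return max(sets_hit(set(c), family) for c in combinations(universe, p))


family = [{1, 2}, {2, 3}, {3, 4}, {4, 5}, {5, 1}, {1, 3}, {2, 4}]
universe, p = range(1, 6), 2
g = sets_hit(greedy_max_hit(universe, family, p), family)
X = opt_max_hit(universe, family, p)
print(g, X, p / (2 * p - 1) * X, (1 - 1 / math.e) * X)
```

Here both greedy and the optimum hit 6 of the 7 sets, so both the 𝑝/(2𝑝 − 1) bound and the (1 − 1/𝑒) bound hold with room to spare.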

Conclude: OPT needs more than 𝑘 − 1 elements to hit all sets

--- end of lesson

Hit-all Algorithm

Vertex cover.

Input: Graph 𝐺(𝑉, 𝐸)

Question: Find the smallest set of vertices 𝐶 such that every edge is touched.

𝐶←∅

While 𝐸 ≠ ∅ do

Pick 𝑒 = ⟨𝑢, 𝑣⟩ ∈ 𝐸

𝐶 ← 𝐶 ∪ {𝑢, 𝑣}

Discard from 𝐸 all “touched” edges

If we were to solve it using a greedy algorithm:

𝑃(∅) = |𝐸|

Ratio ≤ ln|𝐸| ≤ 2 ln 𝑛

Bad example for a greedy algorithm:

TODO: Draw bad example for a greedy algorithm

Back to the hit-all algorithm

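Let 𝑀 = {𝑒1, … , 𝑒𝑚} be the edges picked by the algorithm.

𝑀 is a maximal matching (picked edges share no endpoint, and every edge touches one of them).

𝐶𝑎𝑙𝑔 = 2|𝑀|

𝐶∗ ≥ |𝑀| (every cover contains an endpoint of each edge of 𝑀, and these endpoints are distinct)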
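A sketch of the hit-all algorithm, assuming the edges are given as a list of vertex pairs (the function name is hypothetical). Scanning the edges once and taking every edge that is still untouched is one way to implement "pick any untouched edge" until none is left; the taken edges are exactly the maximal matching 𝑀.

```python
def hit_all_vertex_cover(edges):
    """Hit-all: whenever an edge has neither endpoint in C, add both endpoints.
    Returns the cover C and the matching M of picked edges."""
    cover, matching = set(), []
    for u, v in edges:
        if u not in cover and v not in cover:   # the edge is still untouched
            matching.append((u, v))
            cover |= {u, v}
    return cover, matching


edges = [(1, 2), (2, 3), (3, 4), (4, 1), (1, 3)]
cover, M = hit_all_vertex_cover(edges)
print(sorted(cover), M)
```

For this graph the optimum is {1, 3}, so 𝐶𝑎𝑙𝑔 = 4 = 2𝐶∗ and the factor 2 is attained.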

Set Cover

𝑈 = {1, … , 𝑚},

𝑆1 , … , 𝑆𝑛

deg(𝑖) = # sets 𝑆𝑗 such that 𝑖 ∈ 𝑆𝑗

$$\Delta(\mathcal{S}) = \max_i \{\deg(i)\}$$

Exercise: Hit-all algorithm has approximation ratio Δ.

Greedy algorithm to weighted set cover

𝐶1 , … , 𝐶𝑛

𝐶𝑖 - the cost of using 𝑆𝑖

The greedy algorithm does the following:

1. 𝐼 ← ∅

2. While 𝑈 ≠ ∅ do

a. Find 𝑖 such that $\frac{C_i}{|S_i|}$ is minimum

b. 𝐼 ← 𝐼 ∪ {𝑖}

c. Discard the elements 𝑎 ∈ 𝑆𝑖 from 𝑈 and from every remaining 𝑆𝑖′

Theorem: The approximation ratio is about ln 𝑚.

Proof: Let 𝑞 = max{|𝑆𝑖|}; we show 𝜌𝑔𝑟𝑒𝑒𝑑𝑦 ≤ 𝐻(𝑞) ≤ 𝐻(𝑚) ≈ ln 𝑚, using LP duality.

Representing 𝑊𝑆𝐶 as an integer linear program

Linear Programming

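Variables: {𝑥1, … , 𝑥𝑛}

$$
\begin{aligned}
\text{Minimize } & \sum_{i=1}^{n} c_i x_i \\
\text{subject to: } & a_{11}x_1 + \dots + a_{1n}x_n \ge b_1 \\
& \;\;\vdots \\
& a_{m1}x_1 + \dots + a_{mn}x_n \ge b_m \\
& x_i \ge 0
\end{aligned}
$$

Or: min 𝑐̅𝑥̅ such that 𝐴𝑥̅ ≥ 𝑏̅, 𝑥̅ ≥ 0.

In our case, 𝑥𝑖 ∈ {0,1}.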

We want to choose a subset of the sets 𝑆1, … , 𝑆𝑛, so the variables are 𝑥1, … , 𝑥𝑛, with the intended interpretation that 𝑥𝑖 gets the value 1 if set 𝑆𝑖 is selected to the cover, and 0 otherwise.

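$$x_i = \begin{cases} 1, & S_i \text{ selected to the cover} \\ 0, & \text{otherwise} \end{cases}$$

$$\text{Minimize } \sum_{i=1}^{n} c_i x_i \quad \text{such that} \quad \forall j \in U\ (1 \le j \le m):\ \sum_{S_i \ni j} x_i \ge 1$$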

Since the variables must be integral, this is an integer linear program, and the problem is NP-hard!

This is what we call a primal linear program.

Dual Linear Programming

Variables: {𝑦1, … , 𝑦𝑚}

The dual is to maximize $\sum_{i=1}^{m} b_i y_i$ subject to $A^T \bar y \le \bar c$, $\bar y \ge 0$.

We call 𝑥̅, 𝑦̅ feasible solutions if they satisfy their constraints (respectively).

And denote by 𝑥̂, 𝑦̂ the optimal solutions to the primal and the dual (respectively).

Weak LP-Duality Theorem

If 𝑥̅ is feasible for the primal LP, and 𝑦̅ is feasible for the dual LP, then:

𝑐̅𝑥̅ ≥ 𝑏̅𝑦̅

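Proof:

$$\bar c \bar x = \sum_{j=1}^{n} c_j x_j \overset{\text{by the dual constraints}}{\ge} \sum_{j=1}^{n} \Big( \sum_{i=1}^{m} a_{ij} y_i \Big) x_j = \sum_{i=1}^{m} \Big( \sum_{j=1}^{n} a_{ij} x_j \Big) y_i \overset{\text{by the primal constraints}}{\ge} \sum_{i=1}^{m} b_i y_i = \bar b \bar y$$

(The first inequality uses 𝑥𝑗 ≥ 0 and the second uses 𝑦𝑖 ≥ 0.)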

The (strong) LP duality theorem states that if the primal has an optimal solution, then so does the dual, and 𝑐̅𝑥̂ = 𝑏̅𝑦̂.
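A small numeric illustration of weak duality, assuming the LP is given as a list-of-rows matrix 𝐴 with vectors 𝑏 and 𝑐 (helper names are hypothetical). The instance is the weighted set cover LP for the three sets {1, 2}, {2, 3}, {1, 3}, each of cost 1.

```python
from fractions import Fraction


def dot(u, v):
    return sum(p * q for p, q in zip(u, v))


def primal_feasible(A, b, x):
    """x >= 0 and A x >= b (primal: min c.x)."""
    return all(xj >= 0 for xj in x) and all(dot(row, x) >= bi for row, bi in zip(A, b))


def dual_feasible(A, c, y):
    """y >= 0 and A^T y <= c (dual: max b.y)."""
    columns = list(zip(*A))                 # columns of A = rows of A^T
    return all(yi >= 0 for yi in y) and all(dot(col, y) <= cj for col, cj in zip(columns, c))


# rows = elements 1..3, columns = sets a = {1, 2}, b = {2, 3}, c = {1, 3}
A = [[1, 0, 1],
     [1, 1, 0],
     [0, 1, 1]]
b = [1, 1, 1]
c = [1, 1, 1]
half = Fraction(1, 2)
x_int = [1, 1, 0]                 # the integral cover {a, b}
x_frac = [half, half, half]       # a fractional cover
y = [half, half, half]            # a dual-feasible solution
print(dot(c, x_int), dot(c, x_frac), dot(b, y))
```

Here 𝑐̅𝑥̅ = 𝑏̅𝑦̅ = 3/2 for the fractional cover, so weak duality certifies that both it and 𝑦̅ are optimal, while the best integral cover costs 2.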

Cost Decomposition

Suppose that in iteration 𝑡 the greedy algorithm chose some set denoted 𝑆𝑖𝑡.

𝐾𝑡 = {𝑗 | 𝑗 is still in 𝑆𝑖𝑡 at iteration 𝑡} (the elements first covered in iteration 𝑡)

For every 𝑗 ∈ 𝐾𝑡:

$$price(j) = \frac{c_{i_t}}{|K_t|}$$

$$C_{greedy} = \sum_t c_{i_t} = \sum_t \sum_{j \in K_t} price(j) = \sum_j price(j)$$

Guessing a solution for the dual-weighted set cover.

Dual Weighted Set Cover

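$$\max \sum_{j=1}^{m} y_j \quad \text{such that} \quad \sum_{j \in S_i} y_j \le c_i \ \ \forall\, 1 \le i \le n, \qquad y_j \ge 0$$

Guessed 𝑦̅:

$$y_j = \frac{price(j)}{H(q)} \qquad (\text{for } q = \max |S_i|)$$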

Claim: 𝑦̅ is a feasible solution for the dual weighted set cover.

Proof: We need to show that for every 𝑖:

$$\sum_{j \in S_i} \frac{price(j)}{H(q)} \le c_i \quad \text{or equivalently} \quad \sum_{j \in S_i} price(j) \le c_i \cdot H(q)$$

𝑆𝑖 = {𝑗1, … , 𝑗𝑘}, ordered by the order in which the greedy algorithm covered them. If two elements are covered in the same iteration, their relative order does not matter.

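Suppose 𝑗𝑙 was covered in iteration 𝑡 (by 𝑆𝑖𝑡):

$$price(j_l) = \frac{c_{i_t}}{|K_t|}$$

Why didn't the algorithm choose 𝑆𝑖?

Before iteration 𝑡, the elements 𝑗𝑙, … , 𝑗𝑘 of 𝑆𝑖 were all still uncovered, so choosing 𝑆𝑖 would have paid 𝑐𝑖 for at least 𝑘 − 𝑙 + 1 elements. Since greedy chose the minimal ratio, we know:

$$\frac{c_{i_t}}{|K_t|} \le \frac{c_i}{k - l + 1}$$

Therefore:

$$\sum_{j \in S_i} price(j) = \sum_{z=1}^{k} price(j_z) \le \sum_{l=1}^{k} \frac{c_i}{k - l + 1} = c_i \sum_{l=1}^{k} \frac{1}{l} = c_i H(k) \le c_i H(q)$$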

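$$c_{greedy} = \sum_j price(j) \overset{\text{choice of } y_j}{=} \sum_j H_q\, y_j = H_q \cdot \bar 1 \cdot \bar y \overset{\text{WLP-dual}}{\le} H_q \cdot \bar c \cdot \underbrace{\hat x}_{\substack{\text{optimal primal} \\ \text{(fractional) solution}}} \le H_q \cdot \bar c \cdot \underbrace{\bar x^*}_{\substack{\text{optimal solution} \\ \text{for the ILP}}} = H_q\, C^*$$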
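A sketch of the cost decomposition and dual fitting above: the greedy is rerun while recording 𝑝𝑟𝑖𝑐𝑒(𝑗), then 𝑦𝑗 = 𝑝𝑟𝑖𝑐𝑒(𝑗)/𝐻(𝑞) is checked against every dual constraint. The names and the instance are hypothetical; `Fraction` keeps the arithmetic exact, so 𝑐𝑔𝑟𝑒𝑒𝑑𝑦 = 𝐻(𝑞) ∙ 1̅ ∙ 𝑦̅ can be checked as an equality.

```python
from fractions import Fraction


def harmonic(k):
    return sum(Fraction(1, j) for j in range(1, k + 1))


def greedy_with_prices(universe, sets, cost):
    """Greedy weighted set cover that records price(j) = c_{i_t} / |K_t| for every
    element j in K_t, the elements first covered in iteration t."""
    uncovered = set(universe)
    price, chosen = {}, []
    while uncovered:
        candidates = [i for i in sets if sets[i] & uncovered]
        if not candidates:
            raise ValueError("the sets do not cover the universe")
        i_t = min(candidates, key=lambda i: Fraction(cost[i]) / len(sets[i] & uncovered))
        K_t = sets[i_t] & uncovered
        for j in K_t:
            price[j] = Fraction(cost[i_t]) / len(K_t)
        uncovered -= K_t
        chosen.append(i_t)
    return chosen, price


def dual_fit(sets, cost, price):
    """Guessed dual y_j = price(j) / H(q), q = max |S_i|, plus a check of the dual
    constraints sum_{j in S_i} y_j <= c_i (assumes every element was priced)."""
    q = max(len(s) for s in sets.values())
    y = {j: p / harmonic(q) for j, p in price.items()}
    feasible = all(sum(y[j] for j in s) <= cost[i] for i, s in sets.items())
    return y, feasible


sets = {"a": {1, 2, 3}, "b": {3, 4}, "c": {4, 5, 6}, "d": {1, 4}}
cost = {"a": 3, "b": 1, "c": 3, "d": 2}
chosen, price = greedy_with_prices(range(1, 7), sets, cost)
y, feasible = dual_fit(sets, cost, price)
c_greedy = sum(cost[i] for i in chosen)
print(chosen, c_greedy, feasible)
```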

Example: Cover by 3 paths

Input: graph 𝐺 = (𝑉, 𝐸) of 𝑛 vertices

Question: cover the vertices by a minimum collection of paths of length 3.

$$\rho_{greedy} = H(3) = 1 + \frac{1}{2} + \frac{1}{3} = \frac{11}{6}$$

Exercise: Show an algorithm with 𝜌𝐴 ≤ 2 (with bonus!).

Exercise 2: Show a polynomial algorithm for paths of length 2.

Deterministic rounding

Vertex Cover

ILP

Variables: 𝑥𝑖 for each 𝑣𝑖

$$x_i = \begin{cases} 1, & v_i \in C \\ 0, & \text{otherwise} \end{cases}$$

Minimize ∑𝑖 𝑥𝑖

Subject to 𝑥𝑖 + 𝑥𝑗 ≥ 1 for every 𝑒 = {𝑣𝑖, 𝑣𝑗} ∈ 𝐸, and 𝑥𝑖 ∈ {0,1}

LP relaxation: replace 𝑥𝑖 ∈ {0,1} by 0 ≤ 𝑥𝑖 ≤ 1.

In polynomial time, find the optimum 𝑥̂.

Rounded integral solution:

$$x_i' = \begin{cases} 1, & \hat x_i \ge \frac{1}{2} \\ 0, & \text{otherwise} \end{cases}$$

Observation: ∀𝑖, 𝑥𝑖′ ≤ 2𝑥̂𝑖

Claim 1: 𝑥̅′ is a feasible solution to the ILP-VC.

Proof: Consider any edge 𝑒 = ⟨𝑣𝑖 , 𝑣𝑗 ⟩

The LP had a constraint: 𝑥𝑖 + 𝑥𝑗 ≥ 1.

Therefore, we know that 𝑥̂𝑖 + 𝑥̂𝑗 ≥ 1 ⇒ either 𝑥̂𝑖 ≥ 1/2 or 𝑥̂𝑗 ≥ 1/2. This means that either 𝑥𝑖′ or 𝑥𝑗′ was set to 1, which means that either 𝑣𝑖 ∈ 𝐶 or 𝑣𝑗 ∈ 𝐶.

Claim 2: 𝑐𝐷𝑅 ≤ 2𝐶 ∗

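$$c_{DR} = \sum_i x_i' \le \sum_i 2\hat x_i = 2 \cdot \underbrace{\hat c}_{\substack{\text{opt. fractional} \\ \text{solution}}} \le 2 C^*$$

(The last step holds because the optimal ILP solution is also feasible for the LP.)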
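A sketch of the rounding step, assuming an optimal (or at least feasible) fractional solution 𝑥̂ has already been computed by an LP solver, which this sketch does not include (the function name is hypothetical). The triangle is used because its LP optimum is 𝑥̂𝑖 = 1/2 for every vertex (value 3/2), so every vertex is rounded up.

```python
from fractions import Fraction


def round_vertex_cover(x_hat, edges):
    """Deterministic rounding: x'_i = 1 iff x_hat_i >= 1/2.
    x_hat must be feasible for the LP relaxation (computed elsewhere)."""
    for u, v in edges:
        if x_hat[u] + x_hat[v] < 1:
            raise ValueError(f"x_hat violates the constraint of edge {(u, v)}")
    return {v for v, val in x_hat.items() if val >= Fraction(1, 2)}


edges = [(1, 2), (2, 3), (1, 3)]
x_hat = {1: Fraction(1, 2), 2: Fraction(1, 2), 3: Fraction(1, 2)}
cover = round_vertex_cover(x_hat, edges)
print(sorted(cover), len(cover), 2 * sum(x_hat.values()))
```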

Generalization to Weighted Set Cover

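$$\Delta = \max_i \{\deg(i)\}$$

Hit-all → 𝜌 = Δ

1) Write the ILP for Weighted Set Cover

2) Solve the relaxed LP, get 𝑥̂ (fractional)

3) Round by setting

$$x_i' = \begin{cases} 1, & \hat x_i \ge \frac{1}{\Delta} \\ 0, & \text{otherwise} \end{cases}$$

3′)

$$x_i' = \begin{cases} 1, & \hat x_i > 0 \\ 0, & \text{otherwise} \end{cases}$$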
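A sketch of rule 3) for set cover, again assuming a feasible fractional 𝑥̂ comes from an LP solver not shown here (names and instance are hypothetical). Every element lies in at most Δ sets whose 𝑥̂-values sum to at least 1, so one of them has 𝑥̂𝑖 ≥ 1/Δ; and since 𝑥𝑖′ ≤ Δ𝑥̂𝑖, the rounded cost is at most Δ times the LP value.

```python
from fractions import Fraction


def round_set_cover(x_hat, sets, universe):
    """Threshold rounding: choose S_i iff x_hat_i >= 1/Δ, where Δ is the maximum
    number of sets containing a single element. x_hat must be LP-feasible."""
    delta = max(sum(1 for s in sets.values() if j in s) for j in universe)
    for j in universe:
        if sum(x_hat[i] for i, s in sets.items() if j in s) < 1:
            raise ValueError(f"x_hat does not cover element {j}")
    return {i for i in sets if x_hat[i] >= Fraction(1, delta)}, delta


sets = {"a": {1, 2}, "b": {2, 3}, "c": {3}, "d": {1}}
cost = {"a": 1, "b": 1, "c": 1, "d": 1}
x_hat = {"a": Fraction(1), "b": Fraction(1, 2), "c": Fraction(1, 2), "d": Fraction(0)}
picked, delta = round_set_cover(x_hat, sets, [1, 2, 3])
lp_value = sum(cost[i] * x_hat[i] for i in sets)
rounded = sum(cost[i] for i in picked)
print(sorted(picked), delta, rounded, lp_value)
```

Here Δ = 2 and the set 𝑑 with 𝑥̂𝑑 = 0 is dropped; the rounded cost 3 is within Δ ∙ 2 = 4.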
