10 - Discriminant Analysis (LDA, QDA, RDA)


10.1 - Discriminant Analysis in JMP

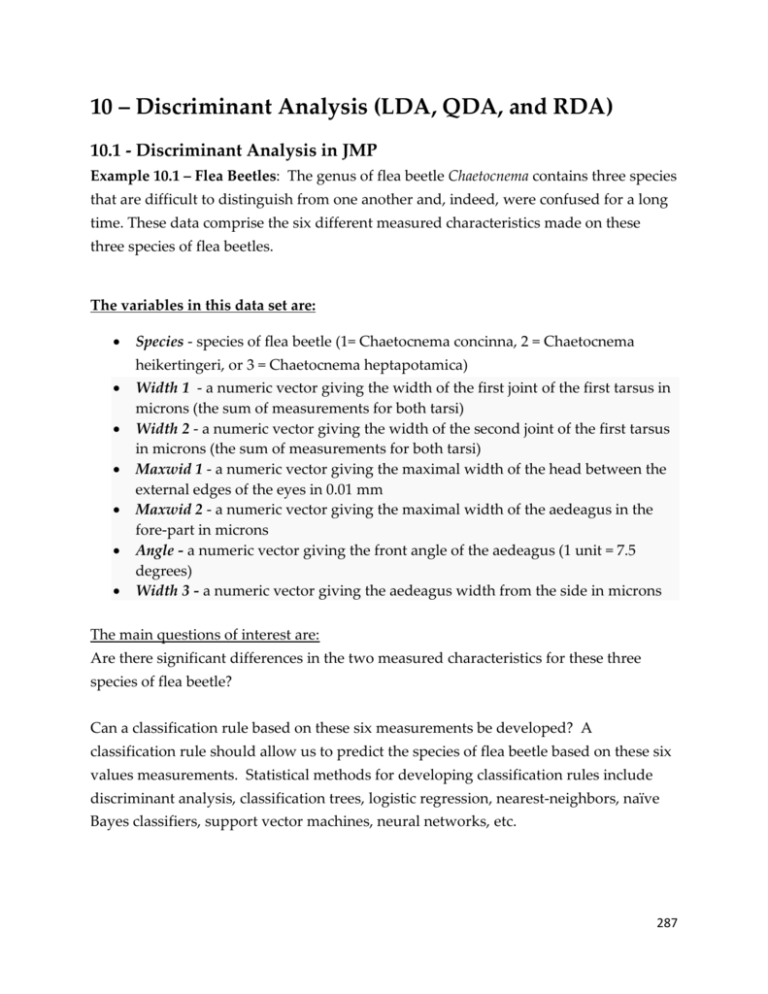

Example 10.1 – Flea Beetles: The genus of flea beetle Chaetocnema contains three species that are difficult to distinguish from one another and, indeed, were confused for a long time. These data comprise six characteristics measured on beetles from these three species.

The variables in this data set are:

Species - species of flea beetle (1 = Chaetocnema concinna, 2 = Chaetocnema heikertingeri, or 3 = Chaetocnema heptapotamica)

Width 1 - a numeric vector giving the width of the first joint of the first tarsus in microns (the sum of measurements for both tarsi)

Width 2 - a numeric vector giving the width of the second joint of the first tarsus in microns (the sum of measurements for both tarsi)

Maxwid 1 - a numeric vector giving the maximal width of the head between the external edges of the eyes in 0.01 mm

Maxwid 2 - a numeric vector giving the maximal width of the aedeagus in the fore-part in microns

Angle - a numeric vector giving the front angle of the aedeagus (1 unit = 7.5 degrees)

Width 3 - a numeric vector giving the aedeagus width from the side in microns

The main questions of interest are:

Are there significant differences in the six measured characteristics for these three species of flea beetle?

Can a classification rule based on these six measurements be developed? A classification rule should allow us to predict the species of flea beetle based on these six measurements. Statistical methods for developing classification rules include discriminant analysis, classification trees, logistic regression, nearest-neighbors, naïve Bayes classifiers, support vector machines, neural networks, etc.


Analysis in JMP

First we use graphical techniques such as histograms, comparative boxplots and scatterplots with color coding in an attempt to derive a classification rule for the three species of flea beetle. This can be done as follows:

Color coding - From the Rows menu select Color/Marker by Col... then check the box labeled Marker (color will already be checked by default). Now highlight Species with the mouse and click OK. In subsequent plots the different species of flea beetle will be color coded and a different plotting symbol will be used for each species. Look at the spreadsheet to see the correspondence.

Histograms - Select Distribution of Y from the Analyze menu and add Species and all six of the measurements to the right hand box, then click OK. Now use the mouse to click on the bars corresponding to the different species of beetle in the bar graph for Species, carefully observing what happens in the histograms for the six measured characteristics. This is an example of linked viewing: data that are highlighted or selected in one plot become highlighted in all other plots.

What can we conclude from this preliminary histogram analysis?


From the above displays we can see that Heptapot flea beetles have width measurements between 125 and 150. On the Angle variable, this species tends to have much smaller measurements than the other two species. At this point we might conjecture that an unclassified flea beetle with a width value between 125 and 150 and an angle measurement less than 12 would be classified as a Heptapot flea beetle. Similar observations can be made by clicking on the bars for the other two species in the bar graph for Species.

Comparative Boxplots - Select Fit Y by X from the Analyze menu and add Species in

the X box and all six measurements in the Y box. Add boxplots and/or means diamonds

to the resulting plots by selecting these options in the Display menu which is located

below the plot.


Comparative Displays for Width 1

The plot at the top left gives comparative boxplots of the Width 1 variable for the three flea beetle species. We clearly see that Heikert beetles have the lowest width measurements in general, while Concinna beetles generally have the highest.

The Compare density option gives smoothed histograms, which can also be used to show univariate differences among the three species of flea beetle.


Comparative Displays for Angle

These graphs again show that Heptapot beetles have the smallest angle measurements in general. Another interesting plot to consider when looking at multivariate data where there are potentially different populations involved is the parallel coordinate plot. In a parallel coordinate plot, a set of parallel axes is drawn, one for each variable. A given row of data is then represented by a line that connects the value of that row on each corresponding axis. When you have predefined populations represented in your data, these plots can be particularly useful for finding distinguishing characteristics of these populations.

To obtain a parallel coordinate plot in JMP select Parallel Plot from the Graph menu

and place the variables of interest in the Y response box. You can place a grouping

variable in the X, Grouping box if you wish to see a parallel coordinate display for each

level of the grouping variable X. The results from both approaches are shown on the

following page.


At this point we can see that our classification rule could be stated as follows. If the angle is less than 12, classify a flea beetle as species Heptapot. If the angle is greater than 12, the beetle is most likely from species Concinna or Heikert. To distinguish Concinna from Heikert, the comparative boxplots for Maxwid 2 suggest that we could classify a flea beetle with angle greater than 12 as Concinna if its maximal width exceeds 133 or 134, and as Heikert otherwise.


To formally compare the species on these six characteristics we could perform a one-way analysis of variance (ANOVA) for each. This can be done by selecting Means/ANOVA from the Analysis menu below the plot. Pairwise multiple comparisons can be done using Tukey’s method by selecting Compare All Pairs from the Oneway Analysis pull-down menu.

ANOVA TABLE for Maximal Width 2

The p-value for the F-test of the null hypothesis that the population means for Maxwid 2 are all equal is less than .0001, indicating that at least two means are significantly different. To decide which means are significantly different we can use Tukey’s multiple comparison procedure, which examines all pairwise comparisons of the population means. The results are shown below.

Results of Tukey's Multiple Comparisons

Here we can see that the mean maximal widths differ for all species.
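For readers working in R rather than JMP, the one-way ANOVA and Tukey comparisons described above can be sketched as follows. This is only a minimal sketch under stated assumptions: the built-in iris data stand in for the flea beetle data (the beetle data frame is not shown in these notes), and Sepal.Width plays the role of Maxwid 2.

```r
# Assumption: iris (three species, numeric measurements) stands in for the beetle data.
fit <- aov(Sepal.Width ~ Species, data = iris)   # one-way ANOVA
anova_table <- summary(fit)[[1]]                 # columns: Df, Sum Sq, Mean Sq, F value, Pr(>F)
print(anova_table)

# Tukey's HSD: all pairwise comparisons of the species means
tukey <- TukeyHSD(fit, conf.level = 0.95)
print(tukey$Species)                             # diff, lwr, upr, p adj for each pair
```

A pair of species is declared different when its adjusted p-value is small, or equivalently when its interval (lwr, upr) excludes zero.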


To aid in the development of a simple classification rule we could also examine a scatterplot of Angle vs. Maxwid 2, making sure that the different species are still color coded (see above). This can be done by choosing Fit Y by X from the Analyze menu and placing Maxwid 2 in the X box and Angle in the Y box. We can add a density ellipse for each group to the plot by choosing Grouping Variable from the Analysis menu below the plot and highlighting Species as the variable to group on. Then select Density Ellipses with the desired percent confidence from the Analysis menu. The ellipses should contain the specified percent of the points for each group. Notice the nice separation of the density ellipses.

Scatterplot of Angle vs. Width

We can use this scatterplot to state a classification rule that can be used to identify species on the basis of these measurements. Clearly an angle less than 12 would indicate that the flea beetle is a Heptapot. Concinna and Heikert both appear to have angle measurements exceeding 12. However, by using the maximal width measurement we can distinguish between these two species: Concinna flea beetles have widths exceeding 134, while Heikert beetles have widths less than 134. To statistically formalize the procedure above we could perform discriminant analysis, which we will consider later in this tutorial.
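The graphical rule just stated can be written as a small function. This is a sketch only: the function name and the example measurements are hypothetical, and the treatment of boundary values (angle exactly 12, width exactly 134) is an assumption, since the text does not say how ties should be handled.

```r
# Hypothetical helper implementing the eyeballed rule from the scatterplot.
# Assumption: angle == 12 and maxwid2 == 134 fall on the "otherwise" side.
classify_beetle <- function(angle, maxwid2) {
  ifelse(angle < 12, "Heptapot",
         ifelse(maxwid2 > 134, "Concinna", "Heikert"))
}

# Three made-up beetles (angle in 7.5-degree units, Maxwid 2 in microns)
result <- classify_beetle(angle = c(10, 14, 14), maxwid2 = c(140, 140, 125))
print(result)   # "Heptapot" "Concinna" "Heikert"
```

Discriminant analysis replaces these hand-picked cutoffs with boundaries estimated from all six measurements.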


Rather than consider each of the six characteristics individually using ANOVA, we can use multivariate analysis of variance (MANOVA), which allows us to determine whether the three species differ on the six measured characteristics simultaneously. This is achieved by looking at the multivariate response consisting of all six measurements rather than each characteristic individually, as was done above with one-way ANOVA. To perform MANOVA in JMP, first select the Fit Model option in the Analyze menu. Place all six measurements in the Y box and place Species in the Effects in Model box, then select MANOVA from the Personality pull-down menu and select Run. When the analysis is completed, choose Identity from the menu below where it says Choose Response and click Done. Below you will see a variety of boxes with many different statistics and statistical tests. To test the main hypothesis that these measured characteristics differ from species to species, examine the results of the tests in the Species box. This box contains four different test statistics which all answer the question: do the means for these six measurements differ in any way from species to species? If the p-value is small for any of them, there is evidence that the means of the six measurements differ significantly from species to species. You can examine a profile of the mean values of the measurements for each species by examining the plot for Species in the Least Square Means box in the output. The table on the following page shows the results of the MANOVA for species.

MANOVA Results for Species Comparison

Here we can see that the p-values associated with the four test statistics are all less than .0001, which provides compelling evidence that the three species differ significantly on the six measured characteristics. To see the nature of these differences select Centroid Plot from the pull-down menu at the top of the Species box. The centroid plot for these data is shown on the following page.
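An R analogue of the MANOVA step can be sketched with the base function manova(). As an assumption, the built-in iris data (four measurements, three species) stand in for the six beetle measurements; the four test names are the ones accepted by summary() for a manova fit.

```r
# Assumption: iris stands in for the flea beetle data.
fit <- manova(cbind(Sepal.Length, Sepal.Width, Petal.Length, Petal.Width) ~ Species,
              data = iris)

# The four standard multivariate test statistics
tests <- c("Wilks", "Pillai", "Hotelling-Lawley", "Roy")
p_values <- sapply(tests, function(tt) {
  summary(fit, test = tt)$stats["Species", "Pr(>F)"]
})
print(p_values)   # one p-value per test statistic
```

All four tests address the same null hypothesis of equal mean vectors across species; they differ only in how the eigenvalues of the between-to-within matrix are combined.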


Canonical Centroid Plot

A 2-D canonical centroid plot is a plot of the first two discriminants from Fisher’s discriminant analysis. Fisher’s discriminant analysis is a method in which linear combinations of X1, …, Xp are found that maximally separate the groups. The first linear combination maximally separates the groups in one dimension; the second maximally separates the groups subject to the constraint that it is orthogonal to the first. Thus when the resulting linear combinations are plotted for each observation we obtain a scatterplot exhibiting zero correlation and, hopefully, good group separation. We can also visualize the results in 3-D by considering a third linear combination, provided there are more than three groups/populations.

The above plot confirms things we have already seen. Notice that the Concinna and Heikert centroid circles lie in the direction of the Angle ray, indicating that these two species have large angle measurements relative to Heptapot. The circle for Heikert lies in the direction of the Width 1 ray, indicating that these flea beetles have relatively large width measurements. In total, we see that a nice species separation is achieved.

The canonical centroid plot displays the results of discriminant analysis. Discriminant analysis, though related to MANOVA, is really a standalone method. There are two main approaches to classic discriminant analysis: Fisher’s method, which is discussed above, and a Bayesian approach in which the posterior probability of group membership is calculated assuming the X’s have an approximate multivariate normal distribution. In Fisher’s approach to discriminating between g groups, (g – 1) orthogonal linear combinations are found that maximally separate the groups, with the first linear combination providing the largest degree of separation, the second the next largest, and so on. Future observations are classified to the group they are closest to in the lower dimensional space created by these linear combinations.
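Fisher's discriminants can be inspected in R with lda() from the MASS package. The sketch below assumes the built-in iris data as a stand-in (g = 3 groups, so g - 1 = 2 discriminants); it extracts the discriminant scores, checks that they are uncorrelated, and classifies each observation to the nearest group centroid in the discriminant space.

```r
library(MASS)   # lda()

# Assumption: iris stands in for the flea beetle data.
fit    <- lda(Species ~ ., data = iris)
scores <- predict(fit)$x                 # n x (g - 1) matrix with columns LD1, LD2
print(dim(scores))
ld_cor <- cor(scores[, "LD1"], scores[, "LD2"])   # essentially zero

# Group centroids in the discriminant space
centroids <- aggregate(scores, by = list(Species = iris$Species), FUN = mean)
cent <- as.matrix(centroids[, c("LD1", "LD2")])

# Squared Euclidean distance from every observation to every centroid
d2 <- sapply(seq_len(nrow(cent)), function(k) {
  rowSums(sweep(scores, 2, cent[k, ])^2)
})
nearest <- centroids$Species[max.col(-d2, ties.method = "first")]

# With equal priors (as in iris) the nearest-centroid rule agrees with predict()
agreement <- mean(nearest == predict(fit)$class)
print(agreement)
```

Plotting scores[, 1] against scores[, 2], colored by species, gives the R counterpart of the canonical plot.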


In LDA/QDA we classify observations to groups based on their posterior “probability” of group membership. The probabilities are calculated assuming the populations have multivariate normal distributions. In linear discriminant analysis (LDA) we assume that the populations, while having different mean vectors, share the same variance-covariance structure. In quadratic discriminant analysis (QDA) we allow the variance-covariance structure to differ across populations. QDA requires more observations per group ($n_i > p$ for all $i$) because the variance-covariance matrix is estimated separately for each group, whereas LDA uses a pooled estimate of the common variance-covariance structure. Because QDA effectively estimates more “parameters”, it is more flexible and can discriminate better than LDA when the covariance structures truly differ, although the extra parameters are estimated with more variability when group sizes are small. Regularized discriminant analysis (RDA) strikes a balance between the two extremes by essentially taking a weighted average of the variance-covariance estimates of the two approaches. You can think of it as a shrunken version of QDA, where the shrinkage is towards LDA.

To classify future observations a Bayesian approach is used where the posterior

probability of group membership for each group is computed as

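$$P(\text{Group} = k \mid \boldsymbol{x}) = \frac{\exp\left(-0.5\, D_k^{*2}(\boldsymbol{x})\right)}{\sum_{i=1}^{g} \exp\left(-0.5\, D_i^{*2}(\boldsymbol{x})\right)} \quad \text{for } k = 1, \ldots, g$$

where,

$$D_i^{*2}(\boldsymbol{x}) = (\boldsymbol{x} - \bar{\boldsymbol{x}}_i)' \boldsymbol{S}_i^{-1} (\boldsymbol{x} - \bar{\boldsymbol{x}}_i) + \ln|\boldsymbol{S}_i| - 2 \ln p_i \quad \text{for QDA with unequal priors}$$

$$D_i^{*2}(\boldsymbol{x}) = (\boldsymbol{x} - \bar{\boldsymbol{x}}_i)' \boldsymbol{S}_i^{-1} (\boldsymbol{x} - \bar{\boldsymbol{x}}_i) + \ln|\boldsymbol{S}_i| \quad \text{for QDA with equal priors}$$

$$D_i^{*2}(\boldsymbol{x}) = (\boldsymbol{x} - \bar{\boldsymbol{x}}_i)' \boldsymbol{S}_p^{-1} (\boldsymbol{x} - \bar{\boldsymbol{x}}_i) - 2 \ln p_i \quad \text{for LDA with unequal priors}$$

$$D_i^{*2}(\boldsymbol{x}) = (\boldsymbol{x} - \bar{\boldsymbol{x}}_i)' \boldsymbol{S}_p^{-1} (\boldsymbol{x} - \bar{\boldsymbol{x}}_i) \quad \text{for LDA with equal priors}$$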

and

$$\boldsymbol{S}_p = \frac{\sum_{i=1}^{g} (n_i - 1)\, \boldsymbol{S}_i}{\sum_{i=1}^{g} (n_i - 1)}$$

is the pooled estimate of the common variance-covariance matrix in LDA

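and $p_i$ is the prior probability of being from population or group $i$. For RDA we use the same formula as QDA, with the sample variance-covariance matrix $\boldsymbol{S}_i$ replaced by

$$\boldsymbol{S}_i^* = (1 - \lambda)\, \boldsymbol{S}_i + \lambda\, \boldsymbol{S}_p$$

so that 𝜆 = 0 leaves $\boldsymbol{S}_i$ unchanged (QDA) and 𝜆 = 1 replaces it with $\boldsymbol{S}_p$ (LDA).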
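The posterior probability formula can be checked numerically. The sketch below computes the QDA distance with unequal priors, the quadratic form in the group covariance matrix plus ln|S_i| minus 2 ln p_i, by hand and compares the resulting posteriors with those returned by qda() in the MASS package. The iris data are an assumed stand-in, and the priors are taken to be the group proportions, which is also the default in qda().

```r
library(MASS)   # qda() is used only to cross-check the hand computation

# Assumption: iris stands in for the course data.
X   <- as.matrix(iris[, 1:4])
grp <- iris$Species

# D_i^{*2}(x) for QDA with unequal priors, evaluated for every row of X
d2 <- sapply(levels(grp), function(g) {
  Xg  <- X[grp == g, , drop = FALSE]
  S   <- cov(Xg)                       # S_i
  p_i <- mean(grp == g)                # prior = group proportion
  mahalanobis(X, colMeans(Xg), S) +    # (x - xbar_i)' S_i^{-1} (x - xbar_i)
    as.numeric(determinant(S, logarithm = TRUE)$modulus) -   # + ln|S_i|
    2 * log(p_i)                       # - 2 ln p_i
})

# Posterior probabilities: exp(-0.5 D^2), normalized across the g groups
post <- exp(-0.5 * d2)
post <- post / rowSums(post)

fit      <- qda(Species ~ ., data = iris)
max_diff <- max(abs(post - predict(fit)$posterior))
print(max_diff)   # should be at rounding-error level
```

Swapping cov(Xg) for the pooled matrix and dropping the log-determinant term gives the corresponding LDA distances.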


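If the parameter 𝛾 is different from 0, then another convex combination is formed based on $\boldsymbol{S}_i^*$:

$$\boldsymbol{S}_\gamma = (1 - \gamma)\, \boldsymbol{S}_i^* + \frac{\gamma}{p}\, \mathrm{tr}(\boldsymbol{S}_i^*)\, \boldsymbol{I}$$

where $\boldsymbol{I}$ is the $p \times p$ identity matrix.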
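The two-step regularization of the covariance matrix can be sketched as a short function. The function name rda_cov is hypothetical, the iris data are an assumed stand-in, and the sketch uses the convention of the corner cases described in this section: lambda = 0 keeps the group matrix (QDA) and lambda = 1 uses the pooled matrix (LDA).

```r
# Hypothetical helper: regularized covariance for one group.
# Convention (assumption): lambda = 0 -> S_i (QDA), lambda = 1 -> S_p (LDA).
rda_cov <- function(S_i, S_p, lambda, gamma) {
  p      <- ncol(S_i)
  S_star <- (1 - lambda) * S_i + lambda * S_p                         # shrink toward pooled
  (1 - gamma) * S_star + (gamma / p) * sum(diag(S_star)) * diag(p)    # shrink toward scaled identity
}

# Assumption: iris stands in for the course data.
X      <- as.matrix(iris[, 1:4])
grp    <- iris$Species
S_list <- lapply(split(as.data.frame(X), grp), cov)   # S_i for each group
n_i    <- as.vector(table(grp))
S_p    <- Reduce(`+`, Map(function(S, n) (n - 1) * S, S_list, n_i)) / sum(n_i - 1)
S_1    <- S_list[["setosa"]]

qda_corner <- rda_cov(S_1, S_p, lambda = 0, gamma = 0)   # equals S_1
lda_corner <- rda_cov(S_1, S_p, lambda = 1, gamma = 0)   # equals S_p
nm_corner  <- rda_cov(S_1, S_p, lambda = 1, gamma = 1)   # multiple of the identity
print(round(nm_corner, 4))
```

At gamma = 1 the matrix is proportional to the identity, which is why that edge of the (lambda, gamma) plane corresponds to nearest-means classification in Euclidean distance.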

More on RDA: The values for the two regularization parameters, 0 ≤ 𝜆 ≤ 1 and 0 ≤ 𝛾 ≤ 1, are chosen to jointly minimize an unbiased estimate of future misclassification risk, which we could obtain using some form of cross-validation. Regularized discriminant analysis provides a fairly rich class of regularization alternatives. The four corners defining the extremes of the (𝜆, 𝛾) plane represent well-known classification procedures. The lower left corner (𝜆 = 0, 𝛾 = 0) represents QDA. The lower right corner (𝜆 = 1, 𝛾 = 0) represents LDA. The upper right corner (𝜆 = 1, 𝛾 = 1) corresponds to the nearest-means classifier, where an observation is assigned to the class with the closest mean in Euclidean distance; this could be changed to statistical distance if we first standardize the 𝑋𝑖's. The upper left corner (𝜆 = 0, 𝛾 = 1) represents a weighted nearest-means classifier, with the class weights inversely proportional to the average variance of the measurement variables within the class. Holding 𝛾 fixed at 0 and varying 𝜆 produces models between QDA and LDA. Holding 𝜆 fixed at 0 and increasing 𝛾 attempts to un-bias the sample-based eigenvalue estimates. Holding 𝜆 fixed at 1 and increasing 𝛾 gives rise to a ridge-regression analog for LDA. A good pair of values for 𝜆 and 𝛾 is not likely to be known in advance, so we must use cross-validation to find good values. The misclassification loss averaged over the training/test samples is then used as an estimate of future misclassification risk. We can choose a grid of points in the (𝜆, 𝛾) plane (0 ≤ 𝜆 ≤ 1, 0 ≤ 𝛾 ≤ 1), evaluate the cross-validated estimate of misclassification risk at each point on the grid, and then choose the point with the smallest estimated misclassification rate.
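The grid search over the (lambda, gamma) plane can be sketched in base R. This is an illustration under several assumptions, not the procedure used by JMP or by any particular package: rda_classify is a hypothetical hand-written classifier built from the distance and covariance formulas in this section, priors are taken to be the training proportions, lambda = 0 is QDA and lambda = 1 is LDA, the iris data stand in for the course data, and 5 folds with a 5 x 5 grid are arbitrary choices.

```r
# Hypothetical RDA classifier: smallest D_i^{*2} with regularized covariance.
rda_classify <- function(Xtr, ytr, Xte, lambda, gamma) {
  p   <- ncol(Xtr)
  lev <- levels(ytr)
  n_i <- as.vector(table(ytr))
  S_i <- lapply(lev, function(g) cov(Xtr[ytr == g, , drop = FALSE]))
  S_p <- Reduce(`+`, Map(function(S, n) (n - 1) * S, S_i, n_i)) / sum(n_i - 1)
  d2 <- sapply(seq_along(lev), function(i) {
    S <- (1 - lambda) * S_i[[i]] + lambda * S_p
    S <- (1 - gamma) * S + (gamma / p) * sum(diag(S)) * diag(p)
    mahalanobis(Xte, colMeans(Xtr[ytr == lev[i], , drop = FALSE]), S) +
      as.numeric(determinant(S, logarithm = TRUE)$modulus) -
      2 * log(n_i[i] / sum(n_i))
  })
  factor(lev[max.col(-d2, ties.method = "first")], levels = lev)
}

# Assumption: iris stands in for the course data.
set.seed(1)
X    <- as.matrix(iris[, 1:4])
y    <- iris$Species
fold <- sample(rep(1:5, length.out = nrow(X)))   # random 5-fold assignment

grid <- expand.grid(lambda = seq(0, 1, by = 0.25), gamma = seq(0, 1, by = 0.25))
grid$cv_mcr <- apply(grid, 1, function(par) {
  mean(sapply(1:5, function(k) {
    pred <- rda_classify(X[fold != k, ], y[fold != k], X[fold == k, ],
                         par[["lambda"]], par[["gamma"]])
    mean(pred != y[fold == k])                   # error rate on the held-out fold
  }))
})

best <- grid[which.min(grid$cv_mcr), ]           # first grid point with smallest CV error
print(best)
```

Because every grid point is scored on held-out folds, the selected pair is not tuned to a single test set.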

With all methods (LDA, QDA, and RDA) the posterior probabilities for each observation in the training data are computed and group membership is predicted. The performance of the discriminant analysis is then reported as the number/percent misclassified in the training data, and in the test data if available. In JMP, future observations can be classified by adding them to the data table.
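The same training-data summary can be sketched in R with lda() from the MASS package; the iris data are again an assumed stand-in. Each observation is assigned to the group with the largest posterior probability, and the misclassification rate on the training data is computed from the resulting confusion matrix.

```r
library(MASS)   # lda()

# Assumption: iris stands in for the course data.
fit  <- lda(Species ~ ., data = iris)
pred <- predict(fit)                       # predictions for the training data

print(round(head(pred$posterior, 3), 4))   # posterior probabilities, one column per group

# Confusion matrix (rows = predicted, columns = actual) and training error rate
conf <- table(predicted = pred$class, actual = iris$Species)
print(conf)
aper <- 1 - sum(diag(conf)) / sum(conf)
print(aper)
```

To score new observations instead, pass a data frame with the same predictor columns through the newdata argument of predict().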


LDA/QDA Details


LDA/QDA Details (continued)


To perform LDA/QDA/RDA in JMP, choose Discriminant from the Multivariate

Methods option within the Analyze menu as shown below.

Put all six measurements in the Y, Covariates box and Species in the X, Categories box.

The results are shown below:

Here we can see that linear discriminant analysis misclassifies none of the flea beetles in

these training data.

A contingency table showing the classification results is displayed below the table of posterior species probabilities for each observation. This table is sometimes referred to as a confusion matrix.


Confusion Matrix for Species Classification

None of the beetles are misclassified, giving us an apparent error rate (APER) of .000 or 0%. The apparent error rate is analogous to the RSS for the training data fit in a regression problem. Applying either QDA (which actually might be recommended given that the variability of some of the characteristics differs across species) or RDA results in perfect classification of the species as well.

We can save the distances to each group along with the posterior probabilities for each species to our spreadsheet by selecting Save Formulas from the Score Options pull-out menu as shown below.

Having saved these formulae to the data spreadsheet we can use the results of our discriminant analysis to classify new observations. For example, adding two rows to our spreadsheet and entering the measurements for two yet-to-be-classified beetles will produce their predicted species based on our model.


The predictions for the two new beetles are shown below: the first is classified as Heikert and the second as Concinna.

Below is a visualization of the classification of the new flea beetles using the first two

discriminants.


Example 2: Italian Olive Oils: The goal here is to classify olive oils in terms of the area of Italy they are from based upon fatty acid composition. There are two geographic classifications in these data. The first classification is nine individual growing areas in Italy (Area Name): East Liguria, West Liguria, Umbria, North-Apulia, South-Apulia, Sicily, Coastal Sardinia, Inland Sardinia, and Calabria. A broader classification is the growing region in Italy (Region Name): Northern, Southern, and Sardinia. The map below should help in your understanding of where these areas/regions are located in Italy.

Puglia = Apulia

Sardegna = Sardinia

Sicilia = Sicily

The bar graph above shows the number of olive oils

in these data from each area. The plots on the left

show a parallel coordinate plot and comparative

boxplots for palmitic acid across areas.


The scatterplot matrix on the right above clearly shows that LDA is NOT appropriate for these

data. Why? Thus we will consider QDA first.

We can see the overall misclassification rate on the entire training data set is 11/572 = 1.923%. We can randomly choose some cases to use as test data.

The end result of doing this will be a column with 0 for training and 1 for test cases. We can then Hide and Exclude the test cases, and when we run a discriminant analysis (LDA, QDA, or RDA) it will use the Excluded cases as test cases and report the misclassification rate on those cases, which were not used to perform the discriminant analysis.


We can create training/test cases in JMP using the Initialize Data feature and then we

can use a bar graph of this column to Hide and Exclude the test cases.

The results from a QDA now contain a misclassification rate (APER) for the training

cases and the test cases (Excluded).

The APER = 1.312% and the Test Case Error Rate = 4.712%. Next we consider using

regularized discriminant analysis for these data. We will again use the training and test

cases to obtain a measure of prediction error.


Results from RDA with 𝜆 = .6667 and 𝛾 = 0 (giving a test case error rate = 8.38%)

and using 𝜆 = 0.1 and 𝛾 = 0 (giving a test case error rate = 4.19%)

There is no easy method for finding “optimal” values of 𝜆 and 𝛾 in JMP; it is pretty much trial and error.


10.2 - LDA, QDA, and RDA in R

Example 10.2: Italian Olive Oils (revisited)

> dim(Olives)
[1] 572  12
> names(Olives)
 [1] "Region.name" "Area.name"   "Region"      "Area"        "palmitic"
 [6] "palmitoleic" "strearic"    "oleic"       "linoleic"    "eicosanoic"
[11] "linolenic"   "eicosenoic"
> sam = sample(1:572,floor(.3333*572),replace=F)
> OA.test = Olives[sam,-c(1,3,4,12)]     # remove Region identifiers
> OA.train = Olives[-sam,-c(1,3,4,12)]
> dim(OA.test)
[1] 190   8
> dim(OA.train)
[1] 382   8
> names(OA.train)
[1] "Area.name"   "palmitic"    "palmitoleic" "strearic"    "oleic"
[6] "linoleic"    "eicosanoic"  "linolenic"

> table(OA.test$Area.name)     # make sure all areas are in test cases!

        Calabria Coastal-Sardinia     East-Liguria  Inland-Sardinia
              17               12               17               23
    North-Apulia           Sicily     South-Apulia           Umbria
              10                8               70               18
    West-Liguria
              15
> table(OA.train$Area.name)

        Calabria Coastal-Sardinia     East-Liguria  Inland-Sardinia
              39               21               33               42
    North-Apulia           Sicily     South-Apulia           Umbria
              15               28              136               33
    West-Liguria
              35

> area.lda = lda(Area.name~.,data=OA.train)

> pred.lda = predict(area.lda,newdata=OA.test)

> misclassification(pred.lda$class,OA.test$Area.name)

Table of Misclassification (row = predicted, col = actual)
                  y
fit                Calabria Coastal-Sardinia East-Liguria Inland-Sardinia
  Calabria               16                0            0               0
  Coastal-Sardinia        0               11            0               0
  East-Liguria            0                0           10               0
  Inland-Sardinia         0                1            0              21
  North-Apulia            0                0            4               0
  Sicily                  1                0            0               1
  South-Apulia            0                0            0               1
  Umbria                  0                0            0               0
  West-Liguria            0                0            3               0
                  y
fit                North-Apulia Sicily South-Apulia Umbria West-Liguria
  Calabria                    0      1            0      0            0
  Coastal-Sardinia            0      0            0      0            0
  East-Liguria                2      0            0      1            0
  Inland-Sardinia             0      0            0      0            0
  North-Apulia                7      1            0      0            0
  Sicily                      0      6            4      0            0
  South-Apulia                0      0           66      0            0
  Umbria                      1      0            0     17            0
  West-Liguria                0      0            0      0           15

Misclassification Rate = 0.111
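The misclassification() function called in this session is not defined in these notes. A minimal sketch of a helper with similar printed output might look like the following; the name misclassification_sketch is hypothetical, and it assumes the predicted and actual values are factors with identical levels in the same order, so that the diagonal of the table holds the correct classifications.

```r
# Hypothetical stand-in for the course helper misclassification().
# Assumption: fit and y are factors sharing the same levels in the same order.
misclassification_sketch <- function(fit, y) {
  tab <- table(fit, y)                          # rows = predicted, cols = actual
  mcr <- 1 - sum(diag(tab)) / sum(tab)          # proportion off the diagonal
  cat("Table of Misclassification (row = predicted, col = actual)\n")
  print(tab)
  cat("Misclassification Rate =", signif(mcr, 3), "\n")
  invisible(mcr)
}

# Toy example with made-up labels: one of five predictions is wrong
actual <- factor(c("a", "a", "b", "b", "c"), levels = c("a", "b", "c"))
pred   <- factor(c("a", "b", "b", "b", "c"), levels = c("a", "b", "c"))
rate   <- misclassification_sketch(pred, actual)   # rate is 0.2
```

In the LDA table above, the diagonal sums to 169 of the 190 test cases, which gives the reported rate of 21/190 = 0.111.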

> area.qda = qda(Area.name~.,data=OA.train)

> pred.qda = predict(area.qda,newdata=OA.test)

> misclassification(pred.qda$class,OA.test$Area.name)

Table of Misclassification (row = predicted, col = actual)
                  y
fit                Calabria Coastal-Sardinia East-Liguria Inland-Sardinia
  Calabria               14                0            0               0
  Coastal-Sardinia        0               11            0               1
  East-Liguria            0                0           16               0
  Inland-Sardinia         0                0            0              21
  North-Apulia            0                0            0               0
  Sicily                  2                0            0               0
  South-Apulia            1                1            0               1
  Umbria                  0                0            0               0
  West-Liguria            0                0            1               0
                  y
fit                North-Apulia Sicily South-Apulia Umbria West-Liguria
  Calabria                    0      1            0      0            0
  Coastal-Sardinia            0      0            0      0            0
  East-Liguria                2      0            0      0            0
  Inland-Sardinia             0      0            0      0            0
  North-Apulia                6      0            0      0            0
  Sicily                      1      7            3      0            0
  South-Apulia                1      0           67      0            0
  Umbria                      0      0            0     18            0
  West-Liguria                0      0            0      0           15

Misclassification Rate = 0.0789

> area.rda = rda(Area.name~.,data=OA.train)

> pred.rda = predict(area.rda,newdata=OA.test)

> misclassification(pred.qda$class,OA.test$Area.name)

Table of Misclassification (row = predicted, col = actual)
                  y
fit                Calabria Coastal-Sardinia East-Liguria Inland-Sardinia
  Calabria               14                0            0               0
  Coastal-Sardinia        0               11            0               1
  East-Liguria            0                0           16               0
  Inland-Sardinia         0                0            0              21
  North-Apulia            0                0            0               0
  Sicily                  2                0            0               0
  South-Apulia            1                1            0               1
  Umbria                  0                0            0               0
  West-Liguria            0                0            1               0
                  y
fit                North-Apulia Sicily South-Apulia Umbria West-Liguria
  Calabria                    0      1            0      0            0
  Coastal-Sardinia            0      0            0      0            0
  East-Liguria                2      0            0      0            0
  Inland-Sardinia             0      0            0      0            0
  North-Apulia                6      0            0      0            0
  Sicily                      1      7            3      0            0
  South-Apulia                1      0           67      0            0
  Umbria                      0      0            0     18            0
  West-Liguria                0      0            0      0           15

Misclassification Rate = 0.0789

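Same as QDA! Note, however, that the call above scores pred.qda$class rather than pred.rda$class, so this table necessarily reproduces the QDA results; scoring the RDA fit itself requires misclassification(pred.rda$class,OA.test$Area.name).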

RDA help file from the package klaR


We clearly need to consider changing the regularization parameters if we are going to see

any benefit from using RDA vs. LDA/QDA.

> area.rda = rda(Area.name~.,data=OA.train,lambda=.1,gamma=0)

> pred.rda = predict(area.rda,newdata=OA.test)

> misclassification(pred.rda$class,OA.test$Area.name)

Table of Misclassification (row = predicted, col = actual)
                  y
fit                Calabria Coastal-Sardinia East-Liguria Inland-Sardinia
  Calabria               16                0            0               0
  Coastal-Sardinia        0               12            0               1
  East-Liguria            0                0           16               0
  Inland-Sardinia         0                0            0              21
  North-Apulia            0                0            0               0
  Sicily                  0                0            0               0
  South-Apulia            1                0            0               1
  Umbria                  0                0            0               0
  West-Liguria            0                0            1               0
                  y
fit                North-Apulia Sicily South-Apulia Umbria West-Liguria
  Calabria                    0      1            0      0            0
  Coastal-Sardinia            0      0            0      0            0
  East-Liguria                2      0            0      0            0
  Inland-Sardinia             0      0            0      0            0
  North-Apulia                6      0            0      0            0
  Sicily                      2      7            4      0            0
  South-Apulia                0      0           66      0            0
  Umbria                      0      0            0     18            0
  West-Liguria                0      0            0      0           15

Misclassification Rate = 0.0684

We can write a simple function that searches a 𝑘 × 𝑘 grid of (𝜆, 𝛾) combinations in order to find “optimal” values for these tuning parameters. The function assumes the response is in the first column of both the training and test data frames.

# Requires rda() from the klaR package.
find.lamgam = function(formula,train,test,k=5){
  lambda = seq(0,1,length=k)
  gamma = seq(0,1,length=k)
  mcr = rep(0,as.integer(k^2))
  ntest = dim(test)[1]
  lg.grid = expand.grid(lambda=lambda,gamma=gamma)     # all k^2 (lambda, gamma) pairs
  for (i in 1:as.integer(k^2)){
    temp = rda(formula,data=train,lambda=lg.grid[i,1],gamma=lg.grid[i,2])
    pred = predict(temp,newdata=test)$class
    numinc = ntest - sum(diag(table(pred,test[,1])))   # number of test cases misclassified
    mcr[i] = numinc/ntest
  }
  cbind(lg.grid,mcr)
}


> find.lamgam(formula=Area.name~.,train=OA.train,test=OA.test,k=10)

       lambda     gamma        mcr
1   0.0000000 0.0000000 0.07894737
2   0.1111111 0.0000000 0.06842105
3   0.2222222 0.0000000 0.07894737
4   0.3333333 0.0000000 0.07368421
5   0.4444444 0.0000000 0.06842105
6   0.5555556 0.0000000 0.06842105
7   0.6666667 0.0000000 0.06315789
8   0.7777778 0.0000000 0.06842105
9   0.8888889 0.0000000 0.08421053
10  1.0000000 0.0000000 0.11052632
11  0.0000000 0.1111111 0.08421053
12  0.1111111 0.1111111 0.08947368
...
96  0.5555556 1.0000000 0.18421053
97  0.6666667 1.0000000 0.18421053
98  0.7777778 1.0000000 0.18421053
99  0.8888889 1.0000000 0.17894737
100 1.0000000 1.0000000 0.17894737

It appears that 𝜆 = .6667 and 𝛾 = 0 is approximately optimal for this train/test combination.
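Rather than scanning the printed grid by eye, the row with the smallest misclassification rate can be extracted directly. The sketch below retypes only the first ten rows of the output shown above (the 𝛾 = 0 edge of the grid), so it illustrates the lookup rather than reproducing the full 100-row search; with the full result one would apply the same which.min() call to the data frame returned by find.lamgam().

```r
# First ten rows of the grid output shown above (gamma = 0), retyped by hand
grid <- data.frame(
  lambda = seq(0, 1, length.out = 10),
  gamma  = 0,
  mcr    = c(0.07894737, 0.06842105, 0.07894737, 0.07368421, 0.06842105,
             0.06842105, 0.06315789, 0.06842105, 0.08421053, 0.11052632)
)

best <- grid[which.min(grid$mcr), ]   # which.min() returns the first minimum if tied
print(best)                           # row 7: lambda = 0.6667, gamma = 0, mcr = 0.0632
```

A rate of 0.0632 corresponds to 12 of the 190 test cases being misclassified.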

We can use the partimat function to visualize the classification boundaries on a pairwise basis, using two of the 𝑋𝑖's at a time. The classification error rates are for discriminant analysis using the two displayed variables only, so the usefulness of these displays is questionable.

> partimat(Area.name~.,data=OA.train,method="lda",nplots.hor=4,nplots.ver=4)

Individual plot from LDA


> partimat(Area.name~.,data=OA.train,method="qda",nplots.hor=4,nplots.ver=4)

Individual plot from QDA


> partimat(Area.name~.,data=OA.train,method="rda",lambda=.6667,gamma=0,nplots.hor=4,nplots.ver=4)

An individual plot from RDA

> partimat(Area.name~.,data=OA.train,method="sknn",nplots.hor=4,nplots.ver=4)

> partimat(Area.name~.,data=OA.train,method="naiveBayes",nplots.hor=4,nplots.ver=4)

It is interesting to contrast the decision boundaries between the different methods of

classification we have discussed so far.
