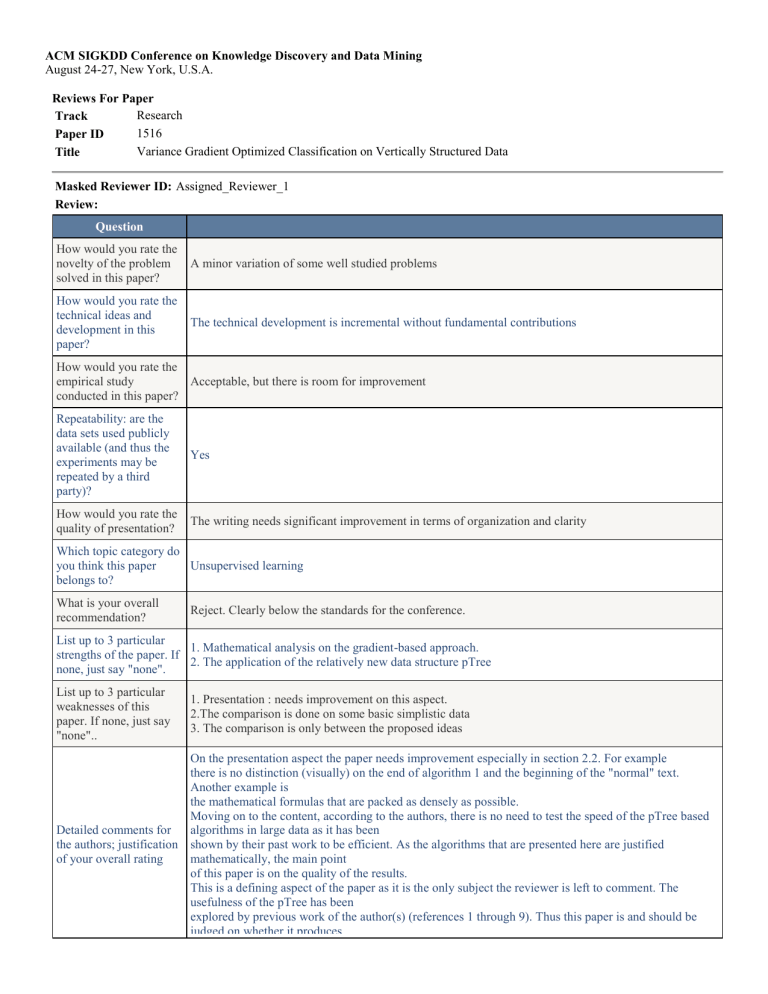


ACM SIGKDD Conference on Knowledge Discovery and Data Mining

August 24-27, New York, U.S.A.

Reviews For Paper

Track: Research

Paper ID: 1516

Title: Variance Gradient Optimized Classification on Vertically Structured Data

Masked Reviewer ID: Assigned_Reviewer_1

Review:

Question

How would you rate the novelty of the problem solved in this paper?

A minor variation of some well studied problems

How would you rate the technical ideas and development in this paper?

The technical development is incremental without fundamental contributions

How would you rate the empirical study conducted in this paper?

Acceptable, but there is room for improvement

Repeatability: are the data sets used publicly available (and thus the experiments may be repeated by a third party)?

Yes

How would you rate the quality of presentation?

The writing needs significant improvement in terms of organization and clarity

Which topic category do you think this paper belongs to?

Unsupervised learning

What is your overall recommendation?

Reject. Clearly below the standards for the conference.

List up to 3 particular strengths of the paper. If none, just say "none".

1. Mathematical analysis of the gradient-based approach.

2. The application of the relatively new pTree data structure.

List up to 3 particular weaknesses of this paper. If none, just say "none".

1. Presentation: needs improvement.

2. The comparison is done on basic, simplistic datasets.

3. The comparison is only among the proposed methods.

Detailed comments for the authors; justification of your overall rating

The presentation needs improvement, especially in Section 2.2. For example, there is no visual distinction between the end of Algorithm 1 and the beginning of the regular text.

Another example is the mathematical formulas, which are packed as densely as possible.

Moving on to the content: according to the authors, there is no need to test the speed of the pTree-based algorithms on large data, as their past work has shown them to be efficient. Since the algorithms presented here are justified mathematically, the main point of this paper is the quality of the results.

This is a defining aspect of the paper, as it is the only subject left for the reviewer to comment on. The usefulness of the pTree has been explored in previous work by the author(s) (references 1 through 9). Thus this paper is, and should be, judged on whether it produces good results.

Unfortunately, the experiments are conducted on simplistic datasets. These datasets are suited less to benchmarking than to a "proof of concept" approach. They lack complexity, and two of them (IRIS and SEEDS) have at most roughly 200 instances (with 4 and 7 features, respectively). Data of this kind is "prone" to displaying good results even with the most simplistic approaches.

The authors may avoid experiments on large data only because scale does not affect the efficiency of the approach, but with this argument they also "avoid" the complexity of larger datasets.

Moreover, as this is a clustering procedure, there should be a comparison with an algorithm that is not one of their 3 alternatives. Perhaps they should consider comparing against a state-of-the-art clustering algorithm in terms of quality, so that they can answer the actual question posed at the beginning of their paper:

"That is, if we structure our data vertically and process across those vertical structures (horizontally), can those horizontal algorithms compete quality-wise with the time-honored methods that process horizontal (record) data vertically?"

List of typos, grammatical errors and/or concrete suggestions to improve presentation

2.1 Please fix the first sentence; it is hard to make sense of.

2.2 Mathematical formulas and algorithms should stand out when compared to the text.

"Then our problem is to develop an algorithm to (1.2) ...." -> this makes no sense

1.1 and 1.2 are not logically connected, and their indentation also differs.

"Thus, 2. is equivalent to" -> which "2"?

4 "fact that the combining of" -> the combination

"whereas for horizontally structured data is doubles (at least)." -> is double

"pTree compression is design to improve speed more and" -> is designed

Masked Reviewer ID: Assigned_Reviewer_2

Review:

Question

How would you rate the novelty of the problem solved in this paper?

A minor variation of some well studied problems

How would you rate the technical ideas and development in this paper?

The technical development is incremental without fundamental contributions

How would you rate the empirical study conducted in this paper?

Not thorough, or even faulty

Repeatability: are the data sets used publicly available (and thus the experiments may be repeated by a third party)?

Yes

How would you rate the quality of presentation?

The writing needs significant improvement in terms of organization and clarity

Which topic category do you think this paper belongs to?

Classification

What is your overall recommendation?

Reject. Clearly below the standards for the conference.

List up to 3 particular strengths of the paper. If none, just say "none".

none

List up to 3 particular weaknesses of this paper. If none, just say "none".

1. The statements are not clear.

2. Little related work is discussed.

3. The experiments are problematic.

Detailed comments for the authors; justification of your overall rating

This paper proposes classification methods based on predicate trees (pTrees) for large datasets. There are several problems:

1. The paper as a whole is poorly organized and unclear. It is only 6 pages long, while the limit is 10 pages, so there is enough space to state the problem and the algorithm more clearly.

2. Classification for “big data” is a well-studied problem, but the authors do not discuss other related work in this area.

3. Some proofs of the lemmas/theorems/corollaries are missing.

4. The authors claim that the proposed algorithms are very efficient and therefore useful for handling “big data”. However, the experiments use only very small UCI datasets (a few hundred examples each) and report no running times.

5. The experimental results of the proposed algorithms are not very strong. For example, on the Wine dataset it is easy to achieve 95% accuracy with many common classification methods, such as 1NN, yet your methods achieve only 62.7%, 66.7%, and 81.3% on this dataset.
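For reference, the 1NN baseline cited in point 5 takes very little code. The sketch below is a minimal pure-Python illustration under stated assumptions: the helper names `standardize` and `loo_1nn_accuracy` are hypothetical, distances are Euclidean, accuracy is estimated by leave-one-out, and features are z-scored first (the raw Wine attributes have very different scales, so 1NN is usually run on standardized features). The toy data is not the Wine dataset, and no Wine accuracy is implied by it.

```python
import math
from typing import List, Sequence


def standardize(X: List[List[float]]) -> List[List[float]]:
    """Z-score each feature column (mean 0, unit variance).

    A constant column gets a divisor of 1.0, so it is only centered.
    """
    n, d = len(X), len(X[0])
    means = [sum(row[j] for row in X) / n for j in range(d)]
    stds = []
    for j in range(d):
        var = sum((row[j] - means[j]) ** 2 for row in X) / n
        stds.append(math.sqrt(var) or 1.0)  # avoid division by zero
    return [[(row[j] - means[j]) / stds[j] for j in range(d)] for row in X]


def loo_1nn_accuracy(X: Sequence[Sequence[float]], y: Sequence[int]) -> float:
    """Leave-one-out accuracy of a 1-nearest-neighbour classifier."""
    correct = 0
    for i, xi in enumerate(X):
        best_dist, best_label = math.inf, None
        for j, xj in enumerate(X):
            if i == j:
                continue  # hold out the query point itself
            dist = sum((a - b) ** 2 for a, b in zip(xi, xj))  # squared Euclidean
            if dist < best_dist:
                best_dist, best_label = dist, y[j]
        correct += best_label == y[i]
    return correct / len(X)


# Hypothetical toy data: two well-separated clusters.
X = [[0.0, 0.1], [0.2, 0.0], [0.1, 0.2], [5.0, 5.1], [5.2, 4.9], [4.9, 5.0]]
y = [0, 0, 0, 1, 1, 1]
print(loo_1nn_accuracy(standardize(X), y))  # 1.0 on this separable toy data
```

The point of the sketch is only that such a baseline is cheap to run, so a quality comparison against it would be straightforward to add.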

Masked Reviewer ID: Assigned_Reviewer_3

Review:

Question

How would you rate the novelty of the problem solved in this paper?

A well established problem

How would you rate the technical ideas and development in this paper?

The technical development has some flaws

How would you rate the empirical study conducted in this paper?

Not thorough, or even faulty

Repeatability: are the data sets used publicly available (and thus the experiments may be repeated by a third party)?

Yes

How would you rate the quality of presentation?

The writing needs significant improvement in terms of organization and clarity

Which topic category do you think this paper belongs to?

Novel statistical techniques for big data

What is your overall recommendation?

Reject. Clearly below the standards for the conference.

List up to 3 particular strengths of the paper. If none, just say "none".

1. An interesting approach to using pTrees/vertically structured data (apparently similar to column stores) in data mining.

2. Formulation of the gradient of maximal variance (1st PC) using iteration in this context.

3. Addresses a paradigm-shifting basic question: "do we give up quality (accuracy) when horizontally processing vertical bit-slices compared to vertically processing horizontal records"?

List up to 3 particular weaknesses of this paper. If none, just say "none".

1. Paper is not yet complete; needs further development.

2. The performance evaluation section (considering 4 UCI datasets) provides only 3 accuracy results for each dataset.

3. Algorithm 1, on p.3, is incomplete and currently includes some typos.

Detailed comments for the authors; justification of your overall rating

It seems that the method proposed here finds the first principal component using something like the power method for computing eigenvectors. If the method in the paper, or the resulting gradient, is in fact different from this, it would help to clarify that. In Corollary 3, it is not clear why the complexity is O(n^2 log(1/ε)). (By the way, there is a right parenthesis missing.)
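To make the comparison concrete, here is the textbook power method for the first principal component on ordinary (horizontal) records. It is a minimal sketch, not the paper's pTree-based algorithm; the function names `covariance`, `first_pc`, and `rayleigh` are hypothetical. Each iteration is one d×d matrix-vector product, i.e. O(d^2) for d features, and the number of iterations needed to reach tolerance ε grows roughly like log(1/ε)/log(λ1/λ2), which is the usual source of an O(n^2 log(1/ε))-type bound when n denotes the dimension.

```python
import math
import random
from typing import List

Matrix = List[List[float]]


def covariance(X: Matrix) -> Matrix:
    """Population covariance matrix of the rows of X (one record per row)."""
    n, d = len(X), len(X[0])
    means = [sum(r[j] for r in X) / n for j in range(d)]
    C = [[0.0] * d for _ in range(d)]
    for r in X:
        c = [r[j] - means[j] for j in range(d)]  # centered record
        for a in range(d):
            for b in range(d):
                C[a][b] += c[a] * c[b]
    return [[v / n for v in row] for row in C]


def first_pc(C: Matrix, tol: float = 1e-10, max_iter: int = 1000, seed: int = 0) -> List[float]:
    """Power iteration: repeatedly apply C and renormalize.

    C is positive semidefinite, so the iterate does not flip sign and
    converges to the unit eigenvector of the largest eigenvalue
    (assuming that eigenvalue is strictly the largest).
    """
    d = len(C)
    rng = random.Random(seed)
    v = [rng.random() + 0.1 for _ in range(d)]  # strictly positive start vector
    norm = math.sqrt(sum(x * x for x in v))
    v = [x / norm for x in v]
    for _ in range(max_iter):
        w = [sum(C[i][j] * v[j] for j in range(d)) for i in range(d)]  # O(d^2)
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            return v  # C is the zero matrix; any direction is a "PC"
        w = [x / norm for x in w]
        if max(abs(a - b) for a, b in zip(w, v)) < tol:
            return w
        v = w
    return v


def rayleigh(C: Matrix, v: List[float]) -> float:
    """Variance captured along unit vector v, i.e. v^T C v."""
    d = len(C)
    return sum(v[i] * sum(C[i][j] * v[j] for j in range(d)) for i in range(d))


X = [[1, 1.1], [2, 1.9], [3, 3.2], [4, 3.9], [5, 5.1]]
C = covariance(X)
v = first_pc(C)
print(v, rayleigh(C, v))  # v is close to [0.71, 0.71], the x = y direction
```

If the paper's variance-gradient iteration differs from this update (for example in step size or in how the gradient is normalized), stating that difference explicitly would answer the question above.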

The relationship between pTrees and vertically structured data, on the one hand, and the column-store systems now used in Big Data applications, on the other, needs clarification. It might generate a lot of interest in pTrees if they differ from column-store systems in important ways.
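The idea of "horizontally processing vertical bit slices" can be illustrated with a small sketch. Under the assumption of a non-negative integer column, each bit position j becomes one bitset s_j (here a Python int, bit r set if row r has bit j set), and the mean and population variance follow from AND and popcount alone, using sum = Σ_j 2^j·popcount(s_j) and sum of squares = Σ_i Σ_j 2^(i+j)·popcount(s_i AND s_j). This is an illustrative assumption, not the authors' implementation: real pTrees also compress each slice into a tree, which is omitted, and the function names are hypothetical.

```python
from typing import List, Tuple


def to_bit_slices(values: List[int], width: int) -> List[int]:
    """Slice j is a bitset (Python int) whose bit r is bit j of row r's value."""
    slices = [0] * width
    for r, v in enumerate(values):
        if v < 0 or v >= 1 << width:
            raise ValueError("value out of range for the given bit width")
        for j in range(width):
            if (v >> j) & 1:
                slices[j] |= 1 << r
    return slices


def popcount(x: int) -> int:
    """Number of set bits (portable across Python 3 versions)."""
    return bin(x).count("1")


def column_stats(slices: List[int], n: int) -> Tuple[float, float]:
    """Mean and population variance computed only from the bit slices."""
    total = sum((1 << j) * popcount(s) for j, s in enumerate(slices))
    # v^2 = sum_i sum_j b_i b_j 2^(i+j); summing over rows turns b_i b_j
    # into the count of rows where both slices have a 1.
    sq = sum(
        (1 << (i + j)) * popcount(si & sj)
        for i, si in enumerate(slices)
        for j, sj in enumerate(slices)
    )
    mean = total / n
    return mean, sq / n - mean * mean


values = [3, 5, 7, 1]
slices = to_bit_slices(values, width=3)
print(column_stats(slices, len(values)))  # (4.0, 5.0)
```

Nothing in `column_stats` touches an individual record, which is the property the paper's question turns on; whether column stores already expose equivalent operations is the comparison the comment above asks the authors to make.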