Exercise 4: Simple operations on vector data


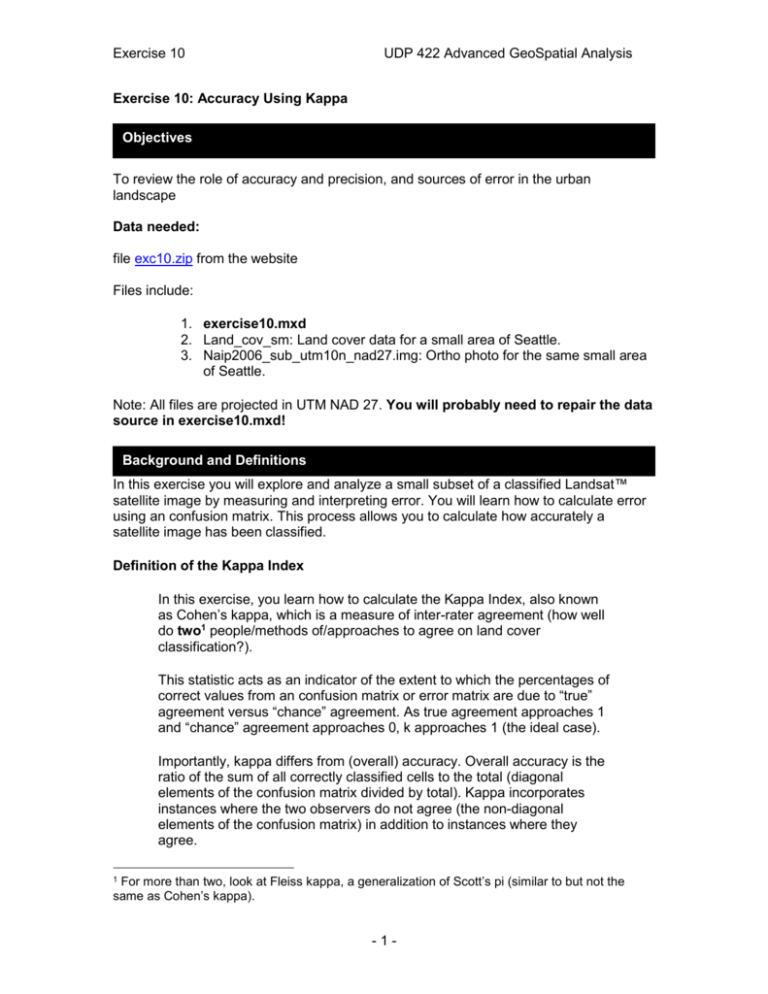

Exercise 10
UDP 422 Advanced GeoSpatial Analysis

Exercise 10: Accuracy Using Kappa

Objectives
To review the role of accuracy and precision, and the sources of error in the urban landscape.

Data needed: file exc10.zip from the website. Files include:
1. exercise10.mxd
2. Land_cov_sm: Land cover data for a small area of Seattle.
3. Naip2006_sub_utm10n_nad27.img: Orthophoto for the same small area of Seattle.

Note: All files are projected in UTM NAD 27. You will probably need to repair the data source in exercise10.mxd!

Background and Definitions
In this exercise you will explore and analyze a small subset of a classified Landsat TM satellite image by measuring and interpreting error. You will learn how to calculate error using a confusion matrix. This process allows you to calculate how accurately a satellite image has been classified.

Definition of the Kappa Index
In this exercise, you learn how to calculate the Kappa Index, also known as Cohen’s kappa, which is a measure of inter-rater agreement (how well do two [1] people, methods, or approaches agree on land cover classification?). This statistic indicates the extent to which the percentage of correct values in a confusion matrix (or error matrix) is due to “true” agreement versus “chance” agreement. As true agreement approaches 1 and “chance” agreement approaches 0, κ approaches 1 (the ideal case).

Importantly, kappa differs from (overall) accuracy. Overall accuracy is the ratio of the sum of all correctly classified cells to the total (the sum of the diagonal elements of the confusion matrix divided by the total). Kappa incorporates instances where the two observers do not agree (the non-diagonal elements of the confusion matrix) in addition to instances where they agree.

[1] For more than two, look at Fleiss’ kappa, a generalization of Scott’s pi (similar to but not the same as Cohen’s kappa).

Conceptually, κ can be defined as:

$$\kappa = \frac{\text{observed agreement} - \text{chance agreement}}{1 - \text{chance agreement}}$$

Note that there is disagreement about whether or how well ‘chance agreement’ is represented in this model [2], but that is beyond the scope of this class!

[2] E.g.: http://www.john-uebersax.com/stat/kappa2.htm...

Directions

Table 1: Land Cover Class Description

Value  Class                 Definition
1      Mixed Urban           A combination of urban materials and vegetation. Predominantly low- and mid-density residential.
2      Paved Urban           Surfaces with > 75% impermeable area. Includes high-density development, parking lots, streets, and roof tops.
3      Forest                Surface dominated by trees.
4      Grass, Shrubs, Crops  Agricultural fields, golf courses, lawns, and regrowth after clearcutting.
5      Bare Soil             Land that has been cleared, rocks, and sand.
6      Clearcut              Clearcut forest that has not had significant regrowth; very dry grass.
7      Water                 Lakes, reservoirs, and streams.

You will use ArcGIS Spatial Analyst to do this exercise. Be sure that the Spatial Analyst extension is checked in Tools > Extensions.

A: Creating the confusion matrix / contingency table:

1. Open the ArcMap document exercise10.mxd.
2. Note: when you open the ArcMap document you will need to set the data source for each layer to the data sources you unzipped.
3. There are two data files included here: a subset of a land cover grid with 6 classes and an aerial photograph corresponding to the same area in Seattle, WA [3]. Four sample grids have been drawn onto the land cover using a 3 x 3 cell grid. They are labeled with numbers and correspond to the four classes listed in Table 1: Mixed Urban, Paved Urban, Forest, and Water. We will use these sample units to check the classification accuracy of the four classes. Notice that three classes (bare soil, grass, and clearcuts) are being left out of our accuracy assessment because there are no good locations to sample these cover types in this photo.

[3] The western shore of Greenlake, to be precise.

4. Starting with the Mixed Urban grid (top of map), zoom into the general area and turn off the land cover layer. Be sure to zoom out far enough to see the photo image clearly without it being too pixelated (about 1:2,000 works well).
5. Now look at the piece of the aerial photo visible within each of the nine cells, and consider the definition of ‘mixed urban’ (“a combination of urban materials and vegetation”). How many do you think should be classified as mixed urban? If more than 50% of a grid cell matches your assessment of mixed urban, then count that grid cell as truly mixed urban. On the other hand, if more than 50% looks like another land cover type (water, paved, forest), then count that grid cell as one of these other land covers. Enter your estimated counts for each class under the Mixed Urban column of the matrix below (Table 2). The sum of the column should equal 9 because we have 9 sub-sample units per class sample.
6. Do the same for the following 3 classes: Paved, Forest, and Water. Count the pixels by class and record the sums in the confusion matrix.

Table 2: Confusion Matrix

                             Predicted Class
Actual Class (from image)    Mixed Urban   Paved   Forest   Water   Total (Σ)
Mixed Urban
Paved
Forest
Water
Total (Σ)                                                           36

Q1: Fill out the above Confusion Matrix.

Q2: Calculate the total number of correct pixels. The total number of correct pixels is the sum of the elements along the major diagonal (grey cells). From this, calculate the overall accuracy by dividing the total correct pixels (sum of the diagonal) by the total number of pixels, which is 36 (4 sample units * 9 sub-samples).

Q3: For one of the cells you classified differently than the computer did, take a screenshot and briefly explain why you chose to classify it the way you did. If you didn’t classify any cells differently than the computer, briefly explain your reasoning.
If there are some cells that appear to be grass/crops/shrubs (which are not options in the confusion matrix), how did you classify these? Why?

Q4: The User’s (Consumer’s) Accuracy reflects errors of commission (including a pixel in the predicted classification when it should be excluded). It is calculated by dividing the # of pixels correctly identified in a class by the total number predicted to be in that class. The Producer’s Accuracy reflects errors of omission (excluding a pixel that should have been included in the predicted classification). It is calculated by dividing the # of pixels correctly classified as a class by the number that should be in that class, as determined by your analysis of the image. A great resource is available here: http://biodiversityinformatics.amnh.org/index.php?section_id=34&content_id=131

Calculate both the User’s Accuracy and the Producer’s Accuracy for each class and write these numbers either in the table, or summarize them below. Please present your answer for both the Producer’s and User’s accuracy as a fraction (e.g. 2/14) and a decimal (e.g. 0.143).

                             Predicted Class
Actual Class (from image)    Mixed Urban   Paved   Forest   Water   Total (Σ)   Producer’s Accuracy
Mixed Urban
Paved
Forest
Water
Total (Σ)                                                           36
User’s Accuracy

B: Calculate the Kappa Index:

Table 3: Confusion matrix comparing image-referenced class to computer-based classification (Note: this is different data than the confusion matrix you generated earlier)

                             Predicted Class
Actual Class (from image)    Mixed Urban   Paved   Total (Σ)
Mixed Urban                  68            12
Paved                        7
Total (Σ)                                          112

We can calculate κ using this information. This will require you to calculate the expected cell frequencies (expected agreement) and compare them with the observed agreement. The table above is the observed agreement.
To calculate the expected agreement, you need to calculate the probability that they would either both classify a cell as ‘mixed urban’ randomly or that they would both classify a cell as ‘paved urban’ randomly. Each of these can (separately) be calculated by:

$$\frac{\text{total \# of predicted class}}{N} \times \frac{\text{total \# of actual class}}{N}$$

where [predicted class] is either Mixed Urban or Paved, and N is the total number of cells. Summing these estimates will give the probability of random agreement. This can be generalized to include more than two classes (so you could calculate the kappa for 4+ classes, such as for the confusion matrix you generated), but we’re keeping it simple here so you can see the basic idea behind the kappa statistic.

Please see these websites for more help:
http://epiville.ccnmtl.columbia.edu/popup/how_to_calculate_kappa.html
http://en.wikipedia.org/wiki/Cohen%27s_kappa

Q5: Calculate the overall accuracy (observed agreement) and the Kappa Index for the data in Table 3. Please show your work (e.g. write out how you calculated the probability of random agreement). Is the total Kappa coefficient above or below the overall accuracy?

Deliverable
Answer highlighted questions 1-5. Submit your assignment March 13, 2014.
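
For checking hand calculations, the quantities used in Parts A and B (overall accuracy, User’s and Producer’s Accuracy, expected agreement, and κ) can be sketched in a few lines of Python. This is only an illustrative sketch and not part of the original exercise: the function names are made up for this example, the matrix values are hypothetical (they are not the Table 3 data), and the code assumes rows hold the actual class and columns hold the predicted class, as in Table 2.

```python
# Hypothetical 2x2 confusion matrix, for illustration only.
# These counts are NOT the values from Table 3.
# Assumed layout: rows = actual class (from image), columns = predicted class.
EXAMPLE = [
    [20, 5],
    [10, 15],
]


def totals(matrix):
    """Return (row totals, column totals, grand total N)."""
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(col) for col in zip(*matrix)]
    return row_totals, col_totals, sum(row_totals)


def overall_accuracy(matrix):
    """Observed agreement: sum of the diagonal divided by N."""
    _, _, n = totals(matrix)
    return sum(matrix[i][i] for i in range(len(matrix))) / n


def users_accuracy(matrix):
    """Per class: correct cells / total predicted to be in that class.

    Assumes every class was predicted at least once (no zero column totals).
    """
    _, col_totals, _ = totals(matrix)
    return [matrix[i][i] / col_totals[i] for i in range(len(matrix))]


def producers_accuracy(matrix):
    """Per class: correct cells / total actually in that class.

    Assumes every class occurs at least once (no zero row totals).
    """
    row_totals, _, _ = totals(matrix)
    return [matrix[i][i] / row_totals[i] for i in range(len(matrix))]


def expected_agreement(matrix):
    """Chance agreement: sum over classes of (actual/N) * (predicted/N)."""
    row_totals, col_totals, n = totals(matrix)
    return sum((r / n) * (c / n) for r, c in zip(row_totals, col_totals))


def cohens_kappa(matrix):
    """Kappa = (observed - chance) / (1 - chance)."""
    observed = overall_accuracy(matrix)
    chance = expected_agreement(matrix)
    return (observed - chance) / (1 - chance)


if __name__ == "__main__":
    print("overall accuracy:   ", round(overall_accuracy(EXAMPLE), 3))
    print("user's accuracy:    ", [round(v, 3) for v in users_accuracy(EXAMPLE)])
    print("producer's accuracy:", [round(v, 3) for v in producers_accuracy(EXAMPLE)])
    print("expected agreement: ", round(expected_agreement(EXAMPLE), 3))
    print("kappa:              ", round(cohens_kappa(EXAMPLE), 3))
```

For these hypothetical counts the observed agreement works out to 35/50 = 0.7, the expected agreement to (25/50)(30/50) + (25/50)(20/50) = 0.5, and κ to (0.7 - 0.5) / (1 - 0.5) = 0.4. The same functions accept a larger square matrix, so they could also be pointed at a 4 x 4 matrix like the one built in Part A.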