AnnBib2

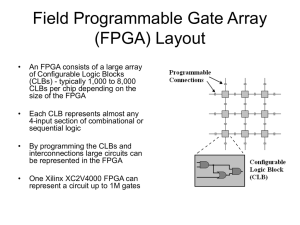

McCune, Robert Ryan
September 19th 2011
Grad OS, Striegel
Annotated Bibliography

1. (Book) S. Brown, et al. “Field-Programmable Gate Arrays,” Kluwer Academic Publishers, Norwell, Massachusetts, 1992.
Solid technical overview of FPGAs from the Notre Dame Library. Covers FPGAs versus VLSI, basics of logic blocks, available software, transfer to hardware, as well as industry discussion. Offers an introduction and overview of FPGAs that is valuable moving forward, and puts into perspective the work needed to take an FPGA board from start to finish.

2. (Book) Alford, Roger C. “Programmable Logic Designer’s Guide,” Howard W. Sams & Company, Indianapolis, 1989.
Could be a coursebook for Logic Design 101. Begins with a logic device overview that is helpful moving forward, then moves into Boolean logic, and gradually builds up to more elaborate PLDs. Pairs nicely with the journal articles from the early decades that present high-performance PLD designs.

3. (Journal Article) Charles Baugh and Bruce Wooley, “A Two’s Complement Parallel Array Multiplication Algorithm,” IEEE Transactions on Computers, Vol. C-22, No. 12, December 1973, pages 1045-1047. DOI
Old-school paper that presents an algorithm for high-speed multiplication. Applies two’s complement rules in an m-bit by n-bit array. Uses uncomplicated logic to run calculations in parallel; could easily be implemented on an FPGA board for high-performance computations.

4. (Journal Article) Weinberger, Arnold. “High-Speed Programmable Logic Array Adders,” IBM Journal of Research and Development, March 1979, Volume 23, Issue 2, pages 163-178. DOI
Programmable logic arrays (PLAs) implement combinational logic circuits and are an early, basic forerunner of the FPGA. This old-school paper describes a one-cycle adder for ‘standard’ PLAs, utilizing two-bit input decoders and XOR outputs. Could possibly implement this design on a high-performance FPGA board: a large adder that completes in a single cycle.

5. (Conference Article) B. R. Rau and C. D.
Glaeser, “Some scheduling techniques and an easily schedulable horizontal architecture for high performance scientific computing.” MICRO 14: Proceedings of the 14th Annual Workshop on Microprogramming, Piscataway, NJ, Dec. 1981. ACM Link
This well-cited paper provides horizontal architectures that may work well for my project. I’ll attempt to build a simple FPGA board emphasizing high performance for simple calculations. The paper provides comparable approaches for operating systems, i.e. cost-effective and high-performance. An interesting experiment could be running the FPGA board against one of these performance-oriented OSes. It raises the question of whether high-performance computing becomes customization for your particular algorithm, which is simple for a simple computation. Where do you draw the line, and what would be interesting problems for today?

6. (Conference Article) J. G. Tong, et al. “Soft-Core Processors for Embedded Systems.” International Conference on Microelectronics, 2006, pages 170-173. DOI
A soft-core processor is a specially designed CPU modeled using a hardware description language (HDL), common in FPGAs. The paper provides an overview, examples, and applications, including open-source processors that could possibly be implemented for research. A table comparing soft-core specs can be used later when experimenting with performance.

7. (Conference Article) Roman Lysecky and Frank Vahid, “A Study of the Speedups and Competitiveness of FPGA Soft Processor Cores using Dynamic Hardware/Software Partitioning.” DATE ’05: Proceedings of the conference on Design, Automation and Test in Europe – Volume 1. DOI
Soft-core processors have become more common in FPGA boards, offering increased flexibility but at the cost of performance and slower clock speeds compared to hard-core processors. Warp processing dynamically converts a typical software instruction binary into an FPGA circuit binary for performance.
This paper explores the positive effects of the MicroBlaze warp processor. Very relevant, offering current research topics.

8. (Conference Article) S. Sirowy, G. Stitt and F. Vahid. “C is for Circuits: Capturing FPGA Circuits as Sequential Code for Portability.” Symposium on Field-Programmable Gate Arrays, 2008. DOI
FPGA boards are used more and more often to increase performance by speeding up C code. The authors analyzed circuits submitted to an IEEE Symposium and found 82% could be implemented as C code, offering important advantages including easy distribution of FPGA applications.

9. (Journal Article) R. Lysecky, G. Stitt and Frank Vahid, “Warp Processors.” ACM Transactions on Design Automation of Electronic Systems (TODAES), Volume 11, Issue 3, July 2006, pp. 659-681. DOI
Describes the architecture of a warp processor, used on FPGAs to improve performance. The warp processor detects a binary’s critical region and runs it through a customized circuit, requiring partitioning, decompilation, synthesis, placement, and routing tools. Interested in the profiler, which dynamically detects the critical regions.

10. (Conference Article) G. Stitt, R. Lysecky, F. Vahid, “Dynamic Hardware/Software Partitioning: A First Approach.” Design Automation Conference (DAC), 2003, pp. 250-255. DOI
Vahid’s team’s first approach to dynamic partitioning of the binary. Includes architecture flow charts and lean tool specs. Appears to be a very limited implementation, a first run without very complex logic; more of a proof of concept.

11. (Professor Website) Frank Vahid’s webspace, Warp Processing summary link. http://www.cs.ucr.edu/~vahid/warp/
Thorough overview of warp processing outside of a research paper context. Warp processing is his method of dynamically converting a software binary to FPGA hardware instructions for dramatic performance increases. Disappointing that no warp processing tools are made available, making it impossible to reproduce results.
Would like more details on the profiler and DPM.

12. (Conference Article) Grant Wigley and David Kearney, “The Development of an Operating System for Reconfigurable Computing.” The 9th Annual IEEE Symposium on Field-Programmable Custom Computing Machines, 2001. Here
Explores OS requirements on reconfigurable boards as FPGA boards become difficult to manage. Includes a prototype implementing algorithms for resource allocation, partitioning, placement, and routing, considering FPGA boards with over 10 million gates. Appreciated the discussion of allocation and fragmentation, which looks for the largest contiguous rectangle on the board. Suggests that operating systems across large FPGA boards are feasible.

13. (Conference Article) Maire McLoone and John V. McCanny, “High Performance Single-Chip FPGA Rijndael Algorithm Implementations.” CHES ’01: Proceedings of the Third International Workshop on Cryptographic Hardware and Embedded Systems. Here
FPGA boards can be utilized for high-performance computing, and this conference paper provides an instance applied to cryptography. The Rijndael algorithm was selected by NIST to replace DES as a new crypto standard. The paper outlines an FPGA layout that can encrypt with Rijndael at 7 Gbits/sec, 3.5 times faster than any other implementation at the time.

14. (Conference Article) A. Lofgren, et al. “An analysis of FPGA-based UDP/IP stack parallelism for embedded Ethernet connectivity,” NORCHIP Conference, 2005.
FPGA boards and parallelism. While my project aims to compare FPGA board computations against a regular OS in terms of computational optimization, another benefit of FPGA boards is parallelism. This paper explores FPGA boards with internet connectivity, which ties into the Woods article, and different stacks for performance.

15. (Conference Article) L. Woods, et al. “Complex Event Detection at Wire Speed with FPGA,” Proceedings of the VLDB Endowment 3:1-2, September 2010, pages 660-669.
ACM
Paper discusses placing FPGA boards between a CPU and network interface, using the hardware to data mine. Complex event detection comes across as akin to data mining algorithms; the paper cites and implements regular expressions. Includes logic gate schematics and state diagrams of non-deterministic finite automata. Complete and thorough analysis; I envision my project being along these lines but likely with less complex computations.

16. (Conference Introduction) D. Buell, et al. “High-Performance Reconfigurable Computing,” The 2007 IEEE International Conference on Information Reuse and Integration, Las Vegas. Link
Covers high-performance reconfigurable computers that implement parallel processors as well as FPGAs. Most code obeys the 10-90 rule: 90 percent of execution time comes from 10 percent of the code, so run that time-consuming code on reconfigurable, personalized hardware. This overview article includes introductions to other rich articles, including a C-to-FPGA compiler.

17. (Conference Article) M. Herbordt, et al. “Achieving High Performance with FPGA-Based Computing,” The 2007 IEEE International Conference on Information Reuse and Integration, Las Vegas. DOI
Article from the same venue as above, with finer detail on FPGA design techniques. Identifies the challenges of implementing FPGA boards for performance: the problem of achieving speedups without excessive development while remaining flexible, portable, and maintainable. Outlines four methods for restructuring code for high-frequency computing on FPGA boards.

18. (Journal Article) I. T. S. Li, et al. “160-fold acceleration of the Smith-Waterman algorithm using a field programmable gate array (FPGA),” BMC Bioinformatics 2007, 8:185. DOI
Case study implementing FPGA boards for high-performance bioinformatics database computation. The Smith-Waterman algorithm is used to find the optimal local alignment between two sequences using dynamic programming. Includes schematics and a state diagram of the implementation.
Background on the Smith-Waterman algorithm demonstrates its relevance in bioinformatics.

19. (Conference Article) M. Sadoghi, et al. “Towards Highly Parallel Event Processing through Reconfigurable Hardware,” DaMoN ’11: Proceedings of the Seventh International Workshop on Data Management on New Hardware, New York. DOI
The team presents an event processing platform built on FPGAs, with four different designs that vary the emphasis on flexibility, adaptability, scalability, or pure performance. The Propagation key-based counting algorithm is ideal for the experiment. The FPGA boards are programmed using a soft processor, with the logic written in C rather than Verilog so that it can be ported to other FPGAs; a possibility for my project.

20. (Journal Article) A. Pertsemlidis and John W. Fondon III. “Having a BLAST with bioinformatics (and avoiding BLASTphemy),” Genome Biology, September 2001. PubMed
BLAST, the basic local alignment search tool, performs comparisons between pairs of genomic sequences and is a popular tool for computational biologists. Discusses past searches for genome matching, beginning in 1995. Several implementations of the BLAST algorithm exist. Identifying similar sequences, a pretty basic problem, is a very important and computationally expensive procedure that could benefit greatly from high-performance computing.

21. (Journal Article) S. Altschul, et al. “Gapped BLAST and PSI-BLAST: a new generation of protein database search programs.” Nucleic Acids Research, Volume 25, Issue 17, pp. 3389-3402. DOI
Several variations of the BLAST program have been implemented, with the version in this paper running three times faster than the original. The authors tweak the triggering for word extensions and implement a matrix scheme for better identification of word gaps. A highly cited paper; I am doubtful about direct application to FPGAs, but it is helpful in weighing the significance of the BLAST algorithm.

22. (Conference Article) M. Herbordt, et al.
“Single Pass, BLAST-Like, Approximate String Matching on FPGAs.” 14th Annual IEEE Symposium on Field Programmable Custom Computing Machines, Napa, CA, 2006. Pp. 271-226. DOI
Two algorithms are presented for implementing BLAST on an FPGA board, as well as FPGA/Dynamic Programming. The two algorithms operate in a single pass through a database at streaming rate. Results are supplied for several implementations. Interesting results and application of BLAST to FPGA boards, but not directly applicable to this project.

23. (Conference Article) Oskar Mencer, Martin Morf and Michael J. Flynn, “PAM-Blox: High Performance FPGA Design for Adaptive Computing.” IEEE Symposium on FPGAs for Custom Computing Machines, April 1998. P. 167. DOI
PAM-Blox is an object-oriented circuit generator on top of the PCI Pamette design environment. With FPGA compiler technology, the authors can compete with current processors on performance and power consumption only by using a hand-optimized library. It’s possible to outperform current environments, but only with meticulous attention to detail. FPGA boards are non-trivial and take expert designers to construct hardware that outperforms what’s readily available. I may have better luck with my project by pursuing a simple FPGA board configuration.

24. (Journal Article) Y. Dandass, et al. “Accelerating String Set Matching in FPGA Hardware for Bioinformatics Research.” BMC Bioinformatics, 9:197, 2008. DOI
String set matching is one of the fundamental problems in bioinformatics, and this paper presents yet another case of utilizing FPGA boards for performance. The Aho-Corasick algorithm is adapted to the boards to run in parallel. Experiments reveal 80% storage efficiencies and speeds over 20 times faster using a 100 MHz clock, compared to that on a 2.67 GHz workstation.

25. (Conference Article) N. A. Woods, et al. “FPGA acceleration of quasi-Monte Carlo in finance.” International Conference on Field Programmable Logic and Applications, 2008.
Pp. 335-340. DOI
Quasi-Monte Carlo methods are often used in quantitative finance for their accurate modeling of derivatives pricing. This paper presents a method for accelerating the simulation of several derivatives classes using FPGA boards. While the methods are unconventional for traditional FPGA use, the FPGA implementation runs more than 50 times faster than a single-threaded C++ implementation on a 3 GHz multi-core processor.
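
As a rough illustration of the quasi-Monte Carlo idea in the last entry (not the method from the Woods paper), the Python sketch below swaps pseudo-random samples for a low-discrepancy Halton sequence and averages a function over the unit square. The function names, the bases 2 and 3, the sample count, and the toy integrand x*y (whose exact mean is 0.25) are all assumptions chosen for illustration; a derivatives pricer would substitute a discounted payoff function.

```python
def radical_inverse(index: int, base: int) -> float:
    """Mirror the base-`base` digits of `index` around the radix point."""
    result = 0.0
    fraction = 1.0 / base
    while index > 0:
        index, digit = divmod(index, base)
        result += digit * fraction
        fraction /= base
    return result


def halton_2d(count: int):
    """Yield `count` points of a 2-D Halton sequence (bases 2 and 3)."""
    # Start at index 1 so the first point is not the origin.
    for i in range(1, count + 1):
        yield radical_inverse(i, 2), radical_inverse(i, 3)


def qmc_mean(func, count: int) -> float:
    """Average `func` over `count` Halton points in the unit square."""
    return sum(func(x, y) for x, y in halton_2d(count)) / count


if __name__ == "__main__":
    # Toy integrand (hypothetical): the exact mean of x*y on [0,1]^2 is 0.25.
    estimate = qmc_mean(lambda x, y: x * y, 4096)
    print(f"estimate = {estimate:.5f}, exact = 0.25")
```

Each sample point depends only on its index, so the points can be generated independently of one another, which is one reason this kind of simulation is a plausible fit for parallel hardware.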