Autonomy, Delegation and Agency - Interactive Intelligence group

Autonomy, Delegation, and Agency

Tjerk de Greef & Alex Leveringhaus

Document of Understanding

for the project

Military Human Enhancement

Introduction

An Unmanned Aerial Vehicle (UAV) is a system predominantly used for surveillance and reconnaissance missions, both in war zones and in civilian life. Today, a UAV is launched in the vicinity of an area of interest but controlled from a control station that is often thousands of miles away. However, these UAVs can also be equipped with a payload, and there is growing concern about giving them high levels of autonomy. There is also growing concern among the general public that UAVs are deployed for the wrong causes with the wrong arguments, affecting many ethical values.

Today, philosophers and artificial intelligence researchers are debating two positions. One holds that UAVs should in the future become fully autonomous in order to avoid atrocities, given that machines have no inherent emotional components (Arkin, 20XX). The other holds that the human should remain in the control loop and that UAV autonomy should be directed towards better teamwork (cf. Johnson, 2012; de Greef, 2012; Bradshaw et al. 20xx).

This document of understanding has a number of goals. First, it aims to look into the concept of autonomy and at how other scholars have defined and used it. In addition, the concept of autonomy alone is probably not enough to answer the question of how highly autonomous systems should act within a larger organizational structure. Therefore, concepts such as delegation, moral responsibility, and agency are studied as well. Furthermore, today’s use of UAVs, where control and decision making take place thousands of miles away, raises questions about the changed nature of war fighting. An immediate question concerns (moral) responsibility and the perception-action loop of the human operator (are operators becoming more trigger happy?). The problem remains to determine which values are affected by such autonomous systems. The question becomes: is it desirable to have a fully autonomous agent operational in an organizational system?

When we define an actor A, a robot R, a third party T, and a designer D, a question looms with regard to ethics:

..as part of a wider debate of ethics of interactions with artificial entities, maybe we should not just consider the

cases of agent abuse (P abuses R) and the potential negative influences of this (on P or third parties T), but also

the potential positive influences of agent interactions – does P being nice to R make P a nicer person? (Dix,

2008, p 335)

the ‘nearly human but not quite’ nature of these agents pushes us to make distinctions that are

not a problem when we compare human with stones, humans with cars, or even humans with

animals. (Dix, 2008, p 334)

First we will explore the concept of autonomy in relation to human-machine systems¹.

¹ Throughout this paper we use the terms agent and robot interchangeably to denote an intelligent, highly autonomous entity.

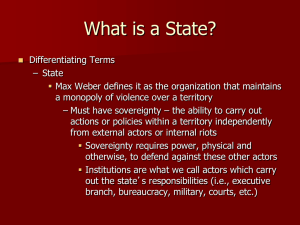

Autonomy

The word autonomy is derived from Greek and combines auto, which means self, and nomos, which means law. Together, these words denote the ability to give oneself one’s own law. Most dictionaries relate autonomy to self-government and independence (becoming more autonomous means losing dependencies). More precisely, autonomy is defined as “the right or condition of self-government” or as the “freedom from external control or influence”. In his seminal work in moral philosophy, Kant defined autonomy as “the capacity of an agent to act in accordance with objective morality rather than under the influence of desires”. In other words, Kant’s autonomy relates to actions that a person takes because he or she believes them to be ‘good’, independently of other incentives.

Generally, most scholars (e.g. Bradshaw et al., 2010) distinguish between self-sufficiency and self-directedness when reasoning about autonomous technology. The first, self-sufficiency, relates to the capability of an entity to take care of itself. Low self-sufficiency indicates that the robot is not capable of performing the task without significant help, whereas high self-sufficiency indicates that the machine can perform the task (reliably) without assistance. The Google car, for example, is self-sufficient in that it has the capacity to drive safely. The second, self-directedness, relates to freedom from outside control or, stated differently, freedom from outside constraints (e.g. permissions, authorizations, obligations). Low self-directedness indicates that, although possibly capable of performing the task, the robot is not permitted to do so. High self-directedness indicates that the robot has authority over its own actions, though this does not necessarily imply sufficient competence. Google’s car, for example, is only permitted to drive in the state of Nevada, not in other US states.

The distinction between self-sufficiency and self-directedness is not as clear-cut, nor are the two dimensions as orthogonal, as some scholars suggest (Bradshaw; Johnson). When a computer programmer embeds pre-programmed code in order to make the computer agent self-sufficient, the question looms whether this can be considered outside control (i.e., the self-directedness axis). In this matter it might be wise to discuss different levels of conception, meaning that computer programmers can have different schemas for implementing self-sufficiency based on their knowledge, skill, and experience. For example, programmer P1 might embed in agent A software code that allows self-sufficiency in a specific context C. However, programmer P2 might have an alternative solution for self-sufficiency of agent A in context C. Clearly, both programmers intend to implement self-sufficiency optimally, yet they arrive at different conceptions of the pre-programmed code.

Johnson (20xx) uses these two dimensions of autonomy to distinguish between four different autonomous cases (see Figure 1). An autonomous system can be a burden when the technology is low on both axes: it has low capabilities (much input from the human is needed) and the machine is not permitted to do anything (e.g. tele-operating a robot, as is currently done in rescue missions). At the other extreme, a machine is considered opaque when it has the capabilities (self-sufficiency is high) and has authority over its own actions. It is generally those machines that lack transparency and are prone to automation surprises (Sarter, Woods, & Billings, 1997). The two remaining cases relate to over-trusted systems and under-utilized systems. A system is over-trusted when human actors have too much faith in the capabilities (self-sufficiency) of an automated system and the system is nevertheless allowed to make its own decisions. Typically, the cars driving in DARPA’s Grand Challenge were in this category, given that hardly any of them made it to the finish; most got stuck somewhere on the race track. Under-utilized systems are those that have the capabilities but whose self-directedness is reduced significantly, leading to under-utilization. Typically, this happens when incorrect decisions carry a heavy toll, as with the deployment of the early Mars Rovers or targeting systems.

Figure 1 – Johnson (20xx) uses the two dimensions of autonomy to define four types of autonomous cases
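
The four cases can be made concrete with a small sketch. It assumes, purely for illustration, that both dimensions are scored on a 0 to 1 scale and split into low and high at a threshold of 0.5; neither the scale nor the threshold comes from Johnson, and the names AutonomyProfile and classify are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class AutonomyCase(Enum):
    BURDEN = "burden"                  # low capability, low authority
    OVER_TRUSTED = "over-trusted"      # low capability, high authority
    UNDER_UTILIZED = "under-utilized"  # high capability, low authority
    OPAQUE = "opaque"                  # high capability, high authority


@dataclass(frozen=True)
class AutonomyProfile:
    # Assumed scale: 0.0 (needs constant help) to 1.0 (reliable without help).
    self_sufficiency: float
    # Assumed scale: 0.0 (nothing permitted) to 1.0 (full authority over own actions).
    self_directedness: float

    def __post_init__(self) -> None:
        for name in ("self_sufficiency", "self_directedness"):
            value = getattr(self, name)
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{name} must lie in [0, 1], got {value}")


def classify(profile: AutonomyProfile, threshold: float = 0.5) -> AutonomyCase:
    """Map a profile onto one of the four quadrants of the two-axis scheme."""
    capable = profile.self_sufficiency >= threshold
    permitted = profile.self_directedness >= threshold
    if capable and permitted:
        return AutonomyCase.OPAQUE
    if capable:
        return AutonomyCase.UNDER_UTILIZED
    if permitted:
        return AutonomyCase.OVER_TRUSTED
    return AutonomyCase.BURDEN


# A tele-operated rescue robot: little capability, no authority.
print(classify(AutonomyProfile(self_sufficiency=0.2, self_directedness=0.1)).value)
```

A hard threshold treats the two axes as independent and crisp, which is exactly the simplification questioned earlier in this section; the sketch is meant only to fix the vocabulary.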

Neither over-trusted nor under-utilized systems are preferable, given the over-estimation of the machine’s capabilities (allowing the machine full control over its actions) in the former and the low usage of potential capabilities in the latter. Opaque systems, however, have transparency problems. A growing group of scholars acknowledges that opaque systems need to incorporate a team perspective. Johnson promotes the idea of analysing the dependencies between the human and the machine, leading to an understanding of what each can expect from the other. We call this acting with a computer.

Delegation

Delegation is defined as “the act or process of delegating or being delegated”. To delegate is defined as “entrust (a task or responsibility) to another person, typically one who is less senior than oneself”. A senior warfare officer, for example, might delegate part of a task to a junior officer. Delegation is often done to lower the workload, or……; or, to put it in the wording of Castelfranchi and ????: what is the reason to have an intelligent entity in your proximity if you are not using it? By not using it, you are lowering the output of the whole system.

But how does delegation relate to moral responsibility? Could one say that when a delegator delegates a task, (s)he is also shifting responsibility? One could perhaps say that the responsibility to execute the task lies predominantly with the delegate. -> case: Nuremberg trials?

Moral Responsibility

Responsibility relates to having an obligation to act, having control over something, being trusted, or being the cause of something. More specifically, a person or agent that is morally responsible is “…not merely a person who is able to do moral right or wrong. Beyond this, she is accountable for her morally significant conduct. Hence, she is an apt target of moral praise or blame, as well as reward or punishment.” (Stanford Encyclopedia of Philosophy). When an actor is accountable, (s)he is required or expected to justify actions or decisions. If the justifications are not acceptable, the actor should be blamed and punished. The thesaurus gives responsible as a synonym of accountable, but I have the feeling that there is a slight difference. But what is this difference?

Work by Friedman (19xx) shows that people hold computers responsible for their actions. It is an open question, which needs to be studied, whether people hold computers accountable or responsible. Or is this the same? This would make a nice experiment: study how people hold computers accountable for errors or misjudgments while manipulating the computers’ level of autonomy (should we shift them along the two dimensions described above?).

Agency

This section asks what agency exactly means. Generally, the artificial intelligence community defines an agent as “…anything that can be viewed as perceiving its environment through sensors and acting upon that environment through actuators…” (Russell and Norvig, p. 32)². A plant, for example, might be ascribed agency, as it senses sunlight and takes up carbon dioxide, water, and nutrients, which allow it to grow through photosynthesis. We would say it is a simple agent and that this agent has no autonomy (although it is self-sufficient).

Cognitive robots are defined as ……<<look-up>>. Interestingly, it is stressed that an AI agent is situated in an environment and is able to act autonomously on it in order to achieve its goals (Wooldridge, Introduction to MAS). The formal approach to agency is thus a tuple {ag, Env}, where ag is an agent and Env the environment. The idea is that there is no agent system without an environment.
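
The pairing of an agent with its environment can be sketched as a perceive-act loop. The one-dimensional world, the light source, and the names LineEnvironment, ReflexAgent, and run are hypothetical illustrations; they are not taken from Russell and Norvig or from Wooldridge.

```python
from dataclasses import dataclass


@dataclass
class LineEnvironment:
    """Hypothetical 1-D world holding an agent position and a light source."""
    agent_pos: int
    light_pos: int

    def percept(self) -> int:
        """Sensor reading: signed offset from the agent to the light."""
        return self.light_pos - self.agent_pos

    def apply(self, action: int) -> None:
        """Actuator effect: move the agent by -1, 0, or +1."""
        if action not in (-1, 0, 1):
            raise ValueError("action must be -1, 0 or +1")
        self.agent_pos += action


class ReflexAgent:
    """Simple reflex agent: maps the current percept directly to an action."""

    def act(self, percept: int) -> int:
        if percept > 0:
            return 1
        if percept < 0:
            return -1
        return 0


def run(ag: ReflexAgent, env: LineEnvironment, steps: int) -> LineEnvironment:
    """Run the pair (ag, Env) for a fixed number of perceive-act cycles."""
    for _ in range(steps):
        env.apply(ag.act(env.percept()))
    return env


env = run(ReflexAgent(), LineEnvironment(agent_pos=0, light_pos=3), steps=5)
print(env.agent_pos)  # the agent has reached the light at position 3
```

The agent here has no state and no goals of its own beyond the fixed percept-to-action rule, which makes it comparable to the plant example: it qualifies as an agent in the perceive-act sense without being autonomous in any richer sense.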

² Interestingly, the AI community also defines autonomy in terms of a learning capability that rests within the agent and allows it to cope with the environment, optimizing its output.

Ethics & Morals – Just some things that might help??

Flavours of ethics (from Thimbleby, 2008):

Utilitarianism

Consequentialism: what happens matters

Virtue ethics: if I am virtuous (having high moral standards), I do good

Professional ethics: no claim to general application, but focussed on particular domains

Deontological ethics

Situational ethics: there are no valid general positions, but ethical issues are to be debated and resolved with respect to particular situations

We need to distinguish (Dix, 2008):

Morals – what is right or wrong,

Mores – what society regards as acceptable or not,

Laws – things that the state decrees we must or must not do

These are clearly not the same.

Research Questions

1. How does the ‘abdication of moral responsibility’ change when systems are moved along the two axes of autonomy from opaque to burden?

2. What is the role of the human from a moral and ethical standpoint in automatic systems?

3. Does the ‘abdication of moral responsibility’ change when, as some AI scholars promote, a collaboration dimension is added to the autonomous opaque AI agent?

4. What values are affected by the interaction between the human actor and the computerized autonomous actor³?

5. Is ‘trigger happiness’ a key factor when UAVs are controlled at a distance? In other words, what are the effects on perception and decision making of controlling a UAV at a large distance?

³ This implies, of course, an assumption that fully autonomous systems are not opaque but have some sort of transparency and cooperation dimension.

Literature

Bradshaw, J. M., Feltovich, P. J., Jung, H., Kulkarni, S., Taysom, W., & Uszok, A. (2010). Dimensions of adjustable autonomy and mixed-initiative interaction. In M. Nickles, M. Rovatsos, & G. Weiss (Eds.), Agents and Computational Autonomy (Vol. 2969, pp. 17-39). Springer. Retrieved from http://opus.bath.ac.uk/5497/

Dix, A. (2008). Response to “Sometimes it’s hard to be a robot: A call for action on the ethics of abusing artificial agents”. Interacting with Computers, 20(3), 334-337. doi:10.1016/j.intcom.2008.02.003

Sarter, N. B., Woods, D. D., & Billings, C. E. (1997). Automation surprises. In G. Salvendy (Ed.), Handbook of human factors and ergonomics (Vol. 2, pp. 1-25). Wiley. Retrieved from http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.134.7077&rep=rep1&type=pdf

Thimbleby, H. (2008). Robot ethics? Not yet. A reflection on Whitby’s Sometimes it’s hard to be a robot. Interacting with Computers, 20(3), 338-341. Retrieved from http://hdl.handle.net/10512/99999163
