
@The system shall be able to compute the average of a sequence of real numbers. The user enters the numbers one by one and then presses the button that starts the computation. The sequence of entered numbers should contain at least one number.

Two-variable model: this is the "traditional model" (the system has inputs and outputs; the outputs are a mathematical function of the inputs).

⊲ In this model the inputs are the actual physical inputs to the system.

⊲ The mathematical functions are often quite complex.

⊲ Review by domain experts is hard to get.

Four-variable model

Add in Space:

⊲ The requirements are described from three views: the (Monitored variables)/(Input variables) view, the (Input variables)/(Output variables) view, and the (Output variables)/(Controlled variables) view.

Controlled Variables: whose values the system is intended to restrict. Monitored Variables: whose values the system will measure directly or indirectly. Input Variables: whose values can be read by computers. Output Variables: whose values are set by the computers in the system. [Internal Variables: Input + Output; Environmental Variables: Controlled + Monitored]

[Ave =>] mean(b): a function that computes the average of the sequence of real numbers entered.

[Max&min =>] max(b)/min(b): a function that finds the maximum/minimum number of the sequence of real numbers entered by users (in this case, change the table entry to OutputDisplay’ = { min(b), max(b) }).

# Relation of the Environment Sc_Re

ButtonPressed

¬ ButtonPressed

System-environment model (see left corner)

NbRead’ = input() ∧

NbProcessed

NotChge(OutputDisplay, b, NbRead,

NbProcessed)

¬NbProcessed

ButtonPressed’ = true ∧

NotChge(OutputDisplay, b, NbRead,

NbProcessed)

NbProcessed

ButtonPressed’ = true ∧

NotChge(OutputDisplay, b, NbRead,

NbProcessed)

b=∅b

b≠∅b

¬NbProcessed

# Relation of the System Sc_Rs

NbProcessed

b=∅b

¬NbProcessed

NbProcessed

b≠∅b

¬NbProcessed

ButtonPressed’ = true ∧

NotChge(OutputDisplay, b, NbRead,

NbProcessed)

ButtonPressed

NotChge(OutputDisplay, b, NbRead,

NbProcessed)

b’= b ⨁ NbRead ∧

NbProcessed’ = true ∧

NotChge(OutputDisplay,

ButtonPressed,NbRead)

OutputDisplay’ = mean(b) ∧

b’=∅b ∧

ButtonPressed’ = false ∧

NotChge(NbRead, NbProcessed)

b’= b ⨁ NbRead ∧

NbProcessed’ = true ∧

NotChge(OutputDisplay,

ButtonPressed,NbRead)

NbProcessed’ = false ∧

NotChge(OutputDisplay, b,

ButtonPressed)

∨ ButtonPressed’ = true ∧

NotChge(OutputDisplay, b, NbRead,

NbProcessed)

ButtonPressed’ = true ∧

NotChge(OutputDisplay, b, NbRead,

NbProcessed)

NbRead’ = input() ∧

NbProcessed’ = false ∧

NotChge(OutputDisplay, b,

ButtonPressed)

∨ ButtonPressed’ = true ∧

NotChge(OutputDisplay, b, NbRead,

NbProcessed)

ButtonPressed’ = true ∧

NotChge(OutputDisplay, b, NbRead,

NbProcessed)

¬ ButtonPressed

false

b’= b ⨁ NbRead ∧

NbProcessed’ = true ∧

NotChge(OutputDisplay, ButtonPressed)

false

b’= b ⨁ NbRead ∧

NbProcessed’ = true ∧

NotChge(OutputDisplay, ButtonPressed)
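The flattened tables above are hard to follow, so here is a minimal Python sketch of one plausible reading of the system relation Sc_Rs. It is an assumption, not a transcription of the tables: an entered number that is not yet processed is first added to the bag b; a button press with a non-empty b displays mean(b), empties b and resets ButtonPressed; a press with an empty b is rejected because the requirement asks for at least one number. `State`, `env_enter`, `env_press` and `system_step` are hypothetical names.

```python
from dataclasses import dataclass, field
from statistics import fmean
from typing import List, Optional


@dataclass
class State:
    """Hypothetical state of the averaging system (fields mirror the notes)."""
    b: List[float] = field(default_factory=list)  # bag of numbers absorbed so far
    nb_read: Optional[float] = None               # NbRead: last number entered
    nb_processed: bool = True                     # NbProcessed
    button_pressed: bool = False                  # ButtonPressed
    output_display: Optional[float] = None        # OutputDisplay


def env_enter(s: State, x: float) -> None:
    # Environment move: NbRead' = input() ∧ NbProcessed' = false
    s.nb_read = x
    s.nb_processed = False


def env_press(s: State) -> None:
    # Environment move: ButtonPressed' = true
    s.button_pressed = True


def system_step(s: State) -> None:
    # Assumed priority: absorb an unprocessed number before handling the button.
    if not s.nb_processed:
        s.b.append(s.nb_read)          # b' = b ⨁ NbRead
        s.nb_processed = True          # NbProcessed' = true
    elif s.button_pressed:
        if not s.b:
            # Requirement: the sequence must contain at least one number.
            raise ValueError("mean of an empty sequence is undefined")
        s.output_display = fmean(s.b)  # OutputDisplay' = mean(b)
        s.b = []                       # b' = ∅b
        s.button_pressed = False       # ButtonPressed' = false


if __name__ == "__main__":
    s = State()
    for x in (2.0, 4.0, 9.0):
        env_enter(s, x)
        system_step(s)
    env_press(s)
    system_step(s)
    print(s.output_display)  # 5.0
```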

Dominates Relation:

1. Paul, cleared for (TOP SECRET, {A, C}), wants to access a document classified (SECRET, {B, C}). Paul ¬dom Doc, as {B, C} ⊈ {A, C}, and Doc ¬dom Paul, as SECRET < TOP SECRET. Paul can neither read nor write the document.

2. Jesse, cleared for (SECRET, {C}), wants to access a document classified (CONFIDENTIAL, {C}).

Jesse dom Doc, as CONFIDENTIAL < SECRET and {C} ⊆ {C}, so Jesse can read the document. Doc ¬dom Jesse, so Jesse cannot write the document.

3. Robin, who has no clearances (and so works at the UNCLASSIFIED level), wants to access a document classified (CONFIDENTIAL, {B}).

Robin ¬dom Doc, as UNCLASSIFIED < CONFIDENTIAL and {B} ⊈ ∅, so Robin cannot read the document. Doc dom Robin, so Robin can write the document.
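A small Python sketch of the dominates relation and the two Bell-LaPadula checks used in the examples above (read if the subject dominates the document, write if the document dominates the subject). The tuple encoding of levels and the names `level`, `dom`, `blp_can_read` and `blp_can_write` are illustrative assumptions.

```python
from typing import FrozenSet, Tuple

# Assumed encoding: a security level is (clearance, set of categories).
RANK = {"UNCLASSIFIED": 0, "CONFIDENTIAL": 1, "SECRET": 2, "TOP SECRET": 3}
Level = Tuple[str, FrozenSet[str]]


def level(clearance: str, *categories: str) -> Level:
    return (clearance, frozenset(categories))


def dom(a: Level, b: Level) -> bool:
    """a dom b iff clearance(a) >= clearance(b) and categories(b) ⊆ categories(a)."""
    return RANK[a[0]] >= RANK[b[0]] and b[1] <= a[1]


def blp_can_read(subject: Level, obj: Level) -> bool:
    # Simple security condition ("no read up"): subject dom object.
    return dom(subject, obj)


def blp_can_write(subject: Level, obj: Level) -> bool:
    # *-property ("no write down"): object dom subject.
    return dom(obj, subject)


if __name__ == "__main__":
    robin = level("UNCLASSIFIED")  # no clearances, no categories
    doc = level("CONFIDENTIAL", "B")
    print(blp_can_read(robin, doc), blp_can_write(robin, doc))  # False True
```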

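Strict Integrity Policy (Biba’s Model): a subject can read an object only if the object's integrity level dominates the subject's, and can write an object only if the subject's integrity level dominates the object's. Hence a subject can both read and write an object if and only if both are at the same level.

For example: Anna, cleared for (CONFIDENTIAL, {A}), can read and write documents classified (CONFIDENTIAL, {A}).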

Q: Design a two-message authentication protocol, assuming that Alice and Bob know each other's public keys, which

accomplishes both mutual authentication and establishment of a session key

A: Alice picks a session key K, encrypts K with Bob’s public key, signs the message, and sends it with a timestamp T. Bob responds to Alice with the timestamp T encrypted with K.

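Q: Consider an RSA digital signature scheme. Alice tricks Bob into signing messages m1 and m2 such that m = m1 × m2 mod nBob. Prove that Alice can forge Bob’s signature on m.

A: With K Bob's private key, multiplying the two signatures gives Bob's signature on m:

$$(m_1^{K} \bmod n_{Bob}) \times (m_2^{K} \bmod n_{Bob}) \bmod n_{Bob} = (m_1^{K} \times m_2^{K}) \bmod n_{Bob} = (m_1 \times m_2)^{K} \bmod n_{Bob} = ((m_1 \times m_2) \bmod n_{Bob})^{K} \bmod n_{Bob} = m^{K} \bmod n_{Bob}$$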
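The forgery works because textbook RSA signatures (no hashing or padding) are multiplicative. A toy Python sketch, assuming Bob's key is the small example key from the RSA section of these notes (n = 3233, e = 17, private exponent 2753); `sign`, `verify` and `forge` are hypothetical helpers.

```python
# Toy key: the RSA example from these notes (p = 61, q = 53). Far too small for real use.
N_BOB = 3233  # Bob's modulus n_Bob
E_BOB = 17    # Bob's public exponent
K_BOB = 2753  # Bob's private exponent K


def sign(m: int) -> int:
    """Textbook RSA signature (no hash, no padding): m^K mod n_Bob."""
    return pow(m, K_BOB, N_BOB)


def verify(m: int, sig: int) -> bool:
    """Accept sig if sig^e mod n_Bob gives back m mod n_Bob."""
    return pow(sig, E_BOB, N_BOB) == m % N_BOB


def forge(sig1: int, sig2: int) -> int:
    """Alice multiplies two genuine signatures; she never uses K."""
    return (sig1 * sig2) % N_BOB


if __name__ == "__main__":
    m1, m2 = 100, 50       # messages Bob was tricked into signing
    m = (m1 * m2) % N_BOB  # the message Alice actually wants signed
    fake = forge(sign(m1), sign(m2))
    print(fake == sign(m), verify(m, fake))  # True True
```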

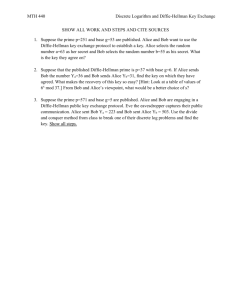

Assume that the Diffie-Hellman scheme is used, A and B have chosen p = 53 and g = 13, and private keys k_A = 3 and k_B = 5. Calculate public keys K_A, K_B and secret keys S_{A,B}, S_{B,A}:

$$K_A = g^{k_A} \bmod p = 13^{3} \bmod 53 = 24, \qquad K_B = g^{k_B} \bmod p = 13^{5} \bmod 53 = 28$$

$$S_{A,B} = K_B^{k_A} \bmod p = 28^{3} \bmod 53 = 10, \qquad S_{B,A} = K_A^{k_B} \bmod p = 24^{5} \bmod 53 = 10$$

Work Context Diagram

Two-variable model

Domain dom(R )={s|∃t,(s,t)∈R}; Range range(R )={t|∃s,(s,t)∈R}

@Q={(0,3),(0,4),(1,5)}; R={(0,3),(0,6),(2,5)}: dom(Q)={0,1}; dom(R)={0,2}; Q∩R={(0,3)}, so dom(Q)∩dom(R) = {0} = dom(Q∩R) and the demonic meet exists: Q⊓R={(0,3),(2,5),(1,5)}

@Q={(0,3),(0,4),(1,5)}; R={(0,5),(0,6),(2,5)}: dom(Q)∩dom(R)={0}; Q∩R=∅; dom(Q)∩dom(R) ≠ dom(Q∩R), so the demonic meet doesn’t exist.

@The demonic meet of a relation R and the empty relation is just R itself. Therefore, the demonic meet of P and Q is P.

@Q={(x,y)|even(x) ∧ y=2x+1}; R={(x,y)|odd(x) ∧ y=x−1}
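A Python sketch of the demonic meet on finite relations, assuming the definition these examples follow: Q ⊓ R exists iff dom(Q) ∩ dom(R) = dom(Q ∩ R), and then Q ⊓ R = (Q ∩ R) ∪ (pairs of Q whose first element is outside dom(R)) ∪ (pairs of R whose first element is outside dom(Q)). The infinite even/odd relations can only be checked on a finite slice. `dom` and `demonic_meet` are illustrative names.

```python
from typing import Optional, Set, Tuple

Rel = Set[Tuple[int, int]]


def dom(r: Rel) -> Set[int]:
    """dom(R) = { s | ∃t, (s, t) ∈ R }"""
    return {s for (s, _) in r}


def demonic_meet(q: Rel, r: Rel) -> Optional[Rel]:
    """Return Q ⊓ R, or None when the meet does not exist."""
    common = q & r
    # Existence condition: dom(Q) ∩ dom(R) = dom(Q ∩ R)
    if dom(q) & dom(r) != dom(common):
        return None
    only_q = {(s, t) for (s, t) in q if s not in dom(r)}  # Q outside dom(R)
    only_r = {(s, t) for (s, t) in r if s not in dom(q)}  # R outside dom(Q)
    return common | only_q | only_r


if __name__ == "__main__":
    Q = {(0, 3), (0, 4), (1, 5)}
    print(sorted(demonic_meet(Q, {(0, 3), (0, 6), (2, 5)})))  # [(0, 3), (1, 5), (2, 5)]
    print(demonic_meet(Q, {(0, 5), (0, 6), (2, 5)}))          # None
```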

Predicates: a relation R_T ⊆ X × Y can be defined by a predicate P_T as:

(x, y) ∈ R_T ↔ P_T(x, y) = true

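Domain coverage: is every possible case considered? (the headings together cover the whole domain)

Disjoint domains: the headings do not overlap, so each case falls under exactly one heading

Definedness: each grid entry is defined over the domain of its heading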
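The first two conditions can be checked mechanically when the domain is finite. A minimal Python sketch, assuming headings are given as predicates and the domain can be enumerated; the example domain (pairs of ButtonPressed and "b = ∅b") and the names `covers`, `disjoint`, `GOOD` and `BAD` are hypothetical. Definedness depends on the grid entries themselves and is not checked here.

```python
from itertools import product
from typing import Callable, Iterable, List, TypeVar

T = TypeVar("T")


def covers(headings: List[Callable[[T], bool]], domain: Iterable[T]) -> bool:
    """Domain coverage: every point satisfies at least one heading."""
    return all(any(h(x) for h in headings) for x in domain)


def disjoint(headings: List[Callable[[T], bool]], domain: Iterable[T]) -> bool:
    """Disjoint domains: no point satisfies two or more headings."""
    return all(sum(1 for h in headings if h(x)) <= 1 for x in domain)


# Hypothetical domain: states (button_pressed, bag_empty).
STATES = list(product([False, True], repeat=2))

GOOD = [
    lambda s: s[0] and s[1],      # ButtonPressed ∧ b = ∅b
    lambda s: s[0] and not s[1],  # ButtonPressed ∧ b ≠ ∅b
    lambda s: not s[0],           # ¬ButtonPressed
]
BAD = [
    lambda s: s[0],  # overlaps with the next heading at (True, True)
    lambda s: s[1],  # and neither heading covers (False, False)
]

if __name__ == "__main__":
    print(covers(GOOD, STATES), disjoint(GOOD, STATES))  # True True
    print(covers(BAD, STATES), disjoint(BAD, STATES))    # False False
```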

Volere Template Contents

- 1 Project Drivers
  - 1.1 The Purpose of the Project
    - 1.1.1 The User Business or Background of the Project Effort
    - 1.1.2 Goals of the Project
  - 1.2 The Client, the Customer and Other Stakeholders
    - 1.2.1 The Client
    - 1.2.2 The Customer
    - 1.2.3 Other Stakeholders
  - 1.3 Users of the Product
    - 1.3.1 The Hands-On Users of the Product
    - 1.3.2 Priorities Assigned to Users
    - 1.3.3 User Participation
    - 1.3.4 Maintenance Users and Service Technicians
- 2 Project Constraints
  - 2.1 Mandated Constraints
    - 2.1.1 Solution Constraints
    - 2.1.2 Implementation Environment of the Current System
    - 2.1.3 Partner or Collaborative Applications
    - 2.1.4 Off-the-Shelf Software
    - 2.1.5 Anticipated Workplace Environment
    - 2.1.6 Schedule Constraints
    - 2.1.7 Budget Constraints
  - 2.2 Naming Conventions and Definitions
    - 2.2.1 Definitions of All Terms
    - 2.2.2 Data Dictionary for Any Included Models
  - 2.3 Relevant Facts and Assumptions
    - 2.3.1 Facts
    - 2.3.2 Assumptions
- 3 Functional Requirements
  - 3.1 The Scope of the Work
    - 3.1.1 The Current Situation
    - 3.1.2 The Context of the Work
    - 3.1.3 Work Partitioning
  - 3.2 The Scope of the Product
    - 3.2.1 Product Boundary
    - 3.2.2 Product Use Case List
    - 3.2.3 Individual Product Use Cases
  - 3.3 Functional and Data Requirements
    - 3.3.1 Functional Requirements
    - 3.3.2 Data Requirements
- 4 Nonfunctional Requirements
  - 4.1 Look and Feel Requirements
    - 4.1.1 Appearance Requirements
    - 4.1.2 Style Requirements
  - 4.2 Usability and Humanity Requirements
    - 4.2.1 Ease of Use Requirements
    - 4.2.2 Personalization and Internationalization Requirements
    - 4.2.3 Learning Requirements
    - 4.2.4 Understandability and Politeness Requirements
    - 4.2.5 Accessibility Requirements
  - 4.3 Performance Requirements
    - 4.3.1 Speed and Latency Requirements
    - 4.3.2 Safety-Critical Requirements
    - 4.3.3 Precision or Accuracy Requirements
    - 4.3.4 Reliability and Availability Requirements
    - 4.3.5 Robustness or Fault-Tolerance Requirements
    - 4.3.6 Capacity Requirements
    - 4.3.7 Scalability or Extensibility Requirements
    - 4.3.8 Longevity Requirements
  - 4.4 Operational and Environmental Requirements
    - 4.4.1 Expected Physical Environment
    - 4.4.2 Requirements for Interfacing with Adjacent Systems
    - 4.4.3 Productization Requirements
    - 4.4.4 Release Requirements
  - 4.5 Maintainability and Support Requirements
    - 4.5.1 Maintenance Requirements
    - 4.5.2 Supportability Requirements
    - 4.5.3 Adaptability Requirements
  - 4.6 Security Requirements
    - 4.6.1 Access Requirements
    - 4.6.2 Integrity Requirements
    - 4.6.3 Privacy Requirements
    - 4.6.4 Audit Requirements
    - 4.6.5 Immunity Requirements
  - 4.7 Cultural and Political Requirements
    - 4.7.1 Cultural Requirements
    - 4.7.2 Political Requirements
  - 4.8 Legal Requirements
    - 4.8.1 Compliance Requirements
    - 4.8.2 Standards Requirements
- 5 Project Issues
  - 5.1 Open Issues
  - 5.2 Off-the-Shelf Solutions
    - 5.2.1 Ready-Made Products
    - 5.2.2 Reusable Components
    - 5.2.3 Products That Can Be Copied
  - 5.3 New Problems
    - 5.3.1 Effects on the Current Environment
    - 5.3.2 Effects on the Installed Systems
    - 5.3.3 Potential User Problems
    - 5.3.4 Limitations in the Anticipated Implementation Environment That May Inhibit the New Product
    - 5.3.5 Follow-Up Problems
  - 5.4 Tasks
    - 5.4.1 Project Planning
    - 5.4.2 Planning of the Development Phases
  - 5.5 Migration to the New Product
    - 5.5.1 Requirements for Migration to the New Product
    - 5.5.2 Data That Has to Be Modified or Translated for the New System
  - 5.6 Risks
  - 5.7 Costs
  - 5.8 User Documentation and Training
    - 5.8.1 User Documentation Requirements
    - 5.8.2 Training Requirements
  - 5.9 Waiting Room
  - 5.10 Ideas for Solutions

1) Security is a multifaceted problem. The three aspects of security (confidentiality, integrity, and availability) can be

affected by the software quality at all stages of the software development process and by the environment in which the

system is deployed. The mechanisms that we build to implement the security aspects are based on hypotheses that

approximate the real world but still do not cover all the possible behaviours of the system or its environment. Therefore,

there is always a possible way to threaten a system.

2) Since the system is developed for the military, one should expect that an emphasis is put on the need to keep

information secret (confidentiality). Although integrity and availability are important, the compromise of confidentiality

would be catastrophic for a military organization. The school can be seen as a commercial firm. Therefore, it has an important need to prevent tampering with its data (e.g., student grades), because it could not survive such a compromise. If the integrity of the computer holding the student information were compromised, the students’ personal information could be altered. Therefore, the most important security aspect for the school is integrity. Hence, the school

administrators should be asking questions about the integrity policies implemented as well as the mechanisms used to

prevent, detect, and recover from integrity related attacks on the system.

3)

a. L(Paul) = TOPSECRET > SECRET = L(doc), so Paul cannot write the document. Paul cannot read the document either, because C(doc) = { B, C } ⊈ { A, C } = C(Paul).

b. L(Anna) = CONFIDENTIAL ≥ CONFIDENTIAL = L(doc), but C(doc) = { B } ⊈ { C } = C(Anna), so Anna cannot read the document, and C(Anna) = { C } ⊈ { B } = C(doc), so Anna cannot write the document.

c. L(Jesse) = SECRET ≥ CONFIDENTIAL = L(doc), and C(doc) = { C } ⊆ { C } = C(Jesse), so Jesse can read the document. As L(Jesse) > L(doc), however, Jesse cannot write the document.

d. As L(Sammi) = TOPSECRET ≥ CONFIDENTIAL = L(doc) and C(doc) = { A } ⊆ { A, C } = C(Sammi), Sammi can read the document. But since L(Sammi) > L(doc), Sammi cannot write the document.

e. As C(Robin) = ∅ ⊆ { B } = C(doc) and L(doc) = CONFIDENTIAL ≥ UNCLASSIFIED = L(Robin), Robin can write

the document. However, because L(doc) > L(Robin), she cannot read the document.

4) Alice picks a session key K and sends along a timestamp. She encrypts K with Bob's public key and signs the entire message. Bob responds with the timestamp encrypted with K. Bob knows it's Alice from the signature and timestamp. Alice knows it's Bob because only he can decrypt K.

5) Let (S(L), S(C)) be the security clearance and category set of the security level L. The Bell-LaPadula model allows a

subject in L to read an entity in L’ if, and only if, L dominates L’. By definition, this means S(L’) ≤ S(L) and S(C’) ⊆ S(C). As the security and integrity levels are the same, (S(L), S(C)) also defines the integrity clearance and category set of the integrity level L. The Biba model allows a subject in L to read an entity in L’ if, and only if, L’ dominates L. Again, this means S(L) ≤ S(L’) and S(C) ⊆ S(C’). Putting the two relations together, a subject in L can read an entity in L’ if, and only if, S(L) ≤ S(L’) and S(L’) ≤ S(L), and S(C’) ⊆ S(C) and S(C) ⊆ S(C’). By antisymmetry, this means a subject in L can

read an entity in L’ if, and only if, S(L) = S(L’) and S(C’) = S(C). Hence the subject and the entity being read must be at

the same security (integrity) level. A similar argument shows that, if a subject can write to an entity, the subject and entity

being written must be at the same security (integrity) level.
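The argument can be sanity-checked by brute force over a small lattice of labels. A Python sketch, assuming four clearance levels (0 to 3) and the category universe {A, B, C}; it checks that, when the BLP and Biba labels coincide, reading and writing are both allowed exactly when subject and object carry the same label. `all_labels`, `dom`, `can_read`, `can_write` and `check` are illustrative names.

```python
from itertools import chain, combinations, product

LEVELS = range(4)             # assumed: 0 = UNCLASSIFIED, ..., 3 = TOP SECRET
CATEGORIES = ("A", "B", "C")  # assumed category universe


def all_labels():
    """Every (level, category set) pair: 4 levels x 8 subsets = 32 labels."""
    subsets = chain.from_iterable(
        combinations(CATEGORIES, k) for k in range(len(CATEGORIES) + 1))
    return [(lvl, frozenset(c)) for c in subsets for lvl in LEVELS]


def dom(a, b):
    """a dom b iff level(a) >= level(b) and categories(b) ⊆ categories(a)."""
    return a[0] >= b[0] and b[1] <= a[1]


def can_read(subj, obj):
    # BLP: subject dom object; Biba (same labels): object dom subject.
    return dom(subj, obj) and dom(obj, subj)


def can_write(subj, obj):
    # BLP *-property: object dom subject; Biba: subject dom object.
    return dom(obj, subj) and dom(subj, obj)


def check():
    labels = all_labels()
    return all(can_read(s, o) == (s == o) and can_write(s, o) == (s == o)
               for s, o in product(labels, repeat=2))


if __name__ == "__main__":
    print(check())  # True
```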

7) Assume that the Diffie-Hellman scheme is used, A and B have chosen p = 53 and g = 13, and private keys k_A = 3 and k_B = 5. Calculate public keys K_A, K_B and secret keys S_{A,B}, S_{B,A}. Another example of a Diffie-Hellman exchange is shown below.

$K_A = 13^{3} \bmod 53 = 24$

$K_B = 13^{5} \bmod 53 = 28$

$S_{B,A} = 24^{5} \bmod 53 = 10$

$S_{A,B} = 28^{3} \bmod 53 = 10$

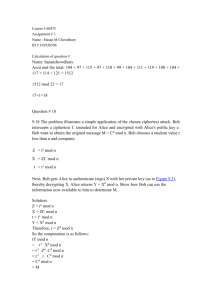

8) Consider an RSA digital signature scheme. Alice tricks Bob into signing messages m1 and m2 such that m = m1 m2 mod nBob. Prove that Alice can forge Bob’s signature on m. Alice has Bob’s signatures on m1 and m2. She can obtain Bob’s signature on m (m^k mod nBob, where m = m1 m2 mod nBob and k is Bob's private key) by multiplying the two signatures modulo nBob, such that: $(m_1^{k} \bmod n_{Bob})(m_2^{k} \bmod n_{Bob}) \bmod n_{Bob} = (m_1 m_2)^{k} \bmod n_{Bob} = m^{k} \bmod n_{Bob}$.

3 types of attacks against an encryption scheme: ciphertext only (attacker has only the ciphertext; goal: plaintext), known plaintext (attacker knows the plaintext and its ciphertext; goal: key), and chosen plaintext (attacker asks for chosen plaintext to be enciphered; goal: key).

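Substitution cipher: replace the characters of the plaintext with other characters, e.g., the Caesar cipher, in which each letter in the plaintext is replaced by a letter some fixed number of positions down the alphabet. Vigenère cipher: substitute each letter with one some number of positions down the alphabet, where the shift changes from letter to letter following a repeating key, usually a sequence of shifts such as (3, 4, 5). Transposition cipher: rearrange the plaintext, e.g., the rail-fence cipher (two rails): write the plaintext in a zigzag over two rows and read the rows off in order; to decrypt, split the ciphertext in half, place the 2nd half under the first, and zigzag through the grid to recover the plaintext.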
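A Python sketch of the three ciphers described above, assuming uppercase A-Z plaintext with no spaces. Vigenère shifts are passed as a list of integers to match the (3, 4, 5) description; the function names are illustrative, not from any library.

```python
import string
from typing import Sequence

ALPHA = string.ascii_uppercase  # assumed alphabet: A-Z only


def shift(ch: str, k: int) -> str:
    """Move a letter k positions down the alphabet, wrapping around."""
    return ALPHA[(ALPHA.index(ch) + k) % 26]


def caesar(plain: str, k: int = 3) -> str:
    # Substitution: the same shift k for every letter.
    return "".join(shift(c, k) for c in plain)


def vigenere(plain: str, shifts: Sequence[int]) -> str:
    # Substitution: the shift cycles through the key sequence, e.g. (3, 4, 5).
    return "".join(shift(c, shifts[i % len(shifts)]) for i, c in enumerate(plain))


def rail_fence(plain: str) -> str:
    # Transposition with two rails: zigzag over two rows, read row 1 then row 2.
    return plain[0::2] + plain[1::2]


def rail_fence_decrypt(cipher: str) -> str:
    # Split in half (the top rail gets the extra letter when the length is odd),
    # put the 2nd half under the 1st, and read the grid in a zigzag.
    half = (len(cipher) + 1) // 2
    top, bottom = cipher[:half], cipher[half:]
    return "".join(top[i // 2] if i % 2 == 0 else bottom[i // 2]
                   for i in range(len(cipher)))


if __name__ == "__main__":
    print(caesar("HELLO"))               # KHOOR
    print(vigenere("ABC", [3, 4, 5]))    # DFH
    print(rail_fence("HELLOWORLD"))      # HLOOLELWRD
```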

Diffie-Hellman key exchange

Alice and Bob agree to use a prime number p = 23 and base g = 5.

1. Alice chooses a secret integer a = 6, then sends Bob $A = g^{a} \bmod p$: $A = 5^{6} \bmod 23 = 8$.

2. Bob chooses a secret integer b = 15, then sends Alice $B = g^{b} \bmod p$: $B = 5^{15} \bmod 23 = 19$.

3. Alice computes $s = B^{a} \bmod p$: $19^{6} \bmod 23 = 2$.

4. Bob computes $s = A^{b} \bmod p$: $8^{15} \bmod 23 = 2$.
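Both Diffie-Hellman examples in these notes reduce to modular exponentiation, which Python's built-in three-argument `pow` computes directly. `dh_public` and `dh_shared` are illustrative names; real deployments use much larger primes.

```python
def dh_public(g: int, private: int, p: int) -> int:
    """Public value sent over the network: g^private mod p."""
    return pow(g, private, p)


def dh_shared(other_public: int, private: int, p: int) -> int:
    """Shared secret: (other party's public value)^private mod p."""
    return pow(other_public, private, p)


if __name__ == "__main__":
    p, g, a, b = 23, 5, 6, 15
    A, B = dh_public(g, a, p), dh_public(g, b, p)
    print(A, B, dh_shared(B, a, p), dh_shared(A, b, p))  # 8 19 2 2
```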

RSA scheme example. Coprime: two integers are coprime if their greatest common divisor is 1. totient(x): the number of integers between 1 and x that are coprime to x. d is kept as the private key exponent; e is the public key exponent.

1. Choose two prime numbers, p = 61 and q = 53. Make sure that these prime numbers are distinct (i.e., they are not both the same number).

2. Compute n = pq: $n = 61 \times 53 = 3233$.

3. Compute the totient of the product. For primes, the totient is maximal and equals the prime minus one. Therefore $\varphi(n) = (p-1)(q-1) = 60 \times 52 = 3120$.

4. Choose any number e > 1 that is coprime to 3120. Choosing a prime number for e leaves you with a single check: that e is not a divisor of 3120. e = 17.

5. Compute d such that $d \cdot e \equiv 1 \pmod{\varphi(n)}$, e.g., by computing the modular multiplicative inverse of e modulo φ(n): d = 2753, since 17 · 2753 = 46801 and 46801 mod 3120 = 1. (Iterating finds that (15 × 3120 + 1)/17 = 2753 is an integer, whereas other values in place of 15 do not produce an integer. The extended Euclidean algorithm finds the solution to Bézout's identity 3120 × 2 + 17 × (−367) = 1, and −367 mod 3120 = 2753.)

The public key is (n = 3233, e = 17). For a padded plaintext message m, the encryption function is $c(m) = m^{17} \bmod 3233$, or abstractly $c(m) = m^{e} \bmod n$.

The private key is (n = 3233, d = 2753). For an encrypted ciphertext c, the decryption function is $m(c) = c^{2753} \bmod 3233$, or in its general form $m(c) = c^{d} \bmod n$.

For instance, in order to encrypt m = 65, we calculate $c = 65^{17} \bmod 3233 = 2790$. To decrypt c = 2790, we calculate $m = 2790^{2753} \bmod 3233 = 65$.

The totient function is the number of positive integers not exceeding n that are relatively prime to n, e.g., Tot(10) = 4.

Relatively prime: having no factors in common other than 1.

RSA Algorithm

Choose two large prime numbers p and q, then n = p × q; n is used as the modulus.

tot(n) = (p − 1)(q − 1); choose e such that 1 < e < tot(n) and e is relatively prime to tot(n); d = e⁻¹ mod tot(n), i.e., e · d mod tot(n) = 1.
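A toy Python sketch of the RSA steps above, reusing the example numbers (p = 61, q = 53, e = 17). It uses `pow(e, -1, tot)` for the modular inverse (available since Python 3.8) and a brute-force totient only to illustrate the definition; real RSA needs large primes and padding. The function names are illustrative.

```python
from math import gcd
from typing import Tuple

Key = Tuple[int, int]


def totient_bruteforce(n: int) -> int:
    """Count 1 <= k <= n with gcd(k, n) == 1 (illustration only; slow for large n)."""
    return sum(1 for k in range(1, n + 1) if gcd(k, n) == 1)


def rsa_keygen(p: int, q: int, e: int) -> Tuple[Key, Key]:
    """Return (public, private) = ((n, e), (n, d)) for distinct primes p, q."""
    n = p * q
    tot = (p - 1) * (q - 1)  # tot(n) for distinct primes
    if not (1 < e < tot and gcd(e, tot) == 1):
        raise ValueError("need 1 < e < tot(n) and gcd(e, tot(n)) = 1")
    d = pow(e, -1, tot)      # modular inverse of e mod tot(n), Python 3.8+
    return (n, e), (n, d)


def encrypt(m: int, public: Key) -> int:
    n, e = public
    return pow(m, e, n)


def decrypt(c: int, private: Key) -> int:
    n, d = private
    return pow(c, d, n)


if __name__ == "__main__":
    public, private = rsa_keygen(61, 53, 17)
    print(public, private)         # (3233, 17) (3233, 2753)
    c = encrypt(65, public)
    print(c, decrypt(c, private))  # 2790 65
```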

Notation: x->y:{z}k means the entity x sends entity y a message z

enciphered with key k

interchange key: cryptographic key associated with a principal to a

communication

session key: cryptographic key associated with the communication itself. It limits the amount of traffic enciphered with a single key.

Key Exchange: the key cannot be transmitted in the clear; A and B may rely on a third party C; the cryptosystems and protocols are publicly known, and the only secret data are the keys. Classical (symmetric) and public-key cryptosystems use different protocols.

public key cryptography key exchange and authentication

a->b:{Ksession}eB can be forged, because b cannot verify the sender

A -> B : { A ||{ Ksession } dA } eB, here A has signed the message. If A does

not have B's public key, it needs to get it from a server, allowing "man in

the middle" attacks.

The Extended Four-Variable Model

A weakness of the four-variable model is that it does not explicitly specify

the software requirements, SOFT, but rather bounds it by specifying NAT,

REQ, IN, and OUT

Needham-Schroeder protocol

1) A -> C : { A || B || rand1 }

2) C -> A : { A || B || rand1 || kSession || { A || kSession } kB } kA

3) A -> B : { A || kSession } kB

4) B -> A : { rand2 } kSession

5) A -> B : { rand2 - 1 } kSession

A and B trust C; rand1 and rand2 are random numbers. The protocol assumes that all cryptographic keys are secure.
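A symbolic Python sketch of the five Needham-Schroeder messages, assuming perfect encryption: `Sealed` is a stand-in for a ciphertext that only the holder of the same key can open, so no real cryptography is performed. All three principals run in one process, and the names (`Sealed`, `enc`, `dec`, `needham_schroeder`) are hypothetical.

```python
import secrets
from dataclasses import dataclass
from typing import Any, Tuple


@dataclass(frozen=True)
class Sealed:
    """Symbolic ciphertext {payload}key (no real encryption is performed)."""
    key: bytes
    payload: Tuple[Any, ...]


def enc(key: bytes, *payload: Any) -> Sealed:
    return Sealed(key, payload)


def dec(key: bytes, box: Sealed) -> Tuple[Any, ...]:
    # Perfect-encryption assumption: only the matching key opens the box.
    if box.key != key:
        raise PermissionError("wrong key")
    return box.payload


def needham_schroeder(k_a: bytes, k_b: bytes) -> Tuple[bytes, bytes]:
    """Run the protocol; k_a and k_b are the keys A and B share with C."""
    # 1) A -> C : { A || B || rand1 }
    rand1 = secrets.token_bytes(8)
    msg1 = ("A", "B", rand1)

    # 2) C -> A : { A || B || rand1 || kSession || { A || kSession } kB } kA
    k_session = secrets.token_bytes(16)
    msg2 = enc(k_a, *msg1, k_session, enc(k_b, "A", k_session))

    # A opens msg2 and checks rand1, so the reply cannot be a replay.
    _, peer, r1, ks_a, ticket = dec(k_a, msg2)
    if r1 != rand1 or peer != "B":
        raise RuntimeError("stale or misdirected reply from C")

    # 3) A -> B : { A || kSession } kB  (A forwards the ticket unopened)
    sender, ks_b = dec(k_b, ticket)

    # 4) B -> A : { rand2 } kSession
    rand2 = secrets.randbelow(2**32)
    (r2,) = dec(ks_a, enc(ks_b, rand2))

    # 5) A -> B : { rand2 - 1 } kSession
    (reply,) = dec(ks_b, enc(ks_a, r2 - 1))
    if sender != "A" or reply != rand2 - 1:
        raise RuntimeError("B could not confirm that A holds kSession")
    return ks_a, ks_b


if __name__ == "__main__":
    s_a, s_b = needham_schroeder(secrets.token_bytes(16), secrets.token_bytes(16))
    print(s_a == s_b)  # True
```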

Man in the middle Attack

A -> P : {send me B's public key} [intercepted by E]

E -> P : {send me B's public key}

P -> E : eB

E -> A : eE

A -> B : {kSession} eE [intercepted by E]

E -> B : {kSession} eB

E can read all A <-> B traffic

certificate: a token or message containing identity of principal