the program notes

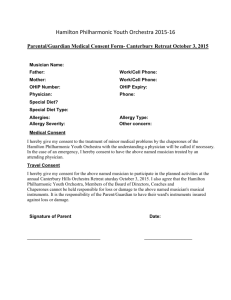

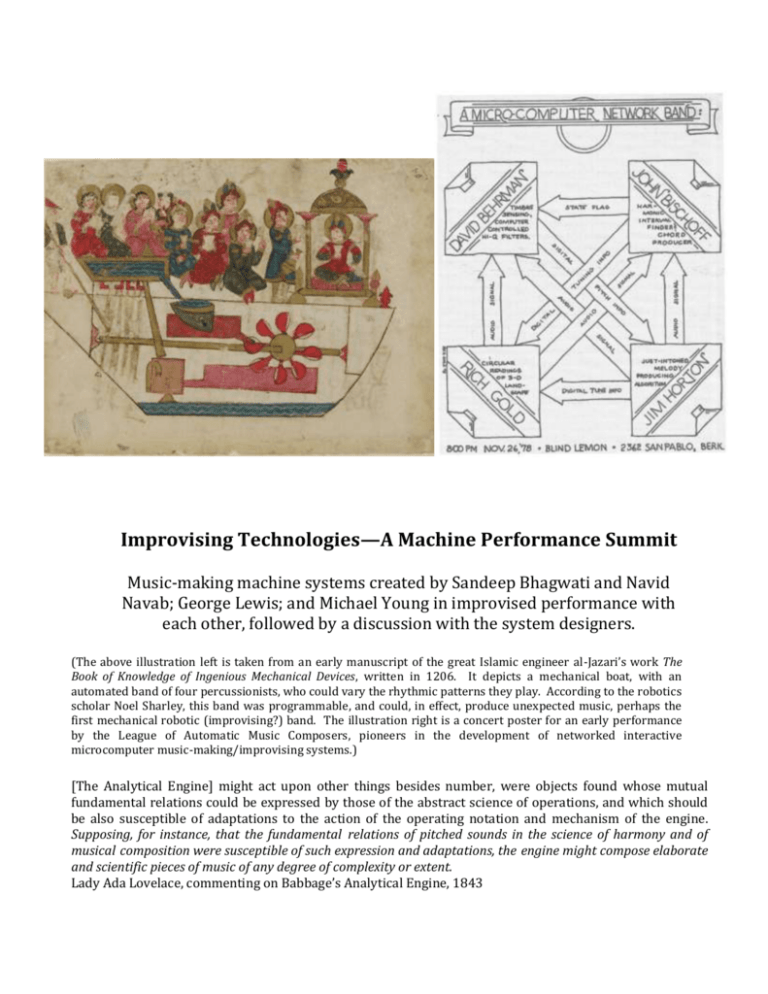

Improvising Technologies: A Machine Performance Summit

Music-making machine systems created by Sandeep Bhagwati and Navid Navab; George Lewis; and Michael Young, in improvised performance with each other, followed by a discussion with the system designers.

(The illustration above left is taken from an early manuscript of The Book of Knowledge of Ingenious Mechanical Devices, written in 1206 by the great Islamic engineer al-Jazari. It depicts a mechanical boat carrying an automated band of four percussionists who could vary the rhythmic patterns they played. According to the robotics scholar Noel Sharkey, this band was programmable and could, in effect, produce unexpected music; it was perhaps the first mechanical robotic (improvising?) band. The illustration above right is a concert poster for an early performance by the League of Automatic Music Composers, pioneers in the development of networked, interactive microcomputer systems for making and improvising music.)

[The Analytical Engine] might act upon other things besides number, were objects found whose mutual fundamental relations could be expressed by those of the abstract science of operations, and which should be also susceptible of adaptations to the action of the operating notation and mechanism of the engine. Supposing, for instance, that the fundamental relations of pitched sounds in the science of harmony and of musical composition were susceptible of such expression and adaptations, the engine might compose elaborate and scientific pieces of music of any degree of complexity or extent.

Lady Ada Lovelace, commenting on Babbage's Analytical Engine, 1843

For approximately half a century there has been research into the development, design, implementation and performance of interactive machine systems for the production of music.
Such work has been undertaken for a variety of purposes (aesthetic, political, technological, creative, philosophical, cultural), but all such systems tend to be judged, at least partially, by the interest of the music they produce. This claim raises a host of questions: Judged by whom, and why? Interesting in what sense? In what sense is it music? Still, there is no denying that systems which regularly produce unsatisfactory musical output will be thought failures, and most likely failures corresponding to one of the two extremes of how we tend, pre-critically, to think machine output functions: either too mechanistic and predictable, or too random. Behaviors such as these constantly remind one that a machine is present. The systems performing tonight have all been developed to perform with human musicians in highly improvisatory contexts, so to be effective they need to be realistic (how so?) improvising partners. Yet what constitutes a good improviser is also a work in progress, open to different, perhaps contradictory, accounts. Collective improvisation is a highly complex set of interactions, so it is no surprise that the creators of the three systems performing tonight have each focused, in designing their systems, on distinct if perhaps overlapping subsets of what collective improvisation involves. There will be an opportunity to discuss this with the systems' creators after the performance. We have deliberately chosen to present these systems in concert this evening in a manner that should let you hear each of them distinctly and easily tell their contributions apart. Please note that of these three systems only Voyager is designed to produce music in the absence of an input, so every piece performed tonight will begin with a musical contribution from Voyager, whose signal the other systems pick up before they enter the improvisation.
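The difference just described, between a system that can open a piece on its own and systems that wait for an input signal, can be pictured as a difference in control flow. The Python sketch below is purely illustrative and is not code from Voyager or from any of tonight's systems: the analysis features (mean MIDI pitch and note density), the internal random walk, and the `blend` and `plays_without_input` parameters are all hypothetical.

```python
import random
from statistics import mean
from typing import Optional


def analyse(pitches: list[int], window_seconds: float) -> Optional[dict]:
    """Hypothetical real-time analysis of recent input: mean pitch and note density."""
    if not pitches:
        return None                                   # silence: nothing to analyse
    return {"mean_pitch": mean(pitches), "density": len(pitches) / window_seconds}


class ToyImproviser:
    """Illustrative agent that responds to analysed input and/or to its own process."""

    def __init__(self, plays_without_input: bool, blend: float = 0.5,
                 seed: Optional[int] = None):
        self.plays_without_input = plays_without_input
        self.blend = blend                            # 0 = ignore input, 1 = track it
        self.rng = random.Random(seed)
        self.internal_pitch = 60.0                    # internal state, starting at middle C

    def _internal_step(self) -> float:
        # Independent behaviour: a bounded random walk over MIDI pitch.
        self.internal_pitch += self.rng.uniform(-3.0, 3.0)
        self.internal_pitch = min(max(self.internal_pitch, 36.0), 96.0)
        return self.internal_pitch

    def next_phrase(self, analysis: Optional[dict], default_length: int = 4) -> list[int]:
        """Return a phrase of MIDI pitches for the next time window."""
        if analysis is None:
            if not self.plays_without_input:
                return []                             # input-driven: wait in silence
            length = default_length                   # self-starting: play anyway
        else:
            length = max(1, round(analysis["density"]))  # busier input, longer reply
        phrase = []
        for _ in range(length):
            own = self._internal_step()
            if analysis is None:
                target = own
            else:
                # Mix what was heard with the agent's own internal tendency.
                target = self.blend * analysis["mean_pitch"] + (1 - self.blend) * own
            phrase.append(int(round(target)))
        return phrase


if __name__ == "__main__":
    opener = ToyImproviser(plays_without_input=True, seed=1)
    listener = ToyImproviser(plays_without_input=False, seed=2)
    first = opener.next_phrase(analyse([], 2.0))       # opener plays with no input
    reply = listener.next_phrase(analyse(first, 2.0))  # listener answers what it "heard"
    print(first, reply)
```

The listening instance stays silent until it is handed an analysis of the opener's output, which loosely mirrors the concert arrangement in which Voyager begins each piece; the real systems communicate through audio and are far richer than this toy.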
George Lewis: Voyager

Since its premiere at the Massachusetts College of Art in October 1987, Voyager has gone through many transformations of hardware, software, and materials. The prehistory of Voyager includes The Kim and I (1979), a non-interactive work written in 6502 assembly language, and Chamber Music for Humans and Non-Humans (1979–81), the first of my interactive works, written in the FORTH programming language. Both of these early works used digitally controlled analog hardware. Rainbow Family, also a FORTH program, was the first of my interactive virtual orchestra pieces; it premiered at IRCAM in 1984 with up to four human improvisors performing with three networked, Apple II-driven Yamaha DX-7 synthesizers (http://brahms.ircam.fr/works/work/10042/). Between 1987 and 2000, concert versions of Voyager were implemented in various dialects of FORTH, such as FORMULA (Forth Music Language) and HMSL (Hierarchical Music Specification Language). The sound-generating hardware originally consisted of sixteen channels of Yamaha FM synthesis and later of sixty-four channels of MIDI sample players; in both cases, the system freely deployed microtonal resources. For the American Composers Orchestra's premiere at New York's Carnegie Hall of Virtual Concerto (2004) for symphony orchestra (humans) and improvising solo pianist, my associate Damon Holzborn and I translated the FORTH versions to Max/MSP so that the system could perform with the orchestra on a MIDI-capable acoustic piano, the Yamaha Disklavier. In all cases, the interactive conception of Voyager and its predecessors remains central: human and/or non-human musicians engage in live, completely improvised dialogue with a computer-driven, interactive "virtual improvisor."
The computer program analyzes aspects of the improvisors' performance in real time, using that analysis to guide an automatic composition (or, if you will, improvisation) program that generates both complex responses to the musician's playing and independent behavior arising from its own internal processes. The system does not need outside input to generate music and does not need to wait for such input to begin its performance. This kind of work deals with the nature of music and, in particular, with the processes by which improvising musicians produce it. Over the decades, I've found that the questions the work raises encompass not only technological or music-theoretical interests but also philosophical, political, cultural and social concerns.

George E. Lewis is the Edwin H. Case Professor of American Music at Columbia University. The recipient of a 2002 MacArthur Fellowship, a 1999 Alpert Award in the Arts, a 2011 United States Artists Walker Fellowship, and fellowships from the National Endowment for the Arts, Lewis studied composition with Muhal Richard Abrams at the AACM School of Music and trombone with Dean Hey. Lewis has been a member of the Association for the Advancement of Creative Musicians (AACM) since 1971; his work in electronic and computer music, computer-based multimedia installations, and notated and improvisative forms is documented on more than 140 recordings. His work has been presented by the BBC Scottish Symphony Orchestra, Boston Modern Orchestra Project, Talea Ensemble, Dinosaur Annex, Ensemble Pamplemousse, Wet Ink, Ensemble Erik Satie, Eco Ensemble, and others, with commissions from American Composers Orchestra, International Contemporary Ensemble, San Francisco Contemporary Music Players, Harvestworks, Ensemble Either/Or, Orkestra Futura, Turning Point Ensemble, 2010 Vancouver Cultural Olympiad, IRCAM, Glasgow Improvisers Orchestra, and others.
Lewis has served as Ernest Bloch Visiting Professor of Music, University of California, Berkeley; Jean Macduff Vaux Composer-in-Residence and Darius Milhaud Visiting Professor, Mills College; Paul Fromm Composer in Residence, American Academy in Rome; Resident Scholar, Center for Disciplinary Innovation, University of Chicago; and CAC Fitt Artist in Residence, Brown University. Lewis received the 2012 SEAMUS Award from the Society for Electro-Acoustic Music in the United States, and his book, A Power Stronger Than Itself: The AACM and American Experimental Music (University of Chicago Press, 2008), received the American Book Award and the American Musicological Society's Music in American Culture Award. Lewis is the co-editor of the forthcoming two-volume Oxford Handbook of Critical Improvisation Studies and is composing Afterword, an opera commissioned by the Gray Center for Arts and Inquiry at the University of Chicago, to be premiered at the Museum of Contemporary Art Chicago in Fall 2015.

Sandeep Bhagwati with Navid Navab: Native Alien

Native Alien is a composition architecture implemented as a software environment that generates a musical performance in close interaction with a live musician. Assembled from core modules of several Max/MSP-based or Max-compatible IRCAM software packages (OMAX, CATART, SPAT, BACH) and various open-source tools, the Native Alien software environment listens to a musician as she improvises on various notational, conceptual and sonic "seeds" and, after a multi-layered analysis and tagging process, generates digital music shaped by the system's various pre-established but highly fluid "states". The outcome is music that reflects the musician's agency but re-contextualizes it. In some cases this process generates music that offers some kind of sonic or melodic/rhythmic echo, but in most cases what carries over most is the musician's high-level behaviour: phrase length, noisiness, dramaturgy, dynamicity.
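To make "high-level behaviour" a little more concrete, the Python sketch below derives two of the descriptors named above, phrase length and dynamicity, from a stream of per-frame loudness values. It is not taken from Native Alien, which is assembled from Max/MSP-based modules; the silence threshold, the frame rate, and the use of within-phrase loudness range as a stand-in for "dynamicity" are assumptions made only for illustration.

```python
from dataclasses import dataclass


@dataclass
class PhraseProfile:
    mean_phrase_seconds: float   # average duration of sounding phrases
    dynamicity: float            # hypothetical proxy: mean loudness range within a phrase


def segment_phrases(loudness: list[float], silence: float) -> list[list[float]]:
    """Split per-frame loudness (0..1) into phrases separated by frames below `silence`."""
    phrases, current = [], []
    for value in loudness:
        if value >= silence:
            current.append(value)
        elif current:
            phrases.append(current)   # a quiet frame closes the current phrase
            current = []
    if current:
        phrases.append(current)       # close a phrase still sounding at the end
    return phrases


def profile(loudness: list[float], frame_rate: float, silence: float = 0.05) -> PhraseProfile:
    """Summarise a performance excerpt by phrase length (seconds) and loudness range."""
    phrases = segment_phrases(loudness, silence)
    if not phrases:
        return PhraseProfile(0.0, 0.0)
    mean_len = sum(len(p) for p in phrases) / len(phrases) / frame_rate
    dyn = sum(max(p) - min(p) for p in phrases) / len(phrases)
    return PhraseProfile(mean_len, dyn)


if __name__ == "__main__":
    frames = [0.0, 0.2, 0.6, 0.4, 0.0, 0.0, 0.3, 0.3, 0.0]  # made-up loudness values
    print(profile(frames, frame_rate=2.0))
```

Descriptors of this kind say nothing about which notes were played, which is one way a system can carry over a musician's behaviour while re-contextualizing the actual sound.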
Native Alien subscribes to a rather comprehensive view of mixed interactivity, as involving a superposition of pre-organized score and improvisation; both dialogical and non-dialogical relationships between musician and system; both samples (albeit live-recorded) and live processing; and both a sonic architecture and live sound treatment. As its name implies, the Native Alien environment is both an instrument to be learned ("native") and an "alien" environment whose hidden rules may at times be unfathomable to the musician, even while the performance's overall architecture remains intelligible and accessible to the audience. Indeed, the central idea of Native Alien is one of permanent unsettledness: an environment constantly wavering between the reliability of a trusting dialogue and the surprises of vigorous contestation. Native Alien is an attempt at a largely tradition-agnostic music environment. The actual modes of interacting with the system, and the sonic signature of the resulting sounds, allow for a wide variety of approaches to music making. The system's agnosticism lies in the fact that it is as unsettling and unfamiliar in its structure for a free jazz improviser as for a Hindustani musician, requires the same degree of learning new interaction skills from a new music performer as from a Chinese musician, and demands a similar degree of aesthetic openness from an early music performer as from a traditional jazz performer. We will see what it demands of other improvising machines!

Sandeep Bhagwati is a multiple award-winning composer, theatre director and media artist. His compositions and comprovisations in all genres (including six operas) have been performed by leading performers at major venues and festivals worldwide. He has directed international music festivals and intercultural exchange projects with Indian and Chinese musicians and important new music ensembles.
He was Professor of Composition at Karlsruhe Music University and Composer-in-Residence at IRCAM Paris, ZKM Karlsruhe, the Beethoven Orchestra Bonn, IEM Graz, CalArts Los Angeles, Heidelberg University and the Tchaikovsky Conservatory Moscow. As Canada Research Chair for Inter-X Arts at Concordia University since 2006, Professor Bhagwati currently directs matralab, a research/creation center for intercultural and interdisciplinary arts. His current work centers on comprovisation, inter-traditional aesthetics, the aesthetics of interdisciplinarity, gestural theatre, sonic theatre and interactive visual and non-visual scores.

Navid Navab is a Montreal-based alkemist, composer/improvisor, programmer and sound designer. A graduate of the Ontario Royal Conservatory of Music, Concordia University's Electroacoustics and Computational Arts program, and McGill Music Technology, he has spent the past several years creating deeply expressive media instruments, synthesizing research at IRCAM, CIRMMT, CNMAT, the Topological Media Lab and Matralab. Interested in the poetics of gesture, materiality and embodiment, his work explores the social lives of objects and the enrichment of their embedded performative qualities. Navid uses gestures, rhythms and vibrations from everyday life as the basis for real-time sound generation, resulting in augmented acoustical poetry that enchants improvisational and pedestrian movements.

Michael Young: piano_prosthesis

The aspiration is to create a computational system able to collaborate with human improvisers in performance, i.e., able to cooperate proactively, on an equal basis. This is the agenda of the UK-based Live Algorithms for Music network, created in 2004 by Michael Young and Tim Blackwell (Goldsmiths). This system is one of a developing series of pieces that bring together a specific instrument with a related (and transformed) library of samples and real-time manipulations.
This is the material the computer can access and transform in performance in response to the musician's improvisation. The system's musical behaviours, as well as its library of materials, distinguish each version. The computer encodes the musician's improvisation through a statistical analysis of extracted features, cataloguing these in real time. The system assigns each of its observations to a specific set of materials and stochastic behaviours for audio output. The computer then recognises recurring aspects of the player's performance and 'expresses' this recognition by recalling the relevant set of output materials. As the improvisation develops, more behaviours are catalogued, leading to more variety in the musical output. So the machine expresses its recognition of, and creative response to, the player by developing and modifying its own musical output, just as another player might. Both 'musicians' adapt to each other through mutual listening and response as the performance develops. The metaphor of prosthesis, rather than conversation, has some currency in debates about user-computer interaction; in this performance there is a mutually prosthetic relationship between the two collaborators, in both sound material and quasi-intentional behaviour. The system is coded in Max/MSP. The challenge in this experimental performance is the substitution of another improvising machine system for the live performer. Communication between the systems remains the same, however: via audio (i.e., listening), not direct control.

Michael Young is a composer and currently Pro Warden (Students and Learning) at Goldsmiths, University of London, UK. He is co-founder of the EPSRC-funded Live Algorithms for Music network (2004). He studied at the Universities of Oxford and Durham, completing a PhD in Composition in 1995.
His work has focused on interactive systems: Argrophylax (2006) and ebbs- (2007) are score-based pieces fusing live performance and mutable computer actions (CD Oboe+ Berio and Beyond, Oboe Classics CC2015). The photo/soundscape exhibition New World Circus is his most recent collaboration with artist John Goto. Groundbreaking: Past Lives in Grains and Pixels (2007) and Exposure: Living in Extreme Environments (2008) are generative audiovisual installations developed with environmental scientist Paul Adderley with support from the Research Councils UK. The _prosthesis series has been developing since 2007 and includes versions for clarinet, trio, flute and oboe as well as piano. Chris Redgate's latest CD release (Electrifying Oboe, Métier) includes two versions of oboe_prosthesis. www.michaelyoung.info
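The catalogue-and-recall process described for piano_prosthesis can be illustrated with a toy Python sketch. It is not Young's Max/MSP implementation. It assumes each analysis window has already been reduced to a short feature vector, and it substitutes a simple nearest-centroid rule with a fixed distance threshold for the statistical analysis mentioned in the notes; the threshold, the material-set labels and the single note-probability parameter standing in for "stochastic behaviours" are all hypothetical. A window close to an existing category counts as a recurrence and recalls that category's materials; anything else is catalogued as a new category with its own randomly assigned output.

```python
import math
import random
from dataclasses import dataclass, field


@dataclass
class Category:
    """One catalogued behaviour: running mean of its features plus its assigned output."""
    centroid: list[float]
    count: int
    material_set: str          # hypothetical label for a set of samples and processes
    note_probability: float    # hypothetical stand-in for a stochastic behaviour


@dataclass
class Catalogue:
    threshold: float                       # max feature distance counted as a recurrence
    materials: list[str]                   # hypothetical pool of material-set labels
    rng: random.Random = field(default_factory=random.Random)
    categories: list[Category] = field(default_factory=list)

    def observe(self, features: list[float]) -> Category:
        """Recognise a recurring behaviour, or catalogue a new one."""
        best, best_dist = None, math.inf
        for cat in self.categories:
            dist = math.dist(features, cat.centroid)
            if dist < best_dist:
                best, best_dist = cat, dist
        if best is not None and best_dist <= self.threshold:
            # Recognition: refine the running mean and recall the same output set.
            best.count += 1
            best.centroid = [c + (f - c) / best.count
                             for c, f in zip(best.centroid, features)]
            return best
        # New behaviour: give it its own materials and stochastic output.
        new = Category(list(features), 1, self.rng.choice(self.materials),
                       self.rng.uniform(0.2, 0.8))
        self.categories.append(new)
        return new

    def respond(self, cat: Category, steps: int) -> list[str]:
        """Stochastic output: each step plays from the recalled material set or rests."""
        return [cat.material_set if self.rng.random() < cat.note_probability else "rest"
                for _ in range(steps)]


if __name__ == "__main__":
    system = Catalogue(threshold=0.2, materials=["A", "B", "C"], rng=random.Random(1))
    for window in ([0.1, 0.3, 0.2], [0.9, 0.7, 0.8], [0.12, 0.31, 0.22]):
        category = system.observe(window)
        print(category.material_set, system.respond(category, 8))
    print(len(system.categories), "behaviours catalogued")
```

Recurrences feed back into the stored category, so repeated gestures keep recalling the same material while new gestures enlarge the catalogue, which loosely mirrors the notes' point that variety grows as more behaviours are catalogued.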