Level 2 - Faculty of Humanities

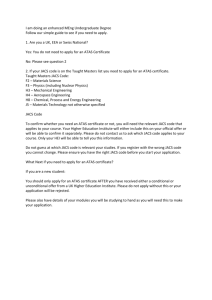

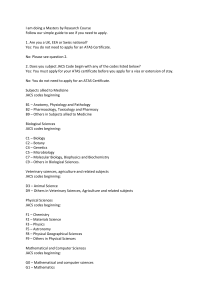

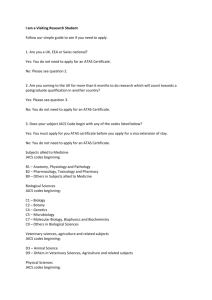

July 2011

Faculty of Humanities

Information on the presentation and interpretation of results from the National Student Satisfaction Survey

This document is intended to provide some background information for colleagues in Humanities to aid them in understanding the results of the National Student Survey. The information presented is a digest of the answers given to questions that have been asked of the Faculty Teaching and Learning Office over the past few years, and therefore may not cover every query colleagues may have.

Reports that are made available:

- Report by JACS code – publicly available data
- Report by UoM Schools or subject areas – internally available data mapping results to internal School and Discipline structures
- Institutional comparisons
- Student comments

Information is provided on the following:

A. Publicly available data: NSS results by JACS code
B. Internally available results: subject area results mapped to Schools and disciplines in Humanities
C. Changes to presentation of NSS data for 2011: overall satisfaction results including sector adjusted benchmarks
D. Use of publicly available data: information displayed on Unistats website

A: Publicly available data: NSS results by JACS code

1. Publicly available results: These are the formal results that are published by Ipsos-Mori on behalf of HEFCE. Data is only made publicly available if there is a 50% response rate, together with at least 23 respondents. These results will eventually be published on the Unistats web pages.

2. If UoM’s student response does not meet the criteria for the results for a particular subject area to be made publicly available, there will be no data for that particular year. In terms of then publishing data to the Unistats website, Ipsos will do one or both of the following:

i. combine the data with that from the previous year in order to give a value for that subject at JACS Subject Level 3 (see 5 below re levels). The data will be flagged as representing two years.
ii. publish the result for a higher level JACS subject area. For example, a small language may not have enough responses to externally publish a separate result, but the aggregated result for a Level 2 subject area, such as European Languages and Area Studies, would be published on Unistats.

3. What are the JACS subject areas? JACS is the Joint Academic Coding System. HE institutions use JACS codes to report to HEFCE what students are studying. The codes do not reflect the internal structures of any particular institution. When a new programme is set up, a JACS code is assigned (or more than one if it is appropriate). The NPP1 form asks the proposer to indicate which JACS code(s) should be applied.

4. Therefore, when the JACS subject area NSS score appears alongside your programme on the Unistats website, the score shown is not necessarily just for your particular programme but for the subject area, and may reflect satisfaction on other programmes as well as your own.

5. What do the different levels of JACS code mean? There are many different JACS codes and each area is sub-divided, giving up to four different levels of coding: Level 1 (19 subjects), Level 2 (41 subjects), Level 3 (107 subjects) and Principal Subject JACS (165 subjects). For example:

- Level 1: Social Studies
- Level 2: Economics; Politics; Sociology, Social Policy and Anthropology; Social Work; Human and Social Geography
- Level 3: Economics; Politics; Sociology; Social Policy; Anthropology; Others in Social Studies; Social Work; Human and Social Geography
- Principal Subjects: L1 - Economics; L2 - Politics; L3 - Sociology; L4 - Social Policy; L6 - Anthropology; L0 - Social studies; L9 - Others in Social studies; L5 - Social Work; L7 - Human and Social Geography

6. The complete JACS classification is on the HESA (Higher Education Statistics Agency) website. The JACS subject codes are not the same as the UCAS JACS codes but these are linked through the Principal Subject code.
For example, the UCAS code for BSocSc Sociology is L300 and for the BA(Econ) Sociology is L302.

7. Joint Honours programmes: the responses from students on joint honours programmes are divided between the two JACS subject areas comprising their programme (i.e. 0.5 FPE each). The student is responding to questions about their programme as a whole, so there is no distinction in the scores given by students on joint honours programmes between the two subject areas (for example, a ‘4’ on teaching quality will be a 4 for each area but will count as 0.5 FPE in calculations).

8. What is the FPE? The Full Person Equivalent figure appears alongside the NSS score. It indicates the sample size that Ipsos-Mori targeted within the particular JACS subject code. This may not match the number of students that you have registered to a programme in your School that comes under that subject area code, as it is likely to include students on programmes from outside of your School that are also assigned to that particular JACS subject area code.

9. Cross institutional programmes: For Humanities, this applies to Architecture (SED). 36% of the Architecture students are registered with UoM, the remainder being registered with MMU. The Architecture students do not know which institution they are assigned to but respond about their student experience as a whole within the Joint School of Architecture. Students’ responses are assigned to the institution to which the student is assigned at registration (they register with the Joint School and not any particular institution, and 36% are assigned to UoM). The size of the cohort assigned to UoM is much smaller than that assigned to MMU. Therefore, a slight difference in satisfaction for a small number of students can influence the result significantly either way.
For the 2010 NSS, question 22:

- UoM: Architecture: 70% were satisfied with the quality of teaching in this subject area (23 out of 42 responded)
- MMU: Architecture: 87% were satisfied with the quality of teaching in this subject area (133 out of 196 responded)

The impact of a few students can be demonstrated as follows: 16 out of 23 students being satisfied gives the 70% for the UoM stat. If 4 more students were satisfied (20 out of 23), this would increase to 87%. It would be expected that the scores for Architecture at UoM and MMU would be the same. However, the smaller percentage of students assigned to UoM means that slight differences in satisfaction have a bigger impact on the percentage score.

10. Why do some institutions not show up in the institutional comparison data? This is because those institutions have not met the threshold for publicly publishing results.

B: Internally Available Results: subject area results mapped to Schools and disciplines in Humanities

11. NSS results are also reported within the institution based upon the internal School and discipline structures.

12. If a subject area does not meet the 50% threshold, together with the 23 respondents minimum requirement, HEFCE will allow institutions to publish these results internally provided there are at least 10 respondents. These results are given according to our own internal School or discipline areas. The dataset therefore would consist of the publicly available data plus this additional internally available data.

13. The University is given the opportunity to assign codes to the students who form the sample for the NSS. We use our School and Discipline codes assigned in Campus Solutions (Level 3 and Level 4 respectively). These are the codes assigned as the ‘owning’ School or discipline. In Humanities some Schools use the Level 3 code and multi-disciplinary Schools tend to use the Level 4 codes.
For example: SAHC = 3020, History is 4050

NB: 2010 was the first year that the University had reliable results at Level 4, i.e. for disciplines within Schools.

14. Every programme is assigned to an academic group in Campus Solutions (3xxx or 4xxx) which allocates them to the owning School or discipline for their programme. It is this link that assigns the relevant School or discipline code to the students in the NSS population.

15. For 2011, as a pilot the University (Student Experience Office) has created codes for some of the larger interdisciplinary subject areas. For example, Economics has been broken down into BA Econ, BEconSc, etc. Scores will also be reaggregated to be able to return discipline area and School scores for comparison with previous years. The pilot is to ascertain whether this makes the internally available data more useful.

School: School of Social Sciences

| Discipline | Student numbers |
|---|---|
| 4002 | 37 |
| 4125 | 51 |
| 4186 | 165 |
| 4187 | 58 |
| BA_ECON_ACC_FIN | 200 |
| BA_ECON_BUS_STD | 117 |
| BA_ECON_ECON | 127 |
| BA_ECON_ECON_& | 151 |
| BA_ECON_OTHER | 54 |
| BA_ECON_SC | 80 |
| Total | 1040 |

16. Joint Honours Programmes: On Campus Solutions the owning organisation will be split in accordance with the two disciplines comprising the programme.

i. If the programme crosses two Schools, the results for that programme will be assigned to the ‘home’ School, which is normally the one listed first in the ‘owning’ organisations section on Campus Solutions. For example, for BA (Hons) X and Y, where the administering School is home to discipline X, the responses from students on that programme will be assigned to the administering School.

ii. If the programme crosses two disciplines within the same School, the results for that programme will be assigned to the first of the disciplines listed as an ‘owning’ organisation in Campus Solutions.
For example, for BA (Hons) X and Y, where X and Y are in the same School, if the internal data reports results at discipline level, the responses from students on that programme will be assigned to discipline X.

17. Ipsos-Mori makes the data for the University’s respondents available to us and we are only allowed to publish this mapped data internally, and can only do that if the threshold of 10 respondents is met.

18. Data marked “Internal Use Only” may not be used outside the University.

19. Why do figures appear to differ between the publicly available data and the internally available data? The assignment of student respondents to a JACS subject area may differ from their assignment to an internal School or Discipline, and this can skew the different reports. For example, for the 2010 NSS, Modern Middle Eastern History must be reported under a History JACS subject area code as well as a Middle Eastern Studies JACS code. Responses are therefore included in History and African and Modern Middle Eastern Studies for the publicly available data, and reported only under LLC and MES on the internal data. This creates a difference between the publicly available results and internal results for MES.

C: Changes to presentation of NSS data for 2011: overall satisfaction results including sector adjusted benchmarks

20. It was reported to Senate on 22nd June 2011 that HEFCE are planning to change the way in which the whole institution table for Question 22 results would be published. “…. comparisons based purely on the aggregate scores, both between institutions and between courses within institutions may be misleading if adjustments are not made for student profiles and course characteristics” (HEFCE letter to the President 25 May 2011, included in Senate papers).
Underpinning this approach are the following principles:

- The need to avoid simplistic comparisons of institutions that do not take into account subject mix and student characteristics
- The need to be consistent in the treatment of data at institutional level (i.e. to provide relevant benchmarks and significance indicators alongside the raw data)
- The importance of selecting relevant factors in the calculation of the benchmarks, i.e. those variables over which the institution has limited or no control.

21. For 2011, sector-adjusted benchmarks will be released alongside the raw results, with significance indicators. Results for Question 22 from the 2010 data will also be published in this new format.

22. The benchmarking factors to be used are those that are considered to have a consistent and material effect on responses to Question 22 and are largely outside the institution’s control:

- subject
- ethnicity
- age
- mode of study
- sex
- disability

23. Sector averages will be calculated for each benchmarking group. The benchmark for each institution will then be calculated by taking a weighted average of the scores for benchmarking groups, taking into account the mix of students.

24. Example: The table below gives the benchmark calculations for a simple sector with only three institutions and only two factors that affect performance. The “Sector rates” column contains the sector results for each of the benchmark groups. The next three columns show the number of students in each benchmark group for each institution. The bottom row shows the benchmark of each institution; this is calculated by taking a weighted average of the sector rates for the benchmark groups. For example, institution A’s benchmark is calculated as (10 x 50% + 20 x 60% + 5 x 40% + 10 x 55%)/45 = 54%.

| Age | Sex | Sector rates | Institutional population A | Institutional population B | Institutional population C |
|---|---|---|---|---|---|
| Young | Male | 50% | 10 | 10 | 20 |
| Young | Female | 60% | 20 | 5 | 15 |
| Mature | Male | 40% | 5 | 20 | 5 |
| Mature | Female | 55% | 10 | 10 | 5 |
| Institutional Benchmarks | | | 54% | 48% | 53% |

25. Significance indicators will be presented alongside the benchmarks.
These are designed to aid users in determining whether the difference between the indicator and its benchmark is both statistically and materially significant. Historically these tests have been based on a difference of more than three standard deviations and at least three percentage points.

D: Use of publicly available data: information displayed on Unistats

26. The information displayed on Unistats is a mix of FPE and head counts.

27. % Student Satisfied: is the percentage of students studying that subject at that University who said they either definitely agree or mostly agree to the National Student Survey question "Overall, I am satisfied with the quality of the course".

28. What students thought about their course: this information is displayed when a user of the Unistats website selects the National Student Survey tab when viewing statistics on a particular course at UoM. There are 3 levels of information a user could view (for each level, only the information on responses to question 22 is shown for the purposes of giving an example):

Level 1

Overall, I am satisfied with the quality of the course.

|  | Agree | No. respondents |
|---|---|---|
| THE UNIVERSITY OF MANCHESTER: Music (f/t, f/d) | 87% | 36 of 49 |

Level 2

Overall, I am satisfied with the quality of the course.

- 1 = Definitely disagree
- 2 = Mostly disagree
- 3 = Neither agree nor disagree
- 4 = Mostly agree
- 5 = Definitely agree

|  | 1 | 2 | 3 | 4 | 5 | n/a | No. respondents |
|---|---|---|---|---|---|---|---|
| THE UNIVERSITY OF MANCHESTER: Music (f/t, f/d) | 0% | 10% | 3% | 41% | 46% | 0 | 36 of 49 |

Level 3

Where the course has a small number of students, it is possible that the % Agree is less precise. This is more likely as the difference between the upper and lower percentages increases.

Overall, I am satisfied with the quality of the course.
|  | Lower | Agree | Upper | No. respondents |
|---|---|---|---|---|
| THE UNIVERSITY OF MANCHESTER: Music (f/t, f/d) | 70% | 87% | 95% | 36 of 49 |

Further information

29. Further information, including previous results, can be found on the Planning Support Office website at: http://www.campus.manchester.ac.uk/planningsupportoffice/PSO/MI/StudentFeedback/index.html

Acknowledgements

Thanks to the following for their input to this guide:

- Jenny Wragge, Student Experience Officer, Students Services Centre
- Kevin Blake, Planning Officer (External Reporting), Planning and Support Office
- Emma Rose, Senior QAE Administrator, Faculty of Humanities

July 2011
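
For colleagues who wish to check the arithmetic described in paragraphs 1, 9, 12 and 24 of this guide, the calculations can be sketched in a few lines of Python. This is an illustrative sketch only and is not part of the HEFCE or Ipsos-Mori methodology: the function names and status labels are hypothetical, and it assumes that "a 50% response rate" means at least 50%.

```python
# Illustrative sketch only: names and labels below are hypothetical and
# are not taken from HEFCE, Ipsos-Mori or Unistats.


def publication_status(respondents: int, sample_size: int) -> str:
    """Classify a result using the thresholds in paragraphs 1 and 12.

    Assumption: "a 50% response rate" is read as "at least 50%".
    """
    meets_rate = respondents * 2 >= sample_size  # response rate >= 50%
    if meets_rate and respondents >= 23:
        return "public"
    if respondents >= 10:
        return "internal only"
    return "not published"


def percent_satisfied(satisfied: int, respondents: int) -> int:
    """Percentage satisfied, rounded to the nearest whole percent."""
    return round(100 * satisfied / respondents)


def benchmark(sector_rates: list, populations: list) -> int:
    """Weighted average of sector rates, weighted by an institution's
    student numbers in each benchmark group (paragraphs 23 and 24)."""
    weighted = sum(rate * n for rate, n in zip(sector_rates, populations))
    return round(weighted / sum(populations))


# Sector rates (%) from the example in paragraph 24, in the order:
# young male, young female, mature male, mature female.
SECTOR_RATES = [50, 60, 40, 55]

# Student numbers per benchmark group for institutions A, B and C.
POPULATIONS = {
    "A": [10, 20, 5, 10],
    "B": [10, 5, 20, 10],
    "C": [20, 15, 5, 5],
}

if __name__ == "__main__":
    # Paragraph 9: UoM Architecture, 23 respondents out of 42.
    print(publication_status(23, 42))   # public
    print(percent_satisfied(16, 23))    # 70
    print(percent_satisfied(20, 23))    # 87
    # Paragraph 24: benchmarks for the three example institutions.
    for name, population in POPULATIONS.items():
        print(name, benchmark(SECTOR_RATES, population))
```

Run as a script, this reproduces the figures quoted in the guide: 70% and 87% for the Architecture example, and benchmarks of 54%, 48% and 53% for institutions A, B and C.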