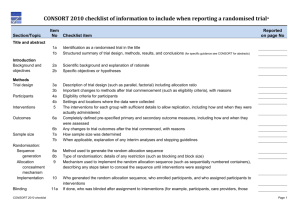

3a – Trial design

advertisement