

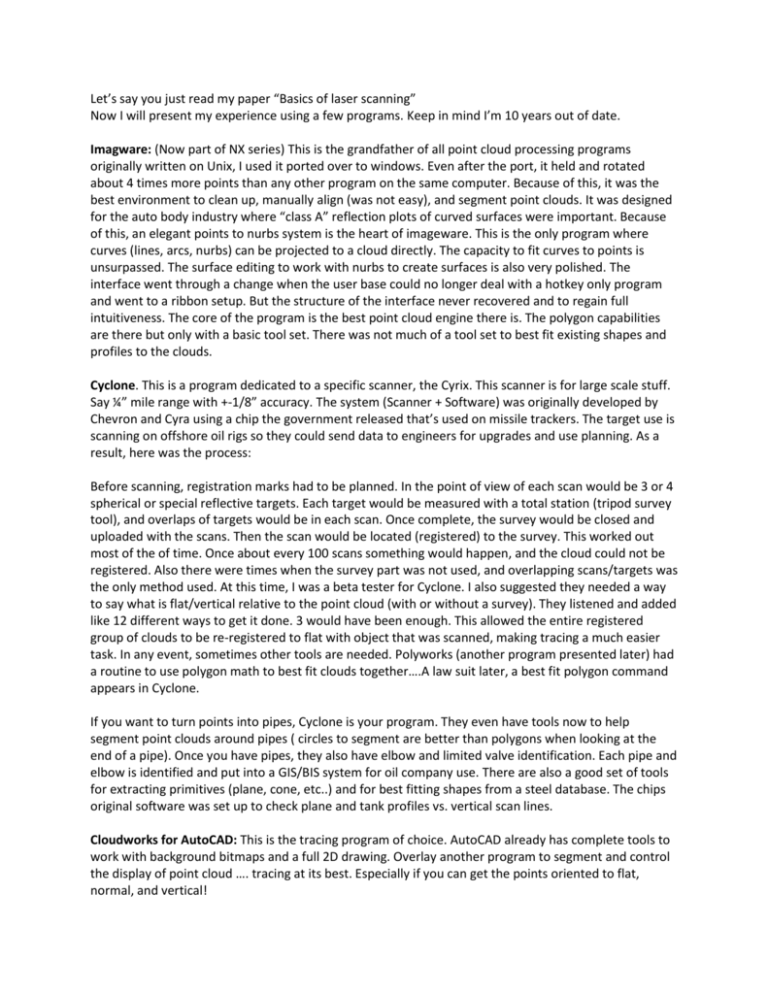

Let’s say you have just read my paper “Basics of laser scanning.” Now I will present my experience using a few programs. Keep in mind that I’m 10 years out of date.

Imageware (now part of the NX series): This is the grandfather of all point cloud processing programs. It was originally written for Unix; I used it ported over to Windows. Even after the port, it held and rotated about 4 times more points than any other program on the same computer. Because of this, it was the best environment to clean up, manually align (which was not easy), and segment point clouds. It was designed for the auto body industry, where “Class A” reflection plots of curved surfaces were important, so an elegant points-to-NURBS system is the heart of Imageware. This is the only program where curves (lines, arcs, NURBS) can be projected onto a cloud directly. Its capacity to fit curves to points is unsurpassed, and the surface editing tools for building NURBS surfaces are also very polished. The interface went through a change when the user base could no longer deal with a hotkey-only program, and it moved to a ribbon setup. But the structure of the interface never recovered, and it never regained full intuitiveness. The core of the program is the best point cloud engine there is. The polygon capabilities are there, but only with a basic tool set, and there was not much of a tool set for best-fitting existing shapes and profiles to the clouds.

Cyclone: This is a program dedicated to a specific scanner, the Cyrax. This scanner is for large-scale work: say a ¼ mile range with ±1/8″ accuracy. The system (scanner + software) was originally developed by Chevron and Cyra using a chip the government released that is used on missile trackers. The target use was scanning offshore oil rigs so the data could be sent to engineers for upgrades and use planning. As a result, here was the process. Before scanning, registration marks had to be planned: the point of view of each scan would contain 3 or 4 spherical or special reflective targets.
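Once each target has been picked out in a scan and shot by the total station, locating that scan to the survey comes down to a rigid rotation plus a translation. The sketch below is a minimal illustration, not Cyclone's method: it assumes exactly three non-collinear target centers already matched between scan and survey coordinates, and every function name in it is hypothetical. It builds an orthonormal frame from the three targets on each side and maps one frame onto the other. That is an exact three-point alignment rather than a least-squares best fit, so a fourth target is best used as a check: the distance left over at it shows how well the registration closed.

```python
import math

def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def unit(a):
    n = math.sqrt(dot(a, a))
    return (a[0] / n, a[1] / n, a[2] / n)

def frame(p0, p1, p2):
    """Orthonormal axes (as rows) built from three non-collinear target centers."""
    x = unit(sub(p1, p0))
    z = unit(cross(x, sub(p2, p0)))
    y = cross(z, x)
    return (x, y, z)

def transform(R, t, p):
    """Map one scan point into survey coordinates: R * p + t."""
    return tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))

def register_three_targets(scan_targets, survey_targets):
    """Hypothetical helper: exact alignment from 3 matched targets (not least squares)."""
    fs = frame(*scan_targets)
    fw = frame(*survey_targets)
    # R = Fw^T * Fs: express a vector in the shared target frame, then in survey axes
    R = tuple(tuple(sum(fw[k][i] * fs[k][j] for k in range(3)) for j in range(3))
              for i in range(3))
    # The translation carries the first scan target onto the first survey target
    t = sub(survey_targets[0], transform(R, (0.0, 0.0, 0.0), scan_targets[0]))
    return R, t

def residual(R, t, scan_pt, survey_pt):
    """Distance left over at a check target, e.g. the fourth sphere."""
    d = sub(transform(R, t, scan_pt), survey_pt)
    return math.sqrt(dot(d, d))
```

For example, with targets at (0, 0, 0), (1, 0, 0), (0, 1, 0) in the scan and (10, 20, 0), (10, 21, 0), (9, 20, 0) in the survey (a 90° turn plus a shift), `transform(R, t, (0, 0, 1))` lands at about (10, 20, 1).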
Each target would be measured with a total station (a tripod-mounted survey tool), and overlapping targets would appear in each scan. Once complete, the survey would be closed and uploaded with the scans. Then each scan would be located (registered) to the survey. This worked most of the time, but about once every 100 scans something would happen and the cloud could not be registered. There were also times when the survey was not used, and overlapping scans and targets were the only method used.

At this time, I was a beta tester for Cyclone. I suggested they needed a way to say what is flat and vertical relative to the point cloud (with or without a survey). They listened and added about 12 different ways to get it done; 3 would have been enough. This allowed the entire registered group of clouds to be re-registered to flat with the object that was scanned, making tracing a much easier task. In any event, sometimes other tools are needed. PolyWorks (another program presented later) had a routine that used polygon math to best-fit clouds together. A lawsuit later, a best-fit polygon command appeared in Cyclone.

If you want to turn points into pipes, Cyclone is your program. They even have tools now to help segment point clouds around pipes (circles are better than polygons for segmenting when looking down the end of a pipe). Once you have pipes, they also have elbow and limited valve identification. Each pipe and elbow is identified and put into a GIS/BIS system for oil company use. There is also a good set of tools for extracting primitives (plane, cone, etc.) and for best-fitting shapes from a steel database. The chip’s original software was set up to check plane and tank profiles against vertical scan lines.

CloudWorx for AutoCAD: This is the tracing program of choice. AutoCAD already has complete tools to work with background bitmaps and full 2D drawing. Overlay another program to segment and control the display of the point cloud, and you have tracing at its best.
Especially if you can get the points oriented to flat, normal, and vertical!

PolyWorks: This is a program dedicated to polygons. It uses a great best-fit routine to get the clouds together, which works well for smaller-scale scanners with only a few scans. It has a great set of polygon tools. There is also some primitive and shape fitting, but the power of the polygon tools and cloud fitting are the reasons to use the program. If you have a scan of, say, one side of a face or an archaeological dig, this is the right program.

Geomagic: This is another polygon program, but with what I would call a roller-ball approach. The specialty of this program is creating an enclosed solid of polygons out of scans, which is especially suited for things like hearing aids, teeth, and medical work in general. It takes work to get a sharp edge out of this program, but for applications where the points are treated like clay, shaped with a large ball bearing, it is a good set of tools.

Others worth a look: Rapidform, Rhino, CloudWorx for MicroStation, and integration with various GIS, BIM, and BIS systems. Also, every scanner comes with some software just to manage the scanned data, so there are a bunch of small start-ups right now trying to program this stuff.

Here is the key to the whole thing:
- Start with the core of Imageware.

1. Create a format for storing points with the following in mind:
   a. Fast-moving data = low-level coding = $$$
   b. Capturing and rotating millions of points per second in real time (spherical system)
   c. Data could be any frequency, starting with the color spectrum (laser, MRI, radar, etc.)
   d. The sensor frequency and slew rate would need to be stored
   e. The timing control settings, per device
   f. GPS, GIS, and other coordinate information
   g. A link to an entered database, with info like: Scan 5 at 123 Maple St.
   h. Redundancy settings; many scanners use redundancy to achieve accuracy
2. If you want it all, it’s going to cost ya! Pick a format.
   Now, is that format only for points, or also for other objects?
3. You have to create a dual engine: half for points, half for objects. The point half needs to be multithreaded. Connect that to the single-threaded engine of SE. I would want about 24 × 4 GHz cores with 3 ms access times to do real-time point cloud processing, and the best video card I could get, say on a $5K budget.
4. People wonder why I say so much power is needed when they can get X done with Y. It all depends on the scale. A knee replacement is a completely different animal than, say, a state capitol building (I have done both).
5. Now I will describe what I would call the full process.

Let’s say the task at hand is to scan a typical 2,000 sq. ft. house, inside and out. For that application, I want a 360° scanner with color, and a photo (or photos) taken from each scan location. For the outside, let’s say 12 scans covered most everything. Let’s also say 40 scans were used inside the house between 15 rooms and spaces. Each scan is 1 million locations with 4 colors, so with 40 scans we have 160 million points collected.

How do we put them together? With some pre-planning it’s simple. Each instrument has a compass heading and GPS location recorded. Once you use those to get the scans close, you use best-fit cloud information to get them together. But to do that right, you first have to clean the clouds. So before you can even look at the scans together, you are manually editing the point clouds to remove scanning artifacts.

Let me explain the artifacts. When a laser beam half hits the corner of an object, the measurement lands halfway between the corner and whatever the other half of the beam hit. Also, anything reflective, like chrome or a mirror, creates havoc with laser measurements; a bit of talcum powder can resolve some situations. In any event, there is stuff to clean up in laser scans. Think of how the 3D projections in Star Wars have lines in them: those lines are the artifacts being cleaned up.
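The half-hit corner case (often called a mixed pixel) can at least be flagged automatically before the manual pass. A minimal sketch, assuming the ranges come from one scan line in sweep order and that `jump` (a hypothetical threshold, in the same units as the ranges) is larger than the normal point-to-point change along a surface: a split beam reports a range between the near and far surfaces, so the point has a large step on both sides in the same direction. This is a heuristic, not any scanner vendor's filter. A surface seen at a grazing angle also trips it, so flagged points are candidates for review rather than automatic deletions.

```python
def flag_mixed_pixels(ranges, jump):
    """Indices of likely mixed (edge) pixels along one scan line.

    ranges: distances from the scanner, in the order the beam swept them.
    jump:   smallest range step treated as a real edge (same units as ranges).
    """
    flagged = []
    for i in range(1, len(ranges) - 1):
        step_in = ranges[i] - ranges[i - 1]
        step_out = ranges[i + 1] - ranges[i]
        # A split beam lands *between* the near and far surfaces, so the point
        # has a big step on both sides, and both steps point the same way.
        if abs(step_in) > jump and abs(step_out) > jump and step_in * step_out > 0:
            flagged.append(i)
    return flagged
```

`flag_mixed_pixels([5.00, 5.01, 5.02, 7.5, 10.0, 10.01], 0.5)` returns `[3]`, the point at 7.5 between a 5 m wall and a 10 m background. A lone spike such as `[5.0, 5.0, 9.0, 5.0, 5.0]` is not flagged, because its steps point in opposite directions; reflections off chrome or glass need a different check.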
So one cloud at a time, you open up the scans, rotate them until you find a good angle, then start slicing away the stuff you think is garbage. After about 30 hours at it, you start to get fast. It takes a while to learn how to read points: how they round out the inside corners and don’t catch the outside corners, how water and windows are reflective, and what a dog looks like running across the yard during a scan.

OK, all the scans are now clean. You then let the computer finish the job of putting the scans together. Now: what direction is up, where is north, what plane do you want to call the front of the building, and what is flat? Once you get all of that worked out, you can line up the points with the world, with the right skew towards flat and vertical for tracing. Keep in mind that during the entire process, the photos were used as a reference to help determine which points were valid and which should be deleted.

Now we start the business of converting the points to a format that is less heavy and more useful. There are varying degrees of accuracy. Let’s say we start with a simple room that’s about a 10′ cube. “About” was the key word there. Let’s say we isolated the points for the room. We could then isolate the points we think best represent each wall, convert them directly to planes, expand them, intersect them, then trim away the outsides of the planes, and end up with no corners at exactly 90°. Or the same could be done accepting implied vertical, flat, and normal to the world, forcing 90° corners at the cost of much less accuracy. Another route would be to use NURBS curves for the corners of the room and achieve very high accuracy with a low count of reference points. And if, say, a sculpture was on one wall, a polygon model would be the right thing for it. So even a cube-like object may not be that simple.

What ends up happening in situations like this is tracing. The tracing process can force flat to the world. The points get organized into pieces for tracing.
These pieces are plans, elevations, and so on. Making a 3D model by reverse engineering a house is not yet directly practical; the step of tracing is still required in between. Each window, door, molding, sink, and fixture would need to be traced individually. What you were thinking would be a simple process took forever. I know that today there are a few companies who specialize in exactly this and have it down to a science; they can kick out plans quickly, but it took them time and effort to develop that process.

One of the new things driving the desire for 3D scanners is 3D printing. This has very little to do with traditional (primitive) 3D modeling until you start to look at the required file size. If you can reduce the file size, you reduce the tooling requirements. Model files are much smaller than point files or even polygon files. NURBS are the one format in between, with one foot in both worlds. So in general, the formats rank by file size as follows: points, polygons, NURBS, primitives. The further down the line, the less data is required for the same surface area within a specified level of accuracy.

The goal of reverse engineering is, at its heart, the process of segmenting points for each desired level of accuracy, and then being able to raise or lower that level for selected regions. To be capable of this takes lots of processing power. Let’s say I wanted a bit more detail around the gutter of the house. I needed all 20 of the outside scans loaded to change how some of them were processed. How long did that take?

Taking a step back, what is needed is something like what Adobe PDF did for file size in 2D, but for full 3D vector data and 3D printing. All of the various formats have to be re-canned into one for printing. The existing formats are starting to fail at keeping up, and file sizes are growing again. I presented in an earlier post about processing point clouds that there are only so many ways to slice the cat.
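The ranking of points, polygons, NURBS, and primitives by file size can be put into rough numbers for one pipe segment. The sketch below is back-of-the-envelope arithmetic under stated assumptions, not a file-format specification: coordinates are stored as 4-byte floats, triangle indices as 4-byte integers, and headers and compression are ignored. The polygon version uses the fewest facets whose chord sag, r(1 - cos(pi/n)), stays within the tolerance; the NURBS version uses the standard exact circle of 9 weighted control points swept along the axis; the primitive is just the two axis end points and a radius. The function name is hypothetical.

```python
import math

F32 = 4  # bytes per 32-bit float (assumed storage)
U32 = 4  # bytes per 32-bit triangle index (assumed storage)

def cylinder_storage(r, length, spacing, tol):
    """Rough byte counts for one pipe segment stored four ways (no headers)."""
    # Points: raw grid at the scan spacing, x/y/z only
    around = math.ceil(2 * math.pi * r / spacing)
    along = math.ceil(length / spacing) + 1
    points = around * along * 3 * F32

    # Polygons: fewest facets n whose chord sag r*(1 - cos(pi/n)) is within tol,
    # giving 2n vertices (n at each end) and 2n triangles down the side
    n = math.ceil(math.pi / math.acos(1 - tol / r))
    polygons = 2 * n * 3 * F32 + 2 * n * 3 * U32

    # NURBS: exact circle = 9 weighted control points (x, y, z, w), 2 rows along
    # the axis, plus knot vectors of 12 (around) and 4 (along) values
    nurbs = 9 * 2 * 4 * F32 + (12 + 4) * F32

    # Primitive: axis start (3 floats), axis end (3 floats), radius (1 float)
    primitive = 7 * F32
    return {"points": points, "polygons": polygons,
            "nurbs": nurbs, "primitive": primitive}
```

For a 0.1 m radius pipe, 2 m long, scanned at 2 mm spacing with a 0.5 mm tolerance, `cylinder_storage(0.1, 2.0, 0.002, 0.0005)` gives about 3.8 MB of points, 1,536 bytes of polygons (32 facets around), 352 bytes of NURBS, and 28 bytes for the primitive.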
Once you have your cloud sliced up as desired, you convert it to some object. The exact way the points were sliced up was determined by the conversion process. So let’s look at the conversion processes. Once you have a set of points cleaned and isolated, you can directly convert it to:
- Plane
- Sphere
- Cylinder
- Cone
- Any predefined cross-section shape along a path (many ways)
- Line
- Arc
- NURBS curve
- NURBS surface
- Polygons
- Another point cloud

The existing database of objects becomes important right as you get started: AISC steel and pipe, for example. Other inputs, like photogrammetry, manual input, and laser reflectance, can also be used to speed up the process.

The general progression is points, polygons, NURBS, primitives:
- Polygons are fitted to the points by the computer.
- Primitives (2D and 3D) are used by the operator to segment the points (point segments).
- The segments are converted to geometry by the computer and the user (I’m thinking NURBS or polygons).
- The converted geometry is then used to make the desired model (NURBS and polygons to primitives).

Each method of creating a primitive would have its own way of growing the polygon or NURBS surface, depending on how crisp the corners need to be. After all of the conversions, many of the desired results, such as the corners, do not yet exist. Say you had a simple cube sitting there. What was converted was 5 planes; the underside is missing. All of the converted planes are smaller than the cube and inside it. The planes are grown and intersected, then the wireframe of the intersection is used to “model” the cube. When trying to do this, a problem occurs: the modeling fails because the corners are not at 90°. The vertical and flat thing came back to bite us. When converting simple objects, varying levels of control over orthogonal vs. flat vs. NURBS vs. polygon will need to be in place for every kind of geometry.
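The grow-and-intersect step, and the choice between keeping the fitted angles or forcing square corners, can be sketched in a few lines. In this minimal sketch each plane is assumed to be given as a normal plus one point on it (for example the centroid of the fitted wall points); `corner`, `angle_deg`, and `snap_to_axis` are hypothetical helpers, and the snapping tolerance is an illustrative parameter, not a standard value. Snapping a normal onto the nearest world axis is one simple way to accept implied vertical and flat before intersecting.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def corner(p1, p2, p3):
    """Intersect three planes, each given as (normal, point_on_plane)."""
    (n1, q1), (n2, q2), (n3, q3) = p1, p2, p3
    d1, d2, d3 = dot(n1, q1), dot(n2, q2), dot(n3, q3)
    denom = dot(n1, cross(n2, n3))
    if abs(denom) < 1e-12:
        raise ValueError("planes are (nearly) parallel; no single corner")
    a, b, c = cross(n2, n3), cross(n3, n1), cross(n1, n2)
    return tuple((d1 * a[i] + d2 * b[i] + d3 * c[i]) / denom for i in range(3))

def angle_deg(n1, n2):
    """Angle between two plane normals, folded into 0..90 degrees."""
    cos = abs(dot(n1, n2)) / math.sqrt(dot(n1, n1) * dot(n2, n2))
    return math.degrees(math.acos(min(1.0, cos)))

def snap_to_axis(n, tol_deg):
    """Replace a normal by the nearest world axis if it is within tol_deg of it."""
    length = math.sqrt(dot(n, n))
    u = tuple(v / length for v in n)
    i = max(range(3), key=lambda k: abs(u[k]))  # dominant component
    if math.degrees(math.acos(min(1.0, abs(u[i])))) <= tol_deg:
        return tuple(math.copysign(1.0, u[i]) if k == i else 0.0 for k in range(3))
    return u  # too far off any axis: keep the fitted direction
```

As a usage example, take a wall fitted with normal (1, 0.02, 0) through (1, 0, 0), a second wall with normal (0, 1, 0) through (0, 2, 0), and the floor z = 0. Intersecting them as fitted gives a corner at about (0.96, 2, 0) and an angle of about 88.85° between the walls. Snapping the first normal with a 2° tolerance forces it to (1, 0, 0) and moves the corner to (1, 2, 0): square, but no longer what was measured.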
If you ask what counts as a kind of geometry: metal extrusions, the human body, water and land erosion, architecture; these are all different kinds of geometry. If you want to get into reverse engineering, you have to do it all and do it well: points to polygons to NURBS to primitives, all with photo and GPS/GIS integration.

Examples of practical multi-software applications:

Road survey for delivery to a DOT:
- Register scans to GPS and total station.
- Clean the clouds of traffic and other noise.
- Trace the edge of the road using CloudWorx.
- Project the traced edge through the cloud using Imageware.
- Re-calculate the NURBS curve to polygons and export to MicroStation.

Structural tower: Besides the scans, I also like caliper measurements of any H- and I-beam edges. Convert 3 or 4 planes of each beam and determine the outer corners. Determine the beam size from a steel table. Extrude that beam down the extracted line. Repeat for each beam, tower, etc. It’s a fairly quick process. This worked well in Cyclone, as any mistakes were clearly obvious.

Full-on architecture: A combination of every tool possible is required. Consider converting a spiral staircase with complex banisters into the smallest possible file size with the least operator input. Program that! At minimum, the operator would have to confirm the cross sections of all extrusions and rotary profiles, with crispness settings to regain corners. The level of averaging would be another typical adjustment. All of this is a very slow process with current tools, using tracing and best-fit curves and surfaces piece by piece. The result was then moved into 3D modeling software, traditionally 3D Studio, for its mixture of primitive, NURBS, polygon, and bitmap tools.

Dirt pile: This is a great application for laser scanning. An old-school 2.5D civil TIN works wonders for this. The only decision is how much to thin the data, and how. Then you can report back the amount of coal or wood that is sitting in the pile.

I’m going to jump back to basics.
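For the dirt pile, the volume calculation itself is short. This sketch swaps the TIN for something simpler, a regular 2.5D grid with one mean height per cell, so it approximates the TIN approach rather than implementing it. It assumes the points are already leveled (z up) and that the ground under the pile is the flat plane z = `base_z`; the cell size is where the decision of how much to thin the data shows up. Cells that received no points contribute nothing, so gaps in coverage under-report the volume. The function name is hypothetical.

```python
import math
from collections import defaultdict

def pile_volume(points, cell, base_z=0.0):
    """Volume above a flat base from a 2.5D grid (one mean height per cell).

    points: (x, y, z) tuples, already leveled so z is up.
    cell:   grid spacing; a larger cell thins the data more.
    """
    sums = defaultdict(lambda: [0.0, 0])  # (i, j) cell -> [sum of z, count]
    for x, y, z in points:
        key = (math.floor(x / cell), math.floor(y / cell))
        sums[key][0] += z
        sums[key][1] += 1
    volume = 0.0
    for total, count in sums.values():
        height = total / count - base_z
        if height > 0:  # ignore cells whose mean sits below the base
            volume += height * cell * cell
    return volume
```

Points spread evenly over a 2 m × 2 m flat top at 1.5 m give about 6.0 m³ with `cell=0.5`.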
Cloud segmenting is at the core of all cloud processing, and being able to see the points is the most important part of segmenting. Back to the house case, where 20 scans were outside: can my computer rotate 80 million points in real time? Let’s say it can. What tools do we have? Most programs allow you to draw polygons or rectangles around a portion of a cloud from a point of view and isolate it. Some programs have cleaning tools that automatically reduce noise. What I have always looked for is cubes and spheres to isolate point regions. The best mixture of all the ways to slice up clouds has yet to be figured out. Also worth throwing into the mix is scanner reflectance. With color scans, the color can be used as a segmenting tool. How about some photogrammetry thrown in?

Negotiating between segmenting and modeling, along with flat and vertical, along with varying model complexity and size, is not an easy balance. I hope this helps. Once you open the can of point clouds, all of the above issues pop up.
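Cube, sphere, and color selections combine naturally as filters. The sketch below is a minimal illustration with hypothetical names (`Point`, `in_box`, `in_sphere`, `near_color`, `segment`). It keeps plain Python lists, whereas anything near 80 million points would need a spatial index and native code. Each selector returns a test, and `segment` keeps the points that pass all of them, so a box and a color range can be applied together. Unlike the polygon or rectangle drawn from one point of view, these selections are true 3D volumes.

```python
import math
from dataclasses import dataclass

@dataclass
class Point:
    x: float
    y: float
    z: float
    rgb: tuple = (0, 0, 0)  # 0..255 per channel

    @property
    def xyz(self):
        return (self.x, self.y, self.z)

def in_box(lo, hi):
    """Test for points inside an axis-aligned cube or box."""
    return lambda p: all(l <= v <= h for v, l, h in zip(p.xyz, lo, hi))

def in_sphere(center, radius):
    """Test for points within radius of center."""
    return lambda p: math.dist(p.xyz, center) <= radius

def near_color(target, tol):
    """Test for points whose RGB lies within tol of target."""
    return lambda p: math.dist(p.rgb, target) <= tol

def segment(points, *tests):
    """Points that pass every test; everything else stays in the cloud."""
    return [p for p in points if all(test(p) for test in tests)]
```

For example, `segment(cloud, in_box((0, 0, 0), (1, 1, 1)), near_color((200, 0, 0), 40))` pulls out the reddish points inside a 1 m cube.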