MS 401 Final Report

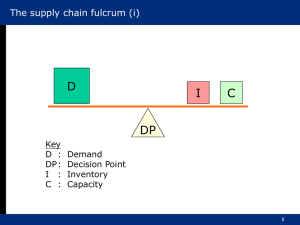


MS 401 Term Project Final Report
GROUP # 5
Can Cetin – 10594
Dogan Ugur Colak – 10611
Veysel Sonmez – 10389
Instructor: Murat Kaya
2009/2010 Spring

PART I: FORECASTING

The forecasting part of the project covers demand data for 6 products. These data had to be observed, analysed and forecast with Winters' triple exponential smoothing method. The method applies to seasonal data, that is, data that repeat over a fixed cycle such as a year, a month or a week. We first checked that Winters' method fits our data, as the project requirements had already anticipated. The demand data of one of the products is plotted below.

Graph 1: Demand data graph of Product 4 (demand versus period, periods 1 to 24)

Part a)

To obtain forecasts with Winters' method, we first need to construct the initial conditions. The project requires the initial slope ($G_{24}$) and the initial intercept ($S_{24}$). Both are computed from the midpoints of each year's demand data, denoted $V_1$ and $V_2$. We initialized S and G at period 24 because we must forecast the periods after 24: up to period 36 in part a) and up to period 28 in part b). The V, S and G values were calculated with the formulas below. Here $D_i$ is the demand in period $i$ and $N = 12$ is the number of periods in a season. In Excel, $V_1$ and $V_2$ are the averages of the first and the second twelve demand values.

$$V_1 = \frac{1}{N}\sum_{i=1}^{12} D_i, \qquad V_2 = \frac{1}{N}\sum_{i=13}^{24} D_i$$

$$G_{24} = \frac{V_2 - V_1}{N}$$

$$S_{24} = V_2 + \left[\frac{N+1}{2} - 1\right] G_{24}$$

The bracket $\left[\frac{N+1}{2} - 1\right]$ is the distance between $V_2$ and period 24. $V_2$ sits at the midpoint of the second year (period 18,5), so this distance equals 5,5.

After finding the slope and intercept for each product, we must specify the seasonal factors ($C_i$). These factors scale the forecast in each period of the season.
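The initialization can be sketched in Python as a minimal example. It assumes the demand values are held in a plain list with $D_1$ at index 0 rather than in spreadsheet cells. The function name `winters_init` is hypothetical. With purely linear demand, $S_{24}$ should reproduce $D_{24}$ and $G_{24}$ the trend, which makes a convenient sanity check.

```python
def winters_init(demand, n=12):
    """Return (V_1, V_2, G, S) at the end of two seasons of demand data.

    demand: list of 2*n demand values D_1 .. D_2n (index 0 is period 1).
    """
    if len(demand) != 2 * n:
        raise ValueError("need exactly two seasons of demand data")
    v1 = sum(demand[:n]) / n          # mean of year 1, centred at period (n+1)/2
    v2 = sum(demand[n:]) / n          # mean of year 2, centred at period n + (n+1)/2
    g = (v2 - v1) / n                 # the two centres are n periods apart
    s = v2 + ((n + 1) / 2 - 1) * g    # move from the year-2 centre to the last period
    return v1, v2, g, s


# Hypothetical trend-only demand: D_i = 100 + 10 i.
demand = [100 + 10 * i for i in range(1, 25)]
print(winters_init(demand))  # (165.0, 285.0, 10.0, 340.0)
```

In this example $S_{24} = 340$ equals $D_{24}$, and $G_{24} = 10$ recovers the trend.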
The seasonal factors can in principle be computed with

$$C_i = \frac{D_i}{V - \left[\frac{N+1}{2} - j\right] G_{24}}$$

Here $V$ is $V_1$ or $V_2$, the average of the year that contains period $i$. The index $j$ is the position of period $i$ within its year ($j = 1, \dots, N$). To simplify the work, we determined for each period its distance from the V point of its year, $j - \frac{N+1}{2}$. We listed these values as "Distance to V points" (Table 1). This removes the need to evaluate the bracket above for every period.

Table 1: "Distance to V points" table for determining seasonal factors

With this table, the formula becomes

$$C_i = \frac{D_i}{V + [\text{Distance to V point}]_i \, G_{24}}$$

This gives a seasonal factor for each of the 24 months. However, the factors should describe a single annual cycle. A given month cannot have two seasonal factors unless the factors are being updated. We therefore applied two sequential steps. First, we averaged the two factors that refer to the same month. For March, for example:

$$C_{\text{March}} = \frac{C_3 + C_{15}}{2}$$

In Excel this is average(C3 ; C15).

The seasonal factors should sum to the number of periods in a season, which is $N = 12$ in our project. In practice, the averaged factors frequently do not sum to 12. The second step therefore normalizes all of them. For March:

$$C_{\text{March}}^{\text{normalized}} = \frac{C_{\text{March}}}{\sum_{k=1}^{12} C_k} \times 12$$

The sum runs over the twelve averaged monthly factors. After this step, the seasonal factors sum to exactly 12. Finally, we calculated the part a) forecasts from the S, G and C values (the forecasts are in the spreadsheet).

Part b)

In part b), we were required to make one-step-ahead forecasts for the months between 24 and 28 by assigning arbitrary values to the smoothing constants.
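The seasonal-factor steps described above, the part a) forecast and the part b) one-step-ahead updating can be sketched together in Python. This is a minimal sketch under several assumptions:

- The initialization is repeated so that the block runs on its own.
- The part a) forecast uses the standard Winters form $F = (S_{24} + \tau G_{24})\,C$.
- The part b) updates are the standard Winters updates of S and G, with the seasonal factors held fixed as in the report, so Gamma is unused.
- The periods forecast in part b) are taken to be 25 to 28.

The data, the candidate (Alpha, Beta) pairs and the function names are hypothetical.

```python
def winters_setup(demand, n=12):
    """Return (S, G, seasonal factors) from two seasons of demand (D_1 at index 0)."""
    v = [sum(demand[:n]) / n, sum(demand[n:2 * n]) / n]   # V_1, V_2
    g = (v[1] - v[0]) / n                                 # G_24
    s = v[1] + ((n + 1) / 2 - 1) * g                      # S_24
    raw = []
    for i, d in enumerate(demand[:2 * n]):
        year, j = divmod(i, n)                 # j = 0-based position within the year
        distance = (j + 1) - (n + 1) / 2       # "Distance to V point", -5.5 .. 5.5 for n = 12
        raw.append(d / (v[year] + distance * g))
    # One factor per month: average the two years, e.g. March = (C_3 + C_15) / 2.
    averaged = [(raw[j] + raw[j + n]) / 2 for j in range(n)]
    total = sum(averaged)
    factors = [c / total * n for c in averaged]           # normalize so the factors sum to n
    return s, g, factors


def forecast(s, g, factors, last_period, horizon):
    """Part a): F = (S + tau * G) * C for periods last_period+1 .. last_period+horizon."""
    n = len(factors)
    return [(s + tau * g) * factors[(last_period + tau - 1) % n]
            for tau in range(1, horizon + 1)]


def one_step_mse(s, g, factors, actuals, first_period, alpha, beta):
    """Part b): one-step-ahead forecasts updating S and G; seasonal factors stay fixed."""
    n = len(factors)
    squared = []
    for k, d in enumerate(actuals):
        c = factors[(first_period + k - 1) % n]
        squared.append((d - (s + g) * c) ** 2)            # forecast made one period earlier
        s_new = alpha * d / c + (1 - alpha) * (s + g)
        g = beta * (s_new - s) + (1 - beta) * g
        s = s_new
    return sum(squared) / len(squared)


# Hypothetical data: 24 months of history and sales for periods 25..28.
history = [(100 + 10 * i) * (1.2 if (i - 1) % 12 < 6 else 0.8) for i in range(1, 25)]
sales = [420.0, 430.0, 445.0, 450.0]
s, g, factors = winters_setup(history)
print(forecast(s, g, factors, last_period=24, horizon=12))

# Try a few (alpha, beta) pairs and keep the one with the smallest MSE.
candidates = [(0.1, 0.1), (0.2, 0.3), (0.4, 0.5), (0.6, 0.7)]
best = min(candidates, key=lambda ab: one_step_mse(s, g, factors, sales, 25, *ab))
print("best (alpha, beta):", best)
```

Holding the seasonal factors fixed mirrors the report's choice, which is why no Gamma parameter appears.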
We were also required to find which values of the smoothing constants minimize the Mean Squared Error (MSE) for each of the six products. We had already obtained the S, G and C values, and we did not have to update the seasonal factors. We also had the sales data for the months between 24 and 28, so we could make one-step-ahead forecasts. However, we had to choose the Alpha, Beta and Gamma values ourselves. Gamma is in fact not needed, because the seasonal factors are not updated. We therefore specified eight different sets of Alpha, Beta and Gamma values for the MSE optimization, shown below:

Table 2: Smoothing constant values determined arbitrarily

For each product, we applied these smoothing constants one set at a time and recorded the resulting MSE. The best MSE solutions are below:

Table 3: The best MSE solutions found among 8 trials by changing smoothing constants

Finally, we compared the Alpha, Beta and Gamma values. As stated before, the Gamma values are redundant because the seasonal factors are not updated. The best Beta values vary widely. In Table 3, for instance, the Beta value that minimizes MSE is 0,3 for Product 4 but 0,7 for Product 3. The best Alpha values, by contrast, stay between 0,12 and 0,4. This suggests that relatively small (smoother) Alpha values are the key to a low MSE in part b). It also suggests that Alpha affects the result much more than Beta and Gamma.

PART II: INVENTORY MANAGEMENT

In the second part of the project, we developed an inventory policy for 25 products. The forecast, unit cost, setup cost, annual interest rate and lead time data were provided in the data file. In every Excel file we also created a data sheet, so that the model responds to any change in the data. If any value in the data sheet changes, the whole model updates immediately.

Part a)

In part II-a), we used an (s,S) policy based on the (Q,R) model.
We did not use the plain (Q,R) model because it does not fit this problem exactly: the lead times are likely to be long. In the (Q,R) model, the decision to order is based on the on-hand inventory. Because the lead times in this problem are long, we used the inventory position instead, and we checked it every week. The inventory position in the current week equals the inventory position of the previous week, plus the order released in the current week, minus the current demand. We set $s = R$ and $S = Q + R$. If the inventory position is less than s, we ordered (S − s) units, which are received after a number of weeks equal to the lead time. If the inventory position is at least s, we did not order.

From the forecast data, we determined the annual mean demand, the mean demand during the lead time and its standard deviation. We calculated the L(z) function and obtained the optimal Q and R values by iteration. This gave 25 different (Q,R) pairs, one per product, which we used in the rest of the calculations. We then calculated three costs:

- the holding cost, from the average inventory level;
- the setup cost, from the number of orders;
- the shortage cost, from the negative ending inventory.

To release orders, we checked the inventory position of the previous week. If it was smaller than s, we ordered S minus the inventory position. The orders arrive after a number of weeks equal to the lead time. We used Excel's OFFSET function to shift each released order by the lead time, so that it appears in the week in which it arrives.

Part b)

In this part, we repeated the calculations of part a), but without a shortage cost, because the stock-out cost is difficult to determine in some cases. Instead, we used a service level, which is a common substitute.
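The contrast between parts a) and b) can be sketched in Python. This is a minimal sketch under several assumptions:

- Lead-time demand is normally distributed.
- Part a) uses the textbook iterative (Q,R) procedure with a per-unit shortage cost p.
- Part b) uses a Type 1 service level with Q set to the EOQ. The report does not state how Q was chosen in part b), so that step is an assumption.

`NormalDist().inv_cdf` from Python's standard library plays the role of Excel's NORMSINV(). All function names and numbers are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

STD = NormalDist()  # standard normal; inv_cdf plays the role of Excel's NORMSINV()


def loss(z):
    """Standard normal loss function L(z) = phi(z) - z * (1 - Phi(z))."""
    return STD.pdf(z) - z * (1 - STD.cdf(z))


def qr_shortage_cost(lam, K, h, p, mu, sigma, tol=1e-6, max_iter=100):
    """Part a): iterate Q and R for annual demand lam, setup cost K, holding cost h,
    per-unit shortage cost p and normal lead-time demand (mu, sigma)."""
    q = sqrt(2 * K * lam / h)                  # start from the EOQ
    for _ in range(max_iter):
        ratio = q * h / (p * lam)              # required stock-out probability 1 - F(R)
        if ratio >= 1:
            raise ValueError("Q*h/(p*lam) >= 1: no valid reorder point")
        z = STD.inv_cdf(1 - ratio)
        r = mu + z * sigma
        n_r = sigma * loss(z)                  # expected shortage per cycle, n(R)
        q_new = sqrt(2 * lam * (K + p * n_r) / h)
        if abs(q_new - q) < tol:               # stop once Q no longer changes
            return q_new, r
        q = q_new
    return q, r


def qr_service_level(lam, K, h, mu, sigma, alpha=0.97):
    """Part b): Type 1 service level; Q is taken as the EOQ (an assumption)."""
    z = STD.inv_cdf(alpha)                     # NORMSINV(0.97) is about 1.88
    return sqrt(2 * K * lam / h), mu + z * sigma


# Hypothetical product: 5200 units/year, K = 200, h = 0.2 * 20 = 4 per unit-year,
# p = 25 per unit short, lead-time demand with mean 600 and standard deviation 75.
print(qr_shortage_cost(5200, 200, 4.0, 25, 600, 75))
print(qr_service_level(5200, 200, 4.0, 600, 75))
```

Because only z changes with the service level, recomputing it from the data sheet value reproduces the responsiveness described in the report.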
The service level is given as 97%, which means that the probability of not stocking out during the lead time is 97%. The corresponding z value is 1,88. As mentioned above, the model responds to data changes. We compute z with the NORMSINV() function, so if the service level changes, z changes with it. We repeated our calculations with the new Q and R values and computed the holding and setup costs. There is no stock-out cost here, since we use a service level instead.

Part c)

In this part, we took future demand and already placed orders into account. Orders will be received in weeks 31, 32 and 33, and we treated each as beginning inventory of its arrival week. For example, an order arriving in week 32 is added to the beginning inventory of week 32. Again for responsiveness, we added optional order cells for the remaining 42 weeks. This lets the model receive an order in, say, week 66. These orders can be considered scheduled receipts, so the model reacts to a scheduled receipt in any week, not just the first three. We again used the forecast data from the data sheet for the 45 weeks.

As the question requires, we used the Q and R values determined in part b). These values are not optimal for this part. We did not repeat the iteration or use the mean and standard deviation of the lead-time demand here, so we could not determine optimal Q and R values. As a result, stock-outs occur in many weeks, as the penalty cost in the Excel sheet shows.
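The weekly bookkeeping described for parts a) to c) can be sketched in Python. It follows these steps:

1. The previous week's inventory position triggers an order up to S.
2. Each order is added to the week that is `lead_time` weeks later (the role OFFSET plays in the spreadsheet).
3. Scheduled receipts are added to the beginning inventory of their arrival week.
4. Holding, setup and shortage costs are accumulated.

This is a minimal sketch under assumptions the report does not state:

- Weeks are indexed from 0, so week 31 of the report is index 0.
- Scheduled receipts count toward the initial inventory position because they are already on order.
- Holding cost is charged on positive ending inventory and shortage cost on negative ending inventory, each week.

The function `simulate` and its parameters are hypothetical.

```python
def simulate(demand, s, S, lead_time, on_hand, scheduled=None,
             h_week=0.0, K=0.0, p_week=0.0):
    """Weekly (s, S) bookkeeping; returns (holding, setup, shortage) cost.

    demand    -- forecast demand for weeks 0 .. T-1
    scheduled -- {week index: quantity} receipts already on order (part c)
    """
    T = len(demand)
    arrivals = [0.0] * (T + lead_time)         # receipts per week, incl. late arrivals
    for week, qty in (scheduled or {}).items():
        arrivals[week] += qty
    position = on_hand + sum(arrivals)         # on hand + on order (assumption)
    holding = setup = shortage = 0.0
    for t in range(T):
        if position < s:                       # last week's inventory position is below s
            qty = S - position                 # order up to S
            arrivals[t + lead_time] += qty     # arrives lead_time weeks later (cf. OFFSET)
            position += qty
            setup += K
        on_hand += arrivals[t] - demand[t]     # receipts join this week's beginning inventory
        position -= demand[t]                  # IP_t = IP_{t-1} + order_t - D_t
        holding += h_week * max(on_hand, 0)
        shortage += p_week * max(-on_hand, 0)  # negative ending inventory = shortage
    return holding, setup, shortage


# Hypothetical part c) run: 45 weeks, receipts of report weeks 31-33 at indices 0-2.
weekly = [100.0] * 45
print(simulate(weekly, s=650, S=1400, lead_time=6, on_hand=300,
               scheduled={0: 200, 1: 150, 2: 150}, h_week=4 / 52, K=200, p_week=25))
```

For the report's setting, s and S would come from the part b) values through $s = R$ and $S = Q + R$.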