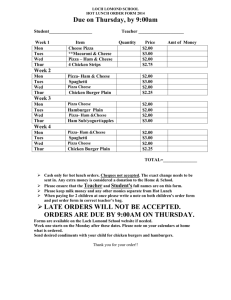

Exercise Compute J of fitnn1 - clic

advertisement

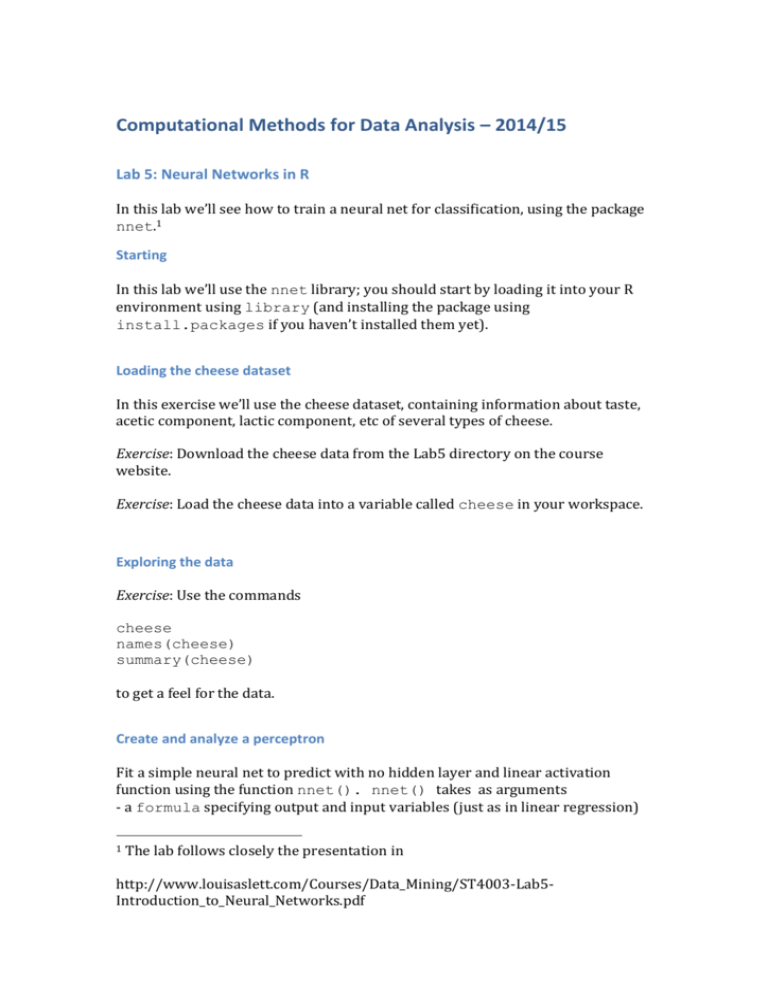

Computational Methods for Data Analysis – 2014/15

Lab 5: Neural Networks in R

In this lab we’ll see how to train a neural net for classification, using the package nnet [1].

Starting

In this lab we’ll use the nnet library. Start by loading it into your R environment with library(nnet), installing it first with install.packages("nnet") if you haven’t installed it yet.
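The two steps can be combined so that the package is installed only when it is missing. This is a minimal sketch; it assumes an internet connection and a configured CRAN mirror in case installation is needed.

```r
# Install nnet only if it is not already available, then attach it.
if (!requireNamespace("nnet", quietly = TRUE)) {
  install.packages("nnet")
}
library(nnet)
```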

Loading the cheese dataset

In this exercise we’ll use the cheese dataset, which contains information about the taste, acetic component, lactic component, etc. of several types of cheese.

Exercise: Download the cheese data from the Lab5 directory on the course website.

Exercise: Load the cheese data into a variable called cheese in your workspace.

Exploring the data

Exercise: Use the commands

cheese

names(cheese)

summary(cheese)

to get a feel for the data.

Create and analyze a perceptron

Fit a simple neural net to predict taste, with no hidden layer and a linear activation function, using the function nnet(). nnet() takes as arguments:

- a formula specifying output and input variables (just as in linear regression)

- a parameter data specifying the data frame to be used

- a parameter size specifying the number of hidden units (0 in this case)

- a parameter skip specifying whether the inputs are directly connected with the outputs or not

- a parameter linout specifying whether a linear activation function is desired.

(see http://cran.r-project.org/web/packages/nnet/nnet.pdf for full details of all the arguments)

[1] The lab follows closely the presentation in http://www.louisaslett.com/Courses/Data_Mining/ST4003-Lab5Introduction_to_Neural_Networks.pdf

For example, the following command creates a perceptron with three input variables and one output variable, using a linear activation function instead of the logistic function.

> fitnn1 = nnet(taste ~ Acetic + H2S + Lactic, cheese,

size=0, skip=TRUE, linout=TRUE)

> summary(fitnn1)

In the output of summary(), i1, i2 and i3 are the input nodes (Acetic, H2S and Lactic respectively); o is the output node (taste); and b is the bias. The values under the links are the weights.

Evaluating the predictions of a perceptron

Let us now use the J cost function implemented in Lab 1 to evaluate the model:

J = function (model) {

return(mean(residuals(model) ^ 2) / 2)

}
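J is half the mean squared residual, that is, the sum of squared differences between observed and fitted values divided by 2n. The sketch below checks this on R's built-in cars data, which is used purely as a stand-in (it is not the cheese data); the names fit_demo and J_by_hand are illustrative.

```r
# J as defined in the lab: half the mean squared residual of a fitted model.
J = function (model) {
  return(mean(residuals(model) ^ 2) / 2)
}

# Stand-in example on R's built-in cars data (not the cheese data).
fit_demo = lm(dist ~ speed, cars)

# The same quantity written out from observed and fitted values.
n = nrow(cars)
J_by_hand = sum((cars$dist - fitted(fit_demo)) ^ 2) / (2 * n)

print(J(fit_demo))
print(all.equal(J(fit_demo), J_by_hand))
```

Because J only relies on residuals(), it can be applied to any fitted object that stores its residuals.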

Exercise: Compute J of fitnn1.

Let us now compare this to the fit we get by doing standard least squares regression:

fitlm = lm(taste ~ Acetic + H2S + Lactic, cheese)

summary(fitlm)

Exercise: Compute J of fitlm.
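To see what to expect from this comparison without giving away the cheese results, here is a sketch on simulated data. The data frame sim and its variables x1, x2, x3 and y are hypothetical stand-ins. Since least squares minimizes the squared error, J of the linear model is a lower bound for J of the perceptron, and a well-converged perceptron should come very close to it.

```r
library(nnet)

J = function (model) {
  return(mean(residuals(model) ^ 2) / 2)
}

# Simulated stand-in data (hypothetical variables x1, x2, x3 and y).
set.seed(1)
sim = data.frame(x1 = rnorm(100), x2 = rnorm(100), x3 = rnorm(100))
sim$y = 2 + 1.5 * sim$x1 - 0.5 * sim$x2 + 0.8 * sim$x3 + rnorm(100, sd = 0.3)

# Perceptron: no hidden units, skip-layer connections, linear output.
nn_demo = nnet(y ~ x1 + x2 + x3, sim, size = 0, skip = TRUE,
               linout = TRUE, trace = FALSE)

# Ordinary least squares on the same data.
lm_demo = lm(y ~ x1 + x2 + x3, sim)

# Least squares minimizes J, so J(lm_demo) is a lower bound for J(nn_demo).
print(c(nnet = J(nn_demo), lm = J(lm_demo)))
```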

Adding a Hidden Layer With a Single Node

We now add a hidden layer with a single node between the input and output neurons.

> fitnn3 = nnet(taste ~ Acetic + H2S + Lactic, cheese,

size=1, linout=TRUE)

During training you should see output like the following (the exact values depend on the random initial weights):

# weights: 6

initial value 25372.359476

final value 7662.886667

converged
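The weight count in this output can be checked by hand: every hidden and output unit has one weight per incoming link plus one bias weight. The helper n_weights below is a hypothetical illustration, not part of nnet, and the cross-check uses simulated stand-in data.

```r
library(nnet)

# Number of weights in a single-hidden-layer net with p inputs, h hidden
# units and k outputs; each unit also has one bias weight.
# skip = TRUE adds p * k direct input-to-output links.
n_weights = function (p, h, k, skip = FALSE) {
  (p + 1) * h + (h + 1) * k + (if (skip) p * k else 0)
}

print(n_weights(3, 1, 1))               # network of the fitnn3 form: 6
print(n_weights(3, 0, 1, skip = TRUE))  # perceptron of the fitnn1 form: 4

# Cross-check against nnet on simulated stand-in data (hypothetical names).
set.seed(1)
sim = data.frame(x1 = rnorm(50), x2 = rnorm(50), x3 = rnorm(50))
sim$y = sim$x1 + sim$x2 - sim$x3 + rnorm(50)
fit_demo = nnet(y ~ x1 + x2 + x3, sim, size = 1, linout = TRUE, trace = FALSE)
print(length(fit_demo$wts))
```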

You can visualize the structure of the network created by nnet using the function plot.nnet(). This function is defined in a script hosted as a GitHub gist, which can be loaded with source_url() from the devtools library:

library(devtools)

The following incantation loads the definition of plot.nnet():

source_url('https://gist.githubusercontent.com/fawda123/7471137/raw/466c1474d0a505ff044412703516c34f1a4684a5/nnet_plot_update.r')

We can now plot the architecture of fitnn3 with the following command:

plot.nnet(fitnn3)

(NB plot.nnet() doesn’t seem to work with perceptrons)

Train and Test subsets

In this exercise we are going to use part of our data for training and part for testing.

First of all, download the Titanic.csv dataset and load it into a variable called data. This dataset provides information on the fate of passengers on the maiden voyage of the ocean liner ‘Titanic’. Explore the dataset.

Then split the dataset into a training set consisting of 2/3 of the data and a test set consisting of the other 1/3, using the method seen in Lab 4.

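# number of rows (passengers) in the dataset
n <- nrow(data)
# randomly pick 2/3 of the row numbers for training
indices <- sort(sample(1:n, round(2/3 * n)))
train <- data[indices,]
# the remaining rows form the test set
test <- data[-indices,]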
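Because sample() draws at random, the split changes on every run. A sketch of a repeatable split follows, using a small hypothetical data frame (demo, not the Titanic data) and a call to set.seed(); the seed value 42 is arbitrary.

```r
# Stand-in data frame with 9 rows (hypothetical; not the Titanic data).
demo = data.frame(id = 1:9, value = letters[1:9])

set.seed(42)  # fix the random number generator so the split is repeatable
n <- nrow(demo)
indices <- sort(sample(1:n, round(2/3 * n)))
train_demo <- demo[indices,]
test_demo <- demo[-indices,]

print(nrow(train_demo))  # 6 rows for training
print(nrow(test_demo))   # 3 rows for testing
# No row appears in both subsets.
print(length(intersect(train_demo$id, test_demo$id)))
```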

The nnet package expects the target variable of the classification (in this case, Survived) to be a two-column matrix: one column for No, the other for Yes, with a 1 in the No column for the items to be classified as No, and a 1 in the Yes column for the items to be classified as Yes:

      No  Yes
Yes    0    1
Yes    0    1
No     1    0
Yes    0    1

Etc. Luckily, the class.ind utility function in nnet does this for us:

train$Surv = class.ind(train$Survived)

test$Surv = class.ind(test$Survived)
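To see what class.ind produces in isolation, here is a small sketch on a hypothetical four-element vector of outcomes (not taken from the Titanic data). The columns follow the order of the factor levels, so No comes before Yes.

```r
library(nnet)

# A small hypothetical vector of outcomes.
outcome = factor(c("Yes", "Yes", "No", "Yes"))

# One indicator column per level, in the order of levels(outcome).
ind = class.ind(outcome)
print(ind)
print(rowSums(ind))  # every row contains exactly one 1
```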

(Use head to see what the result is on train, for instance.) We can now use train to fit a neural net. The softmax parameter requests a softmax output layer, which for two classes is equivalent to a logistic activation function.

fitnn = nnet(Surv~Sex+Age+Class, train, size=1,

softmax=TRUE)

fitnn

summary(fitnn)

And then test its performance on test:

table(data.frame(predicted=predict(fitnn, test)[,2] > 0.5,

actual=test$Surv[,2]>0.5))
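The table above is a confusion matrix: its diagonal counts the correct predictions. The sketch below runs the whole pipeline end to end and reduces the result to a single accuracy figure. Since Titanic.csv is not reproduced here, it assumes R's built-in Titanic contingency table, expanded to one row per passenger, as a stand-in; the course file may be laid out differently, and all names ending in _demo are illustrative.

```r
library(nnet)

# Stand-in for Titanic.csv: expand R's built-in Titanic contingency table
# into one row per passenger (an assumption; the course file may differ).
counts = as.data.frame(Titanic)
data_demo = counts[rep(seq_len(nrow(counts)), counts$Freq),
                   c("Class", "Sex", "Age", "Survived")]

set.seed(1)
n <- nrow(data_demo)
indices <- sort(sample(1:n, round(2/3 * n)))
train_demo <- data_demo[indices,]
test_demo <- data_demo[-indices,]

train_demo$Surv = class.ind(train_demo$Survived)
test_demo$Surv = class.ind(test_demo$Survived)

fit_demo = nnet(Surv ~ Sex + Age + Class, train_demo, size = 1,
                softmax = TRUE, trace = FALSE)

# Predicted class is Yes when the predicted probability exceeds 0.5.
predicted = predict(fit_demo, test_demo)[, 2] > 0.5
actual = test_demo$Surv[, 2] > 0.5

confusion = table(predicted = predicted, actual = actual)
print(confusion)

# Accuracy: the share of test passengers whose class is predicted correctly.
accuracy = mean(predicted == actual)
print(accuracy)
```

Computing accuracy with mean(predicted == actual) rather than from the table's diagonal keeps the calculation valid even when the model predicts the same class for every test row and the table has a single row.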