Pelham Ch 6 answers


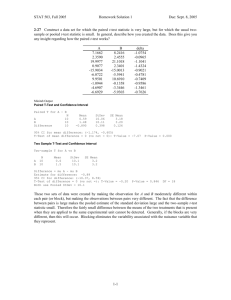

Ideal Answers to Chapter 6 Questions

QUESTION 6.1a. A one-sample t-test showed that it does take more than the comparison standard of $20 (twice the starting amount of $10) to make people feel twice as happy as if they had received $10, t (19) = 4.06, p = .001. The sample mean was $43.75, and this value exceeded the normative standard of $20 that would hold if people followed a linear rather than a curvilinear decision rule. The comparison standard of $20 is highly appropriate in this case because the null hypothesis is based on what would happen if people did follow a perfectly linear decision rule. It is, in a sense, the population value that would exist for a hypothetical group of linear decision makers. The p value of .001 shows that this finding would be significant even if we set alpha at the more stringent than usual level of .01. There is only about 1 chance in a thousand (p = .001) of getting a result this extreme or more extreme by chance if people are truly linear decision makers.

QUESTION 6.1b. My analysis confirmed that SPSS gave me the correct value for this t-test (and thus must have correctly calculated the standard error of the mean). Based on the formula for the one-sample t-test provided earlier, the t-test value should be:

t = (43.75 - 20.00) / (26.1873 / √20) = 23.75 / (26.1873 / 4.472) = 23.75 / 5.8558 = 4.056

QUESTION 6.2a. If you were to recode these data to reflect whether people exceeded the $20 standard, a chi-square test to see if more than half of the participants exceeded this value would fail to yield a significant result, χ² (1) = 3.2, p = .074, N = 20, apparently because of the large drop in power associated with using such a crude measure.

QUESTION 6.2b. This marginally significant result wouldn’t change much, t (19) = 1.90, p = .072, if you conducted a t-test on the same recoded (dichotomized) data. The problem with recoding the data is that the recoded scores are now less sensitive to differences among individual scores.
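
The arithmetic in Questions 6.1b through 6.2b can be checked with a few lines of Python. This is only a sketch from summary statistics, not the SPSS analysis itself. The mean, standard deviation, and sample size for 6.1b come from the text. The split of 14 participants above $20 and 6 not above it is an assumption: the source does not list the counts, but they are the ones that produce the reported chi-square of 3.2 with N = 20. The test value of .5 for the dichotomized t-test is likewise assumed.

```python
import math


def one_sample_t(mean, mu0, sd, n):
    """Return (t, standard error) for a one-sample t-test from summary statistics."""
    se = sd / math.sqrt(n)
    return (mean - mu0) / se, se


# Question 6.1b: values reported in the text.
t_61, se_61 = one_sample_t(mean=43.75, mu0=20.00, sd=26.1873, n=20)

# Questions 6.2a and 6.2b: ASSUMED counts (not given in the source),
# inferred from the reported chi-square of 3.2 with N = 20.
above, not_above = 14, 6
n = above + not_above
expected = n / 2  # null hypothesis: half of the participants exceed $20

chi2 = (above - expected) ** 2 / expected + (not_above - expected) ** 2 / expected
# With 1 degree of freedom, the upper-tail p value is erfc(sqrt(chi2 / 2)).
p_chi2 = math.erfc(math.sqrt(chi2 / 2))

# One-sample t-test on the same 0/1 scores against an ASSUMED test value of .5.
prop = above / n
sd_01 = math.sqrt(prop * (1 - prop) * n / (n - 1))  # sample SD of 0/1 scores
t_62, _ = one_sample_t(mean=prop, mu0=0.5, sd=sd_01, n=n)

print(f"6.1b: SE = {se_61:.4f}, t = {t_61:.3f}")
print(f"6.2a: chi-square = {chi2:.2f}, p = {p_chi2:.3f}")
print(f"6.2b: t = {t_62:.2f}")
```

Under these assumptions the script should agree with the reported values up to rounding. The standard error may differ in the last digit from the hand calculation because the hand calculation rounds √20 to 4.472.
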
Saying it would take $100 to be twice as happy as getting $10 is much stronger support for the hypothesis than saying it would take $21, but the dichotomous data do not reflect this important fact. Dichotomizing is a bad idea.

QUESTION 6.3.

Table 1. Mean Increase in IQ Scores as a Function of Manipulated Teacher Expectancies

| Condition | Mean increase (SD) |
|---|---|
| Control | 12.00 (16.39) |
| Bloomer (Positive Expectancy) | 27.36 (12.57) |

Note. Standard deviations appear in parentheses.

Children who were identified as bloomers (mean increase 27.4 points) in this field experiment showed significantly greater increases in IQ over the course of the school year than did those not labeled bloomers (mean increase 12.0 points), t (28) = -2.68, p = .012. Presumably the increase shown by the non-bloomers simply reflects normal maturation and learning. The extra 15-point increase shown by the bloomers appears to reflect the effects of being labeled. Of course, it’s unlikely that teachers were aware of any special treatment they were giving the bloomers, but they must have been treating the bloomers better than they treated the typical child, perhaps by paying more attention to the bloomers or giving them more encouragement.

The fact that the bloomers and non-bloomers were chosen at random (unbeknownst to the teachers) controls for all kinds of individual differences between the kids about which we might otherwise worry. For example, it’s highly unlikely that the bloomers were smarter to begin with or came from wealthier homes than the non-bloomers. These results suggest that, even when such expectancies have no basis in fact, teacher expectancies may have a dramatic impact on children’s intellectual development. Teachers should be taught about the subtle forms of bias that must be responsible for these effects and, if at all possible, should be trained to avoid such biases.

QUESTION 6.4. Recoding these data as dichotomous treats an IQ increase of 1 point exactly like an increase of 40 points.
Such a coding is not at all sensitive to the size of these IQ changes. Thus, it’s not surprising that neither a chi-square test of association (between treatment group and the dichotomous increase score), χ² (1) = 3.47, p = .062, nor an independent-samples t-test, t (28) = 1.92, p = .066, yielded a significant result. See output file for details. These data should not be recoded, especially when the large majority of children would be expected to show some small increases in IQ due to simple maturation and learning. As was the case for the happiness study, there is no good reason to convert interval-level data to less sensitive nominal scales.

QUESTION 6.5a. We created a simple independent variable based on whether these men did or did not live in a state resembling their first names (e.g., Cal living in California and Tex living in Texas would both be coded affirmatively). We created a dependent variable by subtracting how much each man liked the letter that did not begin his name from how much he liked the letter that did begin his name. So for men named Cal, for example, this score was liking for the letter C minus liking for the letter T (see the attached syntax commands). An independent-samples t-test yielded a nearly significant effect in the expected direction, t (38) = 1.94, p = .060. The men living in states that resembled their names had a mean name-letter preference of +1.2, and those who lived in states that did not resemble their names had a mean name-letter preference of only +0.1. We must be very cautious about this result, however, because this difference was not quite significant.

Note to Instructors: Depending on how students coded these data, the t value of 1.94 could be either positive or negative.

QUESTIONS 6.5b & 6.5c. Of course, if we had conducted a one-tailed (directional) test, these findings would be significant and would support the theory that name-letter preferences may lie at the root of men’s tendency to gravitate toward states that resemble their names.
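
The claim that a one-tailed test would reach significance follows from halving the two-tailed p value, which is valid for a symmetric test statistic such as t when the effect falls in the predicted direction. A minimal sketch, using the reported p = .060 and the conventional alpha of .05 (the function name is purely illustrative):

```python
def one_tailed_p(two_tailed_p, in_predicted_direction=True):
    """Convert a two-tailed p value from a symmetric test (such as t) to a one-tailed p value."""
    half = two_tailed_p / 2
    # If the effect runs opposite to the prediction, the one-tailed p is the complement.
    return half if in_predicted_direction else 1 - half


p_one = one_tailed_p(0.060)  # two-tailed p reported for t (38) = 1.94
print(f"one-tailed p = {p_one:.3f}, significant at .05: {p_one < 0.05}")
```

The same function shows why a directional test is a gamble: had the difference come out in the opposite direction, the one-tailed p value would have been .97 rather than .03.
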
Even if we were generous enough to conduct a one-tailed test, however, we would still need to be concerned about whether these men who disproportionately resided in states resembling their names actually moved to these states or whether they were disproportionately born there. If the latter account proved true, then the exaggerated liking that men had for their initials when they lived in a state resembling their names might simply reflect the positive social feedback they would be likely to get from the many loyal residents of their home states. Anyone who has ever visited Texas and seen the “Let’s brag about Texas” billboards might appreciate this concern. This alternate account is still interesting, but it does not support the idea of implicit egotism.

QUESTION 6.6. This archival study of heat and violence certainly seems to suggest that heat facilitates violence. In 2006, the average murder rate in the 10 hottest U.S. cities was 10.96 murders per 100,000 residents. In contrast, the average murder rate for the 10 coldest cities was 0.51 murders per 100,000 residents. If we treat cities as the unit of analysis (a very conservative analysis), an independent-samples t-test shows that this difference was significant, t (18) = 3.57, p = .002.

Note to Instructors: Depending on how students coded these data, the t value could be either positive or negative 3.57.

However, we cannot safely conclude from these data alone that heat facilitates violence. As it turns out, the 10 hot cities appear to be much larger cities, on average, than the 10 cold cities. It is well documented that murder rates increase dramatically with population density. That is, big cities have much higher murder rates than small cities. Without addressing this serious confound, the researcher cannot make any strong claims about the connection between heat and violence. Another less obvious confound is that almost all of the hot cities are located in Florida and Texas.
Loosely speaking, these are both Southern states, and it is possible that murder rates are higher in these mostly Southern cities because of some aspect of Southern culture (e.g., poverty, the culture of honor) rather than because of temperature per se.

QUESTION 6.7a. The average accuracy score for this group of participants was .41, meaning that, on average, they labeled 41% of the cola samples correctly. This value is only slightly higher than the chance value of .33, which is the value that reflects random guessing among three colas. A one-sample t-test showed that this observed value of .41 was not significantly greater than the chance value of .33, t (29) = 1.12, p = .274. People seem to have little or no ability to discriminate between the three colas in a blind taste test. Of course, to say this more convincingly we should use a much larger sample. If the true population accuracy value is .41, we would simply need a very large sample to document this small advantage over the chance value.

Note: If students use the slightly more precise value of .333 as the chance standard, the result should be t (29) = 1.075, p = .291.

QUESTION 6.7b. In contrast to this poor performance, the average person reported that he or she would be able to label the three colas at well above chance levels. The average confidence rating was .56 (56% predicted accuracy). A one-sample t-test revealed that this value of .56 was significantly higher than the chance score of .33, t (29) = 5.68, p < .001.

Note: t (29) = 5.60, p < .001, for those who use .333.

A second one-sample t-test showed that this confidence value of .56 was also significantly higher than the actual accuracy score of .41, t (29) = 3.73, p = .001. Apparently, people are more confident than correct. This finding is consistent with a large literature on overconfidence showing that people often overestimate the accuracy of their judgments, especially when judgment tasks prove to be difficult (as this one certainly did).
Note: For the comparison of confidence with actual accuracy, t (29) = 3.70, p < .001, for those who use .411 as the accuracy value.

QUESTION 6.7c. Women were slightly, but not significantly, more accurate than men. Respective accuracy rates for women and men were .44 and .38, t (28) = -0.45, p = .655. Despite the fact that women were slightly more accurate than men, men reported being significantly more confident than did women; respective confidence levels for women and men were .48 and .65, t (28) = 2.15, p = .041. Thus, despite performing slightly more poorly than women, men seem to be more confident than women. Putting these two findings together, it seems appropriate to say that men were more overconfident than women.
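
The final comparison in Question 6.7c can be made concrete by computing overconfidence as mean confidence minus mean accuracy for each group. The sketch below uses only the four group means reported above. It is descriptive arithmetic, not a significance test of the gender difference in overconfidence, which would require the raw data.

```python
# Group means reported in Question 6.7c.
groups = {
    "women": {"confidence": 0.48, "accuracy": 0.44},
    "men": {"confidence": 0.65, "accuracy": 0.38},
}

# Overconfidence = predicted accuracy minus actual accuracy (positive means overconfident).
overconfidence = {
    name: round(means["confidence"] - means["accuracy"], 2)
    for name, means in groups.items()
}

for name, gap in overconfidence.items():
    print(f"{name}: overconfidence = {gap:+.2f}")
```

On these means, the gap between confidence and accuracy is several times larger for men than for women, which is the pattern the answer describes.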