home1-14

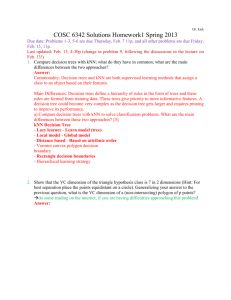

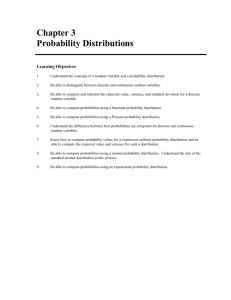

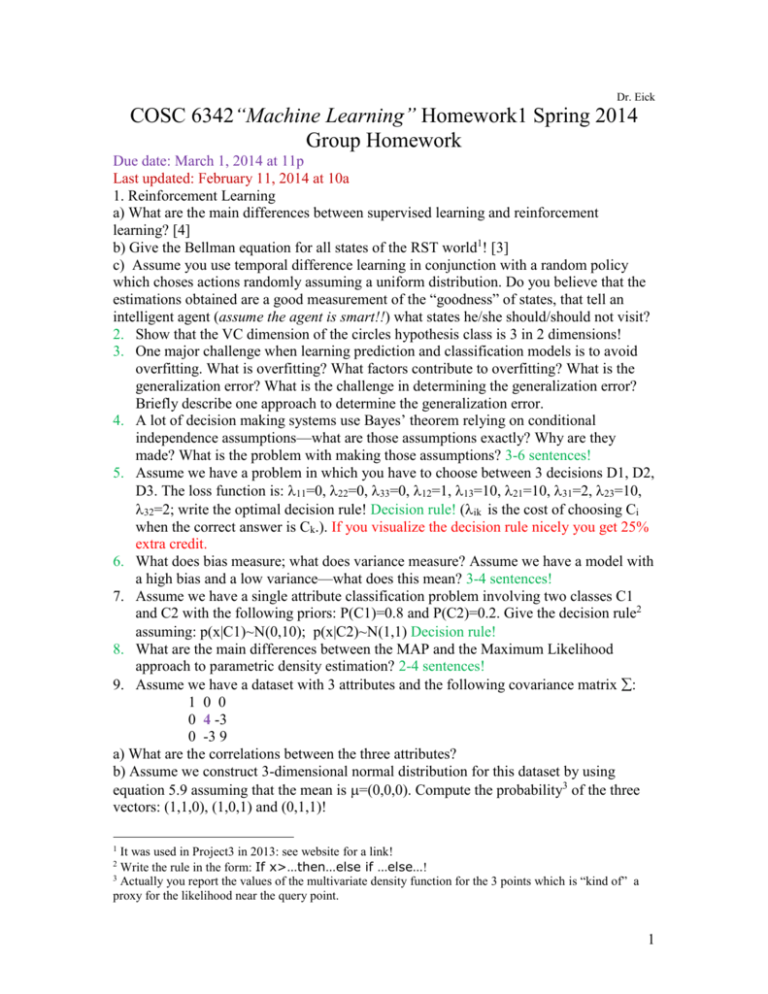

Dr. Eick

COSC 6342 “Machine Learning”

Homework1, Spring 2014

Group Homework

Due date: March 1, 2014 at 11p

Last updated: February 11, 2014 at 10a

1. Reinforcement Learning

a) What are the main differences between supervised learning and reinforcement learning? [4]

b) Give the Bellman equation for all states of the RST world¹! [3]

c) Assume you use temporal difference learning in conjunction with a random policy which chooses actions randomly, assuming a uniform distribution. Do you believe that the estimates obtained are a good measure of the “goodness” of states, one that tells an intelligent agent (assume the agent is smart!!) which states he/she should or should not visit?

2. Show that the VC dimension of the circles hypothesis class is 3 in 2 dimensions!

3. One major challenge when learning prediction and classification models is to avoid overfitting. What is overfitting? What factors contribute to overfitting? What is the generalization error? What is the challenge in determining the generalization error? Briefly describe one approach to determine the generalization error.

4. A lot of decision making systems use Bayes’ theorem relying on conditional independence assumptions. What are those assumptions exactly? Why are they made? What is the problem with making those assumptions? 3-6 sentences!

5. Assume we have a problem in which you have to choose between 3 decisions D1, D2, D3. The loss function is: $\lambda_{11}=0$, $\lambda_{22}=0$, $\lambda_{33}=0$, $\lambda_{12}=1$, $\lambda_{13}=10$, $\lambda_{21}=10$, $\lambda_{31}=2$, $\lambda_{23}=10$, $\lambda_{32}=2$; write the optimal decision rule! ($\lambda_{ik}$ is the cost of choosing Ci when the correct answer is Ck.) If you visualize the decision rule nicely you get 25% extra credit.

6. What does bias measure; what does variance measure? Assume we have a model with a high bias and a low variance. What does this mean? 3-4 sentences!

7. Assume we have a single attribute classification problem involving two classes C1 and C2 with the following priors: P(C1)=0.8 and P(C2)=0.2.
Give the decision rule² assuming: $p(x \mid C_1) \sim N(0, 10)$; $p(x \mid C_2) \sim N(1, 1)$

8. What are the main differences between the MAP and the Maximum Likelihood approach to parametric density estimation? 2-4 sentences!

9. Assume we have a dataset with 3 attributes and the following covariance matrix $\Sigma$:

$$\Sigma = \begin{pmatrix} 1 & 0 & 0 \\ 0 & 4 & -3 \\ 0 & -3 & 9 \end{pmatrix}$$

a) What are the correlations between the three attributes?

b) Assume we construct a 3-dimensional normal distribution for this dataset by using equation 5.9, assuming that the mean is $\mu=(0,0,0)$. Compute the probability³ of the three vectors (1,1,0), (1,0,1) and (0,1,1)!

c) Compute the Mahalanobis distance between the vectors (1,1,0), (1,0,1) and (0,1,1). Also compute the Mahalanobis distance between (1,1,-1) and the three vectors (1,0,0), (0,1,0), (0,0,-1). How do these results differ from using Euclidean distance? Try to explain why particular pairs of vectors are closer to or further away from each other when using Mahalanobis distance. What advantages do you see in using Mahalanobis distance over Euclidean distance?

10. PCA: compute the three principal components for the covariance matrix of Problem 9 (use any procedure you like). What percentage of the variance do the first 2 principal components capture? Analyze the coordinate system that is formed by the first 2 principal components and try to explain why the third principal component would be removed, based on the knowledge you gained by answering Problem 9!

¹ It was used in Project3 in 2013: see website for a link!

² Write the rule in the form: If x>…then…else if …else…!

³ Actually you report the values of the multivariate density function for the 3 points, which is “kind of” a proxy for the likelihood near the query point.
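The quantities asked for in Problem 9 reduce to a few small matrix computations. The following is a minimal pure-Python sketch of them, not a reference solution. It assumes that "equation 5.9" denotes the standard multivariate normal density; the names `SIGMA`, `MU`, `correlation`, `solve`, `mahalanobis`, `det3`, and `density` are hypothetical helpers introduced here for illustration only.

```python
import math

# Covariance matrix from Problem 9 and the zero mean assumed in 9b.
SIGMA = [[1.0, 0.0, 0.0],
         [0.0, 4.0, -3.0],
         [0.0, -3.0, 9.0]]
MU = [0.0, 0.0, 0.0]


def correlation(cov, i, j):
    """Correlation of attributes i and j: cov_ij / (sigma_i * sigma_j)."""
    return cov[i][j] / math.sqrt(cov[i][i] * cov[j][j])


def solve(a, b):
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[k]] for k, row in enumerate(a)]  # augmented copy
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [m[k][n] / m[k][k] for k in range(n)]


def mahalanobis(u, v, cov):
    """Mahalanobis distance sqrt((u - v)^T cov^{-1} (u - v))."""
    d = [a - b for a, b in zip(u, v)]
    z = solve(cov, d)  # z = cov^{-1} d, without forming the inverse
    return math.sqrt(sum(a * b for a, b in zip(d, z)))


def det3(a):
    """Determinant of a 3x3 matrix by cofactor expansion along row 0."""
    return (a[0][0] * (a[1][1] * a[2][2] - a[1][2] * a[2][1])
            - a[0][1] * (a[1][0] * a[2][2] - a[1][2] * a[2][0])
            + a[0][2] * (a[1][0] * a[2][1] - a[1][1] * a[2][0]))


def density(x, mu, cov):
    """Multivariate normal density (assumed to be the form of equation 5.9)."""
    d = len(x)
    m2 = mahalanobis(x, mu, cov) ** 2
    norm = (2 * math.pi) ** (d / 2) * math.sqrt(det3(cov))
    return math.exp(-0.5 * m2) / norm


if __name__ == "__main__":
    points = [(1, 1, 0), (1, 0, 1), (0, 1, 1)]
    print("corr(2,3):", correlation(SIGMA, 1, 2))
    for p in points:
        print(p, "density:", density(p, MU, SIGMA))
    for i in range(len(points)):
        for j in range(i + 1, len(points)):
            p, q = points[i], points[j]
            print(p, q, "Mahalanobis:", mahalanobis(p, q, SIGMA),
                  "Euclidean:", math.dist(p, q))
```

Solving the linear system instead of inverting the covariance matrix explicitly is a design choice made here for brevity. Printing the Euclidean distance next to the Mahalanobis distance makes the comparison asked for in 9c easier to read off.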